How to See Critical Incidents in Alert Overload: A Guide for SOCs and MSSPs

Alert overload is one of the hardest ongoing challenges for a Tier 1 SOC analyst. Every day brings hundreds, sometimes thousands, of alerts waiting to be triaged, categorized, and escalated. Many of them are false positives, duplicates, or low-value notifications that muddy the signal.

When the queue never stops growing, even experienced analysts start to lose clarity, miss patterns, and risk overlooking critical threats.

Beyond Burnout: How Alert Fatigue Destroys Careers

Alert overload isn’t just unproductive — it’s toxic. Constant false positives create chronic stress, anxiety, and decision fatigue. Analysts doubt themselves, experience imposter syndrome, and burn out fast. Many leave the industry within years, citing mental health tolls like sleep loss and eroded confidence from missing “the big one” amid the chaos.

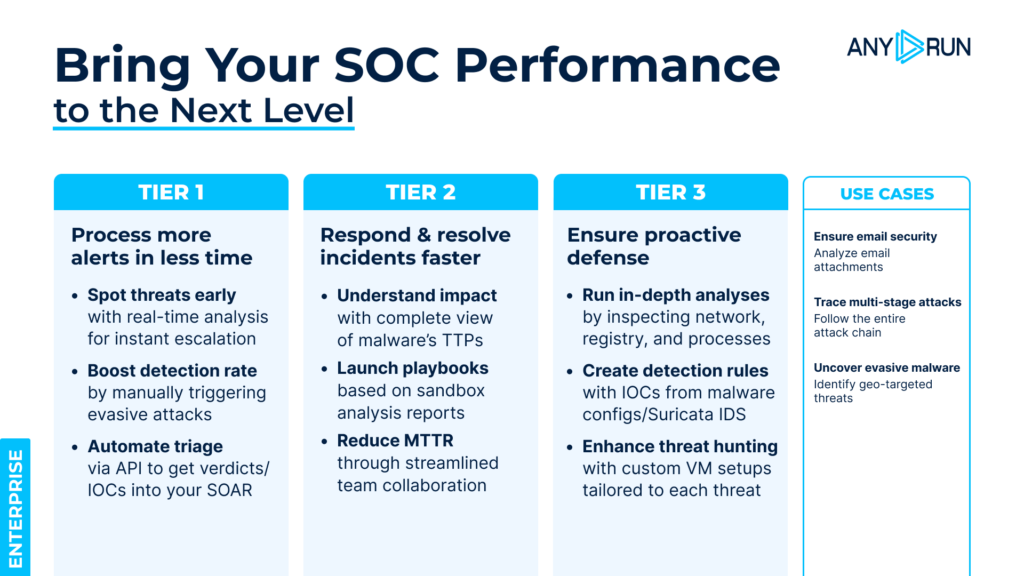

By contrast, Tier 1 analysts who triage efficiently using context gain sharp investigation skills, earn trust for their escalations, and move up to Tier 2/3 roles faster. They avoid burnout, stay passionate about cybersecurity, and position themselves as indispensable experts in a high-demand field. Solutions like ANY.RUN’s Threat Intelligence Lookup can be a key not only to an analyst’s career growth but also to the next level of SOC efficiency.

Cutting Through the Chaos: How Threat Intelligence Keeps Analysts Effective

Alert overload at Tier 1 creates bottlenecks: unnecessary escalations flood senior analysts, response times balloon, and real breaches slip through. This drains budgets on prolonged incidents, erodes team morale, and weakens organizational defenses, turning a proactive SOC into a reactive firefighting unit.

Threat intelligence gives analysts the missing piece they often need during triage: context. Instead of manually searching for data across multiple sources, TI instantly tells you what the alert is truly about.

Was this domain seen in phishing attacks? Is this hash connected to a malware family? Is the mutex associated with known malicious samples?

With enriched data, Tier 1 analysts spend less time guessing and more time making confident decisions. Context transforms alerts from ambiguous into actionable and significantly reduces both cognitive load and triage time.
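As a rough illustration of how context turns an ambiguous alert into an actionable one, here is a minimal Python sketch of a first-pass triage rule. The `Enrichment` fields, the action names, and the decision order are hypothetical; they do not reflect any particular product's response format, and a real SOC would tune them to its own playbooks.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Enrichment:
    """Hypothetical context returned by a TI source for one indicator."""
    indicator: str
    malicious_sightings: int = 0  # times seen in malicious samples (assumed field)
    malware_families: List[str] = field(default_factory=list)


def triage(enrichment: Enrichment) -> str:
    """Map enrichment context to a first-pass triage action."""
    if enrichment.malware_families:
        # A named family is strong context: hand off with evidence attached.
        return "escalate"
    if enrichment.malicious_sightings > 0:
        # Seen in malicious activity, but not attributed to a family yet.
        return "investigate"
    # No context is not proof of safety, so keep a human in the loop.
    return "manual_review"


alert = Enrichment("edurestunningcrackyow.fun", 12, ["LockBit"])
print(triage(alert))
```

Note that the fallback is a manual review rather than an automatic close: missing context does not mean the indicator is benign.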

The key is having threat intelligence that’s immediately accessible during your investigation workflow, comprehensive enough to cover the indicators you encounter, and current enough to reflect the latest threat landscape. When used effectively, threat intelligence doesn’t just help you process alerts faster. It improves your accuracy, reduces the anxiety of uncertainty, and helps you develop the threat intuition that distinguishes experienced analysts.

Context on Demand: Understand an Alert Fast

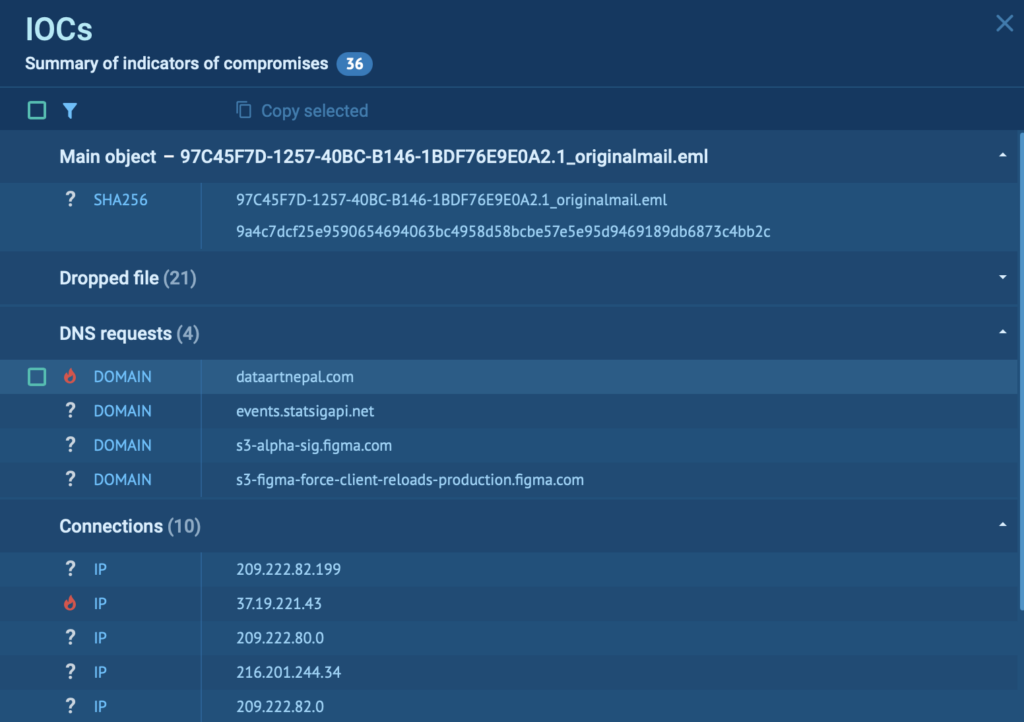

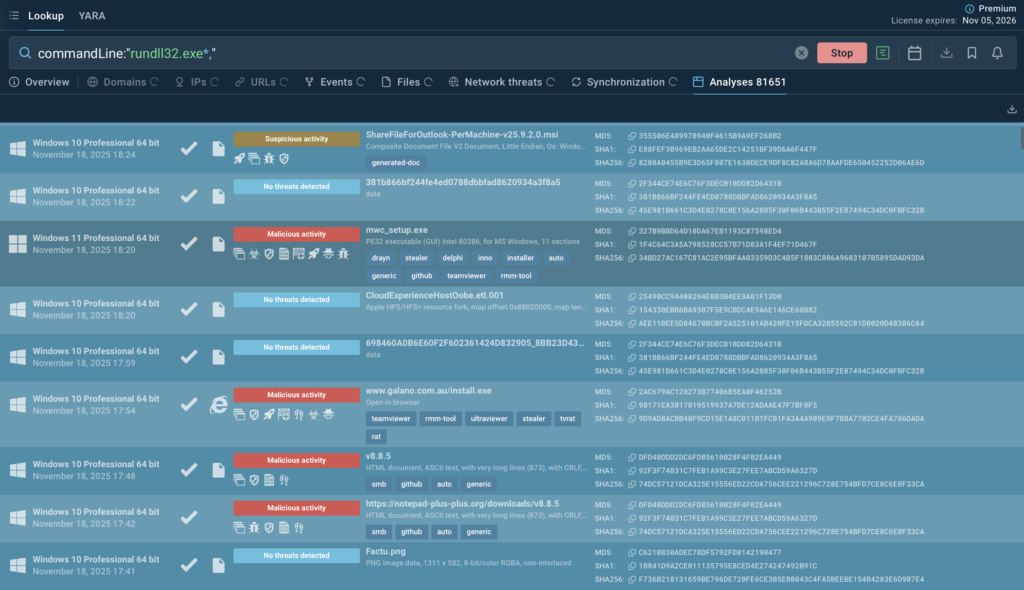

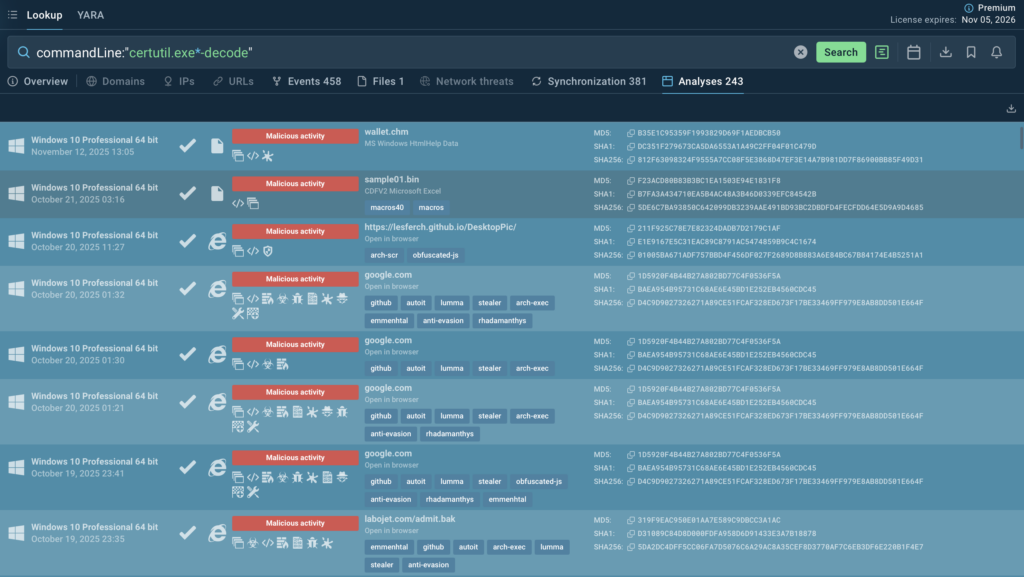

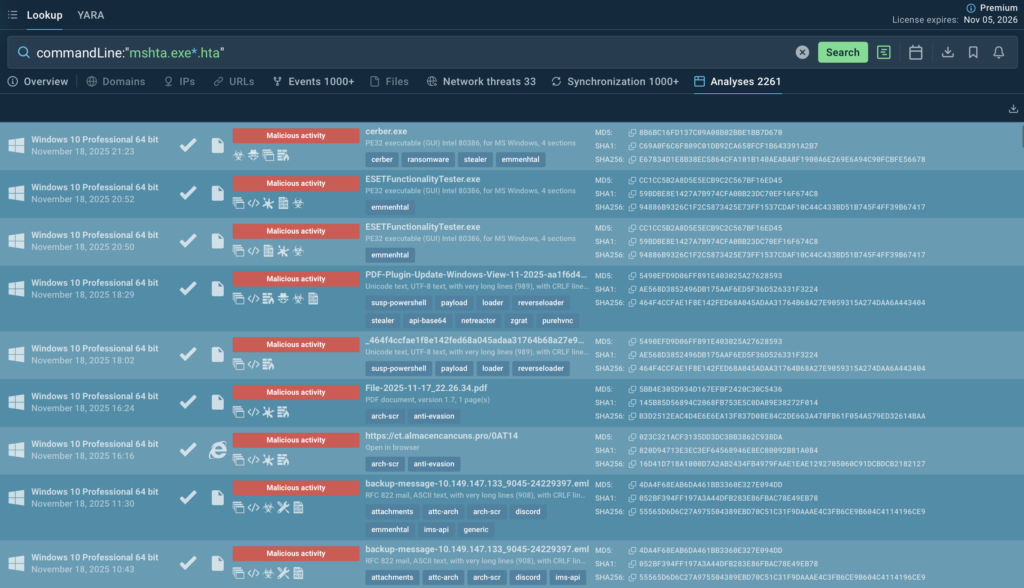

ANY.RUN’s Threat Intelligence Lookup provides immediate, precise context from one of the largest ecosystems of analyst-generated data worldwide. It connects information from 15,000+ SOCs and security teams and presents it in a clean, friendly format.

Instead of digging through scattered reports, teams get immediate answers: malware classification, sample behavior, network connections, relationships, and IOCs — all based on real sandbox runs.

This dramatically shortens triage time and reduces the chance of overlooking critical details hidden inside the noise.

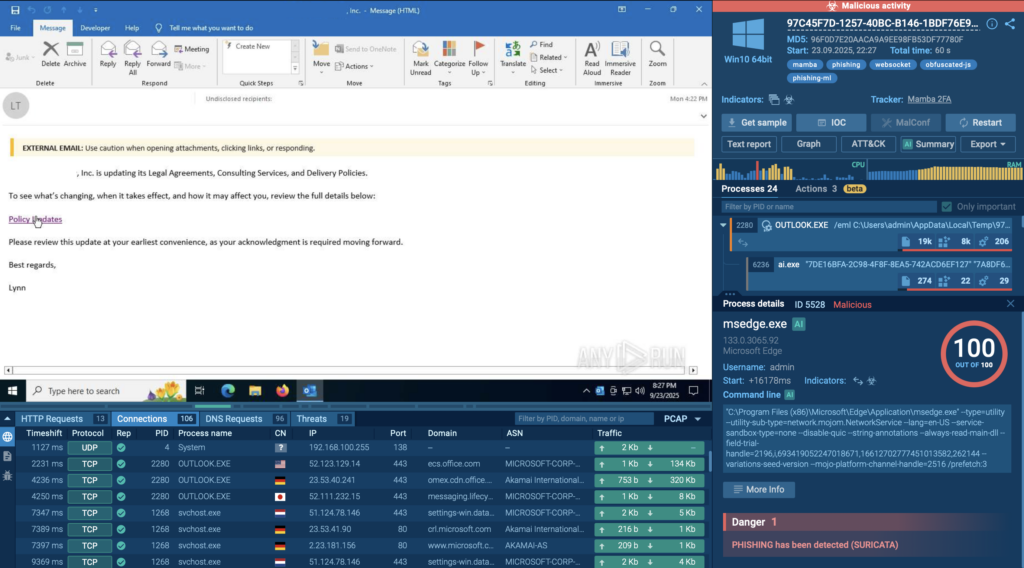

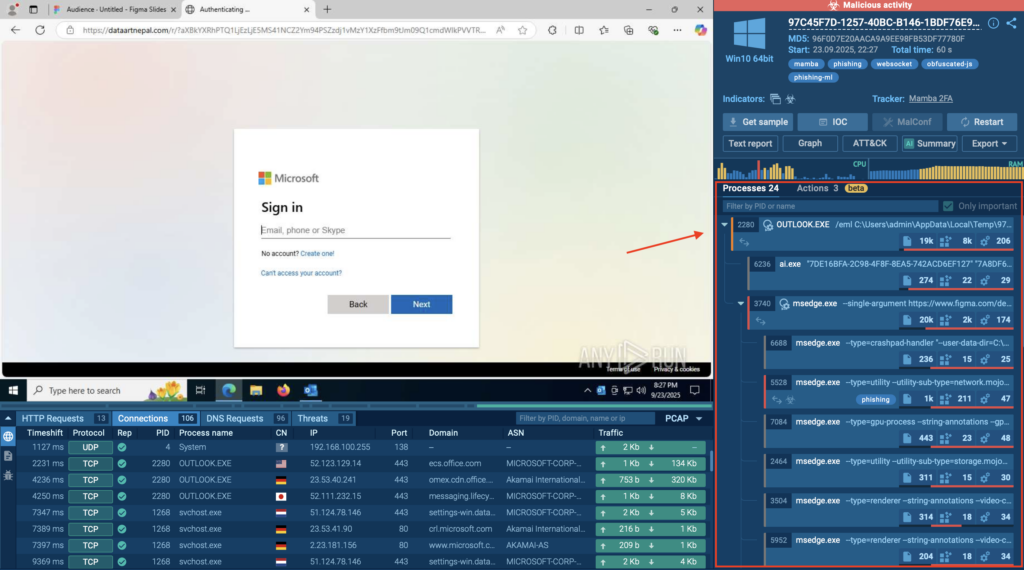

Real-World Wins: See TI Lookup in Action

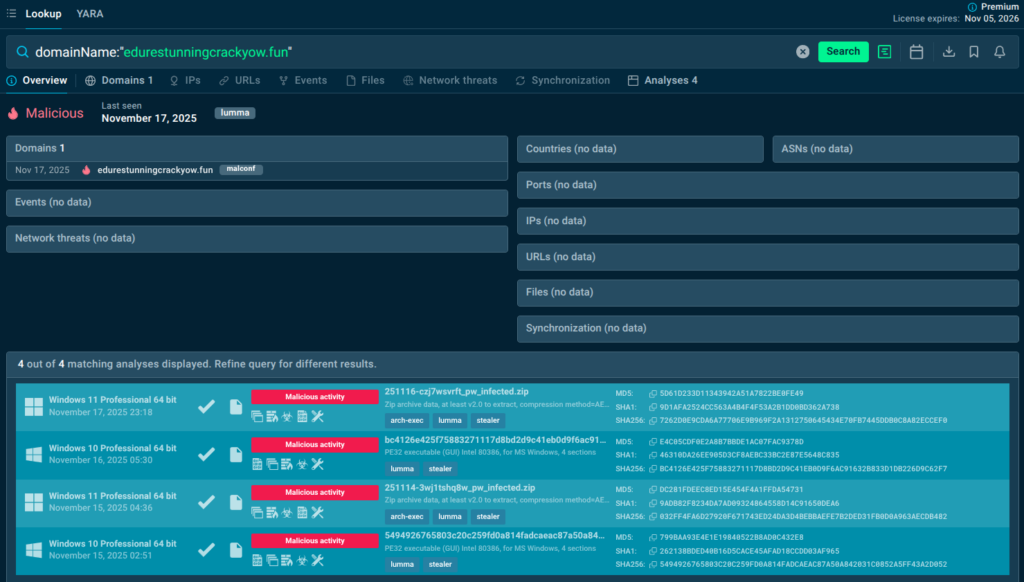

From Vague Domain to Clear Verdict

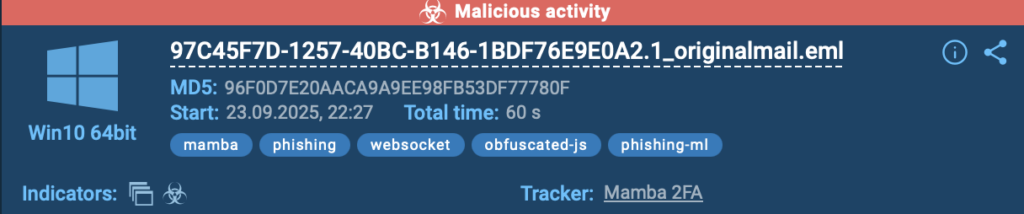

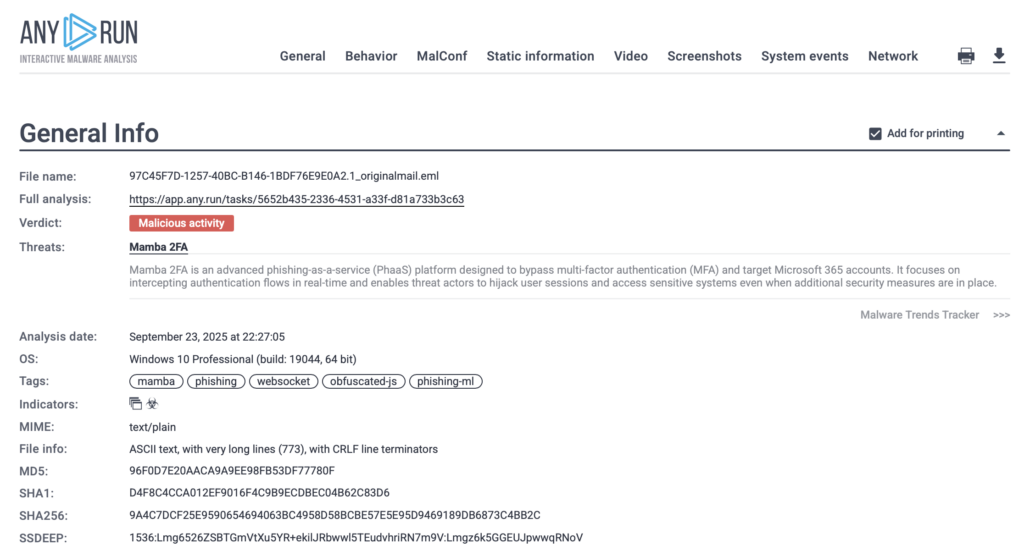

An alert flags a suspicious domain in network traffic. Paste it into ANY.RUN TI Lookup to see at once whether it’s a known C2 server or tied to ransomware such as LockBit, along with resolved IPs, associated hashes, and full attack chains from recent sandbox runs. The result: confident closure or escalation, hours saved, and lateral movement stopped early.

domainName:"edurestunningcrackyow.fun"
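Domains in alerts and tickets are often defanged (for example `hxxp` or `[.]`) or pasted as full URLs. The sketch below shows one way to normalize such input before building a `domainName` query in the syntax shown above. The helper names are hypothetical, the query is assumed to use straight double quotes, and the code only builds the query string; it does not call any ANY.RUN API.

```python
import re


def refang(indicator: str) -> str:
    """Undo common defanging so the value can be searched as-is."""
    value = indicator.strip()
    value = value.replace("[.]", ".").replace("(.)", ".")
    value = re.sub(r"^hxxp", "http", value, flags=re.IGNORECASE)
    return value


def domain_query(indicator: str) -> str:
    """Return a domainName query string for a domain or a pasted URL."""
    value = refang(indicator).lower()
    # Drop the scheme and anything after the host if a URL was pasted.
    value = re.sub(r"^[a-z][a-z0-9+.-]*://", "", value)
    value = value.split("/", 1)[0]
    return f'domainName:"{value}"'


print(domain_query("hxxps://edurestunningcrackyow[.]fun/login"))
```

The helper keeps only the host part, so the same function works whether the analyst copies a bare domain or a full link from a proxy log.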

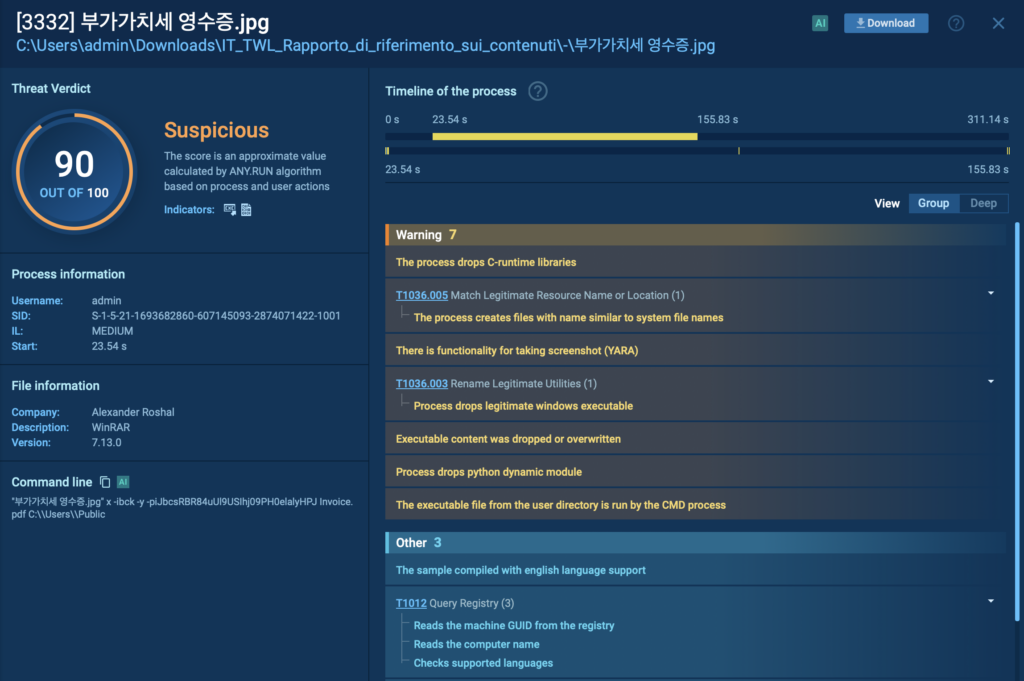

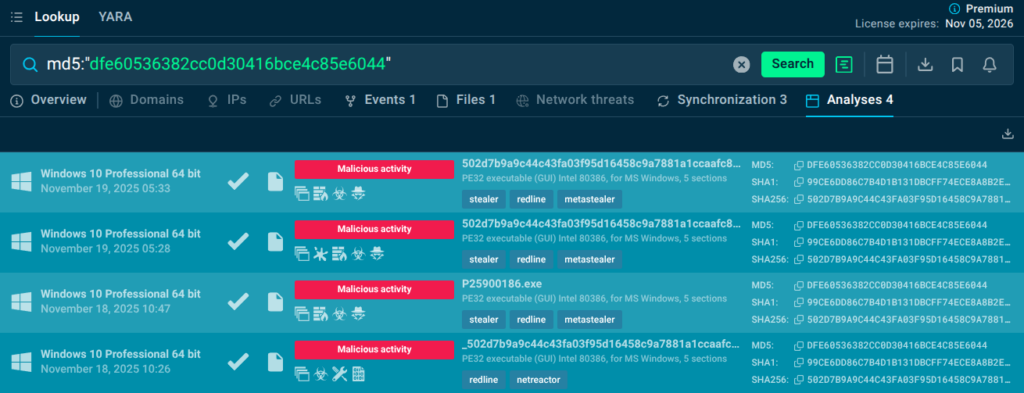

How To Make a Hash Talk

An EDR alert fires on the hash of a dropped executable. Query TI Lookup to uncover the exact malware family (e.g., RedLine stealer), prevalence stats, extraction TTPs, and behavioral details from detonations. The benefit: precise containment (block similar hashes), updated detections, and evidence for stakeholders, all without a deep dive.

md5:"dfe60536382cc0d30416bce4c85e6044"
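Before a hash goes into a query, it helps to confirm that it really is an MD5 digest and not a truncated or mistyped value. A minimal sketch, assuming the `md5` field syntax shown above with straight double quotes (the function name is hypothetical, and nothing here contacts TI Lookup):

```python
import re

# An MD5 digest is exactly 32 hexadecimal characters.
MD5_PATTERN = re.compile(r"[0-9a-fA-F]{32}")


def md5_query(candidate: str) -> str:
    """Validate an MD5 digest and return it as an md5 query string."""
    value = candidate.strip()
    if not MD5_PATTERN.fullmatch(value):
        raise ValueError(f"not an MD5 hex digest: {candidate!r}")
    return f'md5:"{value.lower()}"'


print(md5_query("DFE60536382CC0D30416BCE4C85E6044"))
```

Rejecting malformed input early avoids a misleading "no results" answer that an analyst could mistake for a clean verdict.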

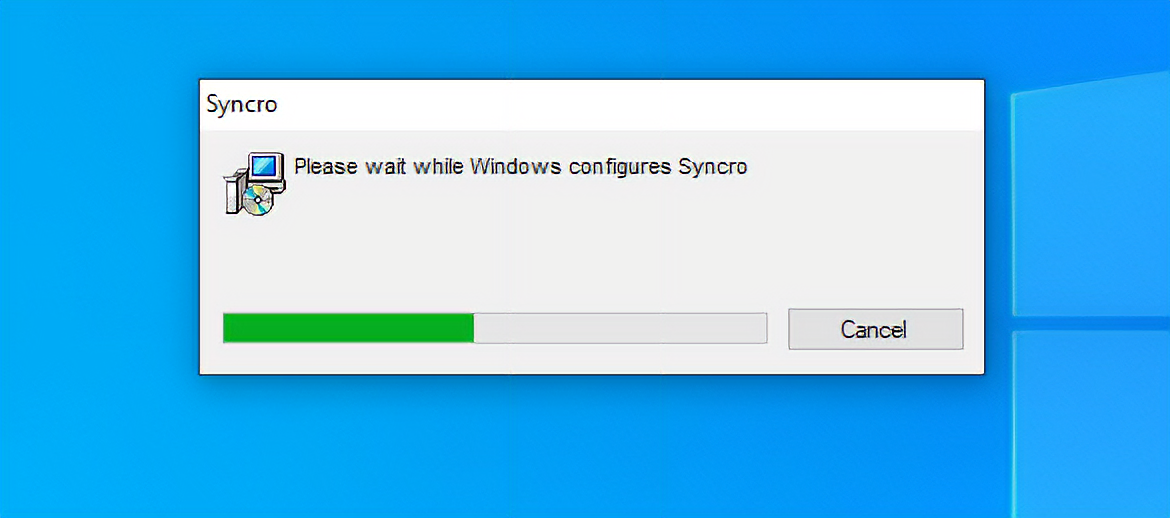

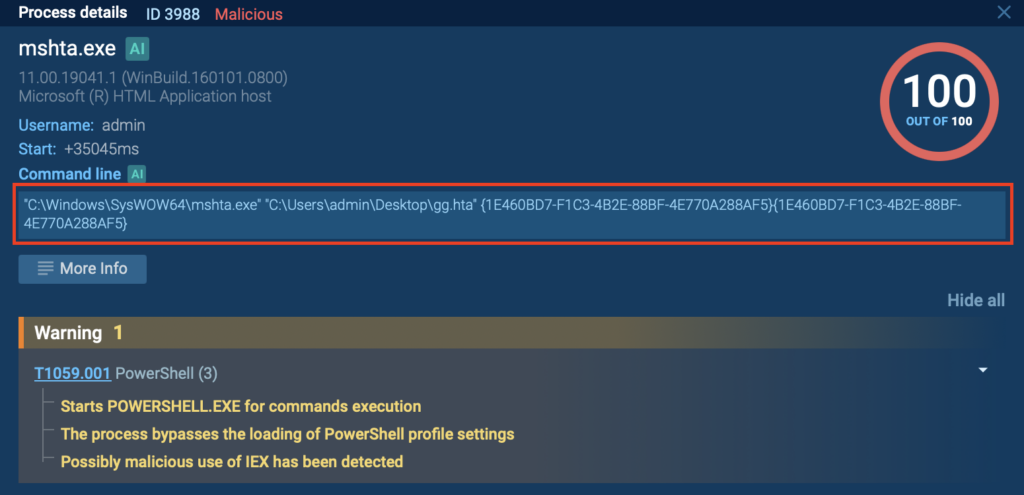

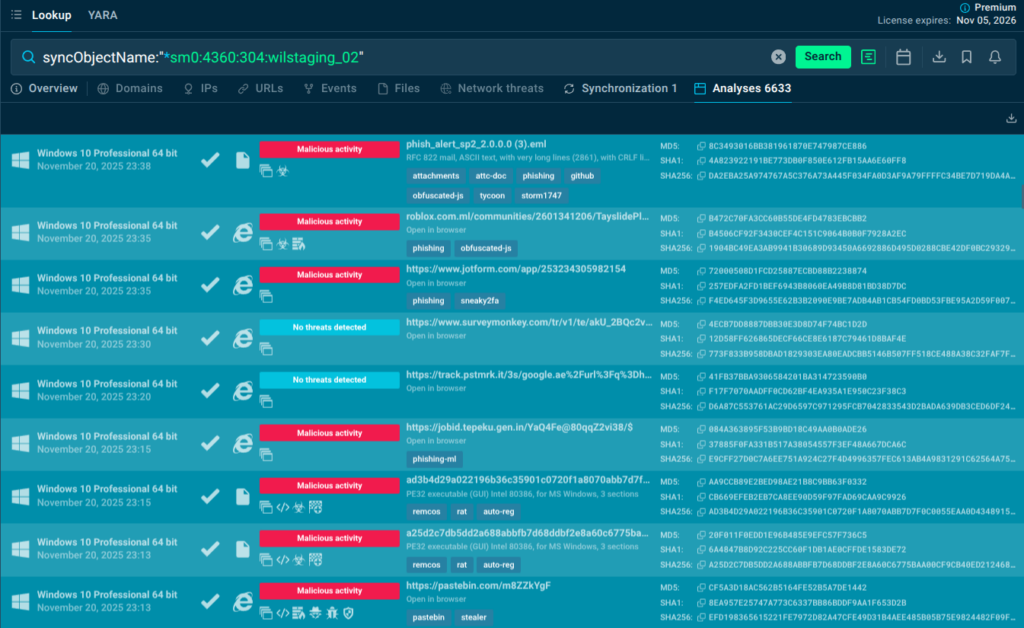

Mutex Magic: Unmask Persistent Threats Fast

A process creates an odd mutex (mutual exclusion object). Search it in TI Lookup’s synchronizations tab: link it to families like DCRat or AsyncRAT, view creating processes, and jump to sandbox sessions showing persistence tactics. Outcome: Rapid hunting across endpoints, stronger YARA rules, and blocking reinfection before damage spreads.

syncObjectName:"*sm0:4360:304:wilstaging_02"
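The same wildcard pattern can be reused for a quick hunt across mutex names already collected from endpoints. The sketch below is illustrative only: the host names and the inventory are made up, and it assumes the `*` in the query behaves like a shell-style wildcard and that matching is case-insensitive.

```python
from fnmatch import fnmatchcase
from typing import Dict, List


def hosts_with_mutex(pattern: str, observed: Dict[str, List[str]]) -> List[str]:
    """Return the hosts where any mutex name matches the wildcard pattern."""
    needle = pattern.lower()
    return sorted(
        host
        for host, names in observed.items()
        if any(fnmatchcase(name.lower(), needle) for name in names)
    )


# Hypothetical inventory: host name -> mutex names seen on that host.
inventory = {
    "ws-01": ["Local\\SM0:4360:304:WilStaging_02"],
    "ws-02": ["Global\\SomethingElse"],
}
print(hosts_with_mutex("*sm0:4360:304:wilstaging_02", inventory))
```

Lowercasing both sides before calling `fnmatchcase` keeps the comparison consistent across operating systems, since plain `fnmatch` normalizes case differently per platform.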

Stop Surviving Alerts. Start Dominating Them

Alert overload is not an inevitable curse of SOC work; it’s a solvable problem that demands both systemic improvements and individual strategy.

The difference between analysts who burn out and those who thrive often comes down to their ability to extract context quickly, make confident decisions, and focus their limited time on high-value investigations. Threat intelligence platforms like ANY.RUN’s Threat Intelligence Lookup are not magic solutions that eliminate alerts, but they are force multipliers that transform your effectiveness by providing the context that turns ambiguous indicators into clear decisions.

By integrating threat intelligence into your daily workflow, you reduce investigation times from minutes to seconds, improve accuracy by relying on aggregated community knowledge, and build the pattern recognition skills that define senior analysts. The critical incidents hiding in your alert queue will only become visible when you clear away the noise efficiently enough to spot them.

Take control of your alerts before they control you, leverage the intelligence resources available to you, and remember that becoming a great analyst isn’t about handling every alert. It’s about handling the right alerts in the right way.

FAQ

Tier 1 analysts are the first responders to every alert. High volume, repetitive tasks, and time pressure make it easy to overlook critical incidents and lead to burnout, stress, and reduced accuracy.

Overwhelmed analysts escalate incorrectly, miss key signals, and slow down triage. This cascades across the SOC, delaying incident response and weakening the organization’s security posture.

Threat intelligence adds immediate context to alerts, helping analysts understand whether an IOC is benign or malicious without manual research. This shortens triage time and reduces cognitive load.

TI Lookup provides fast, behavior-based context from millions of real sandbox runs. Analysts can check domains, hashes, IPs, and mutexes in seconds and see relationships, malware families, and activity patterns.

Yes. By revealing whether an indicator is tied to known malware, seen in threats before, or associated with clean activity, TI Lookup allows analysts to make confident classification decisions.

TI Lookup supports enrichment for domains, URLs, IP addresses, file hashes, mutexes, and many other IOCs, each supplemented by sandbox-based behavioral insights and real analyst data.

By reducing guesswork and manual searching, TI Lookup lowers stress, improves accuracy, and helps analysts manage workloads more sustainably — supporting long-term career growth instead of fatigue-driven turnover.

About ANY.RUN

ANY.RUN is a leading provider of interactive malware analysis and threat intelligence solutions. Today, 15,000+ organizations worldwide use ANY.RUN to speed up investigations, strengthen detection pipelines, and give their teams a clearer view of what’s really happening on their endpoints.

SOC teams using ANY.RUN report measurable improvements, including:

- 3× boost in SOC efficiency;

- 95% faster initial triage;

- Up to 58% more threats identified;

- 21-minute reduction in MTTR per incident.

Start your 14-day trial of ANY.RUN today →

The post How to See Critical Incidents in Alert Overload: A Guide for SOCs and MSSPs appeared first on ANY.RUN’s Cybersecurity Blog.
