React2Shell flaw (CVE-2025-55182) exploited for remote code execution

Post Content

Sophos Blogs – Read More

Welcome to this week’s edition of the Threat Source newsletter.

For us in America, we’re in the holiday doldrums and things slow and/or shut down until the new year. At Cisco, we shut down the last week of the year to reset and recharge, and I’ve grown to be quite fond of it. I’ve worked plenty of gigs where there were no holiday breaks, and now that I’m living that dream, I gotta tell ya, it’s a damn civilized way to live if you can get it.

It’s only natural for us to think on 2025 — what happened to us, what made the news, and with some trepidation (and maybe some hope) what lies in store for 2026.

I thought I’d summarize the notable things that come to mind for me:

If you celebrate, enjoy the holidays. At the same time, I know this season can feel especially lonely for those of us who are missing loved ones. This year I lost my grandmother, and I am still processing the tremendous grief and loss for someone who helped raise me to be the man I am today. Find the time to spend with others and be kind to yourself. Resist the urge to isolate yourself. Use the holidays to invest in yourself and your health. I believe in you. I’ll see you all in 2026.

For this end-of-year Talos Takes episode — and Hazel’s last as host — we took a time machine back to 2015 to ask, “What would a defender from back then think of the madness we deal with in 2025?” Alongside Pierre, Alex, and yours truly, we reminisced about our own journeys, then got into the real meat: just how much ransomware has exploded (thanks, “as-a-service” model), why identity is now the main battleground, and how the lines between state-sponsored actors and APTs have blurred to the point of being almost meaningless.

You don’t need me to tell you it’s a different world than it was ten years ago. The ransomware industry is bigger and nastier than ever, and attackers are more organized, more efficient, and more professionalized. The tools (and the stakes) keep changing, but burnout and complexity are constants. If you’re not keeping pace, you’re falling behind, and the attackers aren’t waiting up.

Don’t panic, and don’t try to win it all alone. Double down on the basics, like identity and access management and keeping tabs on those “service accounts” that keep multiplying. Make sure your team is trained, supported, and has permission to step away from the keyboard once in a while. Don’t get distracted by AI; it is powerful, but it’s not a magic bullet. And maybe most important of all: Take care of yourself and your people. 2026 is going to bring more of the same (and some surprises), but if you stay grounded, curious, and human, you’ll be ready for whatever’s next.

Microsoft: Recent Windows updates break VPN access for WSL users

This known issue affects users who installed the KB5067036 October 2025 non-security update, released October 28th, or any subsequent updates, including the KB5072033 cumulative update released during this month’s Patch Tuesday. (Bleeping Computer)

French Interior Ministry confirms cyber attack on email servers

While the attack (detected overnight between Thursday, December 11, and Friday, December 12) allowed the threat actors to gain access to some document files, officials have yet to confirm whether data was stolen. (Bleeping Computer)

In-the-wild exploitation of fresh Fortinet flaws begins

The two flaws (CVE-2025-59718 and CVE-2025-59719 [CVSS score of 9.8]) are described as improper verification of cryptographic signature issues impacting FortiOS, FortiWeb, FortiProxy, and FortiSwitchManager. (SecurityWeek)

Google to shut down dark web monitoring tool in February 2026

Google has announced that it’s discontinuing its dark web report tool in February 2026, less than two years after it was launched as a way for users to monitor if their personal information is found on the dark web. (The Hacker News)

Compromised IAM credentials power a large AWS crypto mining campaign

The activity, first detected on Nov. 2, 2025, employs never-before-seen persistence techniques to hamper incident response and continue unimpeded, according to a new report shared by the tech giant ahead of publication. (The Hacker News)

Humans of Talos: Lexi DiScola

Amy chats with Senior Cyber Threat Analyst Lexi DiScola, who brings a political science and French background to her work tracking global cyber threats. Even as most people wind down for the holidays, Lexi is tackling the Talos 2025 Year in Review.

UAT-9686 actively targets Cisco Secure Email Gateway and Secure Email and Web Manager

Our analysis indicates that the compromised appliances we have observed have non-standard configurations, as described in Cisco’s advisory.

TTP: Talking through a year of cyber threats, in five questions

In this episode of the Talos Threat Perspective, Hazel is joined by Talos’ Head of Outreach Nick Biasini to reflect on what stood out, what surprised them, and what didn’t in 2025. What might defenders want to think about differently as we head into 2026?

We’ll be back in 2026 — see ya then!

SHA256: 9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507

MD5: 2915b3f8b703eb744fc54c81f4a9c67f

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507

Example Filename: 9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507.exe

Detection Name: Win.Worm.Coinminer::1201

SHA256: a31f222fc283227f5e7988d1ad9c0aecd66d58bb7b4d8518ae23e110308dbf91

MD5: 7bdbd180c081fa63ca94f9c22c457376

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=a31f222fc283227f5e7988d1ad9c0aecd66d58bb7b4d8518ae23e110308dbf91

Example Filename: e74d9994a37b2b4c693a76a580c3e8fe_3_Exe.exe

Detection Name: Win.Dropper.Miner::95.sbx.tg

SHA256: 96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974

MD5: aac3165ece2959f39ff98334618d10d9

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974

Example Filename: 96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974.exe

Detection Name: W32.Injector:Gen.21ie.1201

SHA256: 90b1456cdbe6bc2779ea0b4736ed9a998a71ae37390331b6ba87e389a49d3d59

MD5: c2efb2dcacba6d3ccc175b6ce1b7ed0a

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=90b1456cdbe6bc2779ea0b4736ed9a998a71ae37390331b6ba87e389a49d3d59

Example Filename: ck8yh2og.dll

Detection Name: Auto.90B145.282358.in02

SHA256: 1aa70d7de04ecf0793bdbbffbfd17b434616f8de808ebda008f1f27e80a2171b

MD5: a8fd606be87a6f175e4cfe0146dc55b2

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=1aa70d7de04ecf0793bdbbffbfd17b434616f8de808ebda008f1f27e80a2171b

Example Filename: 1aa70d7de04ecf0793bdbbffbfd17b434616f8de808ebda008f1f27e80a2171b.exe

Detection Name: W32.1AA70D7DE0-95.SBX.TG
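Defenders who want to check local files against the hashes above can do so with a few lines of Python. The sketch below is a minimal illustration, assuming Python 3.9+ and that the files are readable from the host running it; the function names and the directory-scan helper are hypothetical, not part of any Talos tooling. It computes both digests in one pass because the list above pairs each SHA256 with an MD5, but it matches on SHA256 only.

```python
import hashlib
from pathlib import Path

# SHA256 values copied from the list above. Matching uses SHA256 alone;
# the MD5 is computed only so results can be compared with the listing.
KNOWN_BAD_SHA256 = {
    "9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507",
    "a31f222fc283227f5e7988d1ad9c0aecd66d58bb7b4d8518ae23e110308dbf91",
    "96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974",
    "90b1456cdbe6bc2779ea0b4736ed9a998a71ae37390331b6ba87e389a49d3d59",
    "1aa70d7de04ecf0793bdbbffbfd17b434616f8de808ebda008f1f27e80a2171b",
}


def file_digests(path: Path, chunk_size: int = 1 << 16) -> tuple[str, str]:
    """Return (sha256_hex, md5_hex) for a file, reading it in chunks."""
    sha256 = hashlib.sha256()
    # usedforsecurity=False keeps MD5 usable on FIPS-restricted Python builds.
    md5 = hashlib.md5(usedforsecurity=False)
    with path.open("rb") as fh:
        while chunk := fh.read(chunk_size):
            sha256.update(chunk)
            md5.update(chunk)
    return sha256.hexdigest(), md5.hexdigest()


def is_known_bad(path: Path, known: set[str] = KNOWN_BAD_SHA256) -> bool:
    """True if the file's SHA256 appears in the known-bad set."""
    sha256_hex, _md5_hex = file_digests(path)
    return sha256_hex in known


def scan_directory(root: Path, known: set[str] = KNOWN_BAD_SHA256) -> list[Path]:
    """Return every regular file under root whose SHA256 is known-bad."""
    return sorted(
        p for p in root.rglob("*") if p.is_file() and is_known_bad(p, known)
    )
```

For example, `scan_directory(Path("Downloads"))` would list any files under that folder whose SHA256 appears in the set. A match is strong evidence, but a miss proves little, since a repacked sample has a new hash.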

Cisco Talos Blog – Read More

In November 2025, Kaspersky experts uncovered a new stealer named Stealka, which targets Windows users’ data. Attackers are using Stealka to hijack accounts, steal cryptocurrency, and install a crypto miner on their victims’ devices. Most frequently, this infostealer disguises itself as game cracks, cheats and mods.

Here’s how the attackers are spreading the stealer, and how you can protect yourself.

A stealer is a type of malware that collects confidential information stored on the victim’s device and sends it to the attackers’ server. Stealka is primarily distributed via popular platforms like GitHub, SourceForge, Softpedia, sites.google.com, and others, disguised as cracks for popular software, or cheats and mods for games. For the malware to be activated, the user must run the file manually.

Here’s an example: a malicious Roblox mod published on SourceForge.

And here’s one on GitHub posing as a crack for Microsoft Visio.

Sometimes, however, attackers go a step further (and possibly use AI tools) to create entire fake websites that look quite professional. Without the help of a robust antivirus, the average user is unlikely to realize anything is amiss.

Admittedly, the cracks and software advertised on these fake sites can sometimes look a bit off. For example, here the attackers are offering a download for Half-Life 3, while at the same time claiming it’s not actually a game but some kind of “professional software solution designed for Windows”.

Malware disguised as Half-Life 3, which is also somehow “a professional software solution designed for Windows”. A lot of professionals clearly spent their best years on this software…

The truth is that both the page title and the filename are just bait. The attackers simply use popular search terms to lure users into downloading the malware. The actual file content has nothing to do with what’s advertised — inside, it’s always the same infostealer.

The site also claimed that all hosted files were scanned for viruses. When the user decides to download, say, a pirated game, the site displays a banner saying the file is being scanned by various antivirus engines. Of course, no such scanning actually takes place; the attackers are merely trying to create an illusion of trustworthiness.

Stealka has a fairly extensive arsenal of capabilities, but its prime target is data from browsers built on the Chromium and Gecko engines. This puts over a hundred different browsers at risk, including popular ones like Chrome, Firefox, Opera, Yandex Browser, Edge, Brave, as well as many, many others.

Browsers store a huge amount of sensitive information, which attackers use to hijack accounts and continue their attacks. The main targets are autofill data, such as sign-in credentials, addresses, and payment card details. We’ve warned repeatedly that saving passwords in your browser is risky — attackers can extract them in seconds. Cookies and session tokens are perhaps even more valuable to hackers, as they can allow criminals to bypass two-factor authentication and hijack accounts without entering the password.

The story doesn’t end with the account hack. Attackers use these compromised accounts to spread the malware further. For example, we discovered the stealer in a GTA V mod posted on a dedicated site by an account that had previously been compromised.

Beyond stealing browser data, Stealka also targets the settings and databases of 115 browser extensions for crypto wallets, password managers, and 2FA services. Here are some of the most popular extensions now at risk:

Finally, the stealer also downloads local settings, account data, and service files from a wide variety of applications:

That’s an extensive list — and we haven’t even named all of them! In addition to local files, this infostealer also harvests general system data: a list of installed programs, the OS version and language, username, computer hardware information, and miscellaneous settings. And as if that weren’t enough, the malware also takes screenshots.

Curious what other stealers are out there, and what they’re capable of? Read more in our other posts:

Kaspersky official blog – Read More

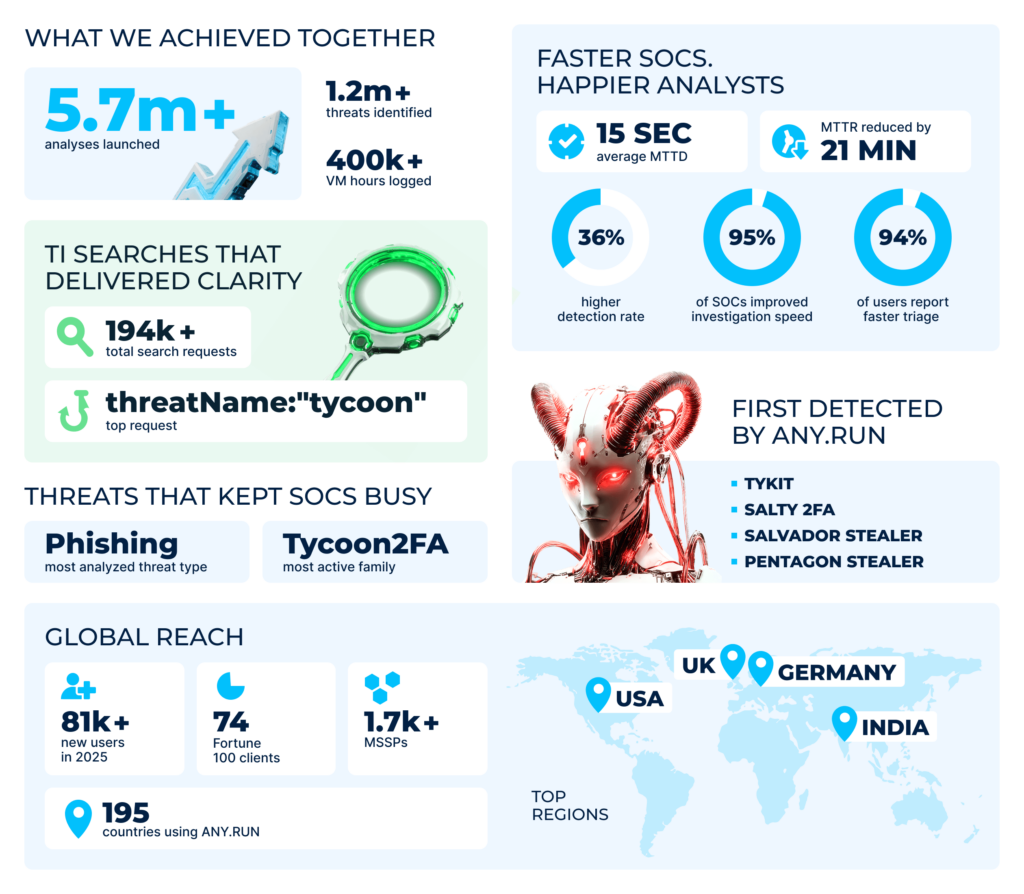

It’s December — that time of year when we take a pause and look back at how much we’ve achieved.

If you’re reading this, chances are you’ve shared these wins with us. Maybe you’ve launched one analysis, maybe thousands. Maybe you’ve browsed our Threat Intelligence Lookup daily or just joined us. Anyhow, thanks for being here!

2025 kept all of us busy for sure. But it also brought a ton of breakthrough studies, insights, and improvements. Let’s glance back at the year and see what we accomplished together — through numbers, stories, and proud moments.

We bet it’s safe to say that no analyst was idle this year, and the numbers support this statement: the total number of analyses launched in ANY.RUN’s Interactive Sandbox across 195(!) countries exceeded 5.7 million, with 1.1 million threats uncovered in the process.

Our most active users this year were based in the US, Germany, the UK, and India. Many of them represent large enterprises. In fact, 74 of the Fortune 100 companies used our sandbox this year.

The community overall kept growing: out of 500,000+ users, 81K joined us this year, bringing new insights with them.

Altogether, ANY.RUN’s users have spent 400,000+ hours in our sandbox — that’s more than 45 years of research! Just imagine how much longer it would take without a solution built for fast and efficient analysis.

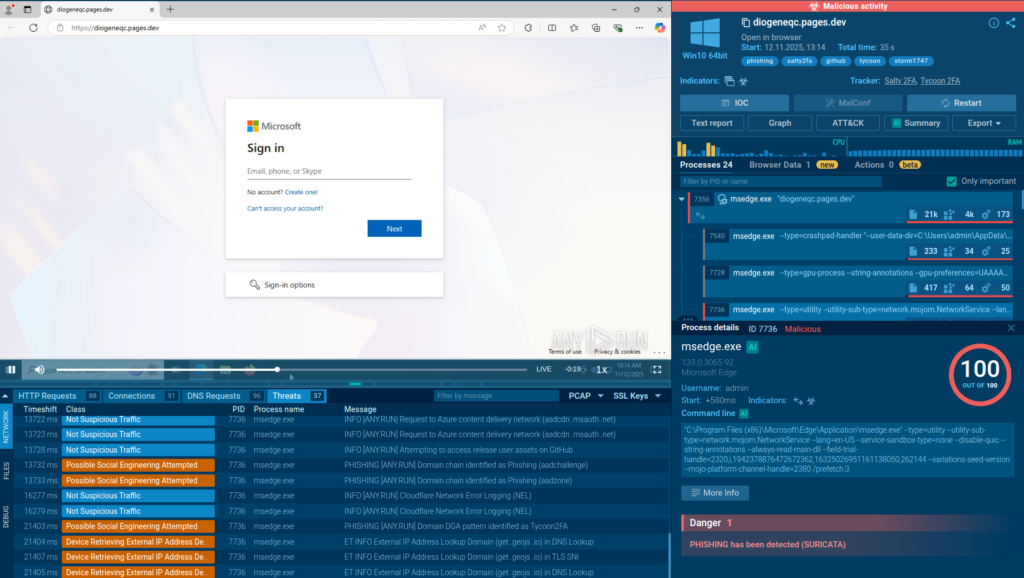

When it comes to what exactly our community analyzed most, there are no surprises: in 2025, phishing continued to reign over the threat landscape. In particular, the most active threat was Tycoon2FA.

The top suspects among file types were executables, ZIP archives, PDFs, and emails (EML and MSG), a clear sign of how widespread both file- and email-based malware are.

But no threat should scare an analyst equipped with strong security solutions. Here are some of the tangible results reported by ANY.RUN’s users in 2025:

| Measurable impact with ANY.RUN, 2025 | Result |
|---|---|
| Average MTTD | 15 seconds |
| MTTR reduced by | 21 minutes |
| Investigation speed improved | in 95% of SOCs |

This is solid proof that our malware analysis and threat intelligence solutions change SOC workflows for the better.

This year we broadened the sandbox horizons by adding new operating systems to our VM for more flexible and realistic environments.

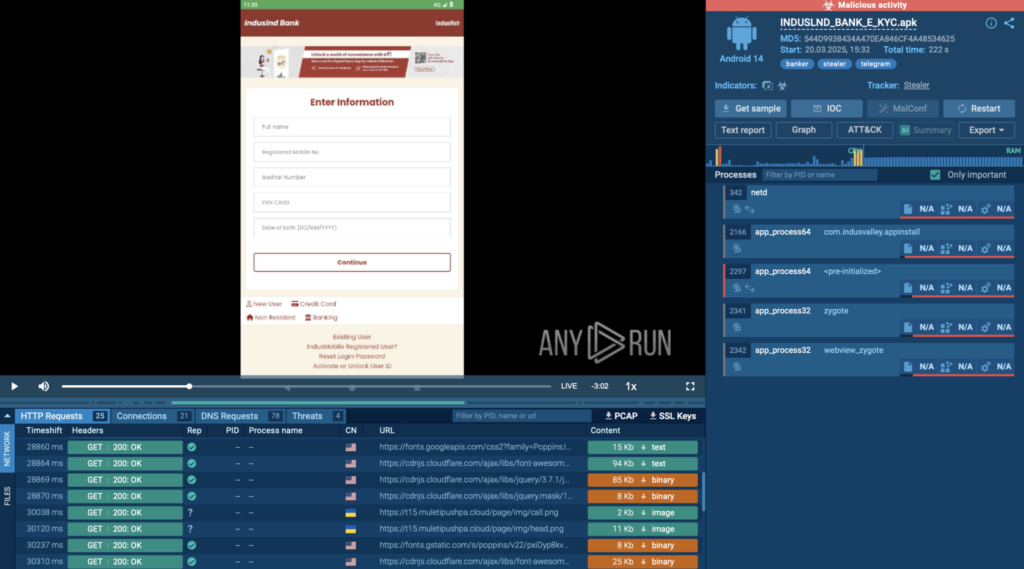

For teams tackling mobile threats, we introduced Android support. It lets you upload, interact with, and analyze APK files in ANY.RUN’s virtual machine, which closely replicates a real Android device. Great timing, since mobile threats have been pretty active this year! But more on that below.

We also added Debian Linux, helping you detonate ARM-based threats. As of 2025, you can run full-scale analysis of malware built for IoT devices and other ARM systems in ANY.RUN’s Interactive Sandbox.

Thanks to these and other updates, our sandbox became even more universal and useful for faster, deeper, and more reliable analysis.

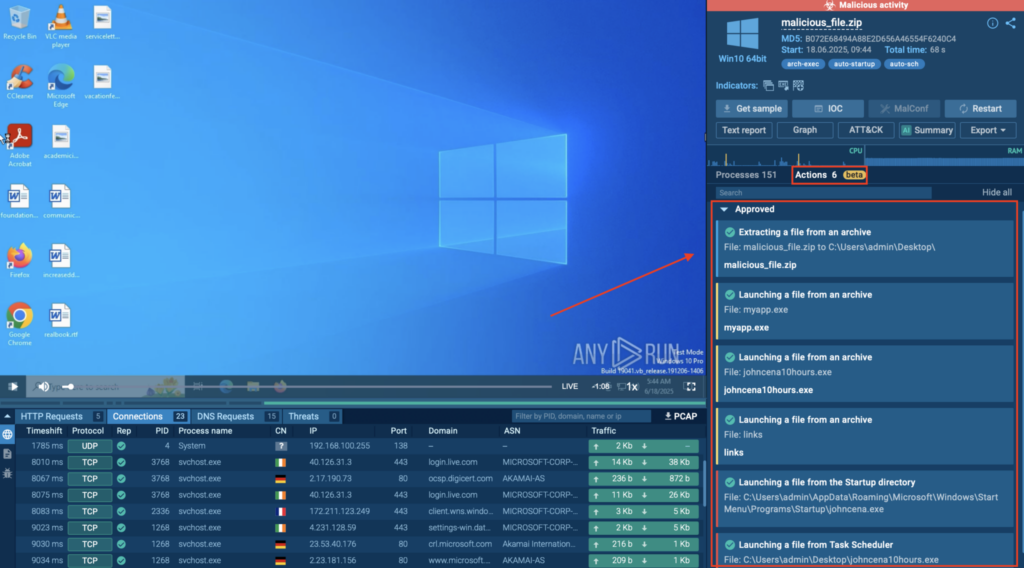

When it comes to malware analysis, it’s not always clear where to start, as threats grow increasingly complex and evasive. To simplify the process of uncovering them, we came up with Detonation Actions: hints that guide you through the analysis in ANY.RUN’s Sandbox as you search for hidden threats.

Another feature we added solves one of the most time-consuming parts of detection: rule creation. Now our sandbox is equipped with AI Sigma Rules that reveal the logic behind threat behavior while saving manual effort. Just copy them to your SIEM, SOAR, or EDR for smooth deployment.

In 2025, our users made almost 195k requests in Threat Intelligence Lookup in search of actionable insights and verified indicators. Tycoon topped the list as the most searched malware.

Thanks to our global community, we have access to a rich collection of fresh, verified, ready- and safe-to-use data. It would be a shame not to share it with the world, right?

This year, we also took an important step to make TI Lookup more accessible: we introduced the Free plan, giving everyone the opportunity to enrich threat research with 100% verified context at no cost. It’s a perfect way to tap into quality intel and see it bring tangible results.

We also supported knowledge exchange by launching TI Reports, analyst-driven articles covering APTs, campaigns, and emerging threats. Each report comes with IOCs and queries for a deeper dive.

Finally, in 2025 we boosted our users’ threat monitoring capabilities with Industry & geo threat landscape. It shows exactly how a given threat or indicator relates to sectors and countries, a real lifesaver for those drowning in alerts with no context.

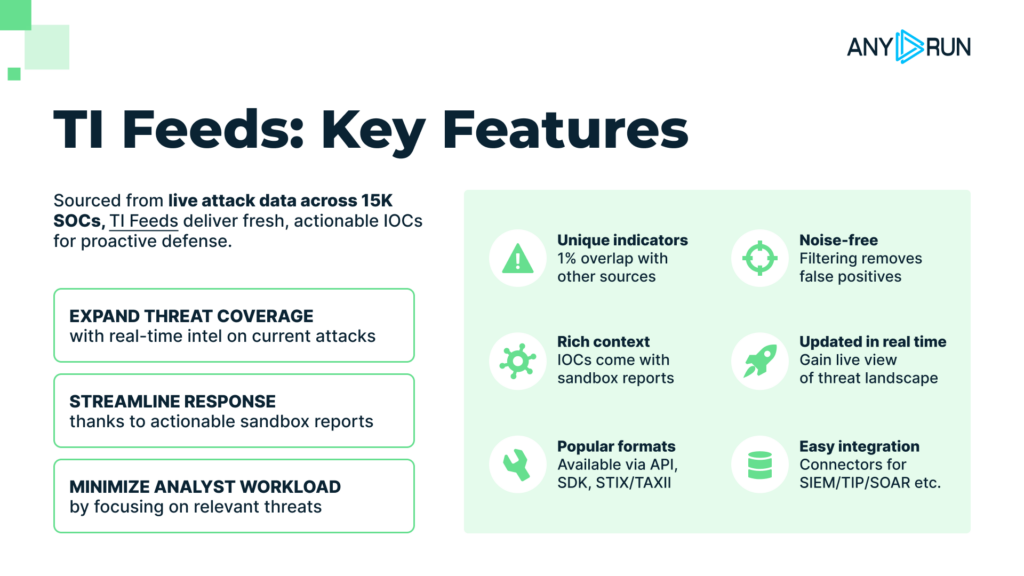

Throughout 2025, Threat Intelligence Feeds grew both in terms of data and interoperability. It was powered by constant data updates coming from over 15K SOC teams, which guarantee that TI Feeds always remain on point.

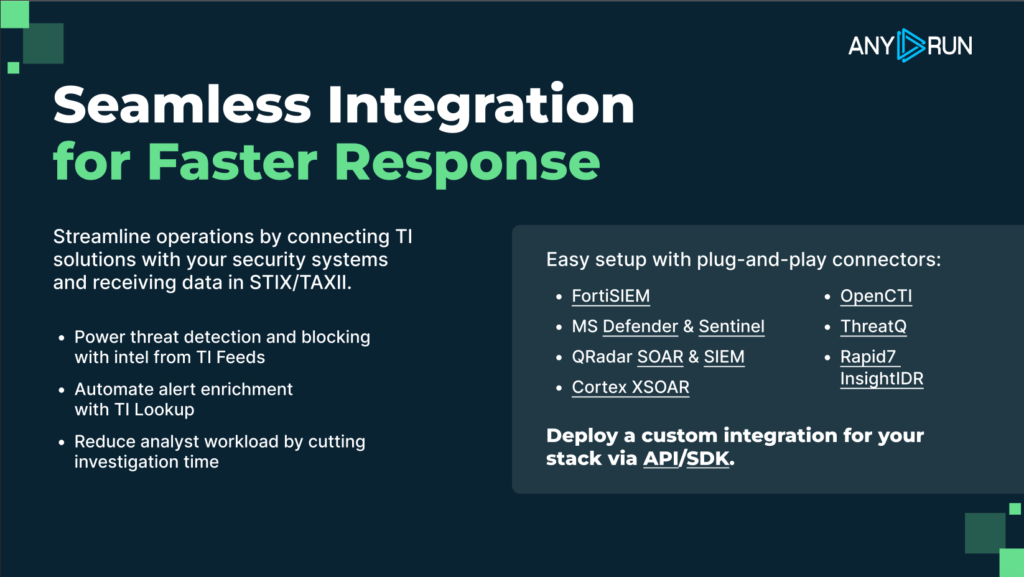

The STIX/TAXII integration made the delivery of fresh, real-time data more efficient. And newly added integrations, like the ThreatQ + TI Feeds connector, brought in live, behavior-based malware intelligence for better prioritization and contextualization of indicators.
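For readers unfamiliar with STIX, the sketch below shows roughly what a single file-hash indicator looks like in STIX 2.1 JSON, the object format that TAXII servers deliver. It is a minimal, hypothetical example built with the Python standard library; it is not ANY.RUN’s feed schema, and the function names are illustrative. Real feeds may attach far more context (labels, confidence, relationships to malware objects).

```python
import json
import uuid
from datetime import datetime, timezone

HEX_DIGITS = set("0123456789abcdef")


def stix_file_hash_indicator(sha256_hex: str, name: str) -> dict:
    """Build a minimal STIX 2.1 Indicator for one SHA-256 file hash."""
    sha256_hex = sha256_hex.lower()
    if len(sha256_hex) != 64 or not set(sha256_hex) <= HEX_DIGITS:
        raise ValueError("expected a 64-character hex SHA-256 digest")
    # STIX timestamps are UTC, RFC 3339 style, ending in "Z".
    now = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%fZ")
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": now,
        "modified": now,
        "name": name,
        # STIX patterning: match file objects whose SHA-256 equals the IOC.
        "pattern": f"[file:hashes.'SHA-256' = '{sha256_hex}']",
        "pattern_type": "stix",
        "valid_from": now,
    }


def make_bundle(objects: list[dict]) -> str:
    """Wrap STIX objects in a bundle and serialize them as JSON."""
    bundle = {"type": "bundle", "id": f"bundle--{uuid.uuid4()}", "objects": objects}
    return json.dumps(bundle, indent=2)
```

A TAXII client would typically receive objects like these inside a collection response and hand the `pattern` field to whatever matching engine the SIEM or TIP uses.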

Our goal is to make your workflow smoother and more efficient, simplifying daily tasks and automating what’s possible. One of the steps we took in this direction is the launch of our SDK, which makes it easy to connect our solutions with tools you’re already using.

We also released a lot of ready-to-use integrations, such as:

These and other integrations and connectors support your work without disrupting the way you already operate.

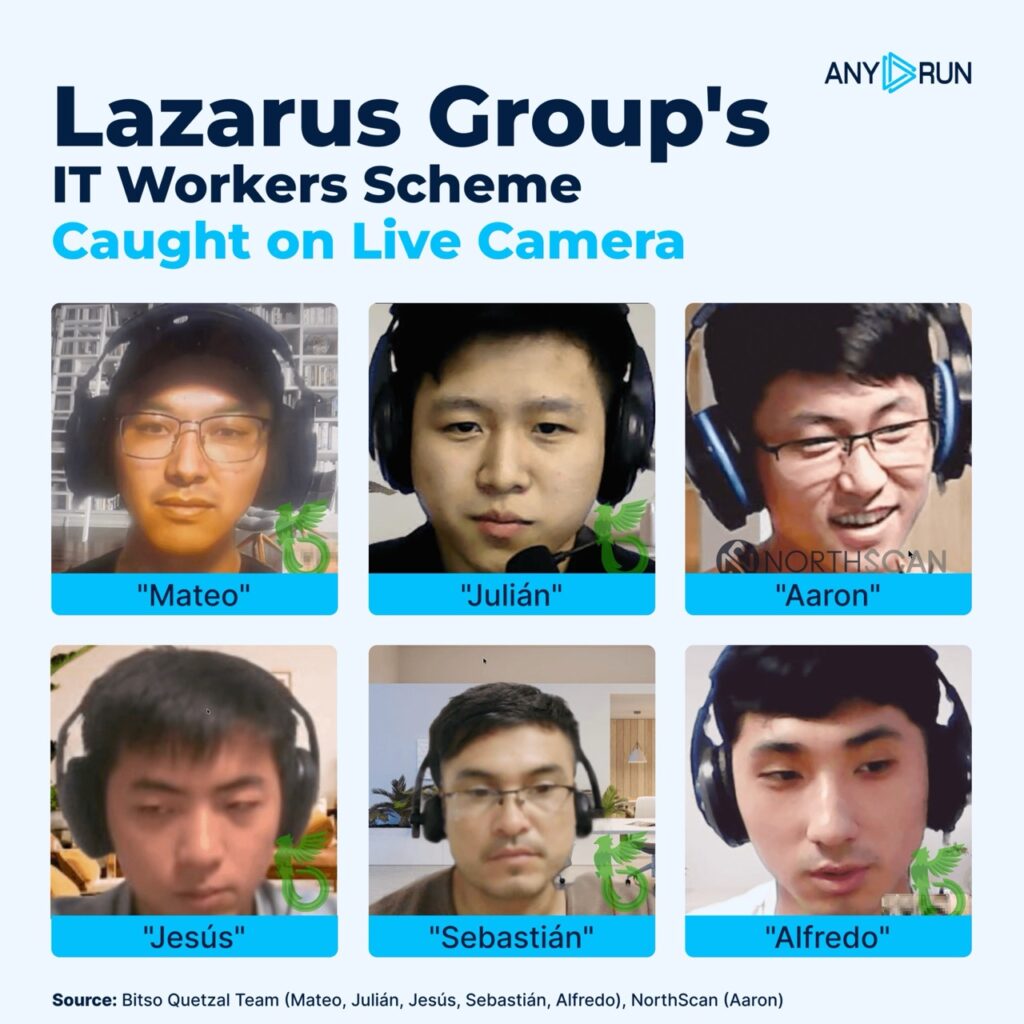

In 2025, ANY.RUN was the first to uncover multiple campaigns and malware families, giving a head start to the entire cybersecurity community. Let’s recap the most notable cases:

A newly discovered PhaaS framework that quickly rose to the level of major phishing kits in today’s threat landscape, thanks to its ability to distribute payloads at scale, intercept 2FA methods, and use complex communication models.

Some of this year’s notable threats were Android-based, and we were able to break them down in detail and analyze their behavior in our sandbox.

In October, we took a closer look at Tykit, a credential-stealing malware. It may not reinvent phishing per se, but it clearly demonstrates how a tiny loophole in a defense system can lead to significant real-world impact.

We ended the year by detecting a hybrid cross-kit threat that combines two phishing frameworks, Salty2FA and Tycoon2FA, multiplying the dangers of both.

2025 brought us a handful of awards, indicating recognition and acclaim in the industry, for which we’re super grateful.

| Award | Title |
|---|---|
| Top InfoSec Innovators Awards | Winner at Trailblazing Threat Intelligence |
| Globee Awards | Gold winner (TI Lookup); Silver winner (Sandbox) |
| Cybersecurity Excellence Awards | Best TI Service |
| CyberSecurity Breakthrough Awards | Threat Intelligence Company of 2025 |

What we appreciate more than anything, however, is our community. Every nomination, vote, and kind word reflects your trust. A big thank-you to everyone involved!

Alongside TI Reports you can find in TI Lookup, we regularly share technical analyses on our blog. 2025 was no exception. We published many nuanced studies of both newly discovered and evolved threats.

These and other reports by ANY.RUN are a testament to how interactive sandboxing and knowledge exchange make analysis sharper and the entire community stronger.

We’ve grown a lot this year and we’re not planning to stop. Here’s a peek into what we’re working on and what you can expect from ANY.RUN in the coming year:

Everything’s changing — threats, TTPs, security measures… But our goal stays the same: to make malware analysis and threat investigations faster, easier, and smarter.

Thanks for analyzing, researching, experimenting, and growing together with us. Every contribution, insight, and piece of feedback brings us closer to a more secure future.

Have alert-free holidays and stay safe in 2026!

ANY.RUN supports over 500,000 cybersecurity professionals around the world. Its Interactive Sandbox makes malware analysis easier by enabling the investigation of threats targeting Windows, Android, and Linux systems. ANY.RUN’s threat intelligence solutions—Threat Intelligence Lookup and TI Feeds—allow teams to quickly identify IOCs and analyze files, helping them better understand threats and respond to incidents more efficiently.

Start a 2-week trial of ANY.RUN’s solutions →

The post Year in Review by ANY.RUN: Key Threats, Solutions, and Breakthroughs of 2025 appeared first on ANY.RUN’s Cybersecurity Blog.

ANY.RUN’s Cybersecurity Blog – Read More

Cisco Talos’ Vulnerability Discovery & Research team recently disclosed vulnerabilities in Biosig Project libbiosig, Grassroots DiCoM, and Smallstep step-ca.

The vulnerabilities mentioned in this blog post have been patched by their respective vendors, all in adherence to Cisco’s third-party vulnerability disclosure policy.

For Snort coverage that can detect the exploitation of these vulnerabilities, download the latest rule sets from Snort.org, and our latest Vulnerability Advisories are always posted on Talos Intelligence’s website.

Discovered by Mark Bereza of Cisco Talos.

BioSig is an open source software library for biomedical signal processing. The BioSig Project seeks to encourage research in biomedical signal processing by providing open source software tools.

TALOS-2025-2296 (CVE-2025-66043 through CVE-2025-66048) includes several stack-based buffer overflow vulnerabilities in the MFER parsing functionality of Biosig Project libbiosig 3.9.1. An attacker can supply a specially crafted MFER file to trigger these vulnerabilities, possibly leading to arbitrary code execution.
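Stack-based overflows in file parsers often come from copying an attacker-controlled length field into a fixed-size buffer without checking it. The sketch below illustrates the two bounds checks such a parser needs, using a simplified, hypothetical record layout (one tag byte, one length byte, then the value). It is not the real MFER encoding and not libbiosig’s code. Python cannot overflow a stack buffer, so the fixed-size `bytearray` only stands in for a C destination array; the names `read_record`, `read_all`, and `MAX_VALUE_LEN` are illustrative.

```python
# Hypothetical fixed destination size, standing in for a C stack buffer.
MAX_VALUE_LEN = 64


class RecordError(ValueError):
    """Raised when a record would read or write out of bounds."""


def read_record(data: bytes, offset: int) -> tuple[int, bytes, int]:
    """Parse one tag/length/value record; return (tag, value, next_offset)."""
    if offset < 0 or offset + 2 > len(data):
        raise RecordError("truncated record header")
    tag = data[offset]
    length = data[offset + 1]
    start = offset + 2
    end = start + length
    # Check 1: the declared length must fit in the remaining input.
    if end > len(data):
        raise RecordError("declared length runs past end of input")
    # Check 2: the declared length must fit in the fixed-size destination.
    # Skipping this check is the classic stack-overflow bug in C parsers.
    if length > MAX_VALUE_LEN:
        raise RecordError("declared length exceeds destination buffer")
    dest = bytearray(MAX_VALUE_LEN)
    dest[:length] = data[start:end]
    return tag, bytes(dest[:length]), end


def read_all(data: bytes) -> list[tuple[int, bytes]]:
    """Parse consecutive records until the input is exhausted."""
    records = []
    offset = 0
    while offset < len(data):
        tag, value, offset = read_record(data, offset)
        records.append((tag, value))
    return records
```

Rejecting the file outright, rather than truncating the value, keeps a malformed or malicious input from being half-parsed into inconsistent state.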

Discovered by Emmanuel Tacheau of Cisco Talos.

Grassroots DiCoM is a C++ library for DICOM medical files, accessible from Python, C#, Java, and PHP. It supports RAW, JPEG, JPEG 2000, JPEG-LS, RLE and deflated transfer syntax. Talos found three out-of-bounds read vulnerabilities in DiCoM. An attacker can provide a malicious file to trigger these vulnerabilities.

Discovered by Stephen Kubik of the Cisco Advanced Security Initiatives Group (ASIG).

Smallstep step-ca is a TLS-secured online Certificate Authority (CA) for X.509 and SSH certificate management. TALOS-2025-2242 (CVE-2025-44005) is an authentication bypass vulnerability in step-ca. An attacker can bypass authorization checks and force a Step-CA ACME or SCEP provisioner to create certificates without completing certain protocol authorization checks.

· Cisco Talos recently discovered a campaign targeting Cisco AsyncOS Software for Cisco Secure Email Gateway, formerly known as Cisco Email Security Appliance (ESA), and Cisco Secure Email and Web Manager, formerly known as Cisco Content Security Management Appliance (SMA).

· We assess with moderate confidence that the adversary, who we are tracking as UAT-9686, is a Chinese-nexus advanced persistent threat (APT) actor whose tool use and infrastructure are consistent with other Chinese threat groups.

· As part of this activity, UAT-9686 deploys a custom persistence mechanism we track as “AquaShell” accompanied by additional tooling meant for reverse tunneling and purging logs.

· Our analysis indicates that the compromised appliances we have observed have non-standard configurations, as described in Cisco’s advisory.

Cisco Talos is tracking the active targeting of Cisco AsyncOS Software for Cisco Secure Email Gateway, formerly known as Cisco Email Security Appliance (ESA), and Cisco Secure Email and Web Manager, formerly known as Cisco Content Security Management Appliance (SMA). The activity enables attackers to execute system-level commands and deploy a persistent Python-based backdoor, AquaShell. Cisco became aware of this activity on December 10; it has been ongoing since at least late November 2025. Additional tools observed include AquaTunnel (a reverse SSH tunnel), chisel (another tunneling tool), and AquaPurge (a log-clearing utility). Talos’ analysis indicates that the compromised appliances we have observed have non-standard configurations, as described in Cisco’s advisory.

The Cisco Secure Email and Web Manager centralizes management and reporting functions across multiple Cisco Email Security Appliances (ESAs) and Web Security Appliances (WSAs), offering centralized services such as spam quarantine, policy management, reporting, tracking, and configuration management to simplify administration and enhance security enforcement.

Customers are strongly advised to follow the guidance published in the security advisories discussed below. Additional recommendations specific to Cisco are available here.

Talos assesses with moderate confidence that this activity is being conducted by a Chinese-nexus threat actor, which we track as UAT-9686. We have observed overlaps in tactics, techniques and procedures (TTPs), infrastructure, and victimology between UAT-9686 and other Chinese-nexus threat actors Talos tracks. Tooling used by UAT-9686, such as AquaTunnel (aka ReverseSSH), also aligns with previously disclosed Chinese-nexus APT groups such as APT41 and UNC5174. Additionally, the tactic of using a custom-made web-based implant such as AquaShell is increasingly being adopted by highly sophisticated Chinese-nexus APTs.

AquaShell is a lightweight Python backdoor that is embedded into an existing file within a Python-based web server. The backdoor is capable of receiving encoded commands and executing them in the system shell. It listens passively for unauthenticated HTTP POST requests containing specially crafted data. If such a request is identified, the backdoor will then attempt to parse the contents using a custom decoding routine and execute them in the system shell.

AquaShell is delivered as an encoded data blob that is decoded and ultimately placed in “/data/web/euq_webui/htdocs/index.py”.

The result of decoding the data blob is the Python code that constitutes the AquaShell backdoor. AquaShell parses the HTTP POST request, decodes its contents using a combination of a custom algorithm and Base64 decoding, and executes the resulting commands on the appliance.

AquaPurge removes lines containing specific keywords from the specified log files. It uses the “egrep” command with an inverted search to select all content that does not contain the keywords, then simply writes that content back to the log files:
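The effect of that inverted filter is easy to reproduce, and so is one way to spot it. The sketch below mimics `egrep -v` semantics on in-memory lines and then compares a local log against an off-box copy (for example, one forwarded to a remote syslog server), since a purge that only touches local files leaves the remote copy intact. It is a hypothetical illustration: Talos did not publish AquaPurge’s keyword list, the sample IP is made up, and keywords are treated as literal strings here, whereas `egrep` would interpret them as extended regular expressions.

```python
import re
from collections import Counter


def purge_lines(lines: list[str], keywords: list[str]) -> list[str]:
    """Mimic `egrep -v 'kw1|kw2'`: keep only lines matching none of the keywords."""
    if not keywords:
        return list(lines)
    # re.escape treats each keyword literally (egrep would parse regex syntax).
    pattern = re.compile("|".join(re.escape(k) for k in keywords))
    return [line for line in lines if not pattern.search(line)]


def missing_locally(remote: list[str], local: list[str]) -> list[str]:
    """Lines present in the off-box copy but absent locally, in remote order.

    Uses a multiset so that deleting one of several identical lines is
    still detected.
    """
    remaining = Counter(local)
    missing = []
    for line in remote:
        if remaining[line] > 0:
            remaining[line] -= 1
        else:
            missing.append(line)
    return missing
```

Any non-empty result from `missing_locally` deserves a closer look, though log rotation or buffering can also cause benign differences.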

AquaTunnel is a compiled GoLang ELF binary based on the open-source “ReverseSSH” backdoor. AquaTunnel creates a reverse SSH connection from the compromised system back to an attacker‑controlled server, enabling unauthorized remote access even when the system is behind firewalls or NAT.

Chisel is an open‑source tunneling tool that supports creating TCP/UDP tunnels over a single‑port HTTP‑based connection. Chisel allows an attacker to proxy traffic through a compromised edge device, allowing them to easily pivot through that device into the internal environment.

Recommendations for Cisco customers are available here. If your organization does find connections to the provided actor Indicators of Compromise (IOCs), please open a case with Cisco TAC.

All IOCs (including IPs and file hashes) determined to be associated with this campaign have been blocked across the Cisco portfolio.

The IOCs can also be found in our GitHub repository here.

AquaTunnel

2db8ad6e0f43e93cc557fbda0271a436f9f2a478b1607073d4ee3d20a87ae7ef

AquaPurge

145424de9f7d5dd73b599328ada03aa6d6cdcee8d5fe0f7cb832297183dbe4ca

Chisel

85a0b22bd17f7f87566bd335349ef89e24a5a19f899825b4d178ce6240f58bfc

172[.]233[.]67[.]176

172[.]237[.]29[.]147

38[.]54[.]56[.]95
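The IP addresses above are defanged with `[.]` so they cannot be clicked or accidentally resolved. A minimal sketch of refanging them and searching text logs for matches follows. It assumes plain-text logs containing dotted-quad IPv4 addresses, the function names are hypothetical, and it covers only the IPs, not the file hashes.

```python
import ipaddress
import re

# Defanged IPs copied from the list above.
DEFANGED_IPS = [
    "172[.]233[.]67[.]176",
    "172[.]237[.]29[.]147",
    "38[.]54[.]56[.]95",
]


def refang(indicator: str) -> str:
    """Undo the common "[.]" defanging convention."""
    return indicator.replace("[.]", ".")


IOC_IPS = {ipaddress.ip_address(refang(ip)) for ip in DEFANGED_IPS}

# Dotted-quad candidates; ipaddress validates each one afterwards.
IPV4_CANDIDATE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def matching_lines(lines: list[str]) -> list[tuple[int, str]]:
    """Return (line_number, ip) for every IOC address found in the lines."""
    hits = []
    for lineno, line in enumerate(lines, start=1):
        for candidate in IPV4_CANDIDATE.findall(line):
            try:
                ip = ipaddress.ip_address(candidate)
            except ValueError:
                continue  # not a valid address, e.g. 999.1.1.1
            if ip in IOC_IPS:
                hits.append((lineno, str(ip)))
    return hits
```

Reading a file with `pathlib.Path(...).read_text().splitlines()` and passing the result to `matching_lines` would report each matching line number. Comparing parsed `ipaddress` objects, rather than raw strings, avoids false matches on longer numbers that merely contain an IOC as a substring.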

The Australian Cyber Security Centre (ACSC) has published a new guide, Quantum Technology Primer: Overview, aimed at helping organizations understand the field of quantum technologies for cybersecurity. The publication is part of a bigger effort to raise awareness and preparedness as quantum capabilities move closer to practical deployment across digital systems and organizational infrastructure.

The primer provides a foundational understanding of key quantum technologies, the scientific principles behind them, and the cybersecurity considerations organizations need to address today to prepare for a quantum-enabled future. According to the ACSC, this guidance is essential for cybersecurity leaders, IT managers, and decision-makers responsible for technology strategy and risk management.

Quantum technologies rely on principles of quantum mechanics, the branch of physics that describes the behavior of matter and energy at atomic and subatomic scales. Two core concepts underpin these technologies: superposition and entanglement.

Superposition allows a particle to exist in multiple states simultaneously, collapsing to a single state only when measured. In practical terms, this property enables quantum systems to evaluate many potential outcomes at once, which for certain classes of problems could offer computational advantages far beyond those of classical computers.

Entanglement occurs when particles share a quantum state, creating correlations that persist even across great distances. Measuring one particle instantaneously provides information about the other. This capability underpins emerging quantum communication methods and has significant implications for secure data transmission.
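Both concepts have compact textbook notations (standard Dirac notation, not taken from the ACSC primer). A single qubit in superposition is written as

$$
|\psi\rangle = \alpha|0\rangle + \beta|1\rangle, \qquad |\alpha|^2 + |\beta|^2 = 1,
$$

where a measurement returns 0 with probability $|\alpha|^2$ and 1 with probability $|\beta|^2$. A maximally entangled two-qubit state (a Bell state) is

$$
|\Phi^+\rangle = \frac{1}{\sqrt{2}}\left(|00\rangle + |11\rangle\right).
$$

Measuring either qubit yields 0 or 1 with probability $1/2$ each, but the two outcomes always agree. Quantum communication schemes exploit this correlation; on its own, it cannot be used to send a message faster than light.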

The ACSC emphasizes that understanding these principles is no longer relevant only to quantum specialists. Decision-makers must grasp the basics to integrate quantum cybersecurity considerations into organizational planning effectively.

While many quantum technologies remain in development, their potential impact on digital systems, data protection, and organizational resilience is significant. The ACSC’s Technology Primer notes that quantum computing could render some current cryptographic methods obsolete.

“Preparing now for quantum technologies is crucial,” the ACSC states. “Adopting post-quantum cryptography is a key step, as capable quantum computers will break some existing encryption. Organizations that delay preparation risk vulnerabilities and costly remediation.”

The primer outlines several proactive steps organizations can take:

By incorporating these measures, organizations can strengthen their resilience and reduce potential threats from new quantum technologies.

The ACSC primer details several categories of quantum technologies that could affect business and cybersecurity landscapes:

Although most quantum technologies are still in the early stages, some are already integrated into research, development, and pilot implementations. The ACSC notes that as these technologies mature, they will become part of organizational supply chains and digital infrastructure, making awareness and preparedness essential.

The ACSC’s Technology Primer highlights quantum cybersecurity as a strategic priority, weighing both the risks and opportunities of quantum technologies. Organizations that plan for quantum today will be better prepared for a future where these technologies are standard. Cyble’s AI-powered threat intelligence and autonomous security solutions help identify new cyber threats, protect data, and maintain resilience.

Schedule a free demo to see how Cyble can protect your organization better!

The post Australia’s ACSC Releases Quantum Technology Primer for Cybersecurity Leaders appeared first on Cyble.

Cyble – Read More

Welcome back to Humans of Talos. This month, Amy chats with Senior Cyber Threat Analyst Lexi DiScola from the Strategic Analysis team. Lexi’s journey into cybersecurity is anything but traditional — she brings a background in political science and French to her work tracking global cyber threats and collaborating with colleagues across continents.

Tune in as Lexi opens up about finding her place in cybersecurity, the unique strengths that come from a non-technical path, and the joys (and challenges) of balancing complex intel analysis with a towering stack of books to be read (TBR) at home.

Amy Ciminnisi: Can you introduce yourself? What do you do here at Talos? What team do you work on, and what does your day-to-day look like?

Lexi DiScola: Sure. I’m on the strategic analysis team here at Talos. I joined about three years ago. What my team does is a whole bunch of things, really, but we focus on tracking and analyzing major trends in the cyber threat landscape. We maintain intelligence sharing relationships with a bunch of private sector and government partners. We conduct regular threat hunting activity in our telemetry and support the Talos Incident Response team. My favorite part is producing written analytical products: blogs, intelligence bulletins, threat assessment reports, and our annual Year in Review report, which we just started working on. We’ve kicked into high gear, prepping for the year in review, taking all the data we’ve accumulated and seeing what we can pull out of it. It sounds like a headache to some people, but for us, it’s fun, so we’re looking forward to it.

AC: What made you want to join Talos, and when did you join?

LD: I joined about three years ago this fall. I worked in cyber threat intelligence in a government position before. Because of that experience, I was always aware of Talos’s reputation in this space. When I was looking to shift to the private sector from the government, I knew I’d be working with some of the best of the best here. I knew I wouldn’t be stagnant if I came here. That was my focus in a new position — I always want to be learning and working toward something.

AC: What are your favorite resources for staying up to date with current trends in cybersecurity?

LD: There are multiple sources I look at. OSINT, or open-source intelligence, is a huge tool, especially when focusing on specific countries or nation-state actors. Looking at their local reporting and translating it is super helpful, and looking at competitors’ or cybersecurity researchers’ reporting is also useful. But I really rely on the people I work with. Talos has so many talented people who are always willing to help. At first, I was hesitant to ask questions, but as I got to know people better, I stopped feeling embarrassed. It’s a two-way street. You might feel awkward asking for help, but down the road, they may ask you for help with something you’re an expert in. Asking people and not being afraid or embarrassed has served me well.

Want to see more? Watch the full interview, and don’t forget to subscribe to our YouTube channel for future episodes of Humans of Talos.

Cisco Talos Blog – Read More

Our experts from the Global Research and Analysis Team (GReAT) have investigated a new wave of targeted emails from the ForumTroll APT group. Whereas the group’s earlier malicious emails were sent to organizations’ public addresses, this time the attackers targeted specific individuals: scientists at Russian universities and other organizations specializing in political science, international relations, and global economics. The goal of the campaign was to infect victims’ computers with malware that would give the attackers remote access to them.

The attackers sent the emails from the address support@e-library{.}wiki, which imitates the address of the scientific electronic library eLibrary (its real domain is elibrary.ru). The emails contained personalized links to a purported plagiarism-check report on some material, a lure the attackers evidently expected to interest academics.

In reality, the link downloaded an archive from the same e-library{.}wiki domain. Inside were a malicious .lnk file and a .Thumbs directory containing images that were apparently included to evade security technologies. The victim’s full name was used in the filenames of both the archive and the malicious shortcut (.lnk) file.

If a victim doubted the email’s legitimacy and visited the e-library{.}wiki page, they were shown a slightly outdated copy of the real website.

If the scientist who received the email clicked the .lnk file, a malicious PowerShell script ran on their computer and triggered a chain of infection. As a result, the attackers installed Tuoni, a commercial red-teaming framework, on the compromised machine, giving them remote access and further means to compromise the system. In addition, the malware used COM hijacking to achieve persistence, and downloaded and displayed a decoy PDF file whose name also included the victim’s full name. The file itself, however, was not personalized: it was a rather vague report formatted like the output of one of the Russian plagiarism detection systems.

Interestingly, if the victim tried to open the malicious link from a device whose operating system didn’t support PowerShell, they were prompted to try again from a Windows computer. A more detailed technical analysis of the attack, along with indicators of compromise, can be found in a post on the Securelist website.

The malware used in this attack is successfully detected and blocked by Kaspersky’s security products. We recommend installing a reliable security solution not only on all devices used by employees to access the internet, but also on the organization’s mail gateway, which can stop most threats delivered via email before they reach an employee’s device.

Kaspersky official blog – Read More