Old habits die hard: 2025’s most common passwords were as predictable as ever

Once again, data shows an uncomfortable truth: the habit of choosing eminently hackable passwords is alive and well

WeLiveSecurity – Read More


Hacktivists moved well beyond their traditional DDoS attacks and website defacements in 2025, increasingly turning to attacks on industrial control systems (ICS), ransomware, breaches, and data leaks as their sophistication and alignment with nation-state interests grew.

That was one of the conclusions in Cyble’s exhaustive new 2025 Threat Landscape report, from which this blog was adapted.

Looking ahead to 2026 and beyond, Cyble expects hacktivist attacks on critical infrastructure to keep growing, hacktivists to make increasing use of custom tools, and alignment between nation-state interests and hacktivists to deepen.

Between December 2024 and December 2025, several hacktivist groups increased their focus on ICS and operational technology (OT) attacks. Z-Pentest was the most active actor, conducting repeated intrusions against a wide range of industrial technologies. Dark Engine (Infrastructure Destruction Squad) and Sector 16 persistently targeted ICS, primarily exposing Human Machine Interfaces (HMI).

A secondary tier of groups, including Golden Falcon Team, NoName057(16), TwoNet, RipperSec, and Inteid, also claimed recurrent ICS-disrupting attacks, albeit on a smaller scale.

HMI and web-based Supervisory Control and Data Acquisition (SCADA) interfaces were the most frequently targeted systems, followed by a smaller number of Virtual Network Computing (VNC) compromises; these VNC compromises posed the greatest operational risk to several industries.

Building Management System (BMS) platforms and Internet of Things (IoT) or edge-layer controllers were also targeted in increasing numbers, reflecting the broader exploitation of weakly secured IoT interfaces.

Europe remained the primary region affected by pro-Russian hacktivist groups, with sustained targeting of Spain, Italy, the Czech Republic, France, Poland, and Ukraine contributing to the highest concentration of ICS-related intrusions.

State-aligned hacktivist activity remained persistent throughout 2025. Operation Eastwood (14–17 July) disrupted NoName057(16)’s DDoS infrastructure, prompting swift retaliatory attacks from the hacktivist group. The group rapidly rebuilt capacity and resumed operations against Ukraine, the EU, and NATO, underscoring the resilience of state-directed ecosystems.

U.S. indictments and sanctions further exposed alleged structured cooperation between Russian intelligence services and pro-Kremlin hacktivist fronts. The Justice Department detailed GRU-backed financing and tasking of the Cyber Army of Russia Reborn (CARR), as well as the state-sanctioned development of NoName057(16)’s DDoSia platform.

Z-Pentest, identified as part of the same CARR ecosystem and attributed to GRU, continued targeting EU and NATO critical infrastructure, reinforcing the convergence of activist personas, state mandates, and operational doctrine.

Pro-Ukrainian hacktivist groups, though not formally state-directed, conducted sustained, destructive operations against networks linked to the Russian military. The BO Team and the Ukrainian Cyber Alliance conducted several data destruction and wiper attacks, encrypting systems at key Russian businesses and state institutions. Ukrainian actors repeatedly stated that exfiltrated datasets were passed to national intelligence services.

Hacktivist groups Cyber Partisans BY (Belarus) and Silent Crow claimed a year-long Tier-0 compromise of Aeroflot’s IT environment, allegedly exfiltrating more than 20TB of data, sabotaging thousands of servers, and disrupting core airline systems, a breach that Russia’s General Prosecutor confirmed caused significant operational outages and flight cancellations.

Research into BQT.Lock (BaqiyatLock) suggests a plausible ideological alignment with Hezbollah, as evidenced by narrative framing and targeting posture. However, no verifiable technical evidence has confirmed a direct organizational link.

Cyb3r Av3ngers, associated with the Islamic Revolutionary Guard Corps (IRGC), struck critical infrastructure assets, including electrical networks and water utilities in Israel, the United States, and Ireland. After being banned on Telegram, the group resurfaced under the alias Mr. Soul Team.

Tooling and capability development by hacktivist groups also grew significantly in 2025. Observed activities have included:

In 2025, hacktivism evolved into a globally coordinated threat, closely tracking geopolitical flashpoints. Armed conflicts, elections, trade disputes, and diplomatic crises fueled intensified campaigns against state institutions and critical infrastructure, with hacktivist groups weaponizing cyber-insurgency to advance their propaganda agendas.

Pro-Ukrainian, pro-Palestinian, pro-Iranian, and other nationalist groups launched ideologically driven campaigns tied to the Russia-Ukraine War, the Israel-Hamas conflict, Iran-Israel tensions, South Asian tensions, and the Thailand-Cambodia border crisis. Domestic political unrest in the Philippines and Nepal triggered sustained attacks on government institutions.

Cyble recorded a 51% increase in hacktivist sightings in 2025, from 700,000 in 2024 to 1.06 million in 2025, with the bulk of activity focused on Asia and Europe (chart below).

Pro-Russian state-aligned hacktivists and pro-Palestinian, anti-Israel collectives continued to be the primary drivers of hacktivist activity throughout 2025, shaping the operational tempo and geopolitical focus of the threat landscape.

Alongside these dominant ecosystems, Cyble observed a marked increase in operations by Kurdish hacktivist groups and emerging Cambodian clusters, both of which conducted campaigns closely aligned with regional strategic interests.

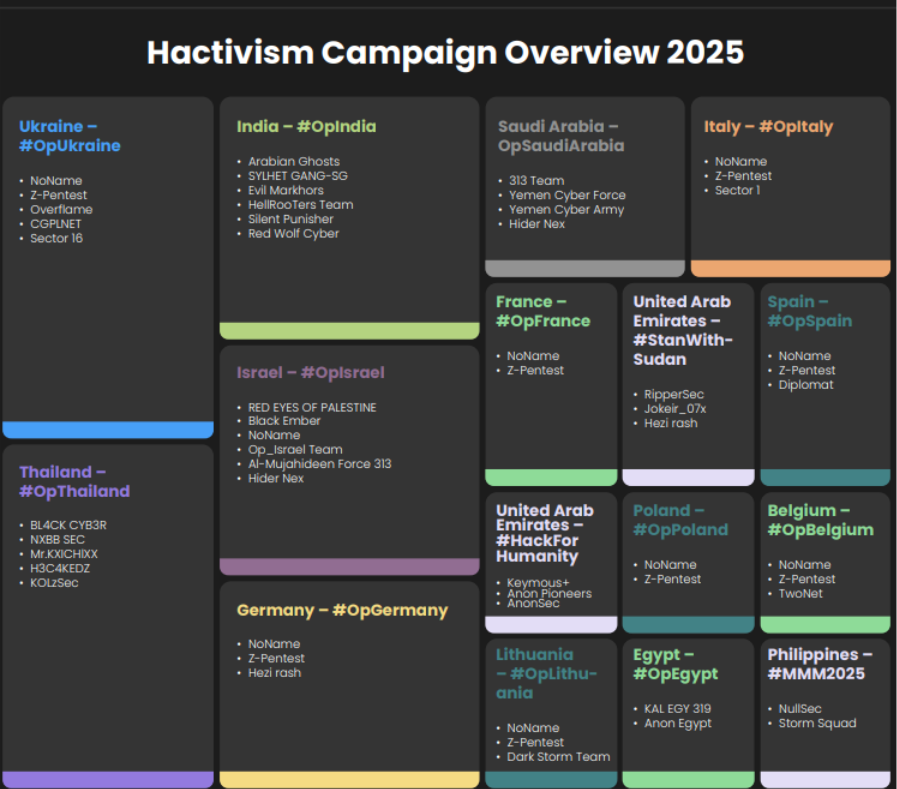

Below are some of the major hacktivist groups of 2025:

India, Ukraine, and Israel were the countries most impacted by hacktivist activity in 2025 (country breakdown below).

Among global regions targeted, Europe and NATO faced a sustained pro-Russian campaign marked by coordinated DDoS attacks, data leaks, and escalating ICS intrusions against NATO and EU member states. Government & LEA, Energy & Utilities, Manufacturing, and Transportation were consistent targets.

In the Middle East, Israel remained the principal target amid escalation related to the Gaza conflict, the Iran-Israel confrontation, and Yemen-Saudi hostilities. Saudi Arabia, UAE, Egypt, Jordan, Iraq, Syria, and Yemen faced sustained DDoS attacks, defacements, data leaks, and illicit access to exposed ICS assets from ideologically aligned coalitions operating across the region.

In South Asia, India-Pakistan and India-Bangladesh tensions fueled high-volume, ideologically framed offensives, peaking around political flashpoints and militant incidents. Activity concentrated on Government & LEA, BFSI, Telecommunication, and Education.

In Southeast Asia, border tensions and domestic unrest shaped a fragmented but active theatre: Thailand-Cambodia conflicts triggered reciprocal DDoS and defacements; Indonesia & Malaysia incidents stemmed from political and social disputes; the Philippines saw attacks linked to internal instability; and Taiwan emerged as a recurring target for pro-Russian actors.

Below are some of the major hacktivist campaigns of 2025:

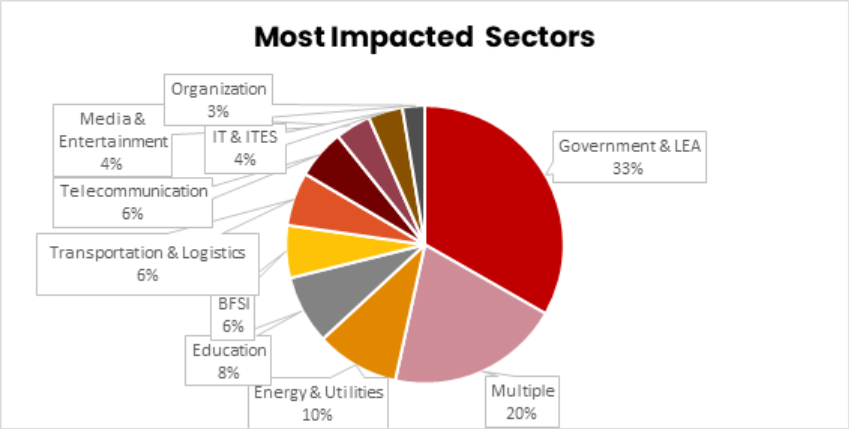

2025 witnessed a marked expansion of hacktivist focus across multiple industries. Government & LEA, Energy & Utilities, Education, IT & ITES, Transportation & Logistics, and Manufacturing experienced the most pronounced growth in targeting, driving the year’s overall increase in operational activity.

The dataset also reveals a broadened attack surface, with several new or significantly expanded categories, including Agriculture & Livestock, Food & Beverages, Hospitality, Construction, Automotive, and Real Estate.

Government & LEA was the most impacted sector by a wide margin, followed by Energy & Utilities (chart below).

Hacktivism has evolved into a geopolitically charged, ICS-focused threat, continuing to exploit exposed OT environments and increasingly weaponizing ransomware as a protest mechanism.

In 2026, hacktivists and cybercriminals will increasingly target exposed HMI/SCADA systems and attempt VNC takeovers, aided by public PoCs and automated scanning templates, creating ripple effects across the energy, water, transportation, and healthcare sectors.

Hacktivists and state actors will increasingly employ financially motivated tactics and present themselves as profit-driven actors. State actors in Iran, Russia, and North Korea will increasingly adopt RaaS platforms to fund operations and maintain plausible deniability. Critical infrastructure attacks in Taiwan, the Baltic states, and South Korea will appear financially motivated while serving geopolitical objectives, complicating attribution and response.

Critical assets should be isolated from the Internet wherever possible, and operational technology (OT) and IT networks should be segmented and protected with Zero Trust access controls. Vulnerability management, along with network and endpoint monitoring and hardening, is another critical cybersecurity best practice.

Cyble’s comprehensive attack surface management solutions can help by scanning network and cloud assets for exposures and prioritizing fixes, in addition to monitoring for leaked credentials and other early warning signs of major cyberattacks. Get a free external threat profile for your organization today.

The post Critical Infrastructure Attacks Became Routine for Hacktivists in 2025 appeared first on Cyble.

Cyble – Read More

The original and best cybersecurity system now includes Sophos Workspace Protection.


Sophos Blogs – Read More

An integrated bundle of security solutions that protect apps, data, workers, and guests easily and affordably – wherever they are.


Sophos Blogs – Read More

Summarizing the past year’s threat landscape based on activity observed in ANY.RUN’s Interactive Sandbox, this annual report provides insights into the most detected malware types, families, TTPs, and phishing threats of 2025.

For additional insights, view ANY.RUN’s quarterly malware trends reports.

| Metric | Count |
|---|---|
| Total | 6,891,075 |
| Malicious | 1,401,910 |
| Suspicious | 430,223 |
| IOCs | 3,807,063,591 |

In 2025, ANY.RUN experienced significant growth alongside a rise in malicious activity. The numbers reflect substantial growth in in-depth investigations and in detections of evasive threats facilitated by Interactive Sandbox:

As investigation volume and behavioral visibility increase, 15K+ security teams gain earlier detection, richer context, and faster response capabilities with ANY.RUN.

Interactive Sandbox helps them ensure a strong, enterprise-grade defense system by enabling:

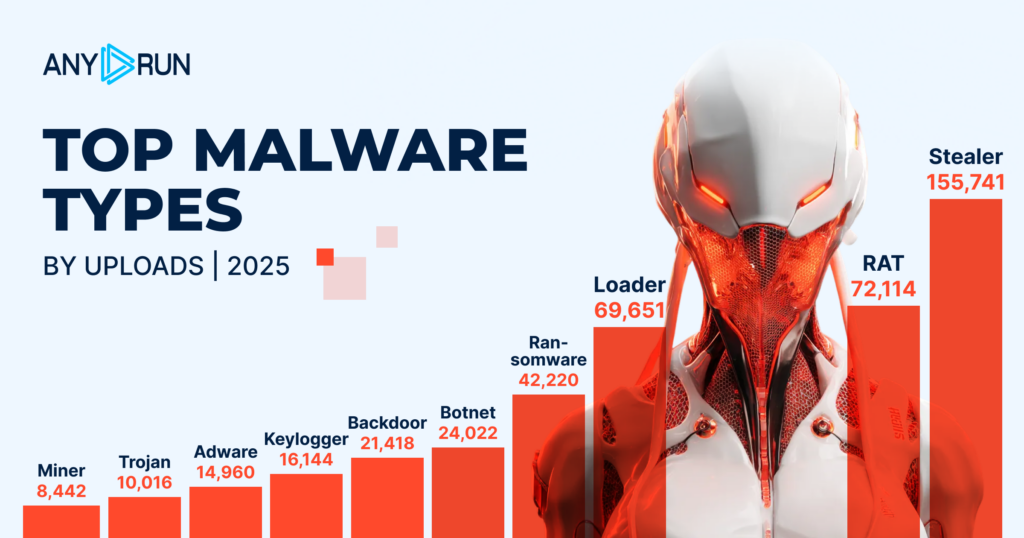

The top of the most active malware types chart closely resembles that of 2024. The four most detected types remained unchanged, underscoring their long-term impact and growing activity: Stealer and RAT (activity up 3x), Loader (2.5x), and Ransomware (2x).

Other types have grown notably, too, with particularly dramatic increases in Backdoor and Adware attacks. This points to an ongoing shift toward persistent access, credential theft, and multi-stage malware campaigns rather than short-lived attacks.

A new entry on the list is Botnet, which took fifth place with 21K+ detections.

From 2024 to 2025, most recurring malware families at least doubled in activity, as indicated by ANY.RUN’s statistics.

XWorm, which led the ranking in 2024, was detected 4.3x more often in 2025. Despite this sharp growth, it dropped one place, giving way to Lumma, this year’s leader, which grew from 12K to 31K+ detections.

Third and fourth places are taken by AsyncRAT and Remcos: both doubled in activity and were detected roughly 16K times.

A notable 3x growth in activity is seen in Snake threats, which occupied sixth place with 13,556 total detections.

Quasar and Vidar families newly entered the top list, signaling renewed RAT and stealer diversification.

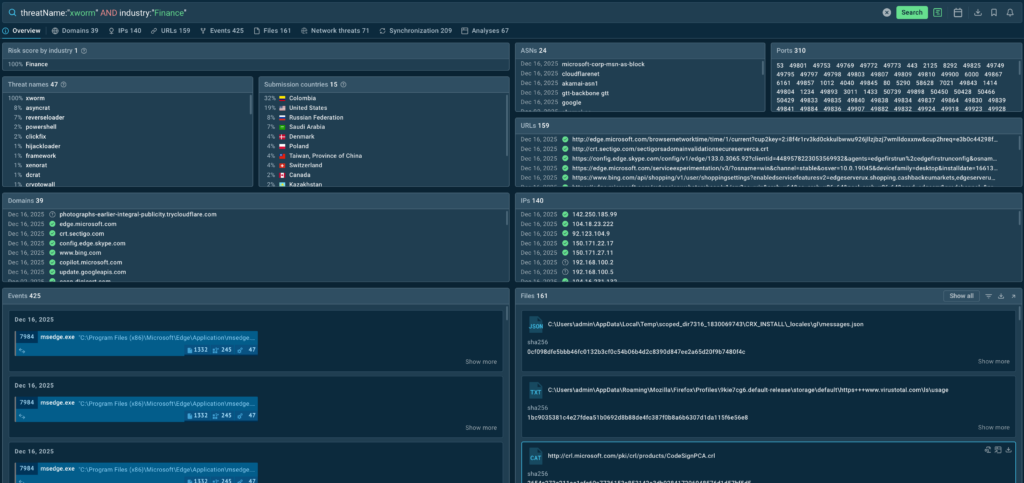

You can browse Threat Intelligence Lookup for further insights into threats relevant to your country or industry, using queries such as:

threatName:"xworm" AND industry:"Finance"

SOC teams can use insights from this searchable database of IOCs, IOAs, and IOBs to:

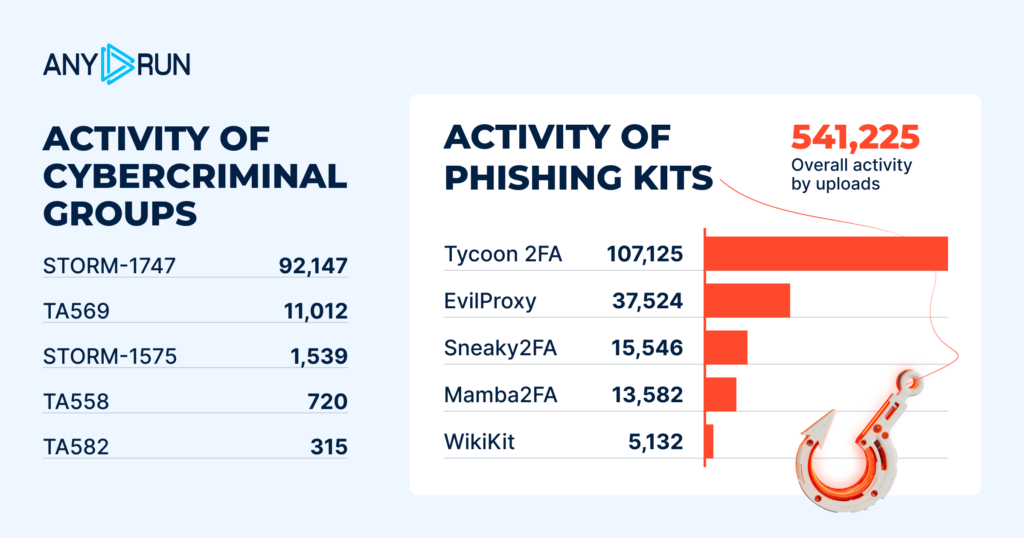

| Actor | Total Detections |
|---|---|
| Storm-1747 | 92,147 |
| TA569 | 11,012 |
| Storm-1575 | 1,539 |
| TA558 | 720 |
| TA582 | 315 |

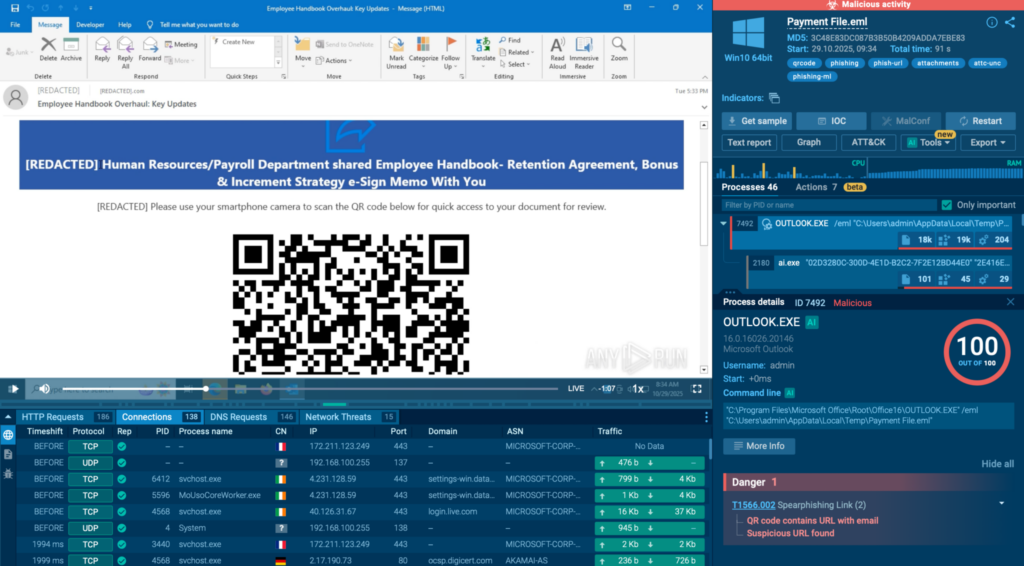

Phishing remained a key method for initial infection and credential harvesting throughout 2025. In ANY.RUN’s Interactive Sandbox, phishing-related activity was detected 541,225 times.

The sustained dominance of Storm-1747 and TA569 over the months shows how far these groups outpace the rest of the threat landscape, allowing them to account for a disproportionately large share of phishing operations.

Storm-1575 rounds out the year’s top three with significantly fewer detections than the two leaders, emphasizing the gap between the leading actors and other groups.

| Kit | Total Detections |
|---|---|
| Tycoon2FA | 107,125 |
| EvilProxy | 37,524 |
| Sneaky2FA | 15,546 |
| Mamba2FA | 13,582 |
| WikiKit | 5,132 |

Tycoon2FA and EvilProxy were the most detected phishing kits throughout the year, with 107,125 and 37,524 total detections respectively, underscoring the clear dominance of phishing-as-a-service (PhaaS) platforms capable of bypassing multi-factor authentication at scale.

Third place went to Sneaky2FA, another kit that showed steady quarter-over-quarter growth, reflecting a focus on session hijacking and real-time credential interception.

Mamba2FA and WikiKit round out 2025’s top five phishing kits, with roughly 13.6K and 5.1K total detections respectively.

These figures show that phishing has evolved into a large-scale threat built around MFA abuse, modular tooling, and reusable infrastructure.

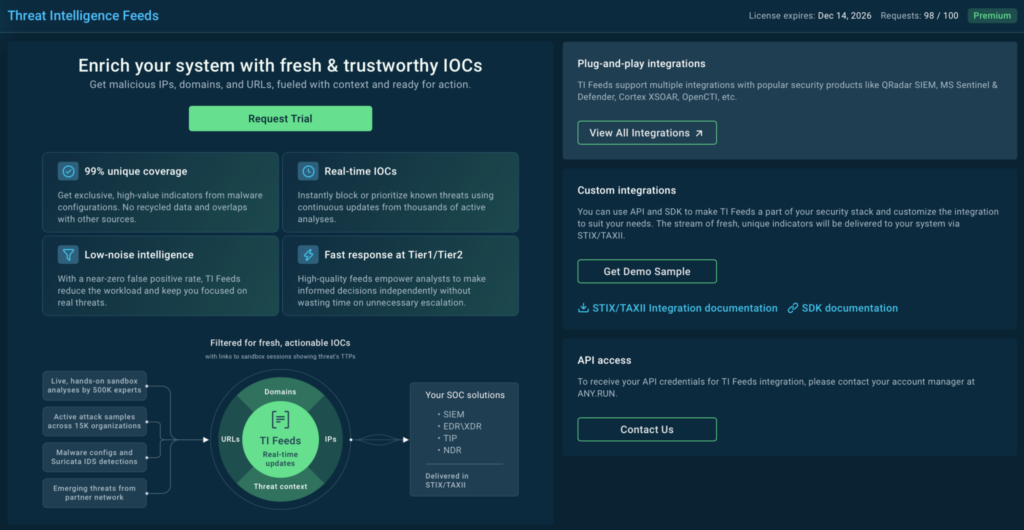

Threat Intelligence Feeds, which deliver 99% unique threat data directly into your SIEM and other security solutions, help ensure early detection of phishing threats like Tycoon2FA, EvilProxy, and more.

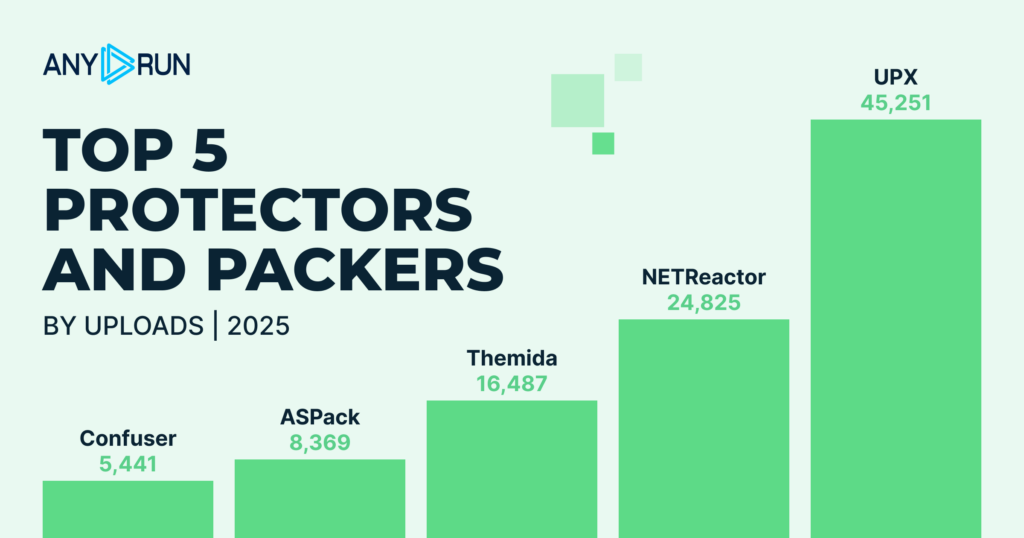

| Packer | Total Detections |
|---|---|
| UPX | 45,251 |
| NETReactor | 24,825 |
| Themida | 16,487 |
| ASPack | 8,369 |
| Confuser | 5,441 |

The list of top protectors and packers used by attackers during 2025 remained mostly stable throughout the year, reflecting continued reliance on established obfuscation tools.

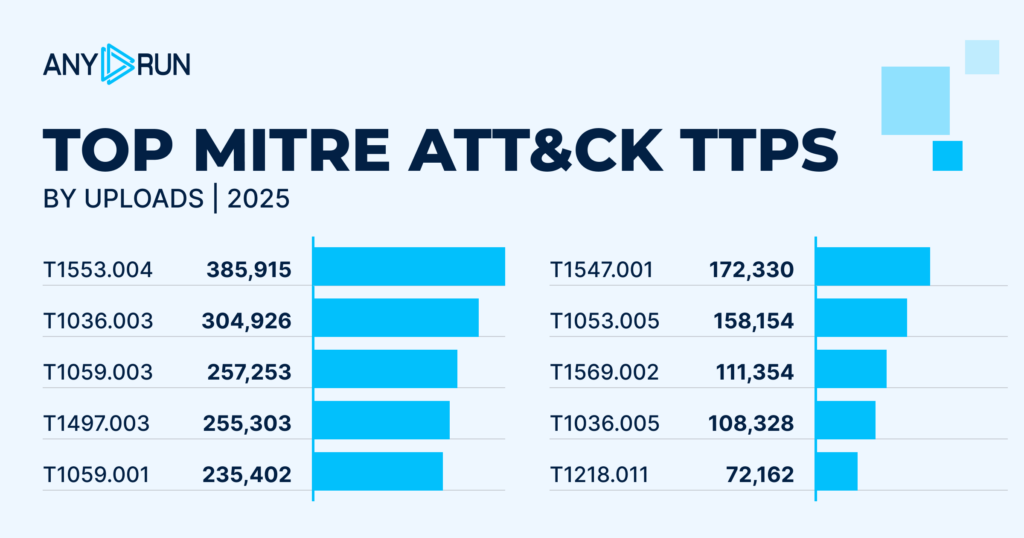

| Rank | TTP ID | Name | Total Detections |
|---|---|---|---|
| 1 | T1553.004 | Subvert Trust Controls: Install Root Certificate | 385,915 |
| 2 | T1036.003 | Masquerading: Rename Legitimate Utilities | 304,926 |
| 3 | T1059.003 | Command and Scripting Interpreter: Windows Command Shell | 257,253 |
| 4 | T1497.003 | Virtualization/Sandbox Evasion: Time Based Checks | 255,303 |
| 5 | T1059.001 | Command and Scripting Interpreter: PowerShell | 235,402 |
| 6 | T1547.001 | Boot or Logon Autostart Execution: Registry Run Keys / Startup Folder | 172,330 |
| 7 | T1053.005 | Scheduled Task/Job: Scheduled Task | 158,154 |
| 8 | T1569.002 | System Services: Service Execution | 111,354 |
| 9 | T1036.005 | Masquerading: Match Legitimate Name or Location | 108,328 |
| 10 | T1218.011 | System Binary Proxy Execution: Rundll32 | 72,162 |

The new 2025 leader among widespread TTPs is T1553.004 – Subvert Trust Controls: Install Root Certificate, with 385K+ detections. This technique did not appear on the list the year before, signaling a shift toward TLS interception, traffic inspection, and deep trust abuse.

Second place is taken by T1036.003 – Masquerading: Rename Legitimate Utilities. This TTP moved two places up with a 2.4x growth in total detections.

Other recurring TTPs, such as T1059.003 – Command and Scripting Interpreter: Windows Command Shell and T1497.003 – Virtualization/Sandbox Evasion: Time Based Checks, also increased sharply in activity, confirming a rise in evasive behavior and in the use of reliable execution methods, especially in phishing-delivered malware.

Understanding what happened is the first step to knowing what to do next. This report is built on threat intelligence gathered from millions of real investigations conducted by 15,000+ SOC teams worldwide throughout 2025. For actionable insights, high-quality threat data, and in-depth, dynamic analysis available in your security system 24/7, integrate ANY.RUN:

Overall, 2025 was marked by strong growth in investigation activity, increased malware sophistication, and a clear shift toward persistence, evasion, and trust abuse among threat actors, underscoring the need for continuous monitoring and proactive threat analysis.

ANY.RUN builds advanced solutions for malware analysis and threat hunting. Its interactive malware analysis sandbox is trusted by 600,000+ cybersecurity professionals worldwide, enabling hands-on investigation of threats targeting Windows, Linux, and Android environments with real-time behavioral visibility.

Threat Intelligence Lookup and Threat Intelligence Feeds help security teams quickly identify indicators of compromise, enrich alerts with context, and investigate incidents at early stages. This empowers analysts to gain actionable insights, uncover stealthy threats, and strengthen their overall security posture.


The Malware Trends Overview Report is ANY.RUN’s annual analysis of global malware activity in 2025, based on millions of sandbox investigations and billions of collected indicators.

The report is derived from activity in ANY.RUN’s Interactive Sandbox, reflecting real-world investigations conducted by security teams, researchers, and SOCs worldwide.

Stealers, RATs, and phishing campaigns—especially those using MFA-bypassing phishing kits—were the most prevalent and impactful threats.

Phishing evolved into a scalable access mechanism in 2025, enabling attackers to bypass MFA, harvest sessions, and gain persistent access to corporate environments.

Attackers increasingly relied on stealth, persistence, and trust abuse, including masquerading, sandbox evasion, and root certificate installation.

Enterprises should prioritize behavioral detection, continuous monitoring, and fresh threat intelligence to detect evasive and persistent threats early.

ANY.RUN’s Interactive Sandbox and threat intelligence solutions enable hands-on analysis, early detection, and faster response to modern, evasive attacks.

The post Malware Trends Overview Report: 2025 appeared first on ANY.RUN’s Cybersecurity Blog.

ANY.RUN’s Cybersecurity Blog – Read More

Millions of IT systems — some of them industrial and IoT — may start behaving unpredictably on January 19. Potential failures include: glitches in processing card payments; false alarms from security systems; incorrect operation of medical equipment; failures in automated lighting, heating, and water supply systems; and many more less serious types of errors. The catch is — it will happen on January 19, 2038. Not that that’s a reason to relax — the time left to prepare may already be insufficient. The cause of this mass of problems will be an overflow in the integers storing date and time. While the root cause of the error is simple and clear, fixing it will require extensive and systematic efforts on every level — from governments and international bodies and down to organizations and private individuals.

The Unix epoch is the timekeeping system adopted by Unix operating systems, which became popular across the entire IT industry. It counts the seconds from 00:00:00 UTC on January 1, 1970, which is considered the zero point. Any given moment in time is represented as the number of seconds that have passed since that date. For dates before 1970, negative values are used. This approach was chosen by Unix developers for its simplicity — instead of storing the year, month, day, and time separately, only a single number is needed. This facilitates operations like sorting or calculating the interval between dates. Today, the Unix epoch is used far beyond Unix systems: in databases, programming languages, network protocols, and in smartphones running iOS and Android.

Initially, when Unix was developed, a decision was made to store time as a 32-bit signed integer. This allowed for representing a date range from roughly 1901 to 2038. The problem is that on January 19, 2038, at 03:14:07 UTC, this number will reach its maximum value (2,147,483,647 seconds) and overflow, becoming negative, and causing computers to “teleport” from January 2038 back to December 13, 1901. In some cases, however, shorter “time travel” might happen — to point zero, which is the year 1970.
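The rollover can be simulated in a few lines. This is a sketch, not code from any affected system: Python integers never overflow, so the hypothetical helper `wrap_int32` reproduces what a two's-complement signed 32-bit counter does when it is incremented past its maximum, and dates are built by adding a `timedelta` to the epoch so that negative values work on every OS.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1  # 2,147,483,647

def wrap_int32(n: int) -> int:
    """Reinterpret n as a two's-complement signed 32-bit integer."""
    return (n + 2**31) % 2**32 - 2**31

def from_unix(seconds: int) -> datetime:
    # Arithmetic conversion, independent of the platform's time_t
    return EPOCH + timedelta(seconds=seconds)

last_ok = from_unix(INT32_MAX)
one_tick_later = from_unix(wrap_int32(INT32_MAX + 1))

print(last_ok)         # 2038-01-19 03:14:07+00:00
print(one_tick_later)  # 1901-12-13 20:45:52+00:00
```

One second after the maximum, the counter becomes -2,147,483,648, which decodes to December 13, 1901.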

This event, known as the “year 2038 problem”, “Epochalypse”, or “Y2K38”, could lead to failures in systems that still use 32-bit time representation — from POS terminals, embedded systems, and routers, to automobiles and industrial equipment. Modern systems solve this problem by using 64 bits to store time. This extends the date range to hundreds of billions of years into the future. However, millions of devices with 32-bit dates are still in operation, and will require updating or replacement before “day Y” arrives.
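A rough calculation shows why moving from 32 to 64 bits makes the problem disappear for practical purposes. The sketch below assumes a signed counter of whole seconds and uses the mean Gregorian year (365.2425 days) as an approximation; the function name is illustrative.

```python
SECONDS_PER_YEAR = 365.2425 * 86400  # mean Gregorian year, in seconds

def years_after_1970(bits: int) -> float:
    """Approximate span after the epoch that a signed counter of this width covers."""
    max_seconds = 2 ** (bits - 1) - 1
    return max_seconds / SECONDS_PER_YEAR

print(round(years_after_1970(32)))        # 68 (so the limit falls in 2038)
print(round(years_after_1970(64) / 1e9))  # 292 (billion years)
```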

In this context, 32 and 64 bits refer specifically to the date storage format. Just because an operating system or processor is 32-bit or 64-bit, it doesn’t automatically mean it stores the date in its “native” bit format. Furthermore, many applications store dates in completely different ways, and might be immune to the Y2K38 problem, regardless of their bitness.

In cases where there’s no need to handle dates before 1970, the date is stored as an unsigned 32-bit integer. This type of number can represent dates from 1970 to 2106, so the problem will arrive in the more distant future.
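The 2106 limit for unsigned storage can be checked the same way as the 2038 one. In this minimal sketch (constant and helper names are ours), both maxima are converted to UTC dates arithmetically:

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1   # last second for a signed 32-bit counter
UINT32_MAX = 2**32 - 1  # last second for an unsigned 32-bit counter

def from_unix(seconds: int) -> datetime:
    """Convert epoch seconds to an aware UTC datetime without calling the OS."""
    return EPOCH + timedelta(seconds=seconds)

print(from_unix(INT32_MAX))   # 2038-01-19 03:14:07+00:00
print(from_unix(UINT32_MAX))  # 2106-02-07 06:28:15+00:00
```

The trade-off is that an unsigned field spends the bit that would otherwise encode pre-1970 dates on extending the range forward, which is why this option only suits data that never needs to go back before 1970.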

The infamous year 2000 problem (Y2K) from the late 20th century was similar in that systems storing the year as two digits could mistake the new date for the year 1900. Both experts and the media feared a digital apocalypse, but in the end there were just numerous isolated manifestations that didn’t lead to global catastrophic failures.

The key difference between Y2K38 and Y2K is the scale of digitization in our lives. The number of systems that will need updating is way higher than the number of computers in the 20th century, and the count of daily tasks and processes managed by computers is beyond calculation. Meanwhile, the Y2K38 problem has already been, or will soon be, fixed in regular computers and operating systems with simple software updates. However, the microcomputers that manage air conditioners, elevators, pumps, door locks, and factory assembly lines could very well chug along for the next decade with outdated, Y2K38-vulnerable software versions.

The date’s rolling over to 1901 or 1970 will impact different systems in different ways. In some cases, like a lighting system programmed to turn on every day at 7pm, it might go completely unnoticed. In other systems that rely on complete and accurate timestamps, a full failure could occur — for example, in the year 2000, payment terminals and public transport turnstiles stopped working. Comical cases are also possible, like issuing a birth certificate with a date in 1901. Far worse would be the failure of critical systems, such as a complete shutdown of a heating system, or the failure of a bone marrow analysis system in a hospital.

Cryptography holds a special place in the Epochalypse. Another crucial difference between 2038 and 2000 is the ubiquitous use of encryption and digital signatures to protect all communications. Security certificates generally fail verification if the device’s date is incorrect. This means a vulnerable device would be cut off from most communications — even if its core business applications don’t have any code that incorrectly handles the date.
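The simplified illustration below is not how any particular TLS library is implemented. It models only the validity-window comparison (the certificate's notBefore/notAfter period) that verification includes, using hypothetical certificate dates. A device whose clock has wrapped to 1901 rejects a certificate that a correctly clocked device accepts, even though nothing is wrong with the certificate itself.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def wrap_int32(n: int) -> int:
    """Reinterpret n as a two's-complement signed 32-bit integer."""
    return (n + 2**31) % 2**32 - 2**31

def cert_time_valid(now: datetime, not_before: datetime, not_after: datetime) -> bool:
    """Simplified validity-period check: time window only, no signature checks."""
    return not_before <= now <= not_after

# Hypothetical certificate whose lifetime spans the 2038 rollover
not_before = datetime(2037, 6, 1, tzinfo=timezone.utc)
not_after = datetime(2039, 6, 1, tzinfo=timezone.utc)

true_now = 2**31 + 3600  # one hour after the signed 32-bit limit
correct_clock = EPOCH + timedelta(seconds=true_now)
wrapped_clock = EPOCH + timedelta(seconds=wrap_int32(true_now))

print(cert_time_valid(correct_clock, not_before, not_after))  # True
print(cert_time_valid(wrapped_clock, not_before, not_after))  # False
```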

Unfortunately, the full spectrum of consequences can only be determined through controlled testing of all systems, with separate analysis of a potential cascade of failures.
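Controlled testing usually comes down to feeding values at and just past the boundaries into each component and checking that they survive. The sketch below assumes two hypothetical storage layers, one that truncates to a signed 32-bit field and one that keeps the full value. It is an illustration of the boundary-testing idea, not a test suite for any real product.

```python
# Boundary timestamps worth exercising in a controlled test
BOUNDARIES = [2**31 - 1, 2**31, 2**31 + 86400, 2**32]

def store_as_int32(seconds: int) -> int:
    """Hypothetical legacy storage: truncates to a signed 32-bit field."""
    return (seconds + 2**31) % 2**32 - 2**31

def store_as_int64(seconds: int) -> int:
    """Hypothetical fixed storage: keeps the full value."""
    return seconds

def failing_boundaries(store) -> list[int]:
    """Return the boundary values that do not survive a round trip."""
    return [t for t in BOUNDARIES if store(t) != t]

print(failing_boundaries(store_as_int32))  # [2147483648, 2147570048, 4294967296]
print(failing_boundaries(store_as_int64))  # []
```

In a real test, `store` would be replaced by a round trip through the actual component (a database write and read, a file timestamp, a protocol message), run on isolated test equipment rather than production systems.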

IT and InfoSec teams should treat Y2K38 not as a simple software bug, but as a vulnerability that can lead to various failures, including denial of service. In some cases, it can even be exploited by malicious actors. To do this, they need the ability to manipulate the time on the targeted system. This is possible in at least two scenarios:

Exploitation of this error is most likely in OT and IoT systems, where vulnerabilities are traditionally slow to be patched, and the consequences of a failure can be far more substantial.

An example of an easily exploitable vulnerability related to time counting is CVE-2025-55068 (CVSSv3 8.2, CVSSv4 base 8.8) in Dover ProGauge MagLink LX4 automatic fuel-tank gauge consoles. Time manipulation can cause a denial of service at the gas station, and block access to the device’s web management panel. This defect earned its own CISA advisory.

The foundation for solving the Y2K38 problem has been successfully laid in major operating systems. The Linux kernel added support for 64-bit time even on 32-bit architectures starting with version 5.6 in 2020, and 64-bit Linux was always protected from this issue. The BSD family, macOS, and iOS use 64-bit time on all modern devices. No version of Windows released in the 21st century is susceptible to Y2K38.

The situation at the data storage and application level is far more complex. Modern file systems like ZFS, F2FS, NTFS, and ReFS were designed with 64-bit timestamps, while older systems like ext2 and ext3 remain vulnerable. Ext4 and XFS require specific flags to be enabled (extended inode for ext4, and bigtime for XFS), and might need offline conversion of existing filesystems. In the NFSv2 and NFSv3 protocols, the outdated time storage format persists. It’s a similar patchwork landscape in databases: the TIMESTAMP type in MySQL is fundamentally limited to the year 2038, and requires migration to DATETIME, while the standard timestamp types in PostgreSQL are safe. For applications written in C, pathways have been created to use 64-bit time on 32-bit architectures, but all projects require recompilation. Languages like Java, Python, and Go typically use types that avoid the overflow, but the safety of compiled projects depends on whether they interact with vulnerable libraries written in C.
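MySQL documents the TIMESTAMP range as '1970-01-01 00:00:01' UTC to '2038-01-19 03:14:07' UTC, while DATETIME covers '1000-01-01 00:00:00' to '9999-12-31 23:59:59'. Until a column is migrated, an application could reject out-of-range values before writing them. The check below is a hypothetical pre-write guard (the function name and example dates are ours), sketched with the standard library only; it expects timezone-aware datetimes.

```python
from datetime import datetime, timezone

# Documented MySQL TIMESTAMP range, expressed in UTC
TIMESTAMP_MIN = datetime(1970, 1, 1, 0, 0, 1, tzinfo=timezone.utc)
TIMESTAMP_MAX = datetime(2038, 1, 19, 3, 14, 7, tzinfo=timezone.utc)

def fits_mysql_timestamp(value: datetime) -> bool:
    """True if an aware datetime falls inside MySQL's TIMESTAMP range."""
    return TIMESTAMP_MIN <= value.astimezone(timezone.utc) <= TIMESTAMP_MAX

contract_end = datetime(2040, 1, 1, tzinfo=timezone.utc)  # e.g. a long-lived expiry date
print(fits_mysql_timestamp(contract_end))                               # False: needs DATETIME
print(fits_mysql_timestamp(datetime(2030, 1, 1, tzinfo=timezone.utc)))  # True
```

Such a guard only surfaces the problem early; the durable fix remains migrating the column to DATETIME as described above.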

A massive number of 32-bit systems, embedded devices, and applications remain vulnerable until they’re rebuilt and tested, and then have updates installed by all their users.

Various organizations and enthusiasts are trying to systematize information on this, but their efforts are fragmented. Consequently, there’s no “common Y2K38 vulnerability database” out there (1, 2, 3, 4, 5).

The methodologies created for prioritizing and fixing vulnerabilities are directly applicable to the year 2038 problem. The key challenge will be that no tool today can create an exhaustive list of vulnerable software and hardware. Therefore, it’s essential to update the inventory of corporate IT assets, ensure that the inventory is enriched with detailed information on firmware and installed software, and then systematically investigate the vulnerability question.

The list can be prioritized based on the criticality of business systems and the data on the technology stack each system is built on. The next steps are: studying the vendor’s support portal, making direct inquiries to hardware and software manufacturers about their Y2K38 status, and, as a last resort, verification through testing.
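As a rough illustration of that prioritization step, the sketch below sorts open inventory items by business criticality first and by known Y2K38 status second. The `Asset` fields, the 1 to 5 criticality scale, the status labels, and the sample entries are all hypothetical; a real inventory would carry far more detail, such as firmware versions and vendor contacts.

```python
from dataclasses import dataclass

@dataclass
class Asset:
    name: str
    criticality: int   # hypothetical scale: 1 (low) to 5 (business-critical)
    y2k38_status: str  # "vulnerable", "unknown", or "fixed"

# Unknown ranks just below confirmed vulnerable: it still needs vendor inquiries or testing
STATUS_WEIGHT = {"vulnerable": 2, "unknown": 1, "fixed": 0}

def triage(assets: list[Asset]) -> list[Asset]:
    """Order unresolved assets by criticality, then by Y2K38 status."""
    open_items = [a for a in assets if a.y2k38_status != "fixed"]
    return sorted(
        open_items,
        key=lambda a: (a.criticality, STATUS_WEIGHT[a.y2k38_status]),
        reverse=True,
    )

inventory = [
    Asset("HVAC controller", 3, "unknown"),
    Asset("Payment terminal fleet", 5, "vulnerable"),
    Asset("HR web app", 2, "fixed"),
    Asset("Water pump PLC", 5, "unknown"),
]
for asset in triage(inventory):
    print(asset.name)
# Payment terminal fleet
# Water pump PLC
# HVAC controller
```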

When testing corporate systems, it’s critical to take special precautions:

If a system is found to be vulnerable to Y2K38, a fixing timeline should be requested from the vendor. If a fix is impossible, plan a migration; fortunately, the time we have left still allows for updating even fairly complex and expensive systems.

The most important thing in tackling Y2K38 is not to think of it as a distant future problem whose solution can easily wait another five to eight years. It’s highly likely that we already have insufficient time to completely eradicate the defect. However, within an organization and its technology fleet, careful planning and a systematic approach to solving the problem will make it possible to finish in time.

Kaspersky official blog – Read More

The business social networking site is a vast, publicly accessible database of corporate information. Don’t believe everyone on the site is who they say they are.

WeLiveSecurity – Read More

Brand, website, and corporate mailout impersonation is becoming an increasingly common technique used by cybercriminals. The World Intellectual Property Organization (WIPO) reported a spike in such incidents in 2025. While tech companies and consumer brands are the most frequent targets, every industry in every country is generally at risk. The only thing that changes is how the imposters exploit the fakes. In practice, we typically see the following attack scenarios:

The words “luring” and “prompting” here imply a whole toolbox of tactics: email, messages in chat apps, social media posts that look like official ads, lookalike websites promoted through SEO tools, and even paid ads.

These schemes all share two common features. First, the attackers exploit the organization’s brand, and strive to mimic its official website, domain name, and corporate style of emails, ads, and social media posts. The forgery doesn’t have to be flawless; it only has to be convincing enough for at least some business partners and customers. Second, while the organization and its online resources aren’t targeted directly, the impact on them is still significant.

When fakes are crafted to target employees, an attack can lead to direct financial loss. An employee might be persuaded to transfer company funds, or their credentials could be used to steal confidential information or launch a ransomware attack.

Attacks on customers don’t typically imply direct damage to the company’s coffers, but they cause substantial indirect harm in the following areas:

Popular cyber-risk insurance policies typically only cover costs directly tied to incidents explicitly defined in the policy — think data loss, business interruption, IT system compromise, and the like. Fake domains and web pages don’t directly damage a company’s IT systems, so they’re usually not covered by standard insurance. Reputational losses and the act of impersonation itself are separate insurance risks, requiring expanded coverage for this scenario specifically.

Of the indirect losses we’ve listed above, standard insurance might cover DFIR expenses and, in some cases, extra customer support costs (if the situation is recognized as an insured event). Voluntary customer reimbursements, lost sales, and reputational damage are almost certainly not covered.

If you find out someone is using your brand’s name for fraud, it makes sense to do the following:

While the open nature of the internet and the specifics of these attacks make preventing them outright impossible, a business can stay on top of new fakes and have the tools ready to fight back.

Kaspersky official blog – Read More


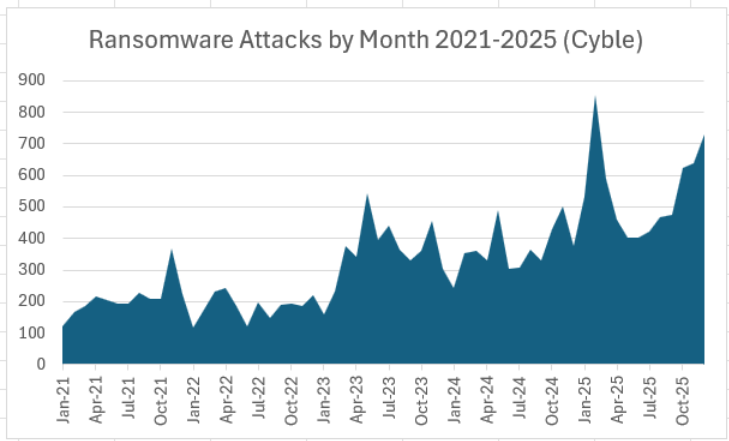

Ransomware and supply chain attacks soared in 2025, and persistently elevated attack levels suggest that the global threat landscape will remain perilous heading into 2026.

Cyble recorded 6,604 ransomware attacks in 2025, up 52% from the 4,346 attacks claimed by ransomware groups in 2024. The year ended with a near-record 731 ransomware attacks in December, second only to February 2025’s record totals (chart below).

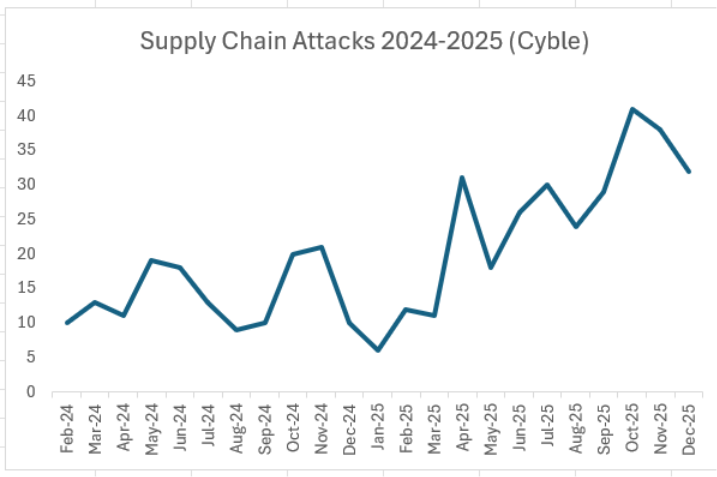

Supply chain attacks nearly doubled in 2025, as Cyble dark web researchers recorded 297 supply chain attacks claimed by threat groups in 2025, up 93% from 154 such events in 2024 (chart below). As ransomware groups are consistently behind more than half of supply chain attacks, the two attack types have become increasingly linked.

While supply chain attacks have declined in the two months since October’s record, they remain above even the elevated trend that began in April 2025.
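The year-over-year growth rates cited above follow directly from the raw counts. As a quick sanity check, this sketch uses only the figures quoted in this post (2024 versus 2025) and recomputes the percentage changes:

```python
# Recompute the year-over-year changes from the counts quoted in the text.

def pct_change(old: int, new: int) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


counts = {
    "ransomware attacks": (4_346, 6_604),
    "supply chain attacks": (154, 297),
}

if __name__ == "__main__":
    for label, (y2024, y2025) in counts.items():
        print(f"{label}: {y2024:,} -> {y2025:,} ({pct_change(y2024, y2025):+.0f}%)")
    # ransomware attacks: 4,346 -> 6,604 (+52%)
    # supply chain attacks: 154 -> 297 (+93%)
```

A 93% increase corresponds to a ratio of about 1.93, which is why supply chain attacks are described as having "nearly doubled".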

We’ll take a deeper look at ransomware and supply chain attack data, including targeted sectors and regions, attack trends, and leading threat actors. Some of the data and insights come from Cyble’s new Annual Threat Landscape Report covering cybercrime, ransomware, vulnerabilities, and other 2025-2026 cyber threat trends.

Qilin emerged as the leading ransomware group in April after RansomHub went offline amid possible sabotage by rival Dragonforce. Qilin has remained on top in every month but one since, and was once again the top ransomware group in December with 190 claimed victims (December chart below).

December was also noteworthy for the long-awaited resurgence of Lockbit and the continued emergence of Sinobi.

For full-year 2025, Qilin dominated, claiming 17% of all ransomware victims (full-year chart below). Of the top five ransomware groups in 2025, only Akira and Play also made the top five in 2024, as RansomHub, Lockbit and Hunters all fell from the top five. Lockbit was hampered by repeated law enforcement actions, while Hunters announced it was shutting down in mid-2025.

Cyble documented 57 new ransomware groups and 27 new extortion groups in 2025, including emerging leaders like Sinobi and The Gentlemen. Over 350 new ransomware strains were discovered in 2025, largely based on the MedusaLocker, Chaos, and Makop ransomware families.

Among newly emerged ransomware groups, Cyble observed heightened attacks on critical infrastructure industries (CII), especially in Government & LEA and Energy & Utilities, by groups such as Devman, Sinobi, Warlock, and Gunra. Several newly emerged groups targeted the software supply chain, among them RALord/Nova, Warlock, Sinobi, The Gentlemen, and BlackNevas, with a particular focus on the IT & ITES, Technology, and Transportation & Logistics sectors.

Cl0p’s campaign exploiting an Oracle E-Business Suite vulnerability had a supply chain impact on more than 118 entities globally, including some in the IT & ITES sector. Six of these victims were in critical infrastructure industries (CII). The Fog ransomware group also leaked source code from the GitLab repositories of several IT companies.

The U.S. remains by far the most frequent target of ransomware groups, accounting for 55% of ransomware attacks in 2025 (chart below). Canada, Germany, the UK, Italy, and France were also consistent targets for ransomware groups.

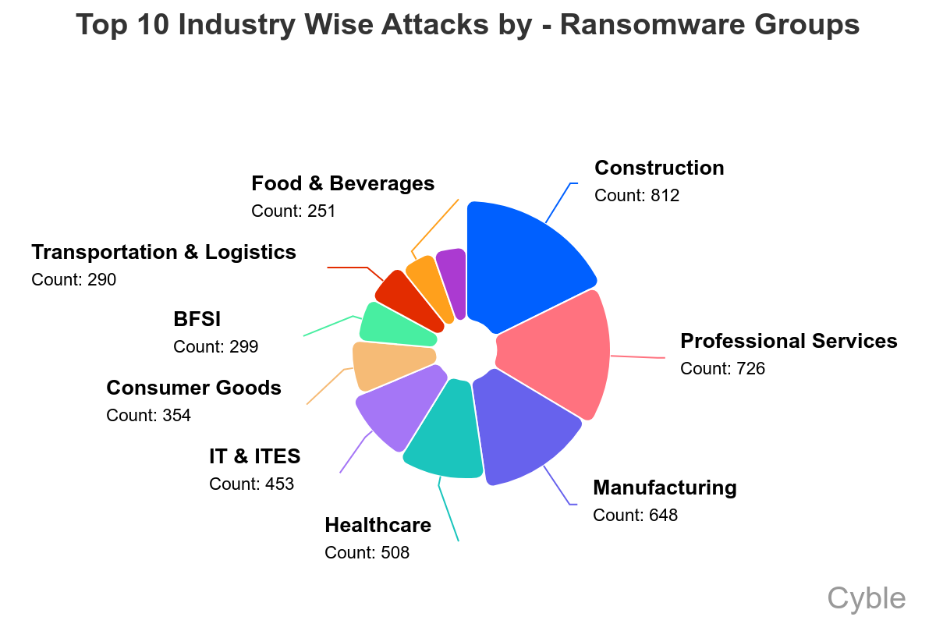

Construction, professional services, and manufacturing were consistently the sectors most targeted by ransomware groups, with healthcare and IT rounding out the top five (chart below).

Every sector tracked by Cyble was hit by a software supply chain attack in 2025 (chart below), but the IT and Technology sectors, as rich targets with large downstream customer bases, were by far the most frequently targeted, accounting for more than a third of supply chain attacks.

Supply chain intrusions in 2025 expanded far beyond traditional package poisoning, targeting cloud integrations, SaaS trust relationships, and vendor distribution pipelines.

Adversaries are increasingly abusing upstream services—such as identity providers, package registries, and software delivery channels—to compromise downstream environments on a large scale.

A few examples highlighting the evolving third-party risk landscape include:

Attacks targeting Salesforce data via third-party integrations did not modify code; instead, they weaponized trust between SaaS platforms, illustrating how OAuth-based integrations can become high-impact supply chain vulnerabilities when third-party tokens have been compromised.
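To make the OAuth risk above more concrete, here is a hypothetical sketch of one defensive check: reviewing third-party integration grants for broad scopes and long-idle tokens, since either one raises the impact of a stolen token. The `Grant` record, the scope names (modeled loosely on common OAuth scope strings), and the 90-day idle threshold are all assumptions for illustration, not any platform’s API; in practice the grant list would come from the SaaS platform’s own admin tooling.

```python
# Hypothetical audit of third-party OAuth grants. The Grant record, scope
# names, and idle threshold are illustrative assumptions, not a real API.
from dataclasses import dataclass
from datetime import date, timedelta

# Scopes treated (by assumption) as high impact if a third-party token leaks.
HIGH_RISK_SCOPES = {"full", "api", "refresh_token"}


@dataclass
class Grant:
    app_name: str
    scopes: set[str]
    last_used: date


def risky_grants(grants: list[Grant], today: date,
                 max_idle_days: int = 90) -> list[tuple[str, list[str]]]:
    """Return (app_name, reasons) for grants with broad scopes or long idle time."""
    findings = []
    for g in grants:
        reasons = []
        broad = sorted(g.scopes & HIGH_RISK_SCOPES)
        if broad:
            reasons.append("broad scopes: " + ", ".join(broad))
        if today - g.last_used > timedelta(days=max_idle_days):
            reasons.append(f"idle > {max_idle_days} days")
        if reasons:
            findings.append((g.app_name, reasons))
    return findings


if __name__ == "__main__":
    sample = [
        Grant("chat-connector", {"api", "refresh_token"}, date(2025, 11, 30)),
        Grant("survey-widget", {"id"}, date(2025, 3, 1)),
        Grant("sso-profile", {"openid"}, date(2025, 12, 1)),
    ]
    for app, reasons in risky_grants(sample, today=date(2025, 12, 15)):
        print(app, "->", "; ".join(reasons))
    # chat-connector -> broad scopes: api, refresh_token
    # survey-widget -> idle > 90 days
```

Flagged grants are candidates for scope reduction or revocation; idle grants matter because a token nobody uses is also a token nobody notices being misused.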

The nation-state group Silk Typhoon intensified operations against IT and cloud service providers, exploiting VPN zero-days and misconfigured privileged access systems and conducting password-spraying attacks. After breaching upstream vendors such as MSPs, remote-management platforms, or PAM service providers, the group pivoted into customer environments via inherited admin credentials, compromised service principals, and high-privilege cloud API permissions.

A China-aligned APT group, PlushDaemon, compromised the distribution channel of a South Korean VPN vendor, replacing legitimate installers with a trojanized version bundling the SlowStepper backdoor. The malicious installer, delivered directly from the vendor’s website, installed both the VPN client and a modular surveillance framework supporting credential theft, keylogging, remote execution, and multimedia capture. By infiltrating trusted security software, the attackers gained persistent access to organizations relying on the VPN for secure remote connectivity, turning a defensive tool into an espionage vector.
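Integrity checks on downloaded installers are one control relevant to this kind of attack. The sketch below, a hypothetical illustration with placeholder arguments, computes a file’s SHA-256 digest and compares it with an expected value. Note the limitation: in the PlushDaemon case the trojanized installer was served from the vendor’s own website, so a checksum published on that same site could have been replaced as well. The expected digest has to come from a channel the attacker does not control, for example a value recorded when that software version was first vetted. Verifying the vendor’s code signature is a complementary control not shown here.

```python
# Minimal sketch: check an installer's SHA-256 digest against a value obtained
# through a channel independent of the download site. The command-line
# arguments are placeholders; this does not verify code signatures.
import hashlib
import sys


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large installers need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_installer(path: str, expected_hex: str) -> bool:
    """True only if the file's digest equals the independently obtained one."""
    return sha256_of(path) == expected_hex.strip().lower()


if __name__ == "__main__":
    # Usage: python verify_installer.py <installer-file> <expected-sha256-hex>
    installer, expected = sys.argv[1], sys.argv[2]
    if verify_installer(installer, expected):
        print("Digest matches the independently obtained value.")
    else:
        print("Digest MISMATCH: do not run this installer.")
        sys.exit(1)
```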

The significant supply chain and ransomware threats facing security teams as we enter 2026 require a renewed focus on cybersecurity best practices that can help protect against a wide range of cyber threats. These practices include:

Cyble’s comprehensive attack surface management solutions can help by scanning network and cloud assets for exposures and prioritizing fixes, in addition to monitoring for leaked credentials and other early warning signs of major cyberattacks. Additionally, Cyble’s third-party risk intelligence can help organizations carefully vet partners and suppliers, providing an early warning of potential risks.

The post Ransomware and Supply Chain Attacks Soared in 2025 appeared first on Cyble.

Cyble – Read More