Your item has sold! Avoiding scams targeting online sellers

- There are many risks associated with selling items on online marketplaces that individuals and organizations should be aware of when conducting business on these platforms.

- Many of the general recommendations related to the use of these platforms are tailored towards purchasing items; however, there are several threats to those selling items as well.

- Recent phishing campaigns targeting sellers on these marketplaces have leveraged the platforms’ direct messaging feature(s) to attempt to steal credit card details for sellers’ payout accounts.

- Shipment detail changes, pressure to conduct off-platform transactions, and attempted use of “friends and family” payment options are commonly encountered scam techniques, all of which seek to remove the seller protections usually afforded by these platforms.

- There are several steps that sellers can take to help protect themselves and their data from these threats. Being mindful of the common scams and threats targeting sellers can help them recognize, while it is happening, that they are being targeted by malicious buyers, so that they can take defensive action.

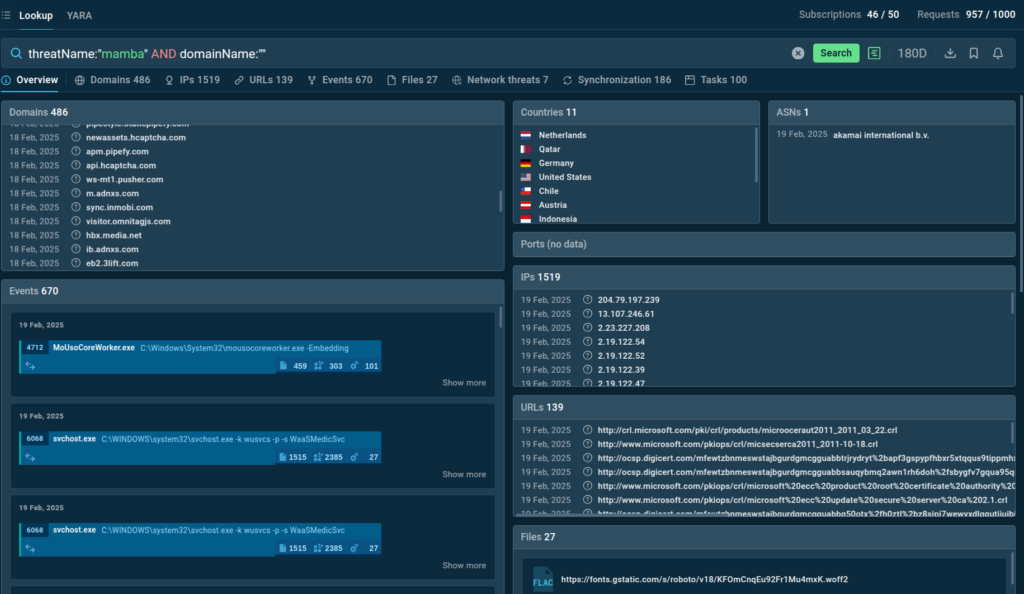

The emergence of online marketplaces has facilitated the convenient exchange of goods between individuals and organizations around the world. It has also provided a means for people to easily resell items, enabling them to recapture value from assets they may not otherwise wish to maintain ownership of. The type of new and used items sold via marketplaces varies widely, and platforms such as eBay, Facebook Marketplace, Reverb, and others are extremely popular avenues for selling everything from $15 vintage tissue boxes to $40,000 Gibson Les Paul guitars. You can even find $70,000,000 domains targeting affluent individuals with above-average BMIs being sold on online marketplaces.

When we think about online safety in the context of these platforms, we often concentrate the majority of our efforts and recommendations on the threats targeting buyers. In many cases, however, scammers are also actively targeting the people selling items on these platforms. From an adversarial perspective, it makes sense to target sellers, as they are likely to have large amounts of cash sitting in their accounts because they frequently receive payments for items they sell. Likewise, adversaries can easily monitor the public listings of seller accounts to identify when high-value items are listed and when they are sold, so that they can identify when a target could reasonably be expected to receive an influx of cash into their accounts.

In addition, many platforms train their sellers to expect frequent, unsolicited account verification prompts delivered in any number of ways, which provides the perfect pretext for scammers seeking to take advantage of this pool of targets. From a detection and analysis standpoint, these types of attacks are often difficult to combat effectively, as platform providers, intelligence analysts, and law enforcement agencies are forced to rely primarily on self-reporting from victims to quantify the scope and impact of these types of threats. Let’s break down a few examples of some of the most common scams that target the individuals or organizations selling products on online marketplaces.

Phishing and malware scams

While some scams attempt to fraudulently steal items, others are primarily aimed at stealing financial information from sellers by leveraging the platform’s messaging feature(s) as a mechanism to directly communicate with them for the purposes of phishing or malware distribution. In some cases, phishing is used to compromise the seller account itself, enabling the attacker to manipulate listings and shipments, modify automated payout settings, and communicate with current and previous buyers to conduct additional fraudulent activities. In other cases, phishing is used to obtain financial information, such as bank account or credit card information, that can be used to steal money from the seller.

Payout account verification scams

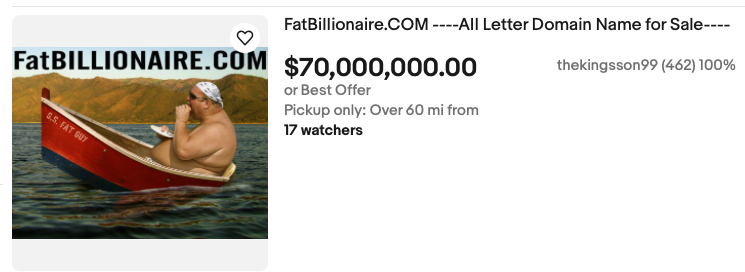

In a recent example, a /r/guitarpedals user reported that they had received a suspicious direct message on their Reverb seller account. The message was crafted to appear as if it had been sent by the Reverb team itself. It informed the seller that their item had sold and prompted them to complete account verification to ensure that they would receive payment for the item(s) they had sold.

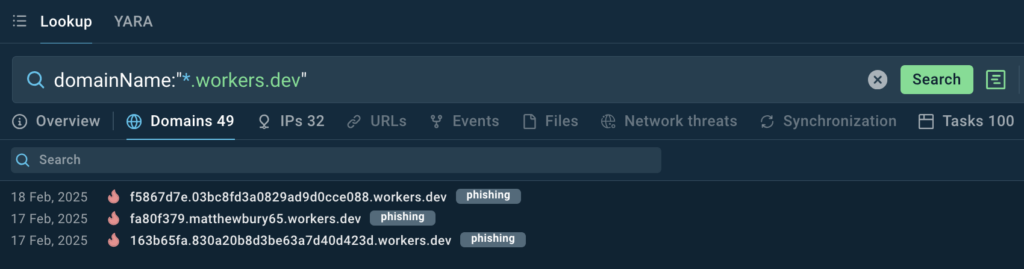

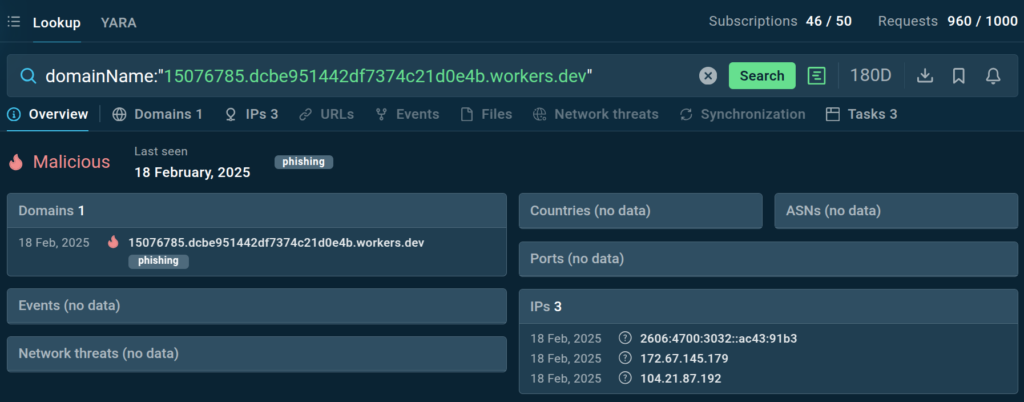

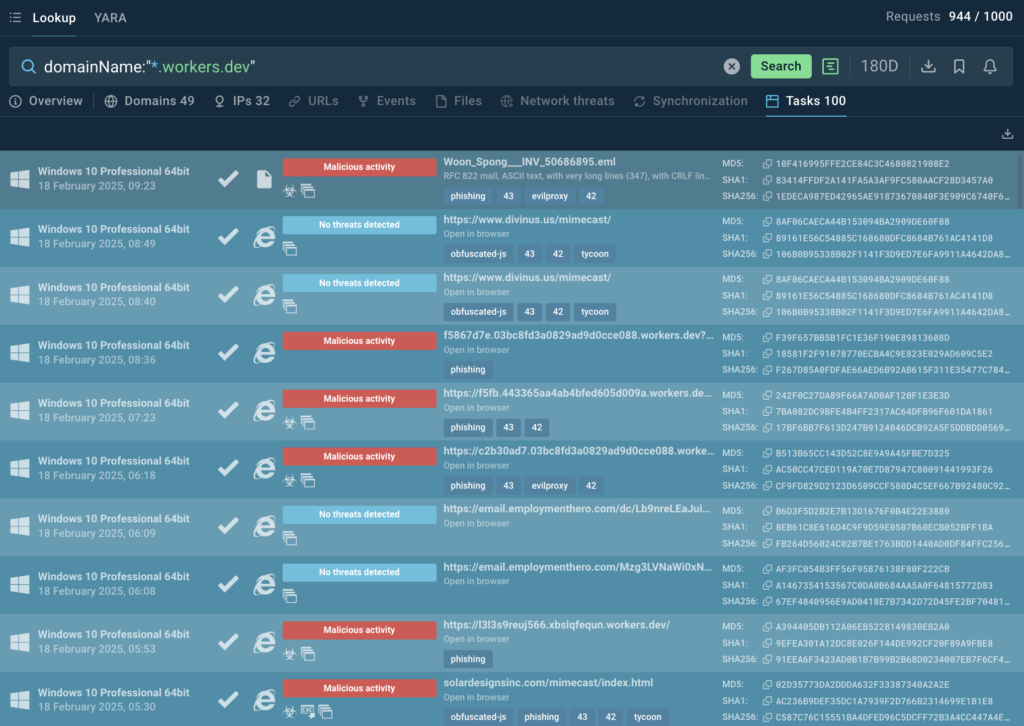

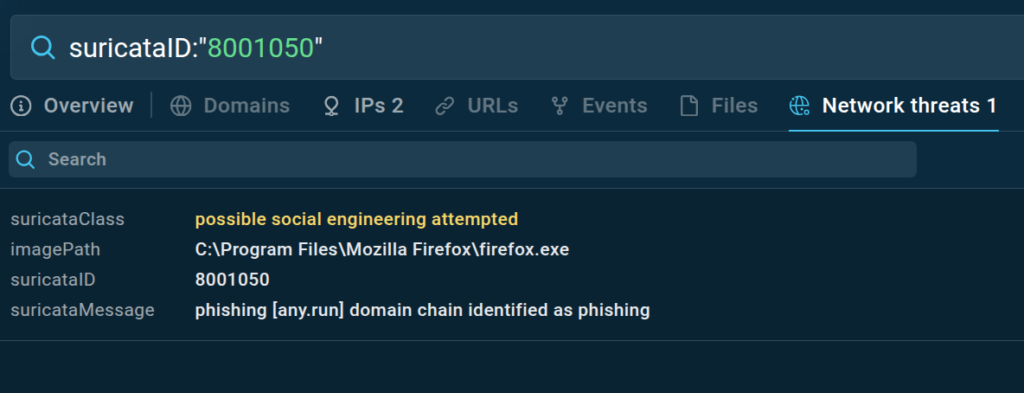

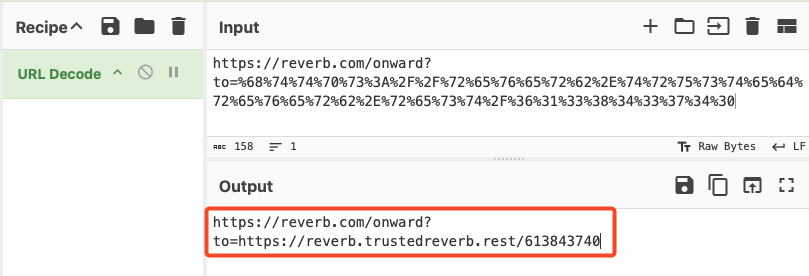

A hyperlink, present in the message (and shown below), attempts to leverage Reverb’s own redirection functionality to direct victims who click on the hyperlink to a malicious web server under the attacker’s control.

The link uses percent encoding (also known as URL encoding) as an obfuscation technique to mask the destination of the redirect, making it appear as if the link points to the legitimate Reverb website.
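To illustrate how percent encoding can hide a redirect target, here is a minimal Python sketch that pulls any embedded URL out of a link's query string. The link itself, the `marketplace.example` and `attacker.example` hosts, the `/redirect` path, and the `url` parameter name are all hypothetical stand-ins; they are not the values used in this campaign and do not describe Reverb's actual redirect format.

```python
from urllib.parse import parse_qsl, unquote, urlsplit


def hidden_destinations(link: str) -> list[str]:
    """Return absolute http(s) URLs carried inside the query string of `link`."""
    found = []
    # parse_qsl percent-decodes each parameter value once.
    for _name, value in parse_qsl(urlsplit(link).query, keep_blank_values=True):
        # A value may be encoded more than once, so decode until it stops changing.
        previous = None
        while previous != value:
            previous, value = value, unquote(value)
        if urlsplit(value).scheme in ("http", "https"):
            found.append(value)
    return found


# Hypothetical link: the visible host is the marketplace, the payload is not.
link = (
    "https://marketplace.example/redirect"
    "?url=%68%74%74%70%73%3A%2F%2Fattacker.example%2Fverify"
)
print(hidden_destinations(link))  # ['https://attacker.example/verify']
```

Decoding in a loop is a deliberate choice in this sketch: a value that has been encoded twice still looks harmless after a single decoding pass.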

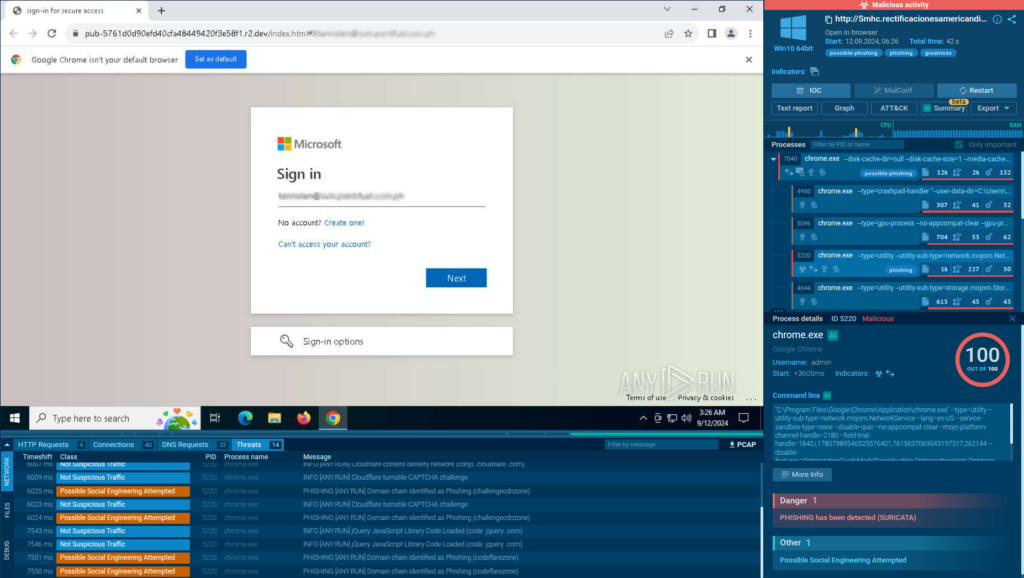

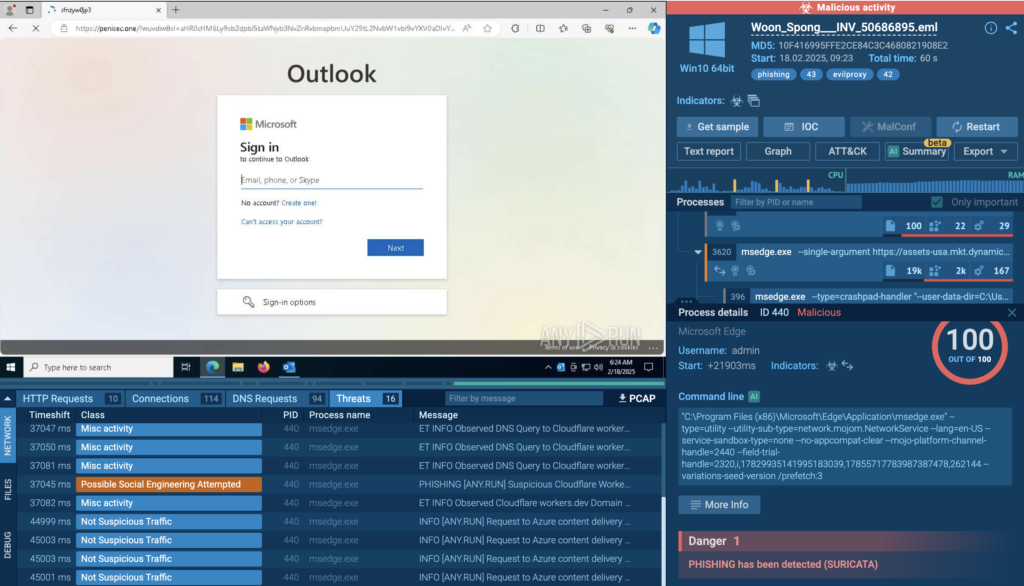

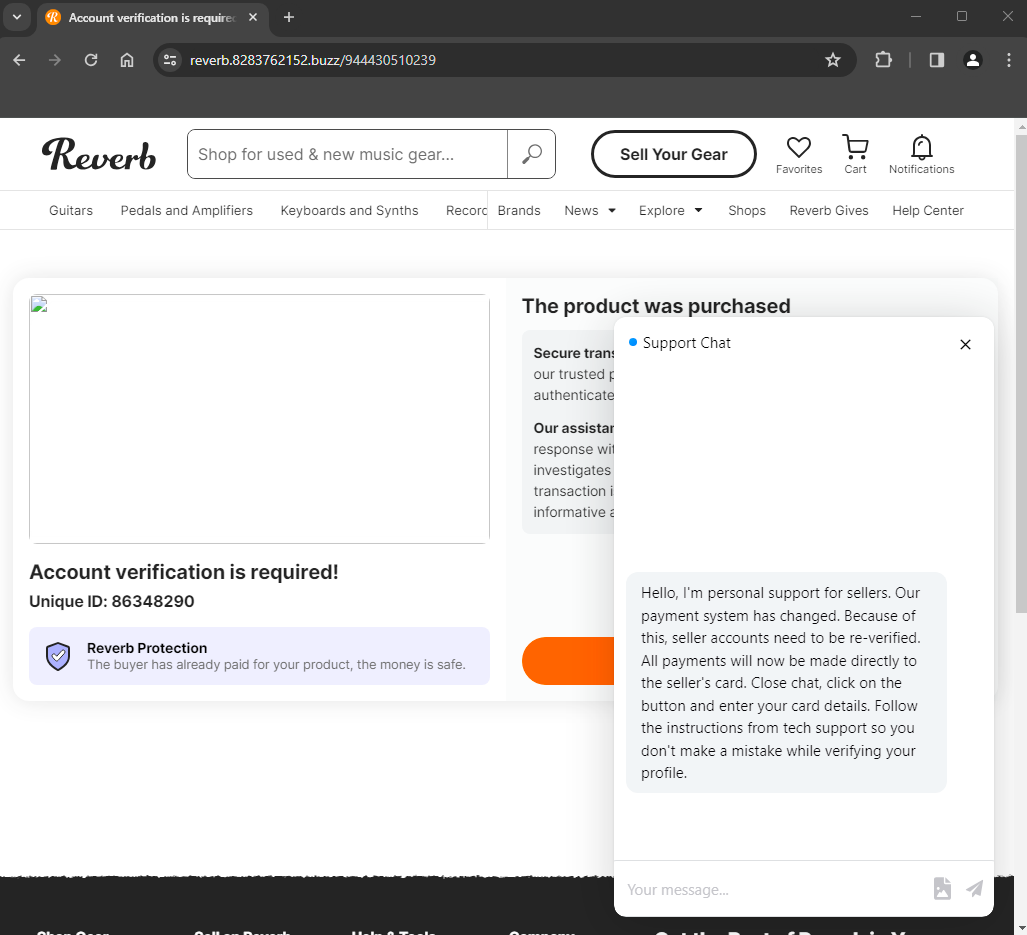

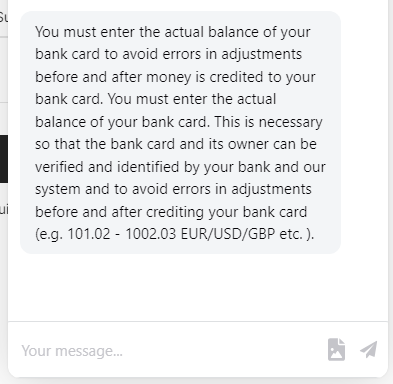

When accessed, this URL returns an HTTP 302 redirect to another attacker-controlled web server, and the victim ultimately arrives at the following landing page. The attacker has put effort into making sure it appears legitimate and mimics what the victim expects to see. A chat message prompts the victim to complete the verification process by following the on-screen instructions.
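The redirect chain described above can be traced hop by hop. The sketch below is one way an analyst might record such a chain; it is an illustration, not the tooling used for this research. To keep it runnable offline, the `location_of` callable and the `hops` dictionary are hypothetical stand-ins for issuing a request with automatic redirects disabled and reading the `Location` header, and every URL shown is invented.

```python
from typing import Callable, Optional
from urllib.parse import urljoin


def redirect_chain(
    start: str,
    location_of: Callable[[str], Optional[str]],
    max_hops: int = 10,
) -> list[str]:
    """Follow Location values from `start` and return every URL visited."""
    chain = [start]
    while len(chain) <= max_hops:
        location = location_of(chain[-1])
        if location is None:  # no redirect: this is the final page
            break
        next_url = urljoin(chain[-1], location)  # Location may be relative
        if next_url in chain:  # stop on redirect loops
            break
        chain.append(next_url)
    return chain


# Hypothetical responses: URL -> Location header returned with a 302.
hops = {
    "https://marketplace.example/redirect": "https://hop.attacker.example/r",
    "https://hop.attacker.example/r": "/landing",
}
for url in redirect_chain("https://marketplace.example/redirect", hops.get):
    print(url)
```

Recording every hop, rather than only the final page, preserves the intermediate attacker-controlled hosts that would otherwise go unnoticed.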

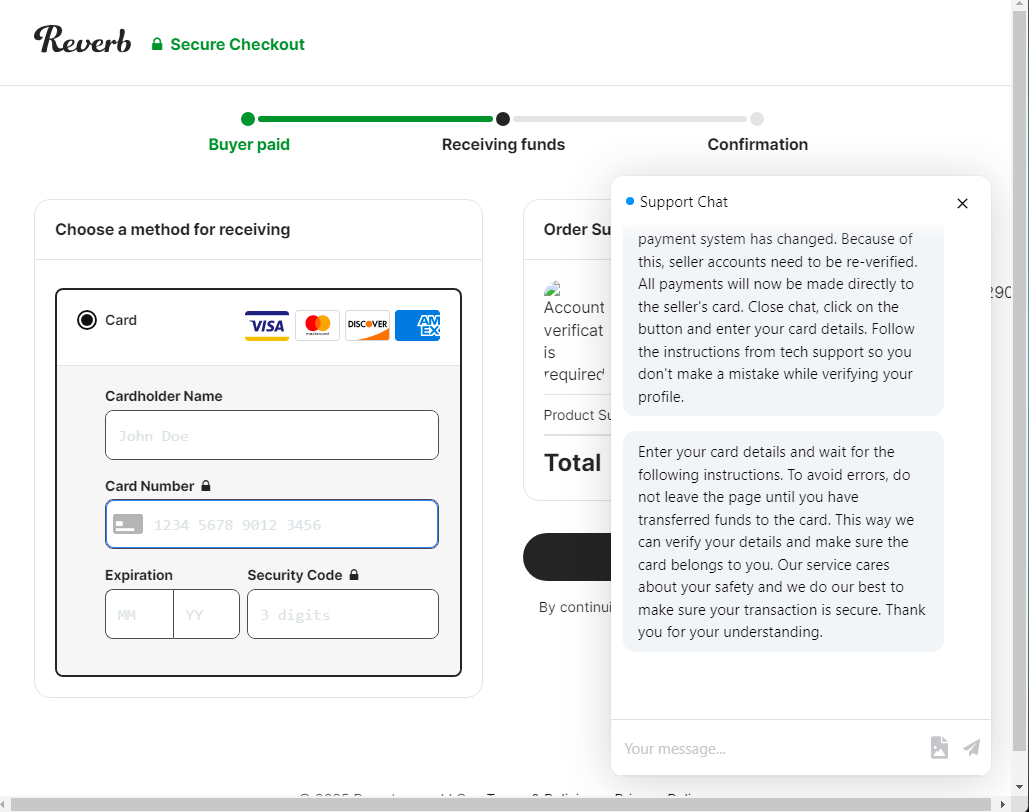

A “Receive Funds” button has been positioned behind the chat popup shown in the previous screenshot. When the victim clicks this button, they are presented with an input form and prompted to provide the credit card information necessary to verify their desired payout account. Throughout this process, additional chat message popups are delivered to lend credibility to the process.

Since this is the account the seller would like to use to receive payments for items sold on the platform, it likely also contains any funds associated with previous sales, and will likely frequently receive lump sums when future items are sold, presenting a myriad of opportunities for attackers to monetize the account and assets within it, now or in the future. For example, if a threat actor successfully obtains credit card details for a seller account with multiple high-value items actively listed, they can simply wait until an item sells before attempting to monetize it.

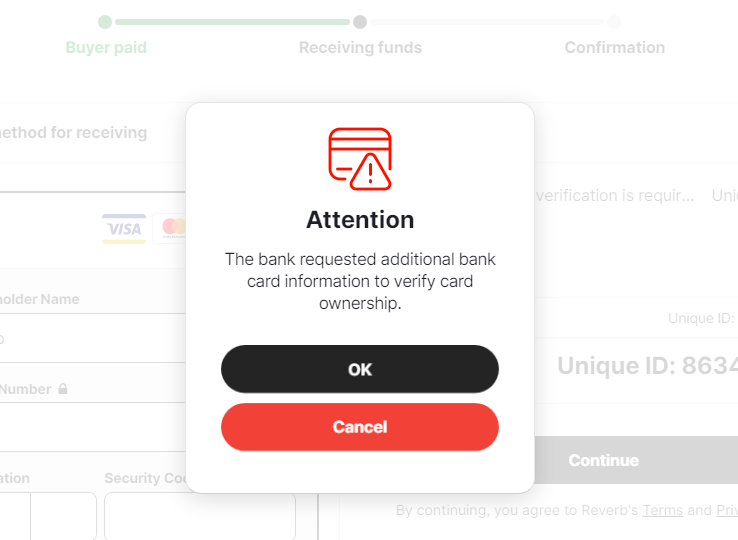

Assuming the victim enters valid credit card details, a message will be displayed requesting additional information.

The additional information in this case is the balance of the account that is being “verified.” A new chat message appears, prompting the user to enter the balance in their account, presumably so that the attacker can more effectively prioritize which accounts to empty first.

Assuming the victim enters their balance information, they are presented with the following message letting them know that it will take a period of time before they notice any changes to their accounts.

At this point, the adversary has obtained credit card details that they can then monetize however they choose. It’s important to note that while this particular example targeted sellers using the Reverb platform, most other online marketplaces experience similar threats. We have also observed Reverb often responding rapidly to attempts to send messages containing percent-encoded hyperlinks, indicating that the platform is aware of the issue and has put some mechanisms in place to help reduce the frequency and quantity of these types of messages.

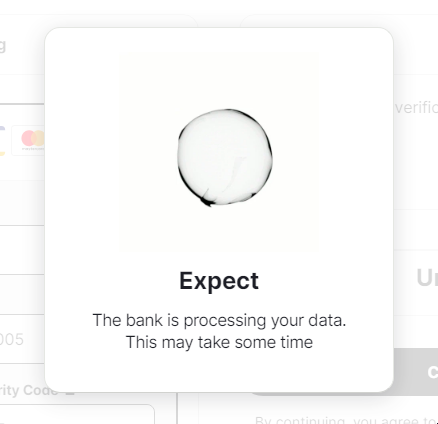

Reverb also presents warning messages to let users know when they are being redirected to a third-party domain, as shown in the screenshot below.

It is important to always validate the destination of hyperlinks received from unsolicited sources and to limit access to off-platform resources when conducting business on online marketplaces.
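As a rough illustration of validating a link's destination, the following Python sketch checks whether a URL's host actually belongs to the platform's domain. The function name `is_on_platform` and the `marketplace.example` domain are assumptions made for this example. Note that a link hosted on the platform can still redirect elsewhere, so a check like this is most meaningful when applied to the final destination.

```python
from urllib.parse import urlsplit


def is_on_platform(url: str, platform_domain: str) -> bool:
    """True if the URL's host is the platform domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    domain = platform_domain.lower()
    # Compare whole labels: a plain substring or prefix test would accept
    # lookalikes such as marketplace.example.attacker.example.
    return host == domain or host.endswith("." + domain)


# Hypothetical links a seller might receive.
for candidate in (
    "https://www.marketplace.example/orders",
    "https://marketplace.example.attacker.example/verify",
    "https://marketplace.example@attacker.example/verify",
):
    print(candidate, is_on_platform(candidate, "marketplace.example"))
```

Only the first link passes. The second places the platform name in front of an attacker-controlled domain, and the third uses it as the user-information part of the URL, so the real host in both cases is `attacker.example`.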

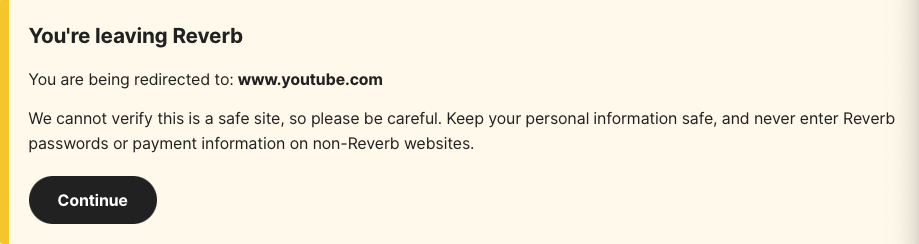

It is also important to note that direct messaging within marketplaces is not the only mechanism used for this type of scam. Below is a screenshot of an email received by a Shopify storefront owner attempting to convince them to verify their payout account information, similar to the previous example described above.

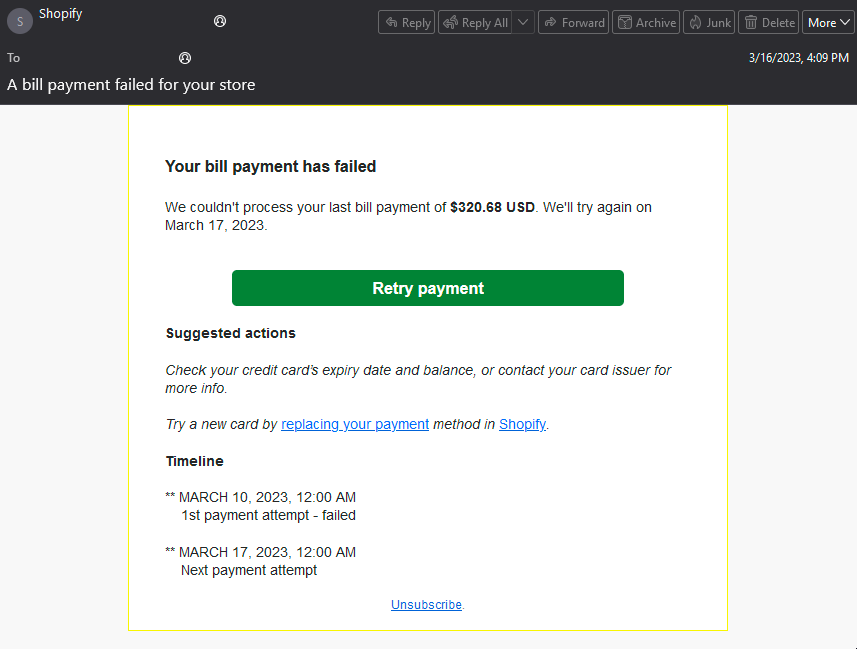

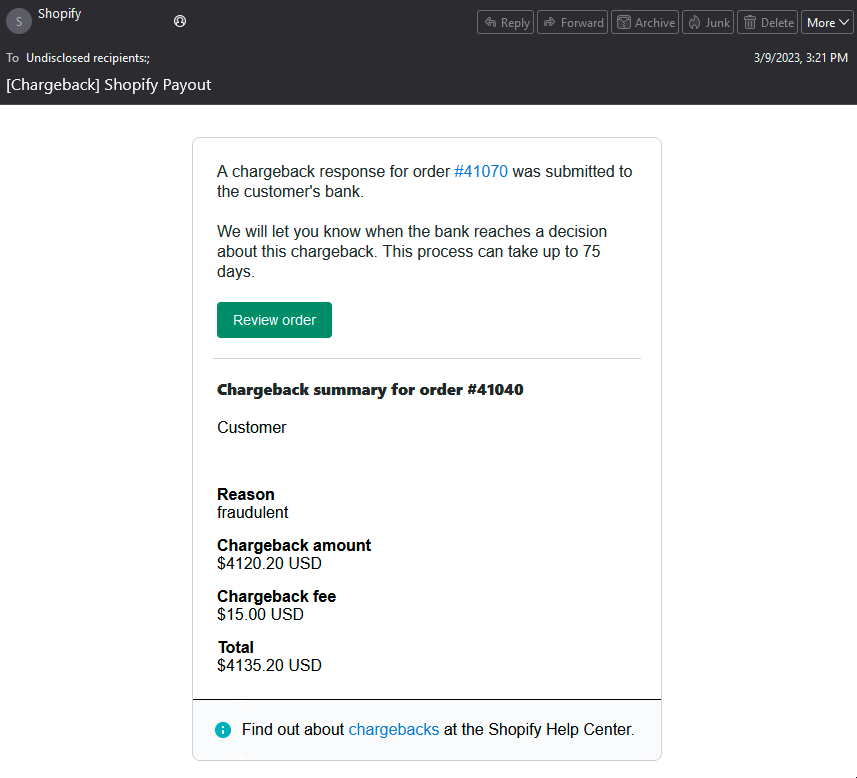

Below is another example. In this case, the threat actor has sent an email claiming that the seller has received a chargeback claim from a customer and prompting them to take action. Since chargebacks are often received for a variety of reasons, sellers may be more easily convinced to provide account or credit card information when prompted with a claim related to a chargeback.

If an email is received from an online marketplace, the platform will typically also provide warning messages, banners, and other mechanisms to alert sellers of the same issue. If a seller receives an unexpected email related to payment issues, they should attempt to resolve the issue directly on the platform rather than opening content in, or responding to, the email.

Scams to remove seller protection

When selling new or used items using online marketplaces, one of the most important elements influencing platform and payment method selection for many people is the seller protection policy in place. These policies often provide protection from common types of fraudulent claims that may be made by buyers, such as non-receipt, damage, etc. In order to remain in effect, they generally require both the buyer and seller to perform the transaction following certain requirements that enable proper resolution should an issue arise.

In an effort to remove these protections and make it easier to successfully monetize their scam(s), scammers posing as buyers will often use a variety of pretexts and themes to attempt to convince sellers to conduct portions of the transaction “off-platform,” as this will void the protection policy that would otherwise be in place. Likewise, some scammers target the shipping part of the transaction process, attempting to convince sellers to modify the shipping details in a way that voids the seller protection policy afforded by the platform. Once the seller can no longer rely on the protection policy of the platform used to sell the item, many of the normal recourse steps usually taken when dealing with fraudulent buyers are no longer available.

Off-platform transactions

Scammers also frequently use a variety of pretexts to attempt to convince sellers to perform the financial transaction associated with an item purchase using third-party platforms separate from the one on which an item was originally listed. They will attempt to trick victims into moving to an alternative mechanism for performing the transaction so that the seller loses most of the protection(s) afforded by the marketplace.

In many cases, scammers will use additional pressures, such as time sensitivity, urgency, or manipulated screenshots showing failed transaction attempts, to convince sellers to perform transactions using alternative means, such as wire transfers or money transfer platforms, where seller protections are limited and—in the case of fraud—most recourse options are no longer available.

There are countless examples of different pretexts being used in varying capacities, all ultimately for the same purpose: to convince sellers to leave the marketplace and perform the transaction elsewhere, so that seller protections against fraudulent activity on the part of the buyer are removed.

Shipment detail changes

Since a seller account’s past and current item listings are easily accessible from the seller’s profile, it is easy for adversaries to leverage information contained within these listings (including photos) when building pretexts that are used to target the individual operating the seller account. One common way that adversaries leverage this information is by tailoring their messaging to account for both active and recently sold listings related to the seller they are communicating with.

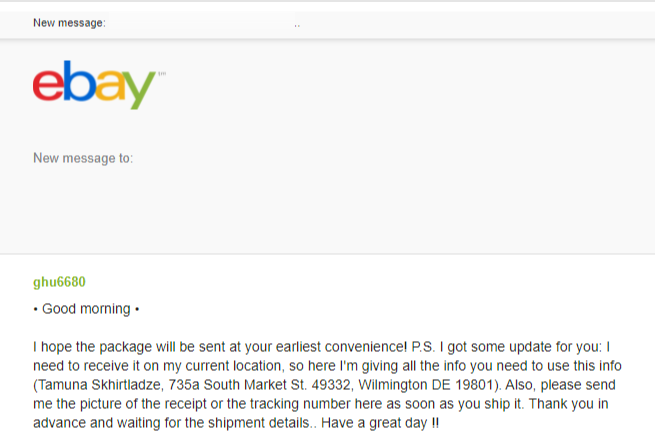

In the screenshot below, a scammer is contacting sellers on eBay claiming to be an individual who purchased an item recently sold by the seller(s). The scammer hopes that by timing their message properly, they can take advantage of sellers who may not be paying close attention to the username listed in the message. For many sellers who are managing large numbers of listings, it can often be overwhelming to maintain many different streams of communication and/or multiple transactions simultaneously, leading them to respond quickly or otherwise take action (like modifying shipping instructions) without properly validating the content of the message.

Online reports indicate that it is not uncommon to receive these types of unsolicited messages, regardless of whether a seller has recently sold an item, which may be due to some level of automation being used to generate and transmit the messages.

If the seller is convinced to change the destination address for the shipment due to receipt of one of these scam messages, the item that was sold may be shipped to an address under the attacker’s control. The attacker is then able to monetize the item while the original buyer does not receive the item that they purchased. Many of the protections afforded by online marketplaces are lost when the shipping address used does not reflect what was present on the order at the time of purchase, opening the seller to additional exposure as the buyer’s loss will still need to be resolved.

The “friends and family” option

Social media sites like Reddit have become very popular for posting used items to solicit interest from other users of a given subreddit. In some subreddits, there are even official or unofficial “Want to Buy / Want to Sell” (WTB/WTS) threads, where users can list items they are selling or items they would like to purchase. This creates an ideal pool of potential victims for scammers seeking to target users of these platforms.

Since Reddit is not an online marketplace, the transactions associated with this activity typically take place using money transfer platforms, such as PayPal, Zelle, or Venmo. Scammers will often leverage compromised accounts on these money transfer platforms when communicating with sellers (or buyers), often attempting to convince them to use the “friends and family” (or equivalent) payment option available on many of these platforms. Often, sending or receiving money with this option enabled removes many of the mechanisms in place to protect sellers from chargebacks and other fraudulent activity. Sellers should never enable this option when processing payments for goods they may sell via online marketplaces, social media sites, or in any transaction where protection against fraud is desired.

In some cases, this method may be combined with other methods seeking to convince sellers to perform transactions outside of established platforms. Once the seller has agreed, scammers can leverage this option to further remove seller protections.

Recommendations

Most of the online literature related to defending against the scams pervasive on online marketplaces is geared towards the buyer experience. Recent trends in social media reporting indicate that sellers are also being increasingly targeted. While some of the scams faced by sellers resemble those faced by buyers, there are many that are unique. It is important that individuals or organizations leveraging online storefronts and/or marketplaces be aware of these threats so that they can prevent themselves from falling victim.

It is extremely important that online marketplace accounts be protected with multi-factor authentication (MFA) whenever the platform supports this capability. This will provide an enhanced layer of security in cases where the account credentials are compromised as an additional authentication factor will still be needed to successfully access the account.

When posting listings for items, be mindful of any photos that accompany the listing. Not unlike posting images to other social media platforms, items or objects in the background may unintentionally disclose sensitive information that could be used for malicious purposes. The information provided could also be leveraged to create more convincing or effective pretexts for later scam activities targeting the seller(s).

Avoid using third-party services or platforms when conducting business transactions on online marketplaces. Take advantage of the protections afforded by the platform and avoid taking any actions that may jeopardize them. Reasonable buyers will be willing and able to conduct business following the standard platform transaction process. Sellers should avoid feeling pressured or worried about losing a sale and insist that the transaction take place in accordance with the policies of the online marketplace platform.

Verify the account associated with any messages received on online marketplaces. Always spend the time to validate that the message was actually received from the account of the buyer who purchased any recently sold items. Likewise, most platform support teams will not contact users via direct messaging for account verification or other sensitive processes as most of the time, these processes are directly supported by the platform itself. If a seller receives a direct message purporting to be from the platform itself, caution should be exercised and the message should be validated by contacting the support team separately using the information published on the marketplace website.

Sellers should also avoid modifying destination shipping addresses after orders have been placed. If a buyer asks to change the shipping address for an item they recently purchased, sellers should consider suggesting that they change their address using the online marketplace itself and contact support to facilitate the change prior to shipping. This helps ensure that the seller is not deviating from the order that was placed, and helps ensure that they do not lose the seller protections afforded by the platform.
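For sellers who script parts of their fulfilment, the two checks recommended above (confirm who sent the message, and ship only to the address on the order) could be expressed roughly as follows. This is a sketch under stated assumptions: the `Order` record, its field names, and the sample data are hypothetical and do not correspond to any marketplace's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    """Hypothetical order record as captured at the time of purchase."""
    buyer_username: str
    shipping_address: str


def _normalize(text: str) -> str:
    # Collapse whitespace and ignore case so cosmetic differences do not matter.
    return " ".join(text.split()).casefold()


def is_from_buyer(order: Order, message_sender: str) -> bool:
    # Exact match on purpose: lookalike usernames often differ by one character.
    return message_sender.strip() == order.buyer_username


def label_matches_order(order: Order, label_address: str) -> bool:
    # Ship only to the address recorded on the order itself.
    return _normalize(label_address) == _normalize(order.shipping_address)


order = Order(buyer_username="pedal_fan_42", shipping_address="12 Example St, Springfield")
print(is_from_buyer(order, "pedal_fan_42_"))  # False: lookalike sender
print(label_matches_order(order, "99 Other Rd, Shelbyville"))  # False: changed address
```

If either check fails, the safer path is the one described above: leave the order unchanged and ask the buyer to update the address through the marketplace or its support team.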

Share this document

We created this handy guide for you to share with anyone you know who sells online:

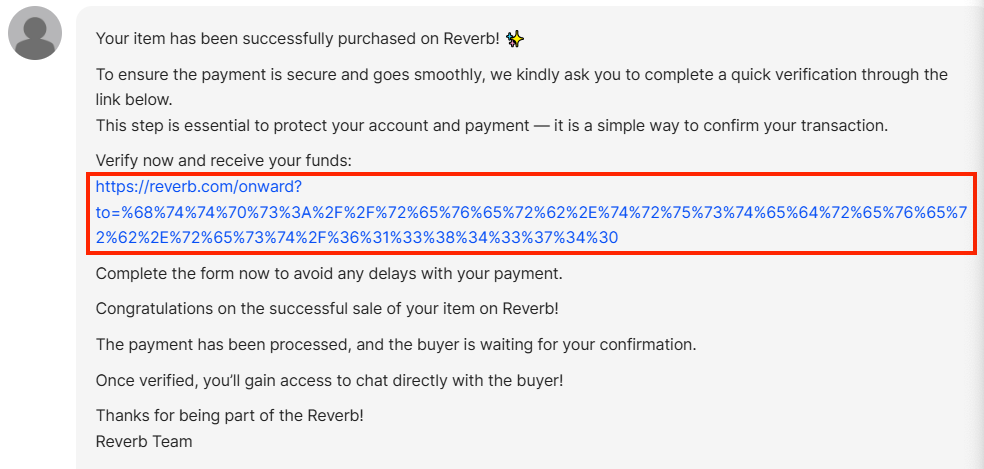

Coverage

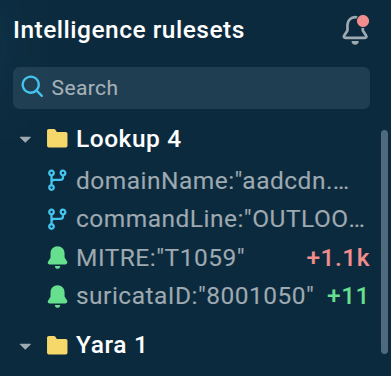

Ways our customers can detect and block this threat are listed below.

Cisco Secure Endpoint (formerly AMP for Endpoints) is ideally suited to prevent the execution of the malware detailed in this post. Try Secure Endpoint for free here.

Cisco Secure Web Appliance web scanning prevents access to malicious websites and detects malware used in these attacks.

Cisco Secure Email (formerly Cisco Email Security) can block malicious emails sent by threat actors as part of their campaign. You can try Secure Email for free here.

Cisco Secure Firewall (formerly Next-Generation Firewall and Firepower NGFW) appliances such as Threat Defense Virtual, Adaptive Security Appliance and Meraki MX can detect malicious activity associated with this threat.

Cisco Secure Malware Analytics (Threat Grid) identifies malicious binaries and builds protection into all Cisco Secure products.

Umbrella, Cisco’s secure internet gateway (SIG), blocks users from connecting to malicious domains, IPs and URLs, whether users are on or off the corporate network. Sign up for a free trial of Umbrella here.

Cisco Secure Web Appliance (formerly Web Security Appliance) automatically blocks potentially dangerous sites and tests suspicious sites before users access them.

Additional protections with context to your specific environment and threat data are available from the Firewall Management Center.

Cisco Duo provides multi-factor authentication for users to ensure only those authorized are accessing your network.

Open-source Snort Subscriber Rule Set customers can stay up to date by downloading the latest rule pack available for purchase on Snort.org.

Indicators of Compromise

IOCs for this research can also be found at our GitHub repository here.
