The who, where, and how of APT attacks in Q2 2025–Q3 2025

ESET Chief Security Evangelist Tony Anscombe highlights some of the key findings from the latest issue of the ESET APT Activity Report

WeLiveSecurity – Read More

Former colleagues and friends remember the cybersecurity researcher, author, and mentor whose work bridged the human and technical sides of security

WeLiveSecurity – Read More

“We’ve hacked your computer! Send money to the specified account, or all your photos will be posted online”. You or someone you know has probably encountered an email with this kind of alarming message.

We’re here to offer some reassurance: nearly every blackmail email we’ve ever seen has been a run-of-the-mill scam. Such messages, often using identical text, are sent out to a massive number of recipients. The threats described in them typically have absolutely no basis in reality. The attackers send these emails out in a “spray and pray” fashion to leaked email addresses, simply hoping that at least a few recipients will find the threats convincing enough to pay the “ransom”.

This article covers which types of spam emails are currently prevalent in various countries, and explains how to defend yourself against email blackmailers.

Classic scam emails may vary in their content, but their essence always remains the same: the blackmailer plays the role of a noble villain, allowing the victim to walk away unharmed if they transfer money (usually cryptocurrency). To make the threat more believable, attackers sometimes include some of the victim’s personal data in the email, such as their full name, tax ID, phone number, or even their physical address. This doesn’t mean you’ve actually been hacked — more often than not, this information is sourced from leaked databases widely available on the dark web.

The most popular theme among email blackmailers is a “hack” where they claim to have gained full access to your devices and data. Within this theme, there are three common scenarios:

Blackmailers also don’t shy away from the topic of adult content. Typically, they simply intimidate the victim with threats that everyone will find out what kind of explicit content they’ve allegedly been viewing. Some attackers go further — they claim to have gained access to the person’s webcam and recorded intimate activity while simultaneously screen-recording their PC. The price of their silence starts at several hundred dollars in cryptocurrency. Crucially, these blackmailers intentionally try to isolate the victim by telling them not to report the email to law enforcement or loved ones, and claiming that doing so will immediately trigger the threats. By the way, safe and private viewing of adult content is a challenge unto itself, but we’ve covered that in Watching porn safely: a guide for grown-ups.

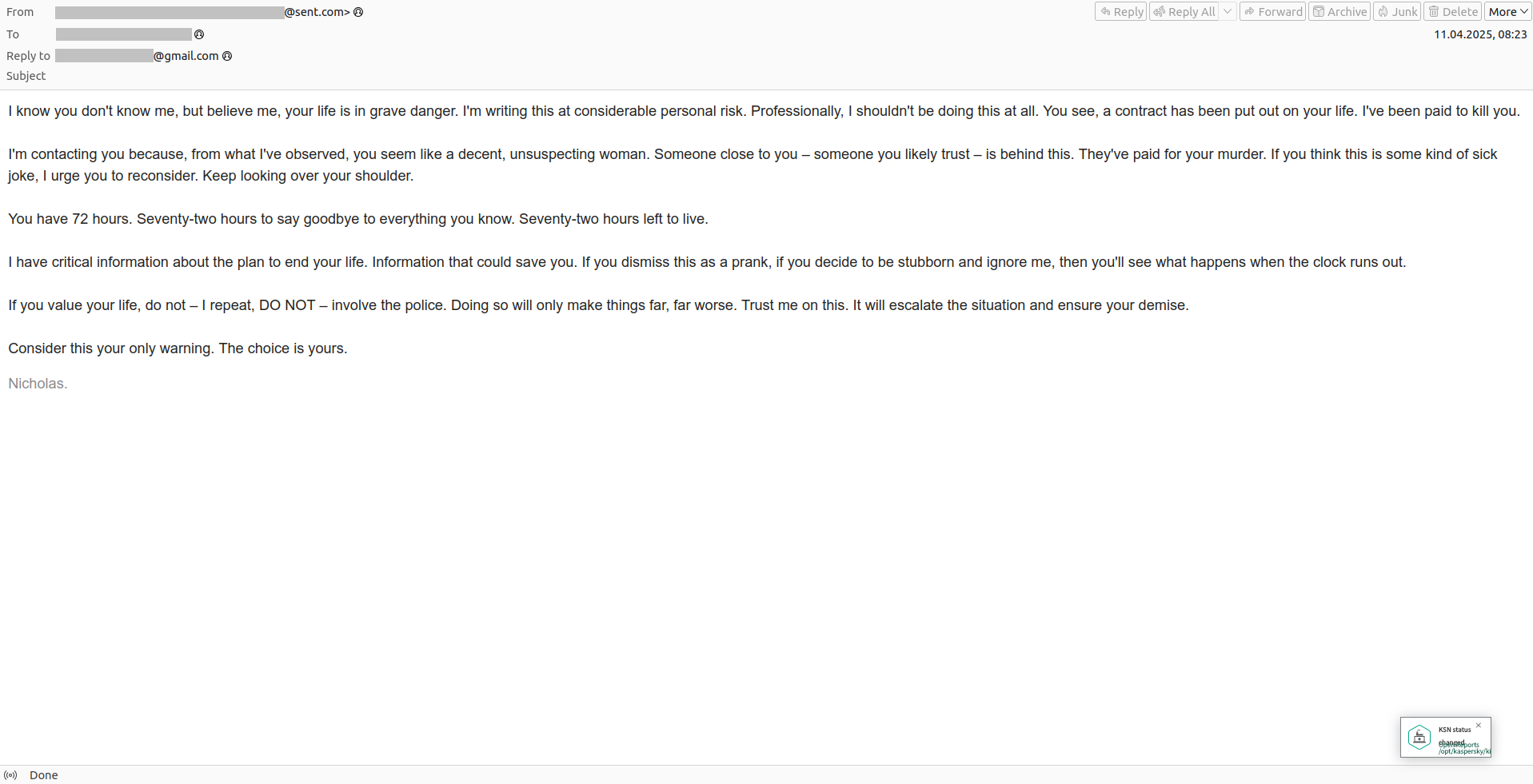

Perhaps the most extreme form of email blackmail involves death threats. Naturally, such an email would make anyone uneasy, and many people become genuinely worried for their own safety. The noble hitman, however, is always willing to spare the victim’s life if they can “outbid the one who ordered the hit”.

“You have 72 hours left to live.” The blackmailer suggests not involving the police and simply paying off “the one who ordered the hit” instead

Besides legends of “noble hackers” and “hitmen” who immediately offer a way out for a hefty fee, there are longer, more elaborate scams.

In these attacks, scammers pose as law enforcement officers. They don’t ask for money right away, as that would arouse suspicion. Instead, the victim receives a “summons” accusing them of committing a serious, often highly delicate crime. This typically involves allegations of distributing pornography (including child pornography), of pedophilia, human trafficking, or even indecent exposure. The “evidence” isn’t pulled out of thin air, but supposedly taken directly from the victim’s computer, to which the “special services” have gained “remote access”.

The document is designed to instill absolute terror: it includes a threat of arrest and a large fine, a signature with a seal, an official address, and names of high-ranking prosecutors. The scammers demand that the victim promptly makes contact via the email address provided in the message to offer an explanation — then, perhaps, the charges will be dropped. If the victim fails to respond, they’re threatened with arrest, registration on a list of sex offenders, and having their “file” passed to the media.

When the terrified victim contacts the attackers, the scammers then offer to “pay a fine” for an “out-of-court settlement of the criminal case” — a case that, of course, doesn’t exist.

These types of emails are sent under the guise of coming from major law enforcement organizations like Europol. They’re most frequently addressed to residents of France, Spain, the Czech Republic, Portugal, and other European countries. They also share a curious feature: typically, the subject line and the body of the email are quite brief, with the entire fraudulent case being laid out in attached documents. Reminder: we can’t stress this enough — never open email attachments if you don’t know or trust the sender! And to ensure that malicious and phishing emails don’t even reach your inbox, use a reliable protective solution.

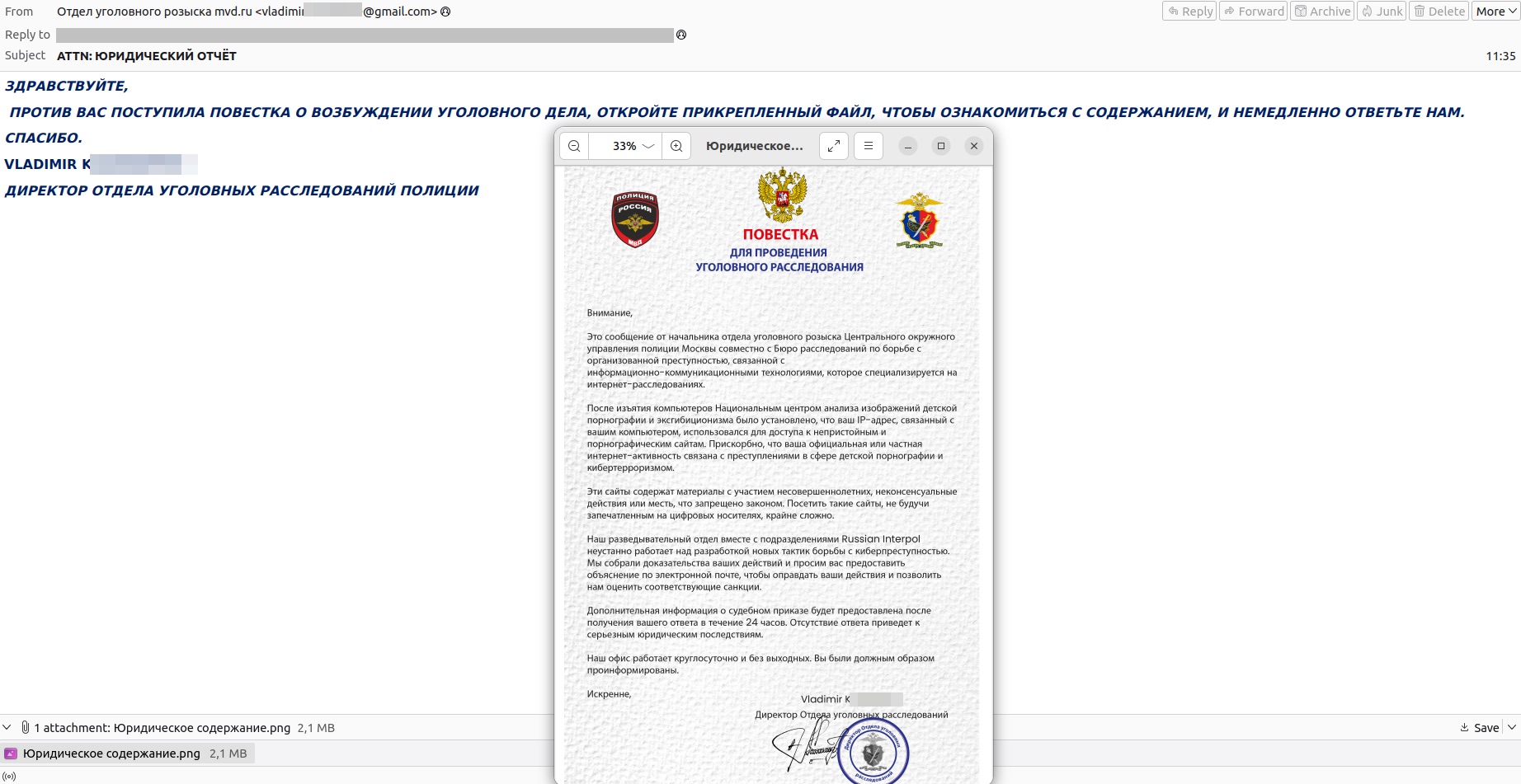

The “law enforcement theme” is also prevalent in CIS (former-Soviet-Union) countries. In 2025, scammers circulated “Summons for Criminal Investigation” alleging the initiation of a criminal case. This was supposedly issued by the Russian Ministry of Internal Affairs in collaboration with such fantastic units as “Russian Interpol” and the “Bureau of Investigation Against Organized Crime”.

According to the fictional narrative, a certain “National Center for the Analysis of Child Pornography and Exhibitionism Images” had seized computers somewhere and determined that the recipient’s IP address was used to “access inappropriate and pornographic websites”. Of course, a quick online search will reveal that none of the organizations mentioned in that email have ever officially existed in Russia.

The “Director of the Police Criminal Investigation Department” will, for added persuasiveness, write in ALL CAPS, and sign their name with an English transliteration

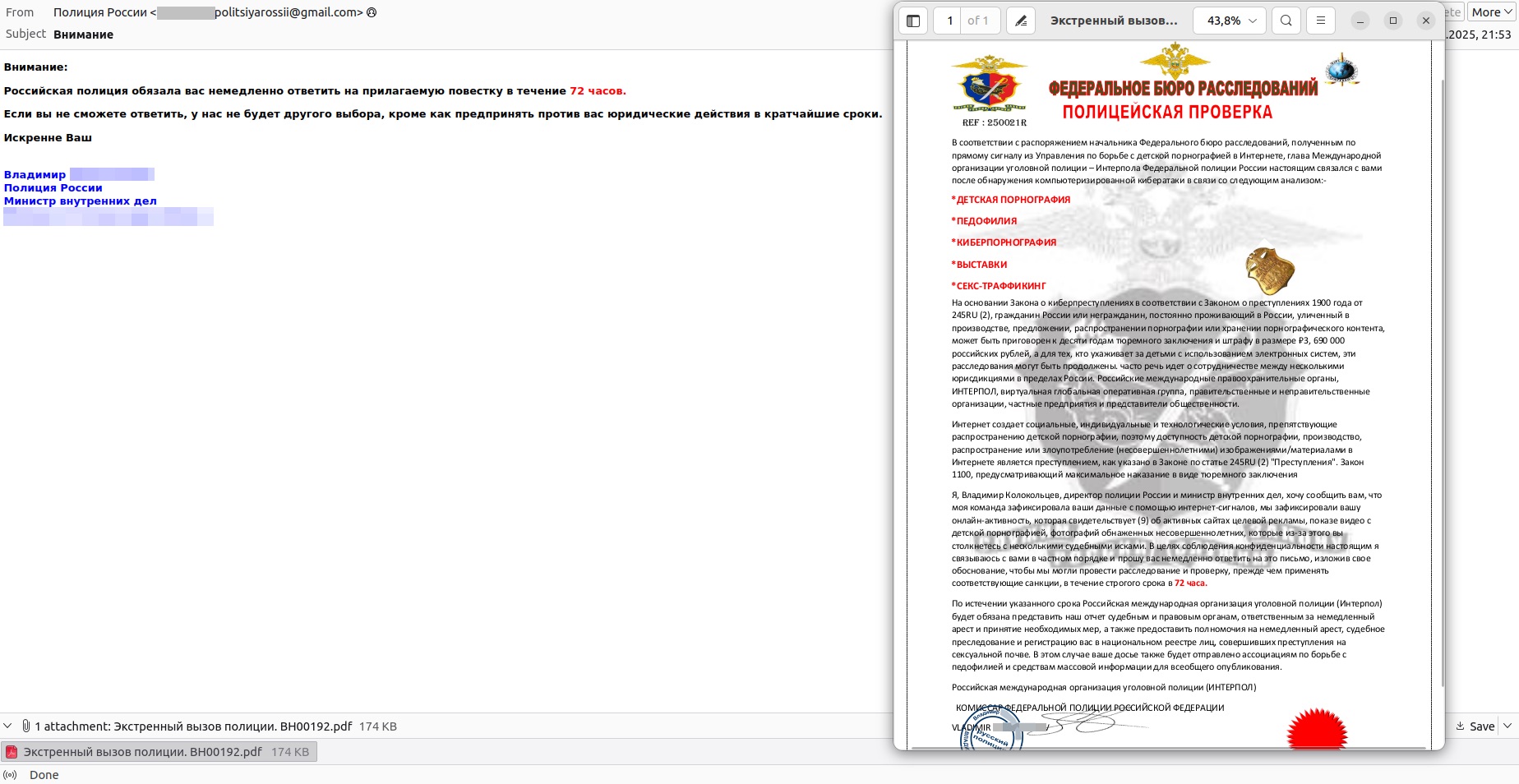

In another similar email, the recipient, at the behest of the head of the “Russian Federal Bureau of Investigation (FBI)”, supposedly became a person of interest to a certain “International Criminal Police Organization — Interpol of the Federal Police of Russia”. (We should clarify that no law enforcement agencies with even remotely similar names have ever existed in Russia.) In the email, the attackers refer to a “Cybercrime Act in accordance with the Crimes Act of 1900 (sic!) from 245RU(2)” — laws so secret that apparently no legal expert knows they exist. Moreover, the message, sent from a generic Gmail address, is supposedly from the Minister of Internal Affairs himself. However, in the attached summons, he is referred to as the “Commissioner of the Federal Police of the Russian Federation” — likely a clumsy translation from English.

The scam email from the non-existent “Russian Federal Bureau of Investigation” is signed by none other than the Minister of Internal Affairs

Similar scam emails also reach residents of Belarus, arriving in both Russian and Belarusian. The victims are supposedly being pursued by multiple agencies simultaneously: the Ministry of Internal Affairs and Ministry of Foreign Affairs of Belarus, the Militsiya of the Republic of Belarus, and a certain “Main Directorate for Combating Cybercrime of the Minsk City Internal Affairs Directorate for Interpol in Belarus”. One might assume that the email recipient is the country’s most wanted villain, being hunted by the “cyberpolice” itself.

In the summons, the blackmailers cite non-existent laws, and threaten to add the victim to a fictitious “National Register of Underage (sic!) Sexual Offenders” — a clear machine translation failure — and, of course, request an urgent reply to the email.

In another campaign, attackers sent emails in the name of the real State Security Committee of Belarus. However, they referenced a fake law and contacted the accused at the behest of the President of Europol — never mind that Europol doesn’t have a President, and the name of the real executive director is completely different.

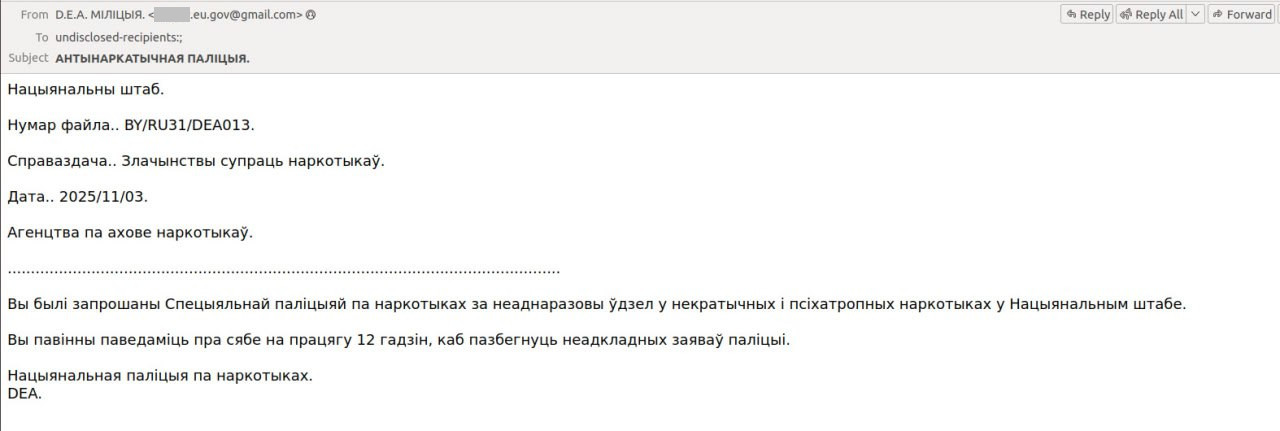

In addition to sex crimes, citizens of Belarus are also accused of “repeated use of necrotic (sic!) and psychotropic drugs”. In these emails, the attackers claim to be from the DEA — the U.S. Drug Enforcement Administration. Why a U.S. federal agency would be interested in Belarusian citizens remains a mystery.

The scammers failed to realize that the law enforcement body in Belarus is called the “militsiya” (militia) rather than “politsiya” (police)

As you can see from the examples above, the majority of these scam emails appear highly implausible — and yet they still find victims. That said, with scammers increasingly adopting AI tools, it’s reasonable to expect a significant improvement in both the text quality and design of these fraudulent campaigns. Let’s highlight several indicators that will help you recognize even the most skillfully crafted fakes.

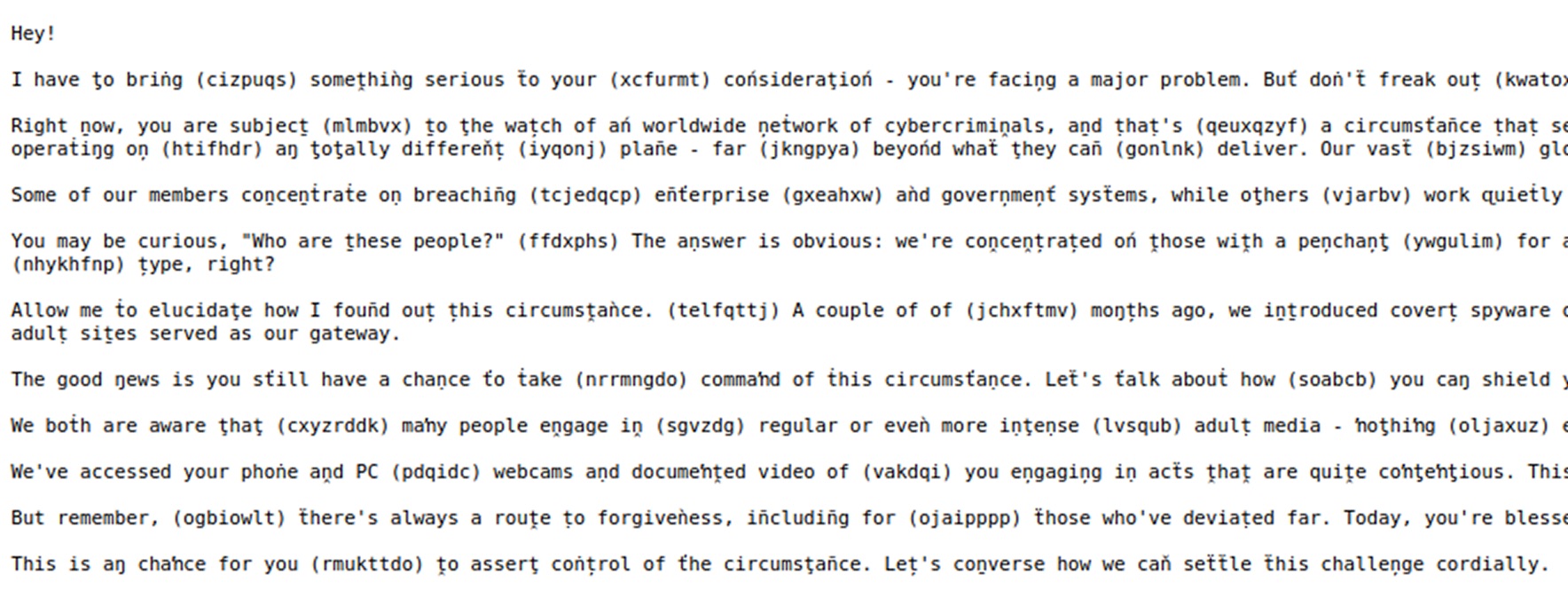

An example of scammers attempting to bypass spam filters by substituting characters and adding meaningless blocks of text

Kaspersky official blog – Read More

Welcome to this week’s edition of the Threat Source newsletter.

Ever heard the phrase in this week’s title?

For our non-British readers, here’s the quick version: Every year on November 5, people across the U.K. gather for bonfires, sparklers, fireworks, and attempting to literally handle a hot potato. I used to love these outings as a kid, but now, as a pet owner, I tend to stay in and try to calm the poor, scared creature during the fireworks.

Anyway, Bonfire Night is all about marking the evening when the Houses of Parliament didn’t get blown to pieces with gunpowder.

The Gunpowder Plot was the work of a group of conspirators who planned to assassinate King James I by detonating explosives beneath the House of Lords during the State Opening of Parliament on Tuesday, Nov. 5, 1605. They rented a vault in the cellars below the building, packed it with 36 barrels of gunpowder, and designated a fellow named Guy Fawkes to light the fuse.

Unbeknownst to the conspirators, an anonymous warning (the “Monteagle Letter”) was sent to one nobleman (who was due to attend the State Opening of Parliament), suggesting he come up with some sort of excuse to miss it:

“My lord, out of the love I bear to some of your friends, I have a care of your preservation. I would advise you, as you tender your life, to devise some excuse to shift your attendance at this Parliament; for God and man hath concurred to punish the wickedness of this time.

…for though there be no appearance of any stir, yet I say they shall receive a terrible blow this Parliament; and yet they shall not see who hurts them.”

The warning was taken seriously: the message was passed up the chain to Robert Cecil, the King’s chief minister, and the authorities ordered a search. Sir Thomas Knyvet, a Justice of the Peace, led a team to check the cellars beneath the House of Lords. There they found Fawkes guarding the barrels, carrying a lantern and some slow matches. He was arrested on the spot.

Several of Fawkes’ co-conspirators were killed while fleeing; the rest were captured, tried, and condemned. Fawkes himself was sentenced to be hanged, drawn, and quartered — though he died instantly after leaping from the scaffold and breaking his neck.

To this day, November 5th events across the UK include the burning of an effigy of Guy Fawkes in the middle of the bonfire. It’s always struck me as a very odd national tradition. But then again, we are a country of strange customs… such as when we chase a wheel of cheese down a near-vertical hill.

Centuries later, Fawkes’ face was stylised into a white mask with a sly grin for the graphic novel V for Vendetta. The mask became shorthand for protest and, eventually, for hacktivism. The man who didn’t light the fuse became the symbol for people trying to spark something. And that, Alanis, is ironic.

What foiled the Gunpowder Plot was someone doing the Jacobean equivalent of “better check that out,” based on some received threat intelligence. It’s a similar gut impulse that still saves many a day in modern cybersecurity settings: the analyst who follows a hunch, the responder who looks twice at a legitimate tool behaving oddly…

By the way, if you haven’t yet, do check out our latest Cisco Talos Incident Response report. It’s such a helpful tool for analysts whose days revolve around spotting suspicious behaviour.

For example, this quarter we saw an internal phishing campaign that was launched from compromised O365 accounts, where attackers “modified the email-management rules to hide the sent phishing emails and any replies.” As Craig pointed out in the most recent episode of The Talos Threat Perspective, he often asks his customers, “Can you effectively identify malicious inbox rules across your environment — not just for a single user’s mailbox, and not just for the last 90 days?”
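As a rough illustration of the kind of check Craig describes, the sketch below scans a list of exported inbox rules for patterns that could be used to hide mail. The rule dictionaries, their field names (`name`, `actions`, `target_folder`), and the list of quiet folders are all hypothetical; they do not correspond to any particular Microsoft 365 export format.

```python
from typing import Iterable

# Hypothetical set of folders that users rarely open.
QUIET_FOLDERS = {"rss feeds", "conversation history", "archive", "deleted items"}


def is_suspicious(rule: dict) -> bool:
    """Flag rules that delete mail or move it to a rarely viewed folder."""
    actions = set(rule.get("actions", []))
    if "delete" in actions:
        return True
    folder = rule.get("target_folder", "").lower()
    return "move_to_folder" in actions and folder in QUIET_FOLDERS


def find_suspicious_rules(rules: Iterable[dict]) -> list:
    """Return every rule, across all mailboxes, that matches the heuristics."""
    return [rule for rule in rules if is_suspicious(rule)]
```

Running a check like this over every mailbox and over the full rule history, rather than a single user or a recent time window, is the point of the question above.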

So yes, while I think it’s still a bit odd that “Remember, remember the fifth of November” commemorates a disaster that never happened, most analysts I know would drink to that.

Two Tool Talks in a row? Christmas came early.

With the latest article, Cisco Talos’ Martin Lee explores how to empower autonomous AI agents with cybersecurity know-how, enabling them to make informed decisions about internet safety, such as evaluating the trustworthiness of domains. He demonstrates a proof-of-concept using LangChain and OpenAI, connected to the Cisco Umbrella API, so that AI agents can access real-time threat intelligence and make smarter security choices.

As AI agents become more autonomous and interact with the internet on your behalf, their ability to distinguish safe from unsafe sites directly impacts your digital security. Equipping AI with real-time threat intelligence means fewer mistakes and better protection for your data and devices in an evolving threat landscape.

If you work with or develop AI systems, consider incorporating real-time threat intelligence APIs like Cisco Umbrella to enhance your agents’ decision-making. As this technology evolves, staying informed and adapting these best practices will help ensure both your users and AI agents make safer choices online.

CISA: High-severity Linux flaw now exploited by ransomware gangs

Potential impact includes system takeover once root access is gained (allowing attackers to disable defenses, modify files, or install malware), lateral movement through the network, and data theft. (Bleeping Computer)

Phone location data of top EU officials for sale, report finds

Journalists in Europe found it was “easy” to spy on top European Union officials using commercially obtained location histories sold by data brokers, despite the continent having some of the strongest data protection laws in the world. (TechCrunch)

Poland hit by another major cyberattack

Polish authorities are investigating a large-scale cyberattack that compromised personal data belonging to clients of SuperGrosz, an online loan platform, Deputy Prime Minister and Minister for Digital Affairs Krzysztof Gawkowski confirmed. (Polskie Radio)

Conduent admits its data breach may have affected around 10 million people

The breach lasted nearly three months. Conduent is a major government contractor and works with more than 600 government entities globally, including those on state, local, and federal levels, and a majority of Fortune 100 companies. (Tech Radar)

The password for the Louvre’s video surveillance system was “Louvre”

Experts have been raising concerns about the museum’s security for more than a decade. In 2014, the museum’s video surveillance server password was “LOUVRE,” while a software program provided by the company Thales was secured with a password “THALES.” (Cybernews)

Tales from the Frontlines

On Wednesday, Nov. 12, hear Talos IR share candid stories of critical incidents last quarter, how we handled them, and what they mean for your organization. Registration is required.

Harnessing threat intel in Hybrid Mesh Firewall

Join us on Thursday, Nov. 13 to learn how Talos combines expert human research with advanced AI/ML to detect and stop emerging threats.

Dynamic binary instrumentation (DBI) with DynamoRIO

This blog introduces DBI and guides you through building your own DBI tool with the open-source DynamoRIO framework on Windows 11.

SHA256: 9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507

MD5: 2915b3f8b703eb744fc54c81f4a9c67f

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507

Example Filename: e74d9994a37b2b4c693a76a580c3e8fe_1_Exe.exe

Detection Name: Win.Worm.Coinminer::1201

SHA256: 41f14d86bcaf8e949160ee2731802523e0c76fea87adf00ee7fe9567c3cec610

MD5: 85bbddc502f7b10871621fd460243fbc

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=41f14d86bcaf8e949160ee2731802523e0c76fea87adf00ee7fe9567c3cec610

Example Filename: 85bbddc502f7b10871621fd460243fbc.exe

Detection Name: W32.41F14D86BC-100.SBX.TG

SHA256: 96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974

MD5: aac3165ece2959f39ff98334618d10d9

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974

Example Filename: 96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974.exe

Detection Name: W32.Injector:Gen.21ie.1201

SHA256: a31f222fc283227f5e7988d1ad9c0aecd66d58bb7b4d8518ae23e110308dbf91

MD5: 7bdbd180c081fa63ca94f9c22c457376

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=a31f222fc283227f5e7988d1ad9c0aecd66d58bb7b4d8518ae23e110308dbf91

Example Filename: e74d9994a37b2b4c693a76a580c3e8fe_3_Exe.exe

Detection Name: Win.Dropper.Miner::95.sbx.tg

SHA256: d933ec4aaf7cfe2f459d64ea4af346e69177e150df1cd23aad1904f5fd41f44a

MD5: 1f7e01a3355b52cbc92c908a61abf643

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=d933ec4aaf7cfe2f459d64ea4af346e69177e150df1cd23aad1904f5fd41f44a

Example Filename: cleanup.bat

Detection Name: W32.D933EC4AAF-90.SBX.TG
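For readers who want to check a local file against the SHA-256 values listed above, here is a minimal sketch using only Python's standard library. The function names are illustrative, and a match on a hash list is only one signal, not a complete detection method.

```python
import hashlib

# SHA-256 values copied from the list above.
KNOWN_BAD_SHA256 = {
    "9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507",
    "41f14d86bcaf8e949160ee2731802523e0c76fea87adf00ee7fe9567c3cec610",
    "96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974",
    "a31f222fc283227f5e7988d1ad9c0aecd66d58bb7b4d8518ae23e110308dbf91",
    "d933ec4aaf7cfe2f459d64ea4af346e69177e150df1cd23aad1904f5fd41f44a",
}


def sha256_of(path, chunk_size=65536):
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_known_bad(path):
    """Return True if the file's SHA-256 appears in the list above."""
    return sha256_of(path) in KNOWN_BAD_SHA256
```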

Cisco Talos Blog – Read More

The implementation of Software-Defined Wide Area Networks (SD-WANs) boosts enterprise operational efficiency, saves money, and enhances security. These impacts are so significant that they’re sometimes visible on a national scale. According to The Transformative Impact of SD-WAN on Society and Global Development article from the International Journal for Multidisciplinary Research, the technology’s implementation can result in a 1.38% increase in GDP for developing countries. At the company level, the effects are even more pronounced. For example, in modern, deeply digitized industrial manufacturing, it can reduce unplanned downtime by 25%.

Furthermore, SD-WAN implementation projects not only offer a fast return on investment, but also continue to deliver additional benefits and increased efficiency as the solution receives updates, and new versions are released. To demonstrate this, we present the new Kaspersky SD-WAN 2.5 and its most compelling features.

This is a classic SD-WAN feature, and one of the technology’s primary competitive advantages. Traffic routing depends on the nature and location of the business application, but it also considers current priorities and network conditions: in some cases, reliability is paramount; in others, speed or low latency is key. The new version of Kaspersky SD-WAN improves the algorithm, and factors in detailed data about traffic loss on every possible path. This ensures the stable operation of critical services across geographically distributed networks — for example, by reducing issues with large-scale, nationwide video conferences. Crucially, this increase in reliability is accompanied by a reduced workload on network engineers and support staff, as the route adaptation process is fully automated.

This feature optimizes the speed of domain name resolution, and helps maintain security policies for different types of applications. For example, requests related to MS Office cloud infrastructure will be forwarded directly from the local office to Microsoft’s CDN, while internal network server names will be resolved through the corporate DNS server. This approach significantly improves the speed of establishing connections, and eliminates the need for manual configuration of routers in every office. Instead, a single, unified policy is sufficient for the entire network.
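The idea of one unified policy that sends different names to different resolvers can be sketched as a suffix lookup. This is an illustration only: the domain suffixes and resolver addresses below are hypothetical, and the code does not reflect the actual configuration format of Kaspersky SD-WAN.

```python
# Hypothetical policy: domain suffix -> resolver address.
DNS_POLICY = {
    "corp.example": "10.0.0.53",   # internal names go to the corporate DNS server
    "office.com": "192.0.2.53",    # cloud application names resolve via the local uplink
}
DEFAULT_RESOLVER = "10.0.0.53"


def pick_resolver(hostname):
    """Return the resolver for a hostname, preferring the longest matching suffix."""
    labels = hostname.lower().rstrip(".").split(".")
    # Walk from the full name towards the top-level label so specific entries win.
    for start in range(len(labels)):
        suffix = ".".join(labels[start:])
        if suffix in DNS_POLICY:
            return DNS_POLICY[suffix]
    return DEFAULT_RESOLVER
```

Because the table is defined once and evaluated the same way everywhere, no per-office router configuration is needed in this model.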

Any large-scale network reconfiguration increases the risk of interruptions and outages — even if brief. To ensure such an event doesn’t disrupt critical business processes, any policy change within Kaspersky SD-WAN can be scheduled for a specific time. Want to change the router settings in a hundred offices simultaneously? Schedule the change for 02:00 local time or Saturday morning. This eliminates the need for regional IT staff to be physically present during the deployment.

Analysis of BGP routing can now be done entirely through the orchestrator’s graphical interface. Did a routing loop suddenly appear somewhere between the Milan and Paris offices? Instead of logging into the equipment in each office and all intermediary nodes via SSH, you can now identify and resolve the issue through a single interface — significantly reducing downtime.

If the network equipment in an office needs to be replaced, you can now preserve all existing settings when swapping it out. The technician in the office simply plugs in the new CPE unit, and the Kaspersky SD-WAN orchestrator automatically restores all policies and tunnels on it. This offers several immediate benefits: it significantly reduces downtime; the replacement can be performed by a technician without deep expert knowledge of network protocols; and it substantially reduces the probability of additional failures caused by manual configuration errors.

While often the fastest and most cost-effective corporate communication channel to deploy, LTE comes with a drawback: instability. Both cellular coverage and operational speed can fluctuate frequently, requiring network engineers to take action — such as relocating the CPE to an area with better reception. Now, you can make these decisions with diagnostic data collected directly within the orchestrator. It displays the service parameters of connected LTE devices, including the signal strength level.

For companies with the most stringent requirements for fault tolerance and recovery time, specialized CPE variants equipped with a small built-in power source are available by special order. In the event of a power failure, the CPE will be able to send detailed data about the failure type to the orchestrator. This gives administrators time to investigate the cause so they can resolve the issue much faster.

These are just some of the innovations in Kaspersky SD-WAN. Others include the ability to configure security policies for connections to the CPE console port, and support for large-scale networks with 2000+ CPEs and load balancing across multiple orchestrators. To learn more about how all these new features increase the value of SD-WAN for your organization, our experts are available to provide a personalized demo. The solution is available in select regions.

Kaspersky official blog – Read More

In the late 1960s, the science fiction author Philip K. Dick wrote “Do Androids Dream of Electric Sheep?” which, among other themes, explored the traits that distinguish humans from autonomous robots. As advances in generative AI allow us to create autonomous agents that are able to reason and act on humans’ behalf, we must consider which human traits and knowledge agentic AI systems need in order to act autonomously, reasonably, and safely.

One skill we need to impart to our AI agents is the ability to stay safe when navigating the internet. If agentic AI systems are interacting with websites and APIs in the same way as a human internet user, they need to be aware that not all websites or public APIs are trustworthy, nor is user-supplied input. Therefore, we must empower our AI agents with the ability to make appropriate cyber hygiene decisions. In an agentic world, it is for the autonomous agent to decide if it is safe and appropriate to “click the link.”

The threat landscape is constantly shifting, so there are no hard and fast rules that we can teach AI systems about what is a safe link and what is not. AI agents must verify the disposition of links in real time to determine if something is malicious.

There are many emerging approaches to building AI workflow systems that can integrate multiple sources of information to allow an AI agent to come to a decision about an appropriate course of action. In this blog, I show how it is possible to use one of these frameworks, LangChain, with OpenAI to enable an AI agent to access real-time threat intelligence via the Cisco Umbrella API.

To implement this example you will need API keys for Cisco Umbrella and a paid OpenAI account.

Follow along with the full sample code, which can be found in Talos’ GitHub repository.

First, we need to describe the tool to the AI agent.

Then we include the newly described tool in the list of available tools.

Next, we create the large language model (LLM) instance that we will use. This example uses GPT-3.5-Turbo from OpenAI, but other LLMs are supported.

Now, let’s give instructions to the LLM, describing what the LLM should do using natural language structured in a Question, Thought, Action, Observation format.
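The article's actual prompt lives in the sample repository and is not reproduced here. As a hedged illustration of the Question, Thought, Action, Observation structure, the sketch below builds a generic ReAct-style prompt with plain string formatting. The wording of the template, the `build_prompt` helper, and the `domain_check` tool name are assumptions made for this example.

```python
# Generic ReAct-style template; the wording is an assumption, not the article's prompt.
REACT_TEMPLATE = """Answer the question as best you can. You have access to these tools:

{tools}

Use the following format:

Question: the input question you must answer
Thought: think about what to do next
Action: the action to take, one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (Thought/Action/Action Input/Observation can repeat)
Thought: I now know the final answer
Final Answer: the final answer to the original question

Question: {input}
Thought: {agent_scratchpad}"""


def build_prompt(question, tools):
    """Fill the template from a mapping of tool name -> description."""
    tool_lines = "\n".join(f"{name}: {desc}" for name, desc in tools.items())
    return REACT_TEMPLATE.format(
        tools=tool_lines,
        tool_names=", ".join(tools),
        input=question,
        agent_scratchpad="",
    )
```

In a framework such as LangChain the same placeholders would typically be filled in by the agent at run time rather than by hand.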

Create the agent and the executor instance that we will interact with.

As part of querying the Umbrella API, we must obtain a session token to pass to the Umbrella API with our request. This is obtained from an authentication call using our API key and secret.
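A minimal sketch of preparing such an authentication call is shown below. It only builds the request object and does not send it. The endpoint URL and the use of HTTP Basic credentials with a `client_credentials` grant are assumptions based on a typical OAuth 2.0 flow, so verify them against the current Umbrella API documentation; `build_token_request` is a hypothetical helper name.

```python
import base64
import urllib.request

# Assumed token endpoint; confirm against the Umbrella API documentation.
TOKEN_URL = "https://api.umbrella.com/auth/v2/token"


def build_token_request(api_key, api_secret):
    """Build (but do not send) a POST request carrying Basic credentials."""
    credentials = f"{api_key}:{api_secret}".encode("utf-8")
    basic = base64.b64encode(credentials).decode("ascii")
    return urllib.request.Request(
        TOKEN_URL,
        data=b"grant_type=client_credentials",
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen` and reading the token from the JSON response would be the next step; the exact response field name should also be checked in the documentation.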

Next, let’s define the tool that we have described to the AI system. It accepts input text as a parameter and checks for the presence of any domains. If any are found, the disposition of each one is checked.

The key functionality within the above code is “getDomainDisposition” which passes the domain to the Umbrella API to retrieve the disposition and categorization information about the domain.
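The tool described above can be sketched roughly as follows. This is not the code from the Talos repository: the regular expression, the report wording, and the `fake_lookup` stub (which stands in for “getDomainDisposition” and its call to the Umbrella API) are assumptions made so the example runs without network access.

```python
import re

# Simplified pattern for dotted hostnames; real-world extraction needs more care.
DOMAIN_RE = re.compile(
    r"\b(?:[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?\.)+[a-z]{2,}\b",
    re.IGNORECASE,
)


def check_domains(text, lookup):
    """Find domains in text and describe each one using the supplied lookup."""
    # dict.fromkeys removes duplicates while keeping first-seen order.
    domains = list(dict.fromkeys(m.group(0).lower() for m in DOMAIN_RE.finditer(text)))
    if not domains:
        return "No URLs found."
    lines = ["This contains a URL."]
    for domain in domains:
        disposition, categories = lookup(domain)
        lines.append(f"The domain {domain} has a {disposition} disposition.")
        if categories:
            lines.append(f"The domain {domain} is classified as: {', '.join(categories)}.")
    return " ".join(lines)


def fake_lookup(domain):
    """Hypothetical stand-in for a real disposition lookup."""
    known = {"www.cisco.com": ("positive", ["Computers and Internet"])}
    return known.get(domain, ("unknown", []))
```

Passing the lookup in as a parameter keeps the text handling testable on its own and lets the real API call be swapped in later.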

We can now pass input text to “agent_executor” to discover the agent’s opinion.

This gives the response:

“Agent Response: www.cisco.com is safe to browse.”

Reassuringly, the agent reports that “cisco.com” is safe to connect to. If necessary, we can output the domain disposition report to see the logic by which the system arrives at this conclusion:

“This contains a URL. Considering www.cisco.com. The domain www.cisco.com has a positive disposition. The domain www.cisco.com is classified as: Computers and Internet, Software/Technology. Known malicious domains are never safe, domains with positive disposition are usually safe. A domain with an unknown disposition might be safe if it is categorized.”
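The guidance quoted in that report can also be written down as a small deterministic rule, which is handy for sanity-checking the agent's answers. The function below is a hypothetical illustration: in the article the LLM applies this guidance in natural language, and the verdict strings here are invented for the example.

```python
def verdict(disposition, categories):
    """Map a disposition and category list to a coarse verdict."""
    if disposition == "negative":
        return "do not connect"   # known malicious domains are never safe
    if disposition == "positive":
        return "safe"             # positive disposition is usually safe
    if disposition == "unknown" and categories:
        return "possibly safe"    # unknown but categorized might be safe
    return "uncertain"
```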

Let’s try a different domain which is known to be malicious.

“Agent Response: do not connect”

When provided with a known malicious domain, the system identifies that the domain has a negative disposition and concludes that this is not a domain which is safe for connection.

Now let’s try input text with two domains.

“Agent Response: www.umbrella.com is safe to connect to. test.example.com has an unknown disposition, so it is uncertain if it is safe to connect to.”

The system is able to provide separate advice for each domain when supplied with input containing a domain with a positive disposition and one with an unknown disposition.

Finally, let’s see what happens when we pose an unrelated question without any domains.

“Agent Response: no opinion”

Examining the logic shows that the system made the correct decision not to attempt to answer the question.

“No URLs found. Since no internet domains were found in the user input, I have no information to assess the safety of any websites.”

This is very much proof-of-concept code, but it does show how we can integrate APIs offering real-time authoritative facts, such as the security disposition of domains from Umbrella, into the decision making process of AI agents.

There are other approaches that we can use to arrive at the same result. We could put the AI agent behind a web security gateway or require the agent to use Umbrella DNS, which would enforce the restriction not to connect to malicious sites. However, to do so removes the ability for the AI agent to learn how to make sense of potentially conflicting information and to make good decisions.

The current generation of LLM-based generative AI systems is only the beginning of the forthcoming advances in autonomous agentic AI. As part of building this next generation of AI systems, we need to ensure that they not only make good decisions, but understand cyber hygiene and have access to real-time threat intelligence on which to base their decision-making.

Cisco Talos Blog – Read More

Big news from the ANY.RUN team: we’ve just been named the 2025 “Trailblazing Threat Intelligence” winner at the Top InfoSec Innovators Awards!

This recognition means a lot to us because it celebrates what we care about most: helping analysts, SOC teams, and researchers access live, actionable threat intelligence that makes a real difference in investigations every day.

The Top InfoSec Innovators Awards celebrate cybersecurity companies that shape the future of the industry with new ideas and bold technology. Now in its 13th year, the program is known worldwide for spotlighting organizations that truly move the field forward.

Winning the Trailblazing Threat Intelligence award reinforces what drives us: transforming how teams investigate and respond to cyber threats through a connected, behavioral approach to intelligence.

For our users, this award reflects the impact they experience every day:

We earned this recognition because innovation at ANY.RUN is built around real analyst needs. Instead of scattering data across multiple tools, we created an ecosystem where threat intelligence is connected, interactive, and human-centered.

Our Threat Intelligence Lookup and Threat Intelligence Feeds bridge live malware behavior with verified indicators, giving teams instant context they can trust. Whether it’s uncovering hidden links between campaigns or enriching detections automatically, these solutions help analysts see more, decide faster, and collaborate better.

That’s what this award stands for: innovation that connects people and data to make threat intelligence more practical, powerful, and ready for what’s next.

This recognition fuels our drive to keep innovating.

In the coming months, we’re expanding our Threat Intelligence products with even deeper enrichment, new integrations for SIEM and SOAR platforms, and broader OS coverage.

But most importantly, we’ll keep growing together with our community: the analysts, researchers, and security teams who make ANY.RUN what it is today. Every sample executed, every IOC shared, every insight contributed helps make global defense stronger.

So, this win is yours as much as it is ours.

Experience threat intelligence that helps analysts act 21 minutes faster per case and uncover 24× more IOCs per incident.

With behavior-driven data and real-world context, ANY.RUN turns every investigation into clear, actionable insight.

Book a live demo and see how connected intelligence can sharpen your team’s response.

ANY.RUN, a leading provider of interactive malware analysis and threat intelligence solutions, makes advanced investigation fast, visual, and accessible.

The service processes millions of analysis sessions and is trusted by 15,000+ organizations and over 500,000 cybersecurity professionals worldwide.

Teams using ANY.RUN report tangible gains: up to 3× higher SOC efficiency, 90% faster detection of unknown threats, and a 60% reduction in false positives thanks to real-time interactivity and behavior-based analysis.

Explore ANY.RUN’s capabilities with a 14-day trial

The post ANY.RUN Wins Trailblazing Threat Intelligence at the 2025 Top InfoSec Innovators Awards appeared first on ANY.RUN’s Cybersecurity Blog.

ANY.RUN’s Cybersecurity Blog – Read More

The year is 2024. A team of scientists from both the University of California San Diego and the University of Maryland, College Park, discovers an unimaginable danger looming over the world — its source hiding in space. They start sounding the alarm, but most people simply ignore them…

No, this isn’t the plot of the Netflix hit movie Don’t Look Up. This is the sudden reality in which we find ourselves following the publication of a study confirming that corporate VoIP conversations, military operation data, Mexican police records, private text messages and calls from mobile subscribers in both the U.S. and Mexico, and dozens of other types of confidential data are being broadcast unencrypted via satellites for thousands of miles. And to intercept it, all you need is equipment costing less than US$800: a simple satellite-TV receiver kit.

Today, we explore what might have caused this negligence, if it’s truly as easy to extract the data from the stream as described in a Wired article, why some data operators ignored the study and took no action, and, finally, what we can do to ensure our own data doesn’t end up on these vulnerable channels.

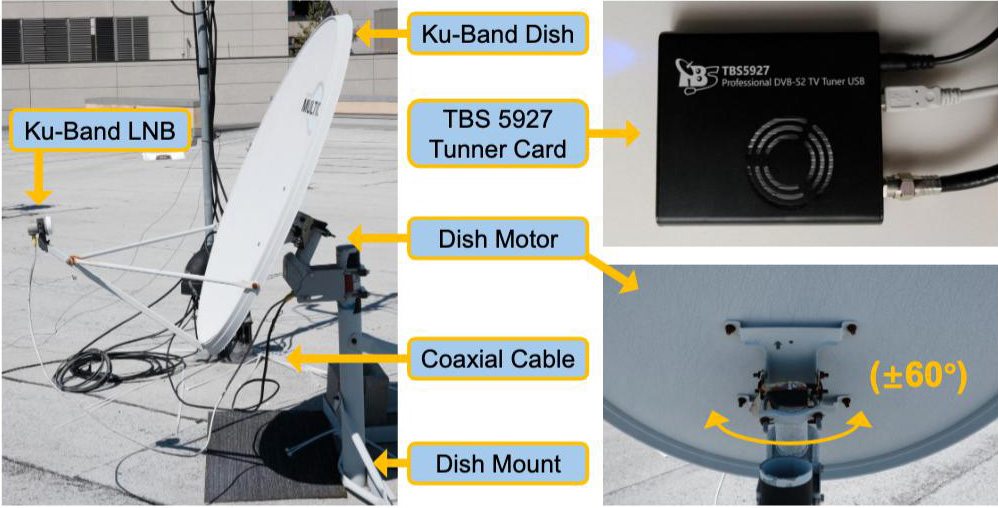

Six researchers set up a standard geostationary satellite-TV antenna — the kind you can buy from any satellite provider or electronics store — on the university roof in the coastal La Jolla area of San Diego, Southern California. The researchers’ no-frills rig set them back a total of US$750: $185 for the satellite dish and receiver, $140 for the mounting hardware, $195 for the motorized actuator to rotate the antenna, and $230 for a TBS5927 USB-enabled TV tuner. It’s worth noting that in many other parts of the world, this entire kit likely would have cost them much less.

What distinguished this kit from the typical satellite-TV antenna likely installed outside your own window or on your roof was the motorized dish actuator. This mechanism allowed them to reposition the antenna to receive signals from various satellites within their line of sight. Geostationary satellites, used for television and communications, orbit above the equator and move at the same angular velocity as the Earth. This ensures they remain stationary relative to the Earth’s surface. Normally, once you point your antenna at your chosen communication satellite, you don’t need to move it again. However, the motorized drive allowed the researchers to quickly redirect the antenna from one satellite to another.
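
The requirement that a satellite match the Earth's angular velocity pins down how high it has to fly. Here is a minimal sketch of that calculation, assuming a circular orbit, a spherical Earth, and textbook constants; the function names are ours and purely illustrative. It also estimates what share of the Earth's surface has a geometric line of sight to a satellite at that height.

```python
import math

GM_EARTH = 3.986004418e14      # Earth's gravitational parameter, m^3/s^2
SIDEREAL_DAY_S = 86164.0905    # one rotation of the Earth relative to the stars, s
EARTH_RADIUS_M = 6378137.0     # equatorial radius, m


def geostationary_radius_m() -> float:
    """Orbit radius at which the orbital angular velocity equals Earth's spin."""
    omega = 2 * math.pi / SIDEREAL_DAY_S          # rad/s
    # Circular orbit: GM / r^2 = omega^2 * r  =>  r = (GM / omega^2)^(1/3)
    return (GM_EARTH / omega**2) ** (1 / 3)


def visible_surface_fraction(orbit_radius_m: float) -> float:
    """Fraction of a spherical Earth with geometric line of sight to the satellite."""
    # Area of the spherical cap bounded by the satellite's horizon.
    return (1 - EARTH_RADIUS_M / orbit_radius_m) / 2


radius = geostationary_radius_m()
altitude_km = (radius - EARTH_RADIUS_M) / 1000
fraction = visible_surface_fraction(radius)
print(f"altitude above the equator: {altitude_km:.0f} km")
print(f"surface within line of sight: {fraction:.1%}")
```

Under these assumptions the altitude comes out at roughly 35,800 km, and a little over 42% of the surface is within line of sight. That is a geometric upper bound only: real transponder beams are shaped to cover specific regions, and reception near the edge of the visible disk is poor.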

Every geostationary satellite is equipped with numerous data transponders used by a variety of telecom operators. From their vantage point, the scientists managed to capture signals from 411 transponders across 39 geostationary satellites, successfully obtaining IP traffic from 14.3% of all Ku-band transponders worldwide.

The researchers were able to use their simple US$750 rig to examine traffic from nearly 15% of all active satellite transponders worldwide. Source

The team first developed a proprietary method for precise antenna self-alignment, which significantly improved signal quality. Between August 16 and August 23, 2024, they performed an initial scan of all 39 visible satellites. They recorded signals lasting three to ten minutes from every accessible transponder. After compiling this initial data set, the scientists continued with periodic selective satellite scans and lengthy, targeted recordings from specific satellites for deeper analysis — ultimately collecting a total of more than 3.7TB of raw data.

The researchers wrote code to parse data transfer protocols and reconstruct network packets from the raw captures of satellite transmissions. Month after month, they meticulously analyzed the intercepted traffic, growing increasingly concerned with each passing day. They found that half (!) of the confidential traffic broadcast from these satellites was completely unencrypted. Considering that there are thousands of transponders in geostationary orbit, and the signal from each one can, under favorable conditions, be received across an area covering up to 40% of the Earth’s surface, this story is genuinely alarming.
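
To give a feel for what “reconstructing network packets” involves, here is a minimal sketch of the very last step only: decoding an IPv4 header once the bytes of a packet have been recovered. It is not the researchers' code. Their tooling also had to handle the satellite link-layer framing, which we don't cover here. The function name and the sample packet (built from reserved documentation addresses) are our own illustrative assumptions.

```python
import ipaddress
import struct


def parse_ipv4_header(packet: bytes) -> dict:
    """Decode the fixed 20-byte part of an IPv4 header (RFC 791 layout)."""
    if len(packet) < 20:
        raise ValueError("too short for an IPv4 header")
    (version_ihl, _tos, total_length, _ident, _flags_frag,
     ttl, protocol, _checksum, src, dst) = struct.unpack(
        "!BBHHHBBH4s4s", packet[:20]
    )
    version = version_ihl >> 4
    if version != 4:
        raise ValueError(f"not IPv4 (version field is {version})")
    return {
        "header_length": (version_ihl & 0x0F) * 4,   # IHL is in 32-bit words
        "total_length": total_length,
        "ttl": ttl,
        "protocol": protocol,                        # 6 = TCP, 17 = UDP
        "src": str(ipaddress.IPv4Address(src)),
        "dst": str(ipaddress.IPv4Address(dst)),
    }


# Made-up sample: a 20-byte header followed by 8 bytes of zeroed payload.
sample = struct.pack(
    "!BBHHHBBH4s4s",
    0x45, 0, 28, 0, 0, 64, 17, 0,
    bytes([192, 0, 2, 1]), bytes([198, 51, 100, 7]),
) + b"\x00" * 8

header = parse_ipv4_header(sample)
print(header["src"], "->", header["dst"], "protocol", header["protocol"])
```

If the payload behind such a header is unencrypted, everything after it (DNS queries, VoIP signaling, plain HTTP) is readable by anyone who receives the signal.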

Pictured at the UC San Diego rooftop setup, from left to right: Annie Dai, Aaron Schulman, Keegan Ryan, Nadia Heninger, and Morty Zhang. Not pictured: Dave Levin. Source

The geostationary satellites were found to be broadcasting an immense and varied amount of highly sensitive data completely unencrypted. The intercepted traffic included:

While most of this data seems to have been left unencrypted due to sheer negligence or a desire to cut costs (which we’ll discuss later), the presence of cellular data in the satellite network has a slightly more intriguing origin. This issue stems from what is known as backhaul traffic, which connects remote cell towers to the rest of the network. Many towers located in hard-to-reach areas communicate with the main cellular network via satellites: the tower beams its signal up to the satellite, which relays it to the operator’s network, and traffic bound for the tower travels the same path in reverse. Crucially, the unencrypted traffic the researchers intercepted was the data being transmitted from the satellite back down to the remote cell tower. This provided them access to things like SMS messages and portions of voice traffic flowing through that link.

It’s time for our second reference to the modern classic by Adam McKay. The movie Don’t Look Up is a satirical commentary on our reality — where even an impending comet collision and total annihilation cannot convince people to take the situation seriously. Unfortunately, the reaction of critical infrastructure operators to the scientists’ warnings proved to be strikingly similar to the movie plot.

Starting in December 2024, the researchers began notifying the companies whose unencrypted traffic they’d successfully intercepted and identified. To gauge the effectiveness of these warnings, the team conducted a follow-up scan of the satellites in February 2025 and compared the results. They found that by no means all operators had taken action to fix the issues. Therefore, after waiting nearly a year, the scientists decided to publicly release their study in October 2025, detailing both the interception procedure and the operators’ disappointing response.

The researchers stated that they were only publishing information about the affected systems after the problem had been fixed or after the standard 90-day waiting period for disclosure had expired. For some systems, an information disclosure embargo was still in effect at the time of the study’s publication, so the scientists plan to update their materials as clearance allows.

Among those who failed to address the notifications were: the operators of unnamed critical infrastructure facilities, the U.S. Armed Forces, Mexican military and law enforcement agencies, as well as Banorte, Telmex, and Banjército.

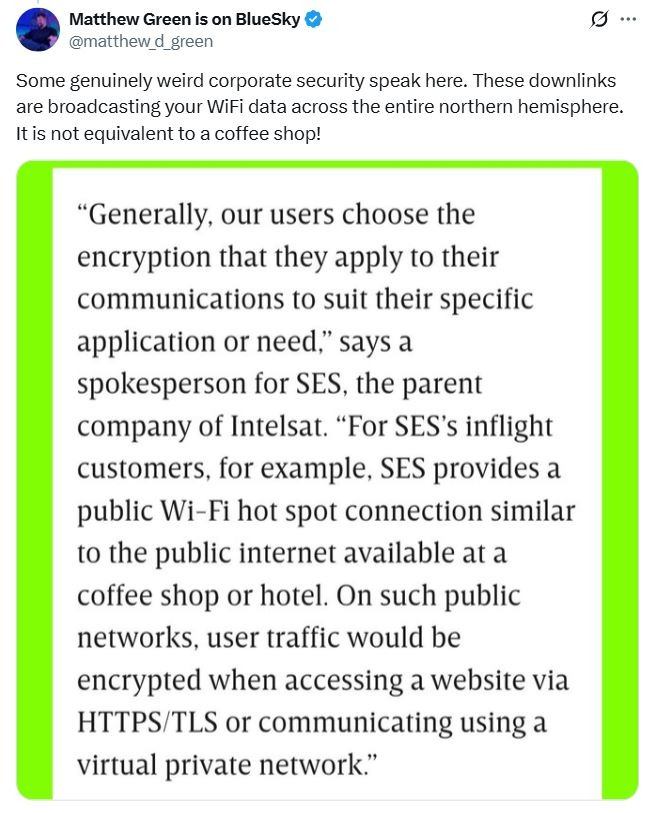

When questioned by Wired about the incident, in-flight Wi-Fi providers responded vaguely. A spokesperson for Panasonic Avionics Corporation said the company welcomed the findings by the researchers, but claimed they’d found that several statements attributed to them were either inaccurate or misrepresented the company’s position. The spokesperson didn’t specify what exactly it was that the company considered inaccurate. “Our satellite communications systems are designed so that every user-data session follows established security protocols,” the spokesperson said. Meanwhile, a spokesperson for SES (the parent company of Intelsat) completely shifted responsibility onto the users, saying, “Generally, our users choose the encryption that they apply to their communications to suit their specific application or need,” effectively equating using in-flight Wi-Fi with connecting to a public hotspot in a café or hotel.

The SES spokesperson’s response to Wired, along with a comment by Matthew Green, an associate professor of computer science at Johns Hopkins University in Baltimore. Source

Fortunately, there were also many appropriate responses, primarily within the telecommunications sector. T-Mobile encrypted its traffic within just a few weeks of being notified by the researchers. AT&T Mexico also reacted immediately, fixing the vulnerability and stating it was caused by a misconfiguration of some towers by a satellite provider in Mexico. Walmart-Mexico, Grupo Santander Mexico, and KPU Telecommunications all approached the security issue diligently and conscientiously.

According to the researchers, data operators have a variety of reasons — ranging from technical to financial — for avoiding encryption.

It’s highly likely they simply did not know how to respond. It’s difficult to believe that such a massive vulnerability could remain unnoticed for decades, so it’s possible the problem was intentionally left unaddressed. The researchers note that no single, unified entity is responsible for overseeing data encryption on geostationary satellites. Each time they discovered confidential information in their intercepted data, they had to expend considerable effort to identify the responsible party, establish contact, and disclose the vulnerability.

Some experts are comparing the media impact of this research to the declassified Snowden archives, given that the interception techniques used could be deployed for worldwide traffic monitoring. We can also liken this case to the infamous Jeep hack, which completely upended cybersecurity standards in the automotive industry.

We cannot exclude the possibility that this entire issue stems from simple negligence and wishful thinking — a reliance on the assumption that no one would ever “look up”. Data operators may have treated satellite communication as a trusted, internal network link where encryption was simply not a mandatory standard.

For regular users, the recommendations are similar to those we give for using any unsecured public Wi-Fi access point. Unfortunately, while we can encrypt the internet traffic originating from our devices ourselves, the same cannot be done for cellular voice data and SMS messages.

Kaspersky official blog – Read More

Think you could never fall for an online scam? Think again. Here’s how scammers could exploit psychology to deceive you – and what you can do to stay one step ahead

WeLiveSecurity – Read More

ANY.RUN’s malware analysis and threat intelligence products are used by 15K SOCs and 500K analysts. Thanks to flexible API/SDK and ready-made connectors, they seamlessly integrate with security teams’ existing software to expand threat coverage, reduce MTTR, and streamline performance.

Here’s how ANY.RUN’s solutions can transform your security.

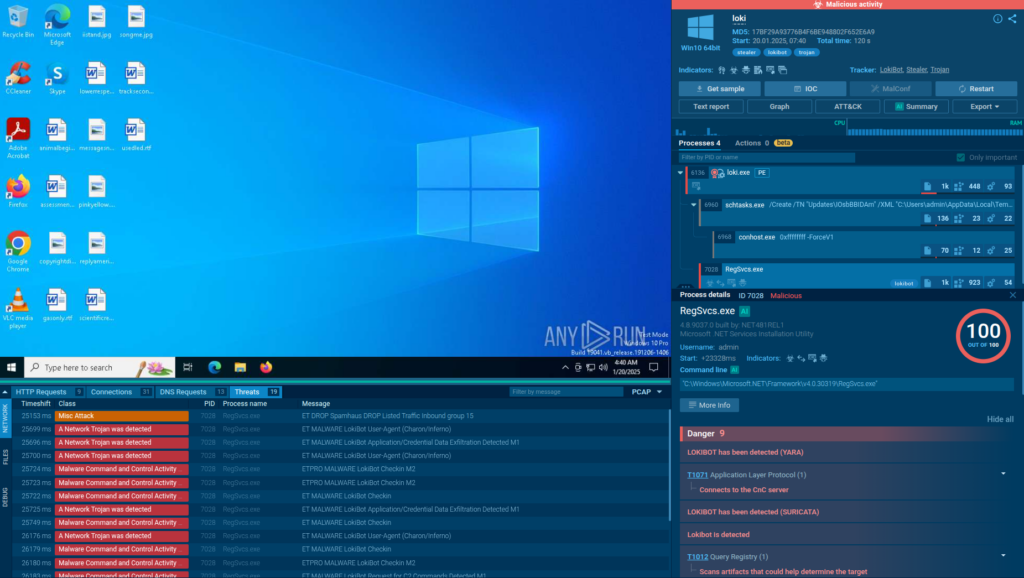

ANY.RUN’s Interactive Sandbox provides a real-time, cloud-based environment for detonating and analyzing suspicious files, URLs, and scripts across Windows, Linux, and Android systems. It lets analysts perform user actions like launching executables or opening links needed to trigger kill chains and force hidden payloads to reveal themselves, enabling faster detection and response.

The sandbox integrates with other solutions like SOAR platforms in an automated mode, which means it can fully detonate complex phishing and malware attacks on its own, including by solving CAPTCHAs and scanning QR codes.

The sandbox delivers immediate, actionable insights into the most evasive threats without risking production systems.

If your solution is not on the list, you can easily set up a custom integration using ANY.RUN’s API or Python-based SDK (see docs on GitHub or PyPI).

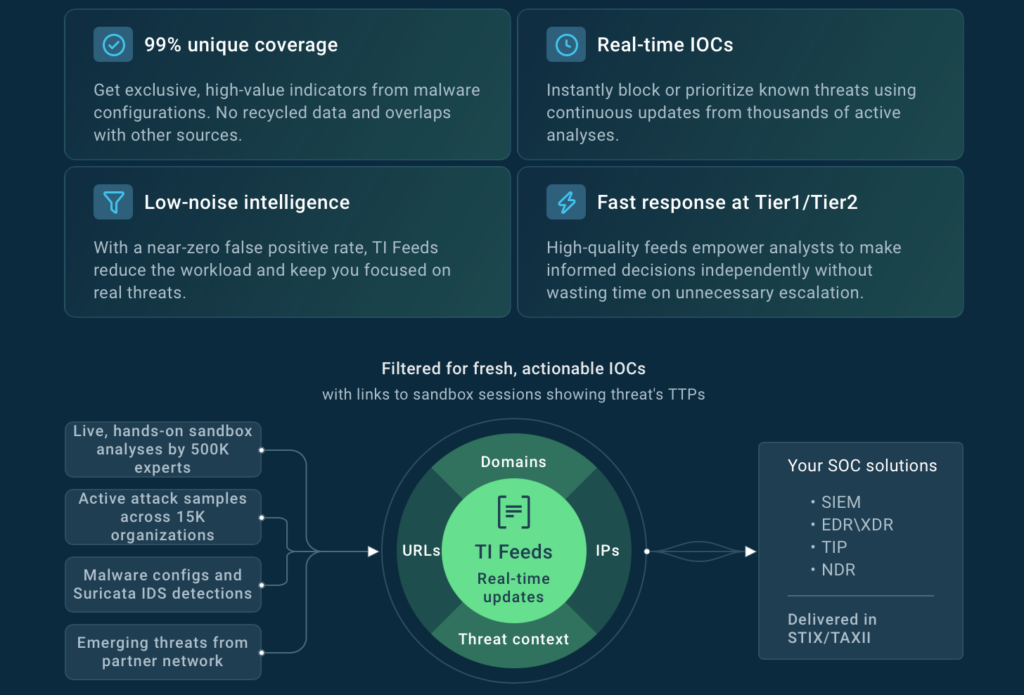

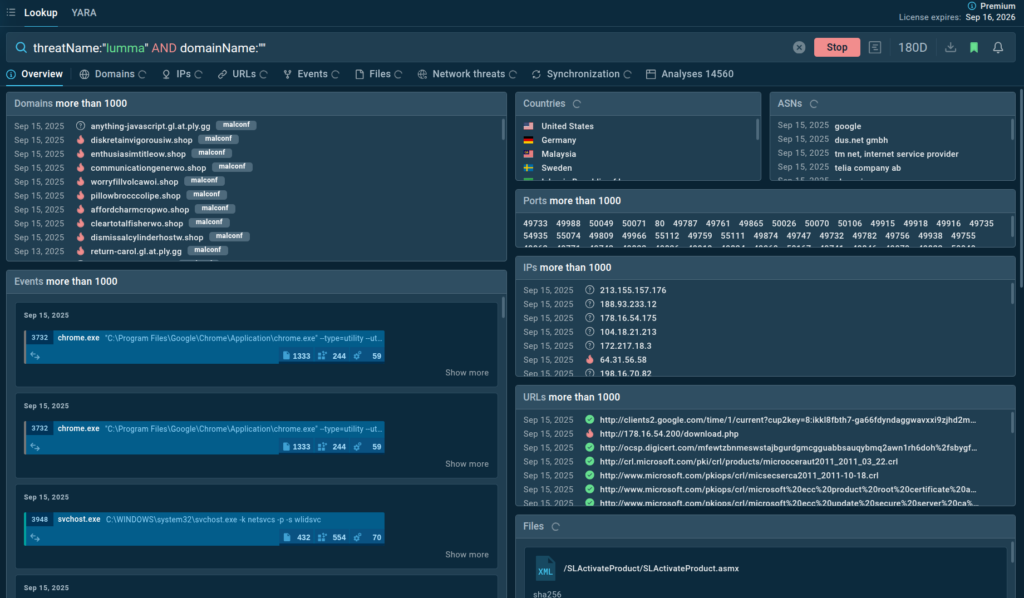

Threat Intelligence Feeds deliver real-time, high-confidence malicious indicators (IPs, domains, URLs) supplied in STIX/TAXII. The indicators are sourced from analyses of the latest malware and phishing attacks performed by 15,000 organizations and 500,000 analysts in ANY.RUN’s Interactive Sandbox.

Because they are powered by one of the largest malware analysis communities, these feeds are updated in real time and provide 99% unique IOCs that are not found in other sources.

As a result, they give SOCs up-to-date visibility into threats almost as soon as they emerge. With TI Feeds, security teams can:

If your solution is not on the list, you can easily set up a custom integration using ANY.RUN’s API or Python-based SDK (see docs on GitHub or PyPI).
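
For teams building such a custom integration, the consuming side of a STIX feed can be quite small. Below is a minimal sketch, using only the Python standard library, of pulling simple indicators out of a STIX 2.1 bundle. It is not ANY.RUN's SDK and it does not show the TAXII transport. The function name is ours, the sample bundle is made up for illustration (identifiers shortened, required properties omitted), and a real feed may use richer patterns and extra fields that this sketch ignores.

```python
import json
import re

# Matches only simple single-comparison STIX patterns such as
# [ipv4-addr:value = '203.0.113.5']; compound patterns are skipped.
SIMPLE_PATTERN = re.compile(r"^\[\s*([a-z0-9-]+):value\s*=\s*'([^']+)'\s*\]$")


def extract_indicators(bundle_json: str) -> list[tuple[str, str]]:
    """Return (object type, value) pairs for simple indicators in a bundle."""
    bundle = json.loads(bundle_json)
    found = []
    for obj in bundle.get("objects", []):
        if obj.get("type") != "indicator":
            continue
        if obj.get("pattern_type", "stix") != "stix":
            continue
        match = SIMPLE_PATTERN.match(obj.get("pattern", ""))
        if match:
            found.append((match.group(1), match.group(2)))
    return found


# Made-up sample data, not real feed output.
SAMPLE_BUNDLE = json.dumps({
    "type": "bundle",
    "id": "bundle--00000000-0000-4000-8000-000000000001",
    "objects": [
        {"type": "indicator", "pattern_type": "stix",
         "pattern": "[ipv4-addr:value = '203.0.113.5']"},
        {"type": "indicator", "pattern_type": "stix",
         "pattern": "[domain-name:value = 'malicious.example']"},
        {"type": "indicator", "pattern_type": "stix",
         "pattern": "[url:value = 'http://malicious.example/payload']"},
        {"type": "malware", "name": "placeholder-family"},
    ],
})

for kind, value in extract_indicators(SAMPLE_BUNDLE):
    print(kind, value)
```

From here, the extracted pairs can be pushed into whatever blocklist or enrichment store your own platform exposes.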

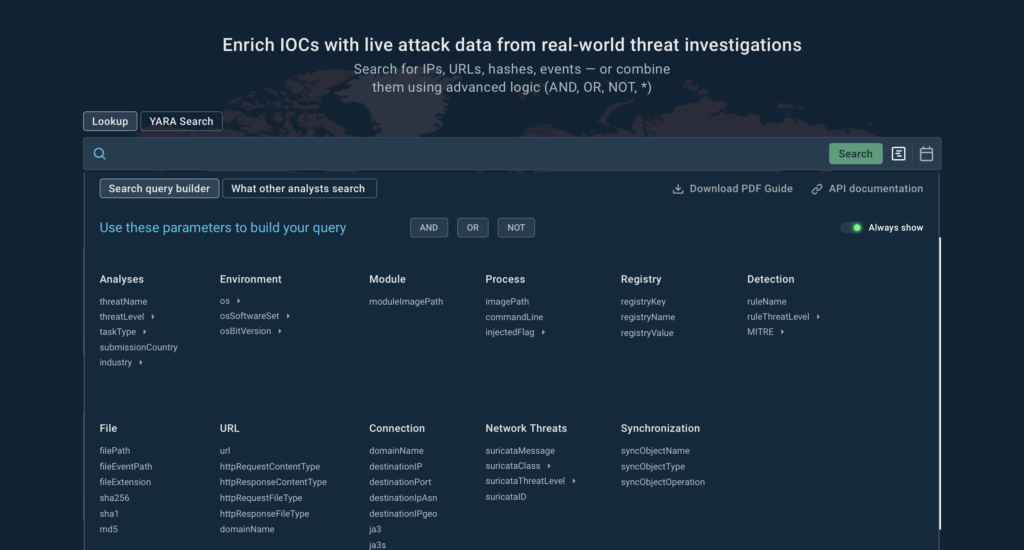

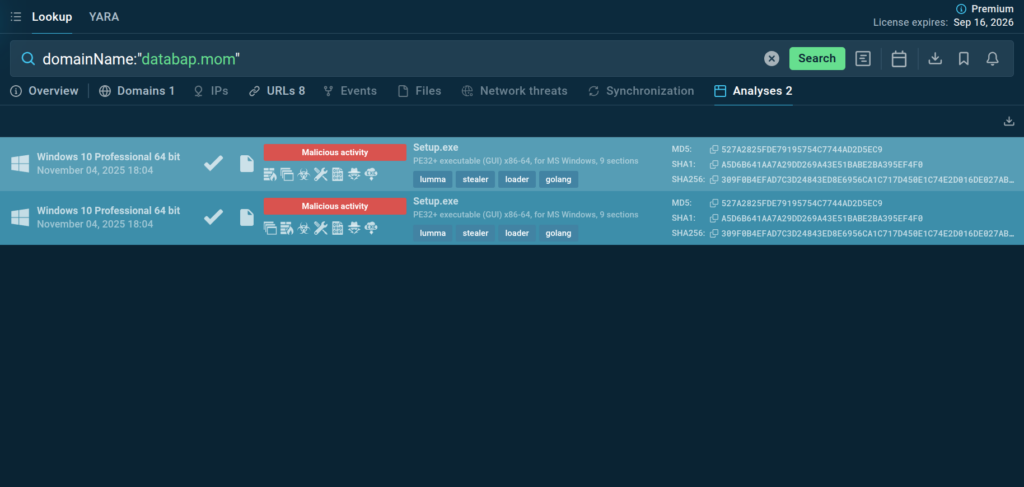

Threat Intelligence Lookup is a powerful solution designed to streamline and accelerate malware investigations, from proactive monitoring to incident response.

SOC teams can use it to quickly get actionable context for over 40 different types of Indicators of Compromise (IOCs), Attack (IOAs), and Behavior (IOBs), from an IP address and a domain to a mutex and a process name.

Each indicator in TI Lookup’s database is linked to the sandbox session where it was observed, providing analysts with a complete view of the attack, including its TTPs.

If your solution is not on the list, you can easily set up a custom integration using ANY.RUN’s API or Python-based SDK (see docs on GitHub or PyPI).

Whether you want to uncover hidden threats in seconds, catch emerging attacks, or enrich alerts with actionable context, ANY.RUN equips your SOC with the visibility, speed, and efficiency needed to stay ahead.

With flexible API/SDK and ready-made connectors for leading platforms, implementation is smooth, and the impact is immediate: faster MTTR, higher detection rates, and a stronger defense posture.

Feel free to reach out to us about integrating ANY.RUN’s products in your SOC at support@any.run.

Trusted by over 500,000 cybersecurity professionals and 15,000+ organizations in finance, healthcare, manufacturing, and other critical industries, ANY.RUN helps security teams investigate threats faster and with greater accuracy.

Our Interactive Sandbox accelerates incident response by allowing you to analyze suspicious files in real time, watch behavior as it unfolds, and make confident, well-informed decisions.

Our Threat Intelligence Lookup and Threat Intelligence Feeds strengthen detection by providing the context your team needs to anticipate and stop today’s most advanced attacks.

Ready to see the difference?

Start your 14-day trial of ANY.RUN today →

The post Unified Security for Fast Response: All ANY.RUN Integrations for SIEM, SOAR, EDR, and More appeared first on ANY.RUN’s Cybersecurity Blog.

ANY.RUN’s Cybersecurity Blog – Read More