Year in Review by ANY.RUN: Key Threats, Solutions, and Breakthroughs of 2025

It’s December — that time of year when we take a pause and look back at how much we’ve achieved.

If you’re reading this, chances are you’ve shared these wins with us. Maybe you’ve launched one analysis, maybe thousands. Maybe you’ve browsed our Threat Intelligence Lookup daily or just joined us. Anyhow, thanks for being here!

2025 kept all of us busy for sure. But it also brought a ton of breakthrough studies, insights, and improvements. Let’s glance back at the year and see what we accomplished together — through numbers, stories, and proud moments.

Milestones We Achieved Together in 2025

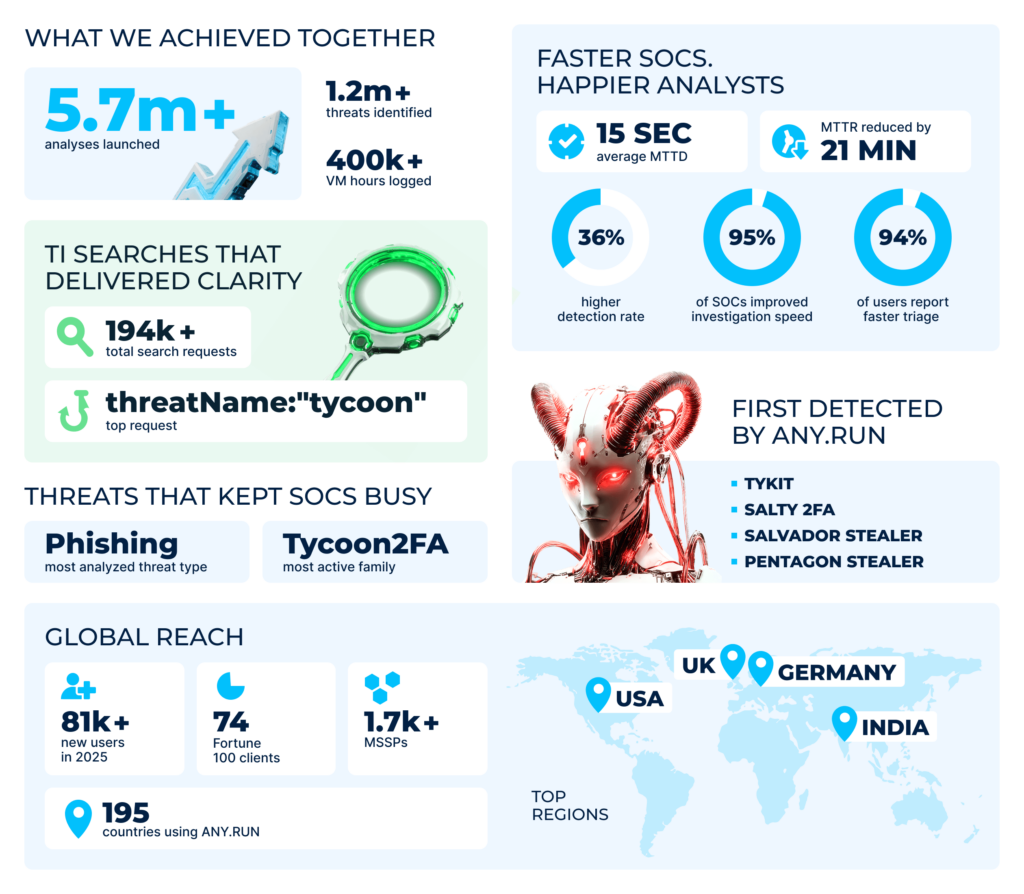

We bet it’s safe to say that no analyst was idle this year, and the numbers support this statement: the total number of analyses launched in ANY.RUN’s Interactive Sandbox across 195(!) countries exceeded 5.7 million, with 1.1 million threats uncovered in the process.

Our most active users this year were based in the US, Germany, the UK, and India. Many of them represent big enterprises. In fact, 74 of the Fortune 100 companies used our sandbox this year.

The community overall kept growing: out of 500,000+ users, 81K joined us this year, bringing new insights with them.

Altogether, ANY.RUN’s users have spent 400,000+ hours in our sandbox — that’s more than 45 years of research! Just imagine how much longer it would take without a solution built for fast and efficient analysis.
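For the curious, the arithmetic behind these figures can be checked in a few lines. This is only a back-of-the-envelope sketch: it treats the rounded numbers quoted above as exact (400,000 hours, 5.7 million analyses, 1.1 million threats), and the helper names are ours, chosen for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours in a non-leap year


def hours_to_years(hours: float) -> float:
    """Convert a number of hours into years of round-the-clock time."""
    return hours / HOURS_PER_YEAR


def share(part: float, total: float) -> float:
    """Return part as a percentage of total."""
    return 100 * part / total


# Rounded figures quoted in this article (lower bounds, treated as exact here)
sandbox_hours = 400_000
analyses = 5_700_000
threats = 1_100_000

print(f"{hours_to_years(sandbox_hours):.1f} years of continuous research")
print(f"{share(threats, analyses):.1f}% of analyses uncovered a threat")
```

With these inputs, 400,000 hours works out to roughly 45.7 years of non-stop analysis, and about 19% of sandbox sessions surfaced a threat.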

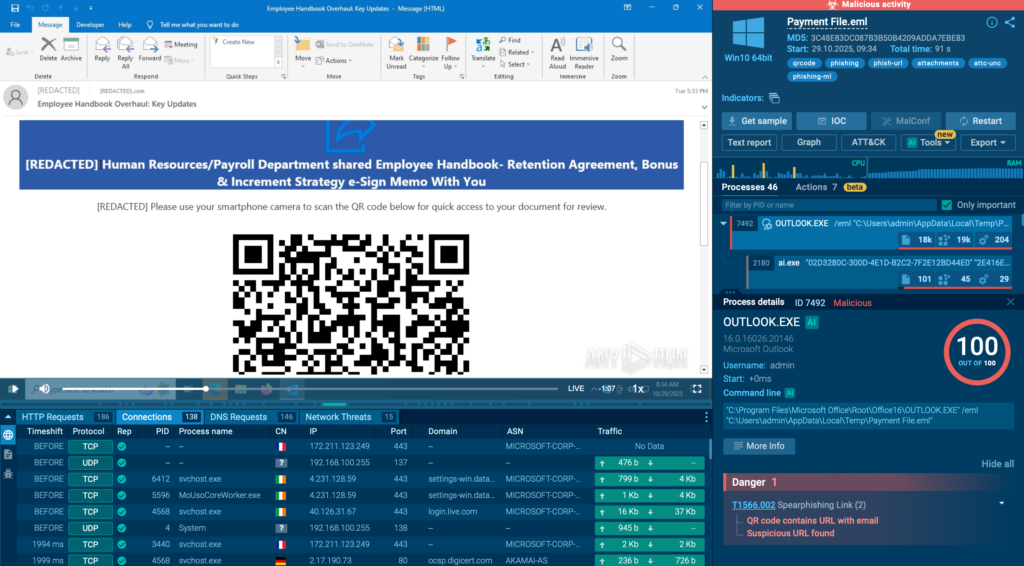

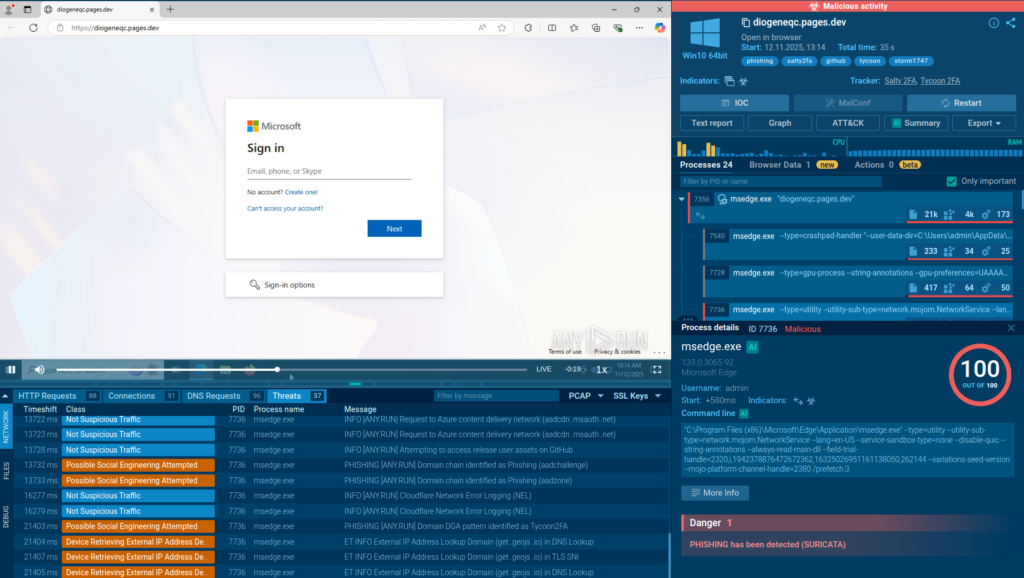

When it comes to what exactly our community analyzed most, there are no surprises: in 2025, phishing continued to reign over the threat landscape. In particular, the most active threat was Tycoon2FA.

The top suspects among file types were executables, ZIP archives, PDFs, and emails (EML and MSG). That is clear proof of how widespread both file- and email-based malware is.

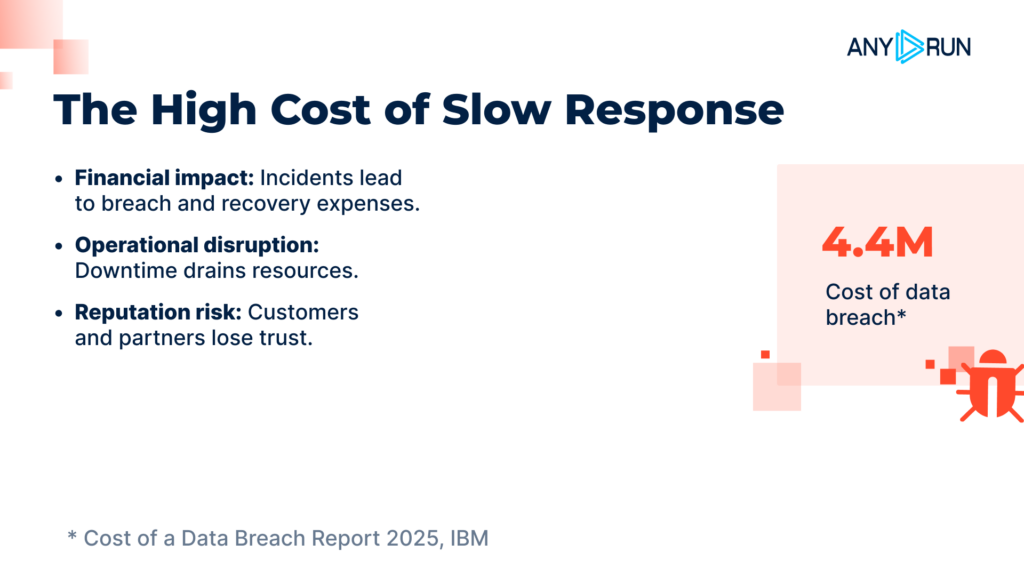

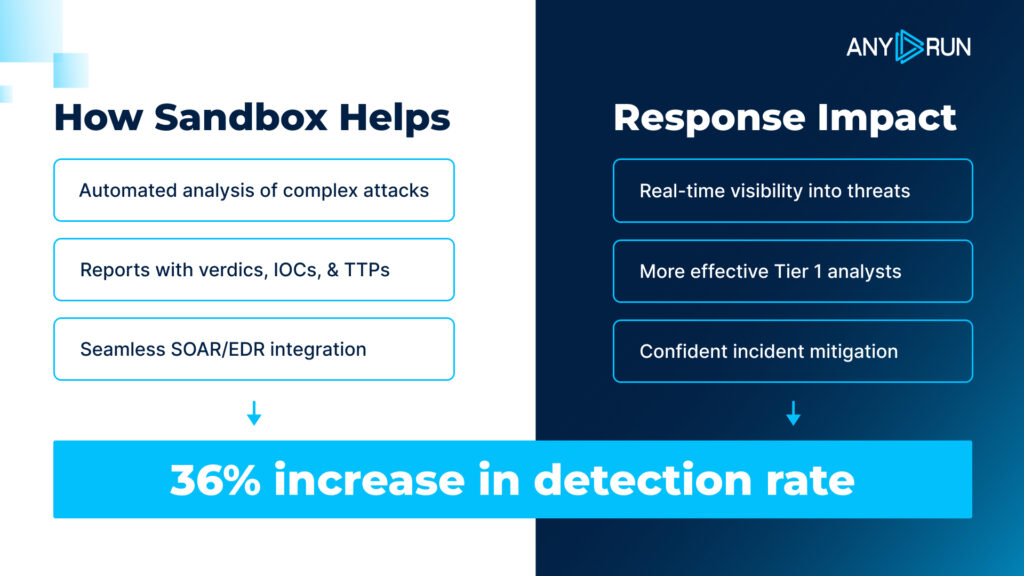

But no threat should scare an analyst equipped with strong security solutions. Here are some of the tangible results reported by ANY.RUN’s users in 2025:

| Measurable impact with ANY.RUN, 2025 |
|---|
| Average MTTD: 15 seconds |
| MTTR reduced by: 21 minutes |
| Investigation speed improved: in 95% of SOCs |

This is solid proof that our malware analysis and threat intelligence solutions change SOC workflows for the better.

Key Sandbox Updates: Driving Malware Analysis Forward

More Ways to Run Malware

This year we broadened the sandbox horizons by adding new operating systems to our VM for more flexible and realistic environments.

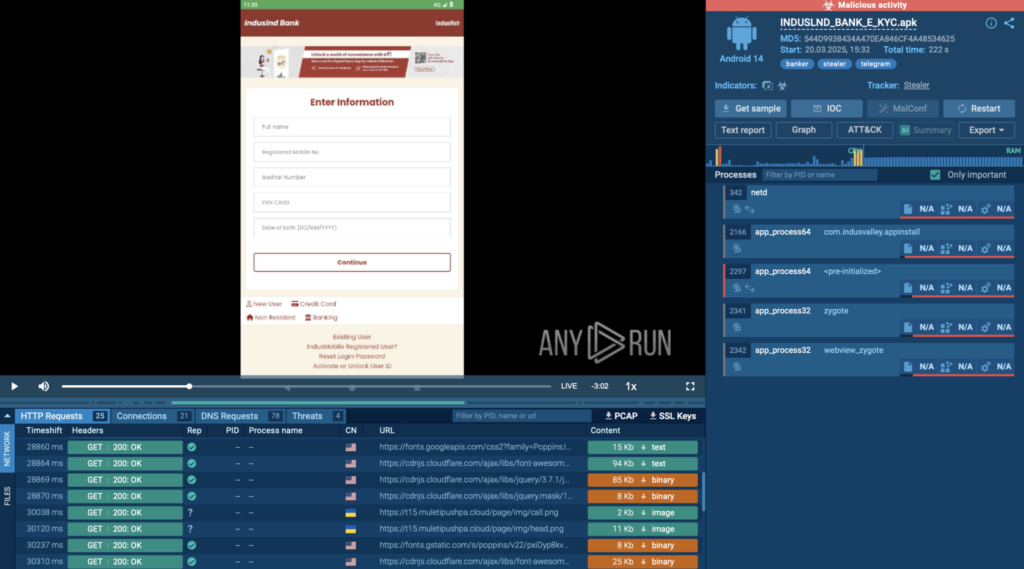

For teams tackling mobile threats, we introduced Android support. It lets you upload, interact with, and analyze APK files in ANY.RUN’s virtual machine, which closely replicates a real Android device. Great timing, since mobile threats have been pretty active this year! But more on that below.

We also added Linux Debian OS, helping you detonate ARM-based threats. Since 2025, you can run full-scale analysis of malware built for IoT devices and other ARM systems in ANY.RUN’s Interactive Sandbox.

Thanks to these and other updates, our sandbox became even more universal and useful for faster, deeper, and more reliable analysis.

Deep Analysis Made Simple

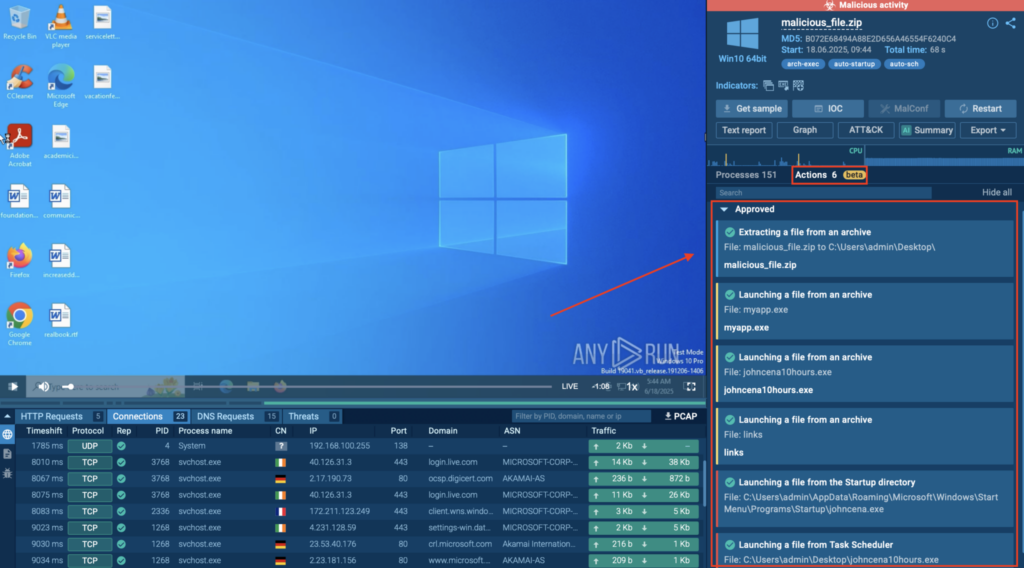

When it comes to malware analysis, it’s not always clear where to start, as threats get increasingly complex and evasive. To simplify the process of uncovering them, we came up with Detonation Actions: hints that guide you through the analysis in ANY.RUN’s Sandbox as you search for hidden threats.

Another feature we added solves one of the most time-consuming parts of detection: rule creation. Now our sandbox is equipped with AI Sigma Rules that reveal the logic behind threat behavior while saving manual effort. Just copy them to your SIEM, SOAR, or EDR for smooth deployment.

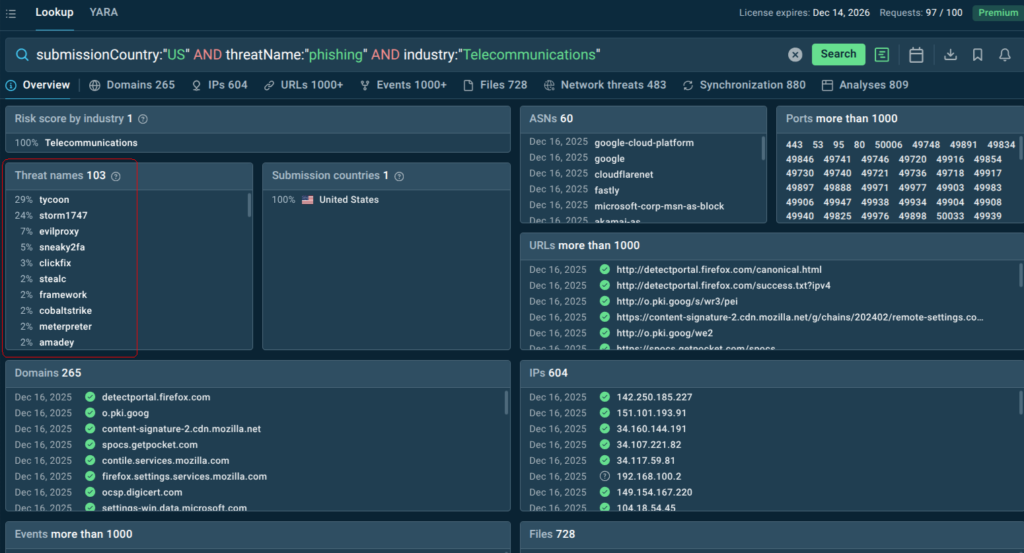

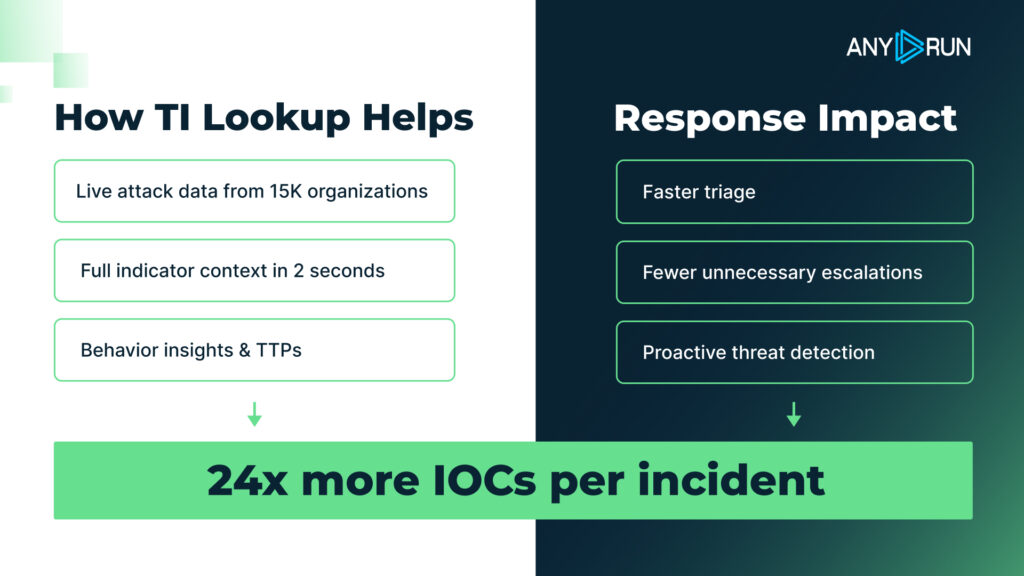

Threat Intelligence Lookup: Data Solving Real-World Challenges

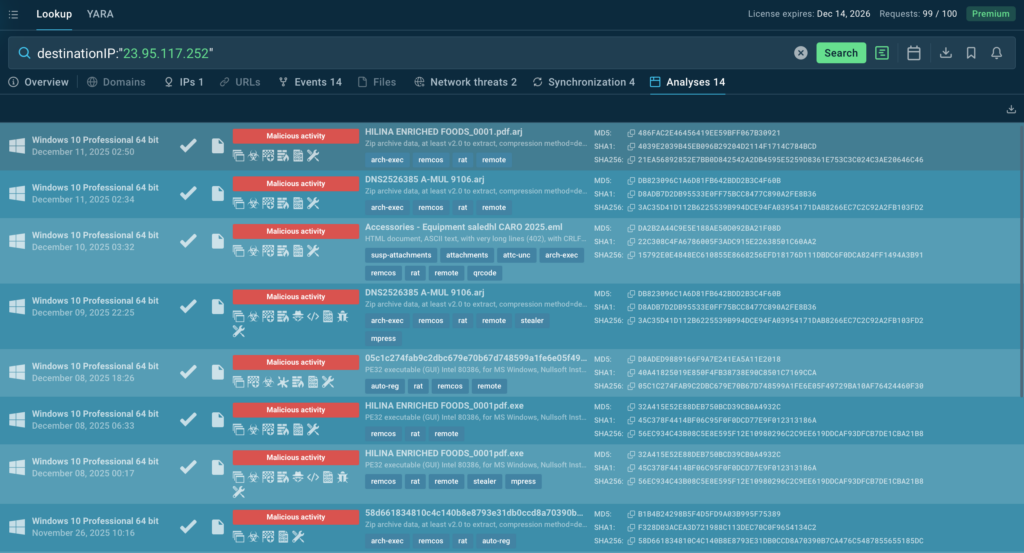

In 2025, our users made almost 195k requests in Threat Intelligence Lookup in search of actionable insights and verified indicators. Tycoon topped the list as the most searched malware.

Thanks to our global community, we have access to a rich collection of fresh, verified, ready- and safe-to-use data. It would be a shame not to share it with the world, right?

So this year we took an important step to make TI Lookup more accessible: we introduced the Free plan, giving everyone the opportunity to enrich threat research with 100% verified context at no cost. It’s a perfect way to tap into quality intel and see it bring tangible results.

We also supported knowledge exchange by launching TI Reports, analyst-driven articles covering APTs, campaigns, and emerging threats. Each report comes with IOCs and queries for a deeper dive.

Finally, in 2025 we boosted the threat monitoring capabilities of our users with Industry & geo threat landscape. It shows exactly how a given threat or indicator relates to sectors and countries, which is a real lifesaver for those drowning in alerts with no context.

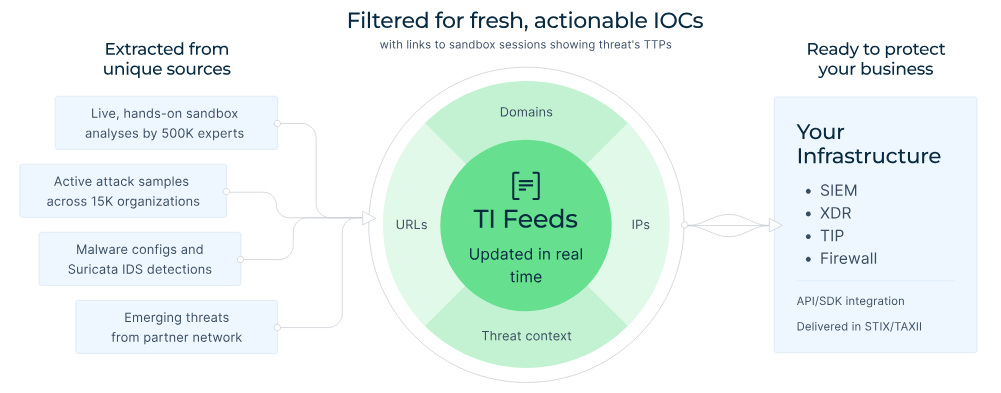

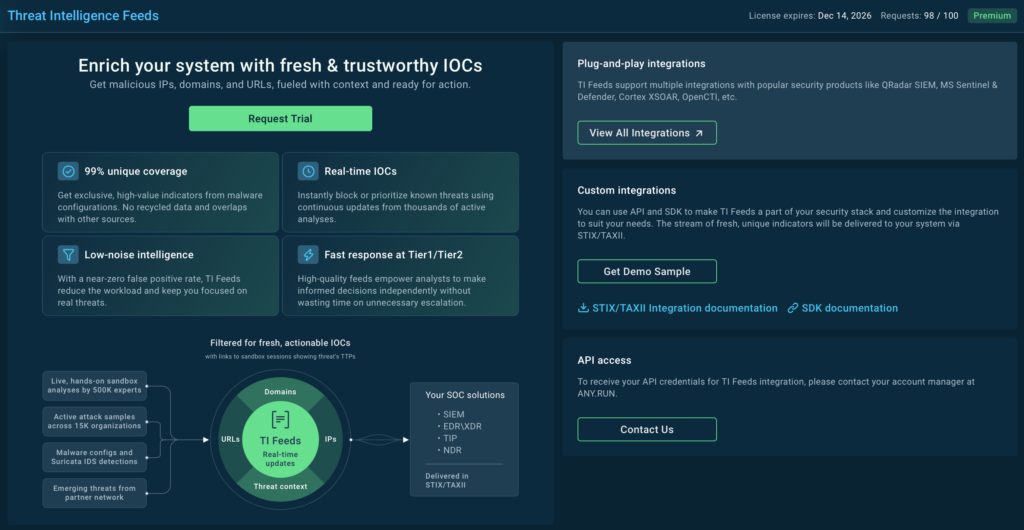

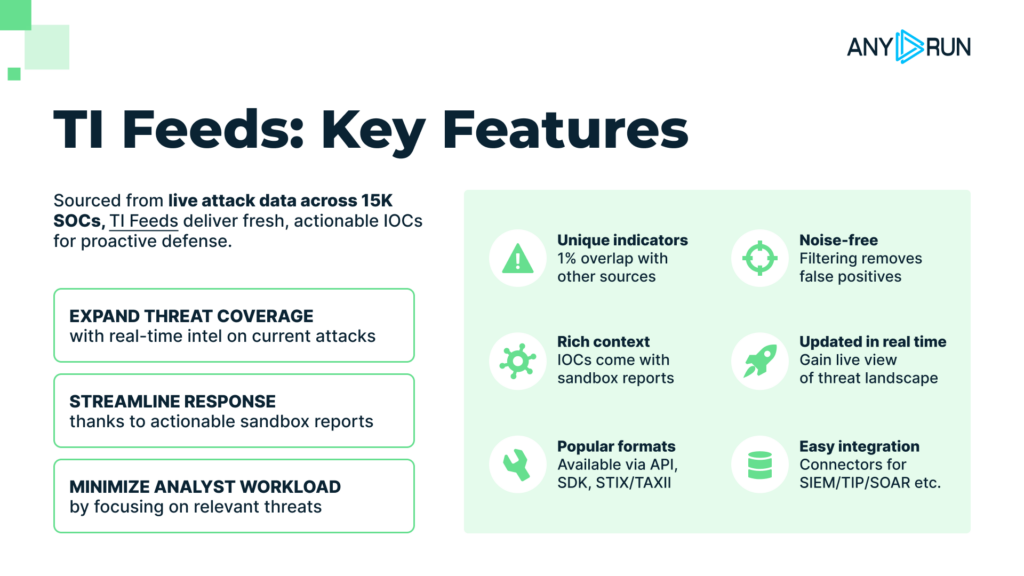

Threat Intelligence Feeds: Always Fresh and Relevant

Throughout 2025, Threat Intelligence Feeds grew both in terms of data and interoperability. This growth was powered by constant data updates coming from over 15K SOC teams, which ensure that TI Feeds always remain on point.

The STIX/TAXII integration made the delivery of fresh, real-time data more efficient. And newly added integrations like the ThreatQ + TI Feeds connector brought live, behavior-based malware data for better prioritization and contextualization of indicators.
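To give a feel for what STIX-formatted data looks like on the receiving end, here is a minimal sketch that pulls indicator patterns out of a STIX 2.1 bundle using only Python’s standard library. The bundle below is a made-up sample with placeholder IDs and a placeholder domain, not real TI Feeds output, and the function name is our own. Retrieval over TAXII is left out entirely, so treat this as an illustration of the format rather than a description of how any connector works.

```python
import json

# Hypothetical STIX 2.1 bundle for illustration only; IDs and values are placeholders.
SAMPLE_BUNDLE = """
{
  "type": "bundle",
  "id": "bundle--00000000-0000-4000-8000-000000000001",
  "objects": [
    {
      "type": "indicator",
      "spec_version": "2.1",
      "id": "indicator--00000000-0000-4000-8000-000000000002",
      "created": "2025-12-01T00:00:00.000Z",
      "modified": "2025-12-01T00:00:00.000Z",
      "name": "Hypothetical phishing domain",
      "pattern": "[domain-name:value = 'phish.example.com']",
      "pattern_type": "stix",
      "valid_from": "2025-12-01T00:00:00Z"
    },
    {
      "type": "malware",
      "spec_version": "2.1",
      "id": "malware--00000000-0000-4000-8000-000000000003",
      "created": "2025-12-01T00:00:00.000Z",
      "modified": "2025-12-01T00:00:00.000Z",
      "name": "Hypothetical phishing kit",
      "is_family": true
    }
  ]
}
"""


def extract_indicator_patterns(bundle_json: str) -> list[str]:
    """Return the STIX patterns of all indicator objects in a bundle."""
    bundle = json.loads(bundle_json)
    return [
        obj["pattern"]
        for obj in bundle.get("objects", [])
        if obj.get("type") == "indicator" and obj.get("pattern_type") == "stix"
    ]


for pattern in extract_indicator_patterns(SAMPLE_BUNDLE):
    print(pattern)
```

Filtering on `type` and `pattern_type` keeps the sketch from tripping over other object types, such as the malware entry in the sample, that may travel in the same bundle.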

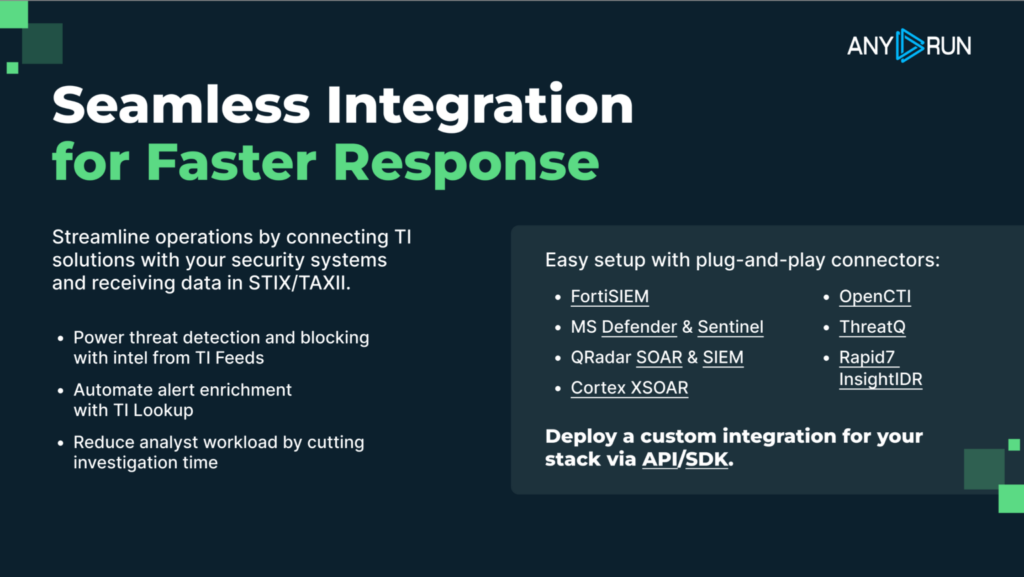

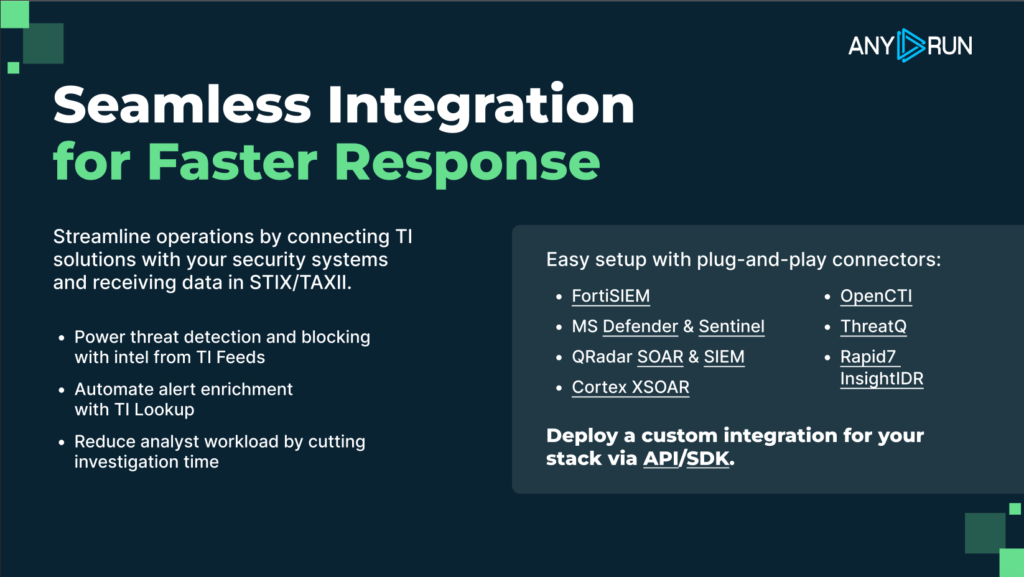

Expanding Our Reach with New Integrations & Connectors

Our goal is to make your workflow smoother and more efficient, simplifying daily tasks and automating what’s possible. One of the steps we took in this direction is the launch of our SDK, which makes it easy to connect our solutions with tools you’re already using.

We also released a lot of ready-to-use integrations, such as:

- Palo Alto Networks Cortex XSOAR: Available for all three ANY.RUN products, it helps automate investigation and response.

- Microsoft Sentinel & Microsoft Defender: Integrate sandbox and TI Feeds with your Microsoft solutions for fast and confident decision-making.

- IBM Security QRadar SOAR: Turn alert noise into actionable conclusions without leaving your SOAR by integrating it with ANY.RUN sandbox and TI Lookup.

These and other integrations and connectors support your work without disrupting the way you already operate.

Catching What Others Miss

In 2025, ANY.RUN was the first to uncover multiple campaigns and malware families, giving a head start to the entire cybersecurity community. Let’s recap the most notable cases:

Salty 2FA

A newly discovered PhaaS framework that quickly rose to the level of major phishing kits in today’s threat landscape, thanks to its ability to distribute payloads at scale and intercept 2FA methods, and to its complex communication models.

Android Threats

Some of the recently emerged threats were Android-based, and we were able to break them down in detail and analyze their behavior in our sandbox.

- Salvador Stealer, an Android banking malware revealed in April 2025. By disguising itself as a legitimate app, it phishes critical personal and financial data — a clear example of how mobile malware continues to evolve and blend into everyday user environments.

- Pentagon Stealer, a relatively simple threat that quickly grew into a persistent, versatile, and widespread data-stealing malware.

Tykit

In October we took a closer look at Tykit, a credential-stealing malware. It might not reinvent phishing per se but clearly demonstrates how a tiny loophole in a defense system can lead to significant real-world impact.

Salty2FA & Tycoon2FA: A Hybrid Threat

We ended the year with the detection of a hybrid, cross-kit threat combining Salty2FA and Tycoon2FA. It merges two phishing frameworks, multiplying the dangers of both.

ANY.RUN Recognized by Industry and Community

2025 brought us a handful of awards, indicating recognition and acclaim in the industry, for which we’re super grateful.

| Award | Title |
|---|---|
| Top InfoSec Innovators Awards | Winner at Trailblazing Threat Intelligence |
| Globee Awards | Gold winner (TI Lookup), Silver winner (Sandbox) |
| Cybersecurity Excellence Awards | Best TI Service |
| CyberSecurity Breakthrough Awards | Threat Intelligence Company of 2025 |

What we appreciate more than anything, however, is our community. Every nomination, vote, and kind word reflects your trust. A big thank-you to everyone involved!

Our Most Influential Reports

Alongside TI Reports you can find in TI Lookup, we regularly share technical analyses on our blog. 2025 was no exception. We published many nuanced studies of both newly discovered and evolved threats.

- April brought a surge in activity around PE32 Ransomware, a Telegram-based encryptor. Our in-depth breakdown highlights how even unsophisticated ransomware can pose a very real danger.

- In July we covered DEVMAN, a malware sample tied to the DragonForce ransomware lineage but standing out with unique behaviors and identifiers.

- Later the same month we analyzed Ducex packer, an advanced tool used to conceal Android malware payloads. An increase in its activity highlights the escalating arms race between threat actors and security teams.

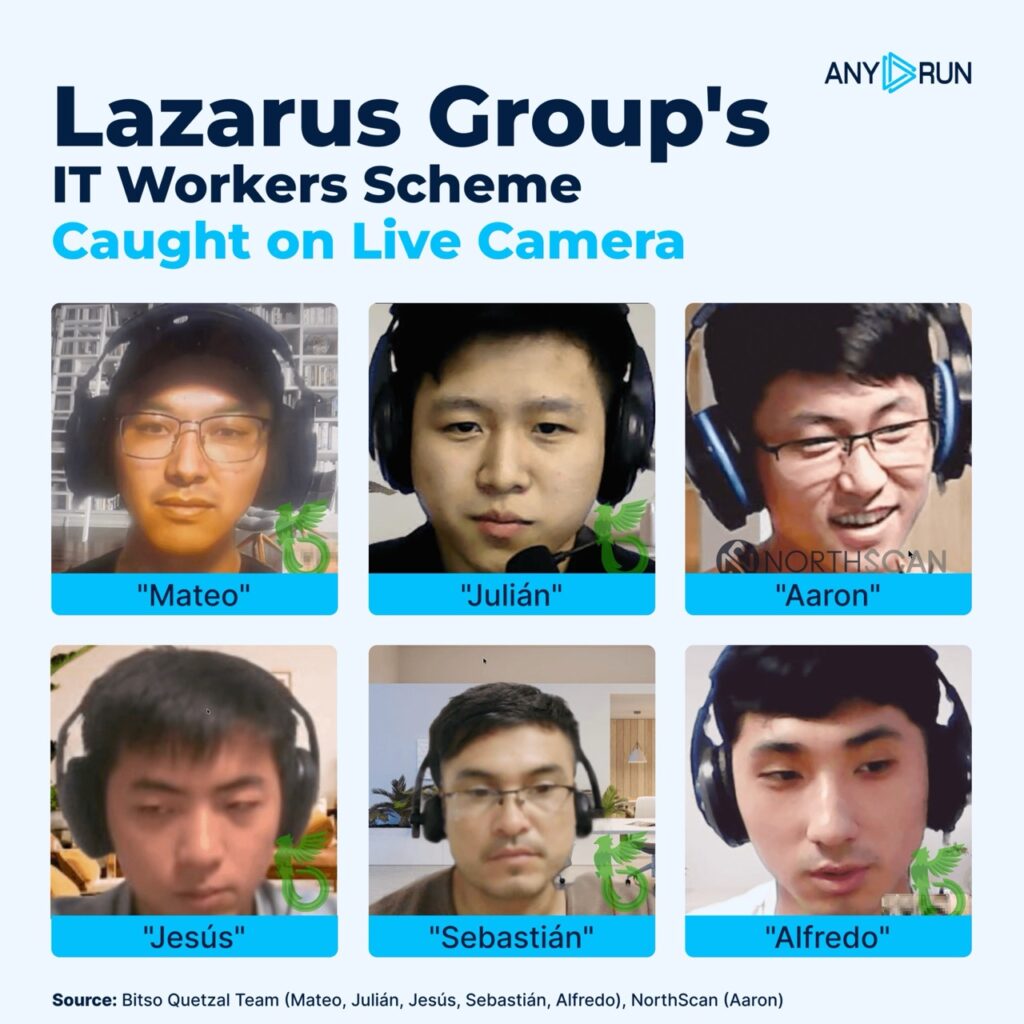

- Finally, in December we took an unprecedented look inside Lazarus Group’s North Korean IT worker infiltration scheme, capturing actors live inside controlled ANY.RUN environments and documenting their activities.

These and other reports by ANY.RUN are a testament to how interactive sandboxing and knowledge exchange make analysis sharper and the entire community stronger.

Spoiler Alert: What to Look Forward to in 2026

We’ve grown a lot this year and we’re not planning to stop. Here’s a peek into what we’re working on and what you can expect from ANY.RUN in the coming year:

- Enhanced teamwork mode for efficient collaboration inside SOCs.

- Refined reporting, including new types of text reports, industry-focused prioritization, security recommendations, improved AI Summaries, and auto-generated YARA rules.

- Enrichment of sandbox detections with relevant threat intelligence data.

- Improved detection quality with features like SSL decryption without MITM, in-browser data inspection, and AI-powered analysis.

- Expanded analysis options for Enterprise users, including macOS and Windows Server support in VM.

Conclusion

Everything’s changing — threats, TTPs, security measures… But our goal stays the same: to make malware analysis and threat investigations faster, easier, and smarter.

Thanks for analyzing, researching, experimenting, and growing together with us. Every contribution, insight, and bit of feedback brings us closer to a more secure future.

Have alert-free holidays and stay safe in 2026!

About ANY.RUN

ANY.RUN supports over 500,000 cybersecurity professionals around the world. Its Interactive Sandbox makes malware analysis easier by enabling the investigation of threats targeting Windows, Android, and Linux systems. ANY.RUN’s threat intelligence solutions—Threat Intelligence Lookup and TI Feeds—allow teams to quickly identify IOCs and analyze files, helping them better understand threats and respond to incidents more efficiently.

Start a 2-week trial of ANY.RUN’s solutions →

The post Year in Review by ANY.RUN: Key Threats, Solutions, and Breakthroughs of 2025 appeared first on ANY.RUN’s Cybersecurity Blog.
