UAT-7290 targets high-value telecommunications infrastructure in South Asia

- Cisco Talos is disclosing a sophisticated threat actor we track as UAT-7290, who has been active since at least 2022.

- UAT-7290 is tasked with gaining initial access as well as conducting espionage-focused intrusions against critical infrastructure entities in South Asia.

- UAT-7290’s arsenal includes a malware family consisting of implants we call RushDrop, DriveSwitch, and SilentRaid.

- Our findings indicate that UAT-7290 conducts extensive technical reconnaissance of target organizations before carrying out intrusions.

Talos assesses with high confidence that UAT-7290 is a sophisticated threat actor that falls under the China-nexus umbrella of advanced persistent threat (APT) actors. UAT-7290 primarily targets telecommunications providers in South Asia. However, in recent months we have also seen UAT-7290 expand its targeting into Southeastern Europe.

In addition to conducting espionage-focused attacks in which UAT-7290 burrows deep inside a victim enterprise’s network infrastructure, the actor’s tactics, techniques and procedures (TTPs) and tooling suggest that it also establishes Operational Relay Box (ORB) nodes. The ORB infrastructure may then be used by other China-nexus actors in their malicious operations, signifying UAT-7290’s dual role as an espionage-motivated threat actor and an initial access group.

Active since at least 2022, UAT-7290 has an expansive arsenal of tooling, including open-source malware, custom-developed malware, and payloads for 1-day vulnerabilities in popular edge networking products. UAT-7290 primarily leverages a Linux-based malware suite but may also use bespoke Windows-based implants such as RedLeaves or ShadowPad, which are commonly linked to China-nexus threat actors.

Our findings suggest that the threat actor conducts extensive reconnaissance of target organizations before carrying out intrusions. UAT-7290 leverages one-day exploits and target-specific SSH brute force to compromise public-facing edge devices, gain initial access, and escalate privileges on compromised systems. The actor appears to rely on publicly available proof-of-concept exploit code rather than developing its own.

UAT-7290 shares overlapping TTPs with known China-nexus adversaries, including the exploitation of high-profile vulnerabilities in networking devices, use of open-source web shells for persistence, leveraging UDP listeners, and using compromised infrastructure to facilitate operations.

Specifically, we have observed technical indicators that overlap with RedLeaves, a malware family attributed to APT10 (a.k.a. MenuPass, POTASSIUM and Purple Typhoon), as well as infrastructure associated with ShadowPad, a malware family used by a variety of China-nexus adversaries.

Additionally, UAT-7290 shares a significant amount of overlap in victimology, infrastructure, and tooling with a group publicly reported by Recorded Future as Red Foxtrot. In a 2021 report, Recorded Future linked Red Foxtrot to Chinese People’s Liberation Army (PLA) Unit 69010.

UAT-7290’s malware arsenal for edge devices

Talos currently tracks the Linux-based malware families associated with UAT-7290 in this intrusion as:

- RushDrop – The dropper that kickstarts the infection chain. RushDrop is also known as ChronosRAT.

- DriveSwitch – A peripheral malware used to execute the main implant on the infected system.

- SilentRaid – The main implant in the intrusion meant to establish persistent access to compromised endpoints. It communicates with its command-and-control server (C2) and carries out tasks defined in the malware. SilentRaid is also known as MystRodX.

Another malware implanted on compromised devices by UAT-7290 is Bulbature. Bulbature, first disclosed by Sekoia in late 2024, is an implant that is used to convert compromised devices into ORBs.

RushDrop and DriveSwitch

RushDrop is a malware dropper that contains three encoded binaries embedded within it. RushDrop first makes rudimentary checks to ensure it is running on a legitimate system instead of a sandbox.

It then checks for a folder called “.pkgdb” in the dropper’s current working directory and creates it if it does not exist. RushDrop then decodes and drops three binaries to the “.pkgdb” folder:

- “daytime” – A malware family that simply executes a file called “chargen” from the current working directory. This executor is being tracked as DriveSwitch.

- “chargen” – The central implant of the infection chain, tracked as SilentRaid. SilentRaid communicates with its C2 server, which is usually specified as a domain, and can carry out actions as instructed by the C2.

- “busybox” – Busybox is a legitimate Linux utility that can be used to execute arbitrary commands on the system.

DriveSwitch simply executes the SilentRaid malware on the system.
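From a defender's perspective, the drop pattern described above lends itself to a simple filesystem hunt. The following is a minimal sketch, not a Talos tool: the function name `find_pkgdb_drops` and the decision to require all three file names are assumptions made for illustration, and a match is only a lead for further triage.

```python
import os

# File names RushDrop is reported to drop into ".pkgdb".
DROPPED_NAMES = {"daytime", "chargen", "busybox"}


def find_pkgdb_drops(root):
    """Return paths of ".pkgdb" directories under root that hold all three files."""
    hits = []
    for dirpath, dirnames, _filenames in os.walk(root):
        if ".pkgdb" in dirnames:
            candidate = os.path.join(dirpath, ".pkgdb")
            try:
                present = set(os.listdir(candidate))
            except OSError:
                continue  # unreadable directory; skip rather than abort the hunt
            if DROPPED_NAMES <= present:
                hits.append(candidate)
    return hits
```

Because the folder is created in the dropper's working directory, the hunt has to walk the filesystem rather than check one fixed path.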

SilentRaid: The multifunctional malware

SilentRaid is written in C++ and bundles multiple capabilities in the form of “plugins” embedded in the malware. On execution, it performs rudimentary anti-VM and anti-analysis checks to ensure it isn’t running in a sandbox. The malware then initializes its “plugins” and contacts the C2 server for instructions to carry out malicious tasks on the infected endpoint. The plugins are built-in functionality, but they are modular enough for the threat actor to stitch together any combination of them at compile time.

Plugin: my_socks_mgr

This plugin handles communication with the C2 server. It obtains the C2 IP by resolving a domain using “8[.]8[.]8[.]8” and passes commands received from the C2 to the appropriate plugin.
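Because the plugin resolves its C2 domain through a public resolver, direct DNS traffic from edge devices to “8[.]8[.]8[.]8” can be a useful, if noisy, hunting signal. The sketch below assumes flow records are available as dictionaries with the hypothetical keys `src`, `dst`, and `dport`, that the lookups use the standard DNS port 53, and that the defender keeps a list of sanctioned resolver hosts. None of these details come from the Talos report.

```python
PUBLIC_RESOLVER = "8.8.8.8"  # resolver named (defanged) in the report


def direct_dns_sources(flows, sanctioned_sources=()):
    """Return source IPs that query 8.8.8.8 on port 53 and are not sanctioned.

    `flows` is an iterable of dicts with the assumed keys "src", "dst", "dport".
    """
    allowed = set(sanctioned_sources)
    return sorted({
        flow["src"]
        for flow in flows
        if flow["dst"] == PUBLIC_RESOLVER
        and flow["dport"] == 53
        and flow["src"] not in allowed
    })
```

Many legitimate hosts also use this resolver, so the result is best treated as a shortlist to correlate with other indicators.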

Plugin: my_rsh

This plugin opens a remote shell by executing “sh” via either “busybox” or “/bin/sh”. This remote shell is then used to run arbitrary commands on the infected system.

Plugin: port_fwd_mgr

This plugin sets up port forwarding between a specified local port and a port on a remote server. It can also set up port forwarding across multiple ports.

Plugin: my_file_mgr

This is the backdoor’s file manager. It allows SilentRaid to:

- Read contents of “/etc/passwd”

- Execute a specified file on the system

- Archive directories specified by the C2 using “tar -cvf” – executed via busybox

- Check if a file is accessible

- Remove a file or directory using the “rm” command – via busybox

- Read/write a specified file

SilentRaid can also parse X.509 certificates and collect attribute information such as:

- id-at-dnQualifier | Distinguished Name qualifier

- id-at-pseudonym | Pseudonym

- id-domainComponent | Domain component

- id-at-uniqueIdentifier | Unique Identifier

Bulbature

The Bulbature malware we discovered uses the same string encoding scheme as the other UAT-7290 malware described earlier. Usually UPX-compressed, Bulbature binds to and listens on either a random port of its choosing or one specified on the command line via the “-d <port_number>” switch.

Bulbature obtains the local network interface’s name by executing the command:

cat /proc/net/route | awk '{print $1,$2}' | awk '/00000000/ {print $1}'
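In /proc/net/route, the first column is the interface name and the second is the destination in hexadecimal, so a destination of 00000000 marks the default route. The sketch below mirrors the pipeline's logic in Python to show what it extracts. The function name and the sample routing table are made up for illustration.

```python
def default_route_interfaces(route_table_text):
    """Mimic the awk pipeline: emit column 1 where "col1 col2" contains 00000000."""
    names = []
    for line in route_table_text.splitlines():
        fields = line.split()
        if len(fields) < 2:
            continue
        iface, destination = fields[0], fields[1]
        if "00000000" in f"{iface} {destination}":
            names.append(iface)
    return names


# Made-up sample in the /proc/net/route layout (tab-separated columns).
SAMPLE_ROUTE = (
    "Iface\tDestination\tGateway\tFlags\tRefCnt\tUse\tMetric\tMask\tMTU\tWindow\tIRTT\n"
    "eth0\t00000000\t0101A8C0\t0003\t0\t0\t100\t00000000\t0\t0\t0\n"
    "eth0\t0001A8C0\t00000000\t0001\t0\t0\t100\t00FFFFFF\t0\t0\t0\n"
)

print(default_route_interfaces(SAMPLE_ROUTE))  # ['eth0']
```

The second sample row has 00000000 only in the gateway column, which the pipeline never inspects, so only the default-route row is reported.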

It also obtains basic system information and the current user using the command:

echo $(whoami) $(uname -nrm)

The malware typically records its C2 address in a config file in the /tmp directory. The file will have the same name as the malware binary with the “.cfg” extension appended to it. The C2 address may be an encoded string.
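The naming convention for the config file suggests a quick check during triage: for each binary name of interest, look for a matching “.cfg” file in /tmp. This is a minimal sketch under stated assumptions. The function name is hypothetical, and the list of binary names is something the analyst supplies, for example from a process listing.

```python
import os


def bulbature_style_configs(binary_names, tmp_dir="/tmp"):
    """Return existing "<tmp_dir>/<binary name>.cfg" paths for the given names."""
    hits = []
    for name in binary_names:
        path = os.path.join(tmp_dir, os.path.basename(name) + ".cfg")
        if os.path.isfile(path):
            hits.append(path)
    return hits
```

A hit is not proof of infection, since legitimate software may follow the same naming pattern. Note too that the stored C2 address may be encoded rather than readable.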

Bulbature can obtain additional or new C2 addresses from its current C2 and switch its communications over to them. The malware can also open a reverse shell to its C2 to execute arbitrary commands on the infected system.

A recent variant of Bulbature contained an embedded self-signed certificate that it used for communicating with the C2. This certificate matches the one from the sample disclosed by Sekoia as well:

X509 Certificate:
Version: 3
Serial Number: 81bab2934ee32534
Signature Algorithm:
    Algorithm ObjectId: 1.2.840.113549.1.1.11 sha256RSA
    Algorithm Parameters:
    05 00
Issuer:
    O=Internet Widgits Pty Ltd
    S=Some-State
    C=AU
  Name Hash(sha1): d398f76c7ba0bbf79b1cac0620cdf4b42e505195
  Name Hash(md5): 4a963519b4950845a8d76668d4d7dd29
NotBefore: 8/8/2019 3:33 AM
NotAfter: 12/24/2046 3:33 AM
Subject:
    O=Internet Widgits Pty Ltd
    S=Some-State
    C=AU
  Name Hash(sha1): d398f76c7ba0bbf79b1cac0620cdf4b42e505195
  Name Hash(md5): 4a963519b4950845a8d76668d4d7dd29
Cert Hash(sha256): 918fb8af4998393f5195bafaead7c9ba28d8f9fb0853d5c2d75f10e35be8015a

Censys data shows that this certificate, with the exact same serial number, is present on at least 141 hosts, all located in either China or Hong Kong. On VirusTotal, many of the IPs hosting this certificate are associated with other malware typically linked to China-nexus threat actors, such as SuperShell, GobRAT, and Cobalt Strike.
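The published certificate hash can be used to check certificates collected from suspect hosts. The sketch below assumes the reported “Cert Hash(sha256)” value is the SHA-256 digest of the DER-encoded certificate, which is how such fingerprints are normally computed. The function names are illustrative.

```python
import hashlib
import ssl

# SHA-256 certificate hash published in the report.
REPORTED_CERT_SHA256 = "918fb8af4998393f5195bafaead7c9ba28d8f9fb0853d5c2d75f10e35be8015a"


def cert_sha256(der_bytes):
    """Return the lowercase hex SHA-256 fingerprint of a DER-encoded certificate."""
    return hashlib.sha256(der_bytes).hexdigest()


def matches_reported_cert(pem_text):
    """True if a PEM certificate has the fingerprint listed in the report."""
    der = ssl.PEM_cert_to_DER_cert(pem_text)
    return cert_sha256(der) == REPORTED_CERT_SHA256
```

A PEM string for a live host can be obtained with `ssl.get_server_certificate((host, port))` from the standard library and passed to `matches_reported_cert`. Connecting to suspected C2 infrastructure should only be done from an appropriate analysis environment.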

Coverage

The following ClamAV signatures detect and block this threat:

- Unix.Dropper.Agent

- Unix.Malware.Agent

- Unix.Packed.Agent

The following Snort rule (SID) detects and blocks this threat: 65124

IOCs

723c1e59accbb781856a8407f1e64f36038e324d3f0bdb606d35c359ade08200

59568d0e2da98bad46f0e3165bcf8adadbf724d617ccebcfdaeafbb097b81596

961ac6942c41c959be471bd7eea6e708f3222a8a607b51d59063d5c58c54a38d
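The indicators above are 64 hexadecimal characters long, which is consistent with SHA-256 file hashes. Assuming that is what they are, a file recovered from an edge device can be checked against them as follows. The function names are illustrative.

```python
import hashlib

# Indicators listed above, assumed to be SHA-256 file hashes.
IOC_SHA256 = {
    "723c1e59accbb781856a8407f1e64f36038e324d3f0bdb606d35c359ade08200",
    "59568d0e2da98bad46f0e3165bcf8adadbf724d617ccebcfdaeafbb097b81596",
    "961ac6942c41c959be471bd7eea6e708f3222a8a607b51d59063d5c58c54a38d",
}


def sha256_of_file(path, chunk_size=65536):
    """Hash a file in chunks so large binaries do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_known_ioc(path):
    """True if the file's SHA-256 matches one of the listed indicators."""
    return sha256_of_file(path) in IOC_SHA256
```

Hash matching only catches byte-identical samples, so a miss does not rule out a repacked or rebuilt variant.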

Cisco Talos Blog – Read More

How Cisco Talos powers the solutions protecting your organization

Cisco Talos is Cisco’s threat intelligence and security research organization that powers Cisco’s product portfolio with that intelligence. While we are well known for the security research in our blog, vulnerability discoveries, and our open-source software, you may not be aware of exactly how our know-how protects Cisco customers.

Talos’ core mission is to understand the broad threat landscape and distill the massive amount of telemetry we ingest into actionable intelligence. This intelligence is put to use in detecting and defending against threats with speed and accuracy, providing incident response and empowering our customers, constituents, and communities with context-rich actionable cyber intelligence. Under the hood of Cisco’s security portfolio, you will find our reputation and detection services applying our real time intelligence to detect and block threats.

Defending networks

Possibly our best-known service is the Cisco Talos Network Intrusion Prevention system, widely known as SNORT®. Snort performs deep packet inspection on network traffic, using advanced signature-based detection to identify known threats. In addition, its machine learning-powered component, SnortML, helps detect and block attempts to exploit zero-day vulnerabilities, providing robust protection against both familiar and emerging network attacks.

Securing the web

The core of securing our customers across our product portfolio is Cisco Talos Web Filtering Service. This service considers the reputation and categorization of domains, IP addresses, and indicators surrounding the URL. The service can proactively block web traffic to sites that have a poor reputation or that serve content in contravention of a customer’s web use policy.

The Cisco Talos DNS Security service augments our web filtering by defending against specific attacks at the DNS layer. It detects domains used by threat actors for command and control (C2), data exfiltration, and phishing attacks. Behind the scenes, our machine learning algorithms constantly analyze patterns in the DNS traffic to identify new malicious domains to add to our own intelligence.

Protecting your inbox

Cisco Talos Email Filtering analyzes a wide range of indicators within email to determine if it is malicious, spam, or a genuine email. This includes assessing the sender’s domain and IP reputation and behavior, examining URLs and the content they reference, and evaluating the body of the email, header, and any attachments. By combining these factors, our email filtering can identify benign messages, spam, phish, as well as other unwanted messages.

Cisco Talos Email Threat Prevention goes one step further than DMARC, the standard for properly handling emails with inaccurate sender data, by analyzing anomalies in email traffic patterns with AI, to identify when brands are being impersonated. This technology can detect when an email is likely to be a phish or a business email compromise attempt.

Detecting malware

Talos provides two complementary technologies to detect malware: Cisco Talos Antivirus and Cisco Talos Malware Protection. The former provides signature and pattern detection of malware within files to identify known malware, similar to our ClamAV open-source product. The latter goes further, checking the dispositions of unknown files and looking for suspicious behavior on the machine. This layered approach allows us to quickly spot and contain threats while our researchers scour telemetry for any indications that a bad actor has gained access to a device.

We also provide Orbital queries and scripts, a platform by which administrators can collect information from networked devices and use their own queries (or those provided by us) to hunt for devices that are insecure, out of policy, or potentially affected by a security incident.

Summary

You can find Talos’ intelligence integrated into a wide variety of Cisco products.

Our published research and threat intelligence reports represent just a small part of the work we do at Talos. The many hours our researchers, analysts, and engineers spend researching the threat environment and developing systems to detect and block attacks bear fruit in the components that we deploy as part of the Cisco Security portfolio. Our intelligence and know-how protect Cisco Security customers from threats, brand new or decades old.

Note: You can benefit from the experience of our analysts directly through a Cisco Talos Incident Response (Talos IR) retainer. While Talos IR can provide relevant threat information and expert emergency incident response, you can also use our proactive services to help prepare your systems, support and train your team, or actively hunt for bad guys on your network.


Singapore Cyber Agency Warns of Critical IBM API Connect Vulnerability (CVE-2025-13915)

Overview

The Cyber Security Agency of Singapore has issued an alert regarding a critical vulnerability affecting IBM API Connect, following the release of official security updates by IBM on 2 January 2026. The flaw, tracked as CVE-2025-13915, carries a CVSS v3.1 base score of 9.8, placing it among the most severe vulnerabilities currently disclosed for enterprise automation software.

According to IBM’s security bulletin, the issue stems from an authentication bypass weakness that could allow a remote attacker to gain unauthorized access to affected systems without valid credentials. The vulnerability impacts multiple versions of IBM API Connect, a widely used platform for managing application programming interfaces across enterprise environments.

Details of CVE-2025-13915 and Technical Impact

IBM confirmed that CVE-2025-13915 was identified through internal testing and classified under CWE-305: Authentication Bypass by Primary Weakness. The flaw allows authentication mechanisms to be bypassed, despite the underlying authentication algorithm itself being sound. The weakness arises from an implementation flaw that can be exploited independently.

The official CVSS vector for the vulnerability is:

CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H

This indicates that the vulnerability is remotely exploitable, requires no user interaction, and can lead to a complete compromise of confidentiality, integrity, and availability. IBM stated that successful exploitation could enable attackers to access the application remotely and operate with unauthorized privileges.
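To show how the vector maps to the 9.8 score, the sketch below applies the base-score equations and metric weights from the public CVSS v3.1 specification. It is an illustrative calculator, not IBM's or Cyble's tooling, and it covers only base metrics.

```python
import math

CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
WEIGHTS = {
    "AV": {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2},
    "AC": {"L": 0.77, "H": 0.44},
    "UI": {"N": 0.85, "R": 0.62},
    "C": CIA, "I": CIA, "A": CIA,
}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}


def roundup(value):
    """Round up to one decimal place, as defined in the CVSS v3.1 specification."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score(vector):
    """Compute the CVSS v3.1 base score from a vector string."""
    metrics = dict(part.split(":") for part in vector.split("/")[1:])
    changed = metrics["S"] == "C"
    pr = (PR_CHANGED if changed else PR_UNCHANGED)[metrics["PR"]]
    exploitability = (8.22 * WEIGHTS["AV"][metrics["AV"]]
                      * WEIGHTS["AC"][metrics["AC"]] * pr
                      * WEIGHTS["UI"][metrics["UI"]])
    iss = 1 - ((1 - WEIGHTS["C"][metrics["C"]])
               * (1 - WEIGHTS["I"][metrics["I"]])
               * (1 - WEIGHTS["A"][metrics["A"]]))
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    if impact <= 0:
        return 0.0
    total = impact + exploitability
    if changed:
        total *= 1.08
    return roundup(min(total, 10))


print(base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H"))  # 9.8
```

For this vector, the exploitability sub-score is about 3.89 and the impact sub-score about 5.87. Their sum of roughly 9.76 rounds up to 9.8.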

Data from Cyble Vision further classifies the issue as “very critical,” confirming that IBM API Connect up to versions 10.0.8.5 and 10.0.11.0 is affected.

Affected IBM API Connect Versions

IBM confirmed that the following versions are vulnerable to CVE-2025-13915:

- IBM API Connect V10.0.8.0 through V10.0.8.5

- IBM API Connect V10.0.11.0
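For inventory checks, the affected ranges listed above can be encoded directly. This is a minimal sketch: the function names are illustrative, and it assumes the installed version is available as a plain four-part string. A version string alone does not reveal whether an interim fix has already been applied, so a positive result means "verify", not "vulnerable".

```python
# Affected ranges as listed above (inclusive bounds).
AFFECTED_RANGES = [
    ((10, 0, 8, 0), (10, 0, 8, 5)),
    ((10, 0, 11, 0), (10, 0, 11, 0)),
]


def parse_version(text):
    """Turn "V10.0.8.3" or "10.0.8.3" into a tuple of integers."""
    return tuple(int(part) for part in text.strip().lstrip("Vv").split("."))


def is_affected(version_text):
    """True if the version falls inside one of the listed affected ranges."""
    version = parse_version(version_text)
    return any(low <= version <= high for low, high in AFFECTED_RANGES)
```

Comparing integer tuples avoids the pitfalls of string comparison, where "10.0.11.0" would sort before "10.0.8.0".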

No evidence has been disclosed indicating active exploitation in the wild, and the vulnerability is not currently listed in the CISA Known Exploited Vulnerabilities (KEV) catalog.

Cyble Vision data also indicates that the vulnerability has not been discussed in underground forums, suggesting no known public exploit circulation at this time.

The EPSS score for CVE-2025-13915 stands at 0.37, indicating a moderate probability of exploitation compared to other high-severity vulnerabilities.

Remediation and Mitigation Guidance

IBM has released interim fixes (iFixes) to address the vulnerability and strongly recommends that affected organizations apply updates immediately. For IBM API Connect V10.0.8, fixes are available for each sub-version from 10.0.8.0 through 10.0.8.5. A separate interim fix has also been released for IBM API Connect V10.0.11.0.

IBM’s advisory explicitly states:

“IBM strongly recommends addressing the vulnerability now by upgrading.”

For environments where immediate patching is not possible, IBM advises administrators to disable self-service sign-up on the Developer Portal, if enabled. This mitigation can help reduce exposure by limiting potential abuse paths until updates can be applied.

Cyble Vision reinforces this recommendation, noting that upgrading removes the vulnerability entirely, and that temporary mitigations should only be considered short-term risk reduction measures.

Broader Security Context

The disclosure of CVE-2025-13915 reinforces the persistent risk posed by authentication bypass vulnerabilities in enterprise platforms such as IBM API Connect. Classified under CWE-305 and CWE-287, the flaw demonstrates how implementation weaknesses can negate otherwise robust authentication controls. Despite the absence of confirmed exploitation, the vulnerability’s remote attack surface and critical CVSS score of 9.8 make immediate remediation necessary.

The Cyber Security Agency of Singapore’s alert reflects heightened regional scrutiny of high-impact vulnerabilities affecting widely deployed enterprise software. IBM’s advisory, first published on 17 December 2025 and reinforced in January 2026, provides clear guidance on patching and mitigation. Organizations running affected versions of IBM API Connect should assess exposure without delay and apply the recommended fixes to reduce risk.

Threat intelligence data from Cyble Vision further confirms the vulnerability’s severity, its impact on confidentiality, integrity, and availability, and the effectiveness of upgrading as the primary remediation. Continuous monitoring and contextual intelligence remain critical for identifying and prioritizing vulnerabilities with enterprise-wide consequences like CVE-2025-13915.

Security teams tracking high-risk vulnerabilities like CVE-2025-13915 need real-time visibility, context, and prioritization. Cyble delivers AI-powered threat intelligence to help organizations assess exploitability, monitor new risks, and respond faster.

Learn how Cyble helps security teams stay protected from such vulnerabilities: schedule a demo.


The post Singapore Cyber Agency Warns of Critical IBM API Connect Vulnerability (CVE-2025-13915) appeared first on Cyble.

Cyble – Read More

CISA Known Exploited Vulnerabilities Surged 20% in 2025

The U.S. Cybersecurity and Infrastructure Security Agency (CISA) added 245 vulnerabilities to its Known Exploited Vulnerabilities (KEV) catalog in 2025, as the database grew to 1,484 software and hardware flaws at high risk of cyberattacks.

The agency removed at least one vulnerability from the catalog in 2025 – CVE-2025-6264, a Velociraptor Incorrect Default Permissions vulnerability that CISA determined had insufficient evidence of exploitation – but the database has generally grown steadily since its launch in November 2021.

Following an initial surge of additions after the database first launched, growth stabilized in 2023 and 2024, with 187 vulnerabilities added in 2023 and 185 in 2024.

Growth accelerated in 2025, however, as CISA added 245 vulnerabilities to the KEV catalog, an increase of more than 30% above the trend seen in 2023 and 2024. With new vulnerabilities surging in recent weeks, the elevated exploitation trend may well continue into 2026.

Overall, CISA KEV vulnerabilities grew from 1,239 vulnerabilities at the end of 2024 to 1,484 at the end of 2025, an increase of just under 20%.
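The two percentages quoted above measure different things, which a quick calculation makes explicit. The sketch assumes that “the trend seen in 2023 and 2024” means the average of those two years' additions. All figures are taken from the text.

```python
added = {2023: 187, 2024: 185, 2025: 245}
catalog_end_2024 = 1239
catalog_end_2025 = 1484

# Growth in yearly additions relative to the 2023-2024 average.
baseline = (added[2023] + added[2024]) / 2  # 186.0 per year
increase_vs_baseline = (added[2025] - baseline) / baseline

# Growth in the total size of the catalog over 2025.
catalog_growth = (catalog_end_2025 - catalog_end_2024) / catalog_end_2024

print(f"{increase_vs_baseline:.1%}")  # 31.7%
print(f"{catalog_growth:.1%}")        # 19.8%
```

The first figure describes the pace of new additions, while the second describes the size of the whole catalog, which is why the headline cites roughly 20% and the text cites more than 30%.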

We’ll look at some of the trends and vulnerabilities from 2025 – including 24 vulnerabilities known to be exploited by ransomware groups – along with the vendors and projects that had the most CVEs added to the list this year.

Older Vulnerabilities Added to CISA KEV Also Grew

The addition of older vulnerabilities to the CISA KEV catalog also grew in 2025. In 2023 and 2024, 60 to 70 older vulnerabilities were added to the KEV catalog each year. In 2025, the number of vulnerabilities from 2024 and earlier added to the catalog grew to 94, a 34% increase from a year earlier.

The oldest vulnerability added to the KEV catalog in 2025 was CVE-2007-0671, a Microsoft Office Excel Remote Code Execution vulnerability.

The oldest vulnerability in the catalog remains one from 2002 – CVE-2002-0367, a privilege escalation vulnerability in the Windows NT and Windows 2000 smss.exe debugging subsystem that has been known to be used in ransomware attacks.

Vulnerabilities Used in Ransomware Attacks

CISA marked 24 of the vulnerabilities added in 2025 as known to be exploited by ransomware groups. They include some well-known flaws such as CVE-2025-5777 (dubbed “CitrixBleed 2”) and Oracle E-Business Suite vulnerabilities exploited by the CL0P ransomware group.

The full list of vulnerabilities newly exploited by ransomware groups in 2025 is included below, and should be prioritized by security teams if they’re not yet patched.

| CVE | Vulnerability Exploited by Ransomware Groups |

| CVE-2025-5777 | Citrix NetScaler ADC and Gateway Out-of-Bounds Read |

| CVE-2025-31161 | CrushFTP Authentication Bypass |

| CVE-2019-6693 | Fortinet FortiOS Use of Hard-Coded Credentials |

| CVE-2025-24472 | Fortinet FortiOS and FortiProxy Authentication Bypass |

| CVE-2024-55591 | Fortinet FortiOS and FortiProxy Authentication Bypass |

| CVE-2025-10035 | Fortra GoAnywhere MFT Deserialization of Untrusted Data |

| CVE-2025-22457 | Ivanti Connect Secure, Policy Secure, and ZTA Gateways Stack-Based Buffer Overflow |

| CVE-2025-0282 | Ivanti Connect Secure, Policy Secure, and ZTA Gateways Stack-Based Buffer Overflow |

| CVE-2025-55182 | Meta React Server Components Remote Code Execution |

| CVE-2025-49704 | Microsoft SharePoint Code Injection |

| CVE-2025-49706 | Microsoft SharePoint Improper Authentication |

| CVE-2025-53770 | Microsoft SharePoint Deserialization of Untrusted Data |

| CVE-2025-29824 | Microsoft Windows Common Log File System (CLFS) Driver Use-After-Free |

| CVE-2025-26633 | Microsoft Windows Management Console (MMC) Improper Neutralization |

| CVE-2018-8639 | Microsoft Windows Win32k Improper Resource Shutdown or Release |

| CVE-2024-55550 | Mitel MiCollab Path Traversal |

| CVE-2024-41713 | Mitel MiCollab Path Traversal |

| CVE-2025-61884 | Oracle E-Business Suite Server-Side Request Forgery (SSRF) |

| CVE-2025-61882 | Oracle E-Business Suite Unspecified |

| CVE-2023-48365 | Qlik Sense HTTP Tunneling |

| CVE-2025-31324 | SAP NetWeaver Unrestricted File Upload |

| CVE-2024-57727 | SimpleHelp Path Traversal |

| CVE-2024-53704 | SonicWall SonicOS SSLVPN Improper Authentication |

| CVE-2025-23006 | SonicWall SMA1000 Appliances Deserialization |
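A list like the one above can be rebuilt from the machine-readable KEV catalog that CISA publishes. The sketch below assumes each record is a dictionary with the fields `cveID`, `dateAdded` (in YYYY-MM-DD form), and `knownRansomwareCampaignUse` (with the value "Known" for ransomware-linked entries). Those field names reflect the catalog's JSON layout as commonly documented and should be verified against the current schema. The function name is illustrative.

```python
def ransomware_linked_additions(records, year):
    """Return CVE IDs added in `year` that are flagged for ransomware use."""
    prefix = f"{year}-"
    return sorted(
        record["cveID"]
        for record in records
        if record["dateAdded"].startswith(prefix)
        and record["knownRansomwareCampaignUse"] == "Known"
    )
```

Filtering on the date added rather than the CVE year matters here, because several of the entries above, such as CVE-2018-8639 and CVE-2019-6693, are older flaws that were only added in 2025.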

Projects and Vendors with the Highest Number of Exploited Vulnerabilities

Microsoft once again led all vendors and projects in CISA KEV additions, with 39 vulnerabilities added to the database in 2025, up from 36 in 2024.

Several vendors and projects had fewer vulnerabilities added in 2025 than they did in 2024, suggesting improved security controls. Among the vendors and projects that saw a decline in KEV vulnerabilities in 2025 were Adobe, Android, Apache, Ivanti, Palo Alto Networks, and VMware.

Eleven vendors and projects had five or more KEV vulnerabilities added this year, as listed below.

| Vendor/project | CISA KEV additions in 2025 |

| Microsoft | 39 |

| Apple | 9 |

| Cisco | 8 |

| Fortinet | 8 |

| Google Chromium | 7 |

| Ivanti | 7 |

| Linux Kernel | 7 |

| Citrix | 5 |

| D-Link | 5 |

| Oracle | 5 |

| SonicWall | 5 |
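The per-vendor tally above is a straightforward aggregation over catalog records. As a hedged sketch, the code below assumes each KEV record is a dictionary with `vendorProject` and `dateAdded` (YYYY-MM-DD) fields. The function name and the default threshold of five are chosen to match the table, not taken from any official tooling.

```python
from collections import Counter


def vendors_with_at_least(records, year, minimum=5):
    """Return (vendor, count) pairs for vendors with >= `minimum` additions in `year`."""
    counts = Counter(
        record["vendorProject"]
        for record in records
        if record["dateAdded"].startswith(f"{year}-")
    )
    return [(vendor, n) for vendor, n in counts.most_common() if n >= minimum]
```

Results come back ordered from most to fewest additions, matching the table's layout.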

Most Common Software Weaknesses Exploited in 2025

Eight software and hardware weaknesses (common weakness enumerations, or CWEs) were particularly prominent among the 2025 KEV additions. The list is similar to last year, although CWE-787, CWE-79, and CWE-94 are new to the list this year.

- CWE-78 – Improper Neutralization of Special Elements used in an OS Command (‘OS Command Injection’) – was again the most common weakness among vulnerabilities added to the KEV database, accounting for 18 of the 245 vulnerabilities added in 2025.

- CWE-502 – Deserialization of Untrusted Data – again came in second, occurring in 14 of the vulnerabilities.

- CWE-22 – Improper Limitation of a Pathname to a Restricted Directory, or ‘Path Traversal’ – moved up to third place with 13 appearances.

- CWE-416 – Use After Free – slipped a spot to fourth and was behind 11 of the vulnerabilities.

- CWE-787 – Out-of-bounds Write – was a factor in 10 of the vulnerabilities.

- CWE-79 – Cross-site Scripting – appeared 7 times.

Conclusion

CISA’s Known Exploited Vulnerabilities catalog remains a valuable tool for helping IT security teams prioritize patching and vulnerability management efforts.

The CISA KEV catalog can also alert organizations to third-party risks – although by the time a vulnerability gets added to the database, it’s become an urgent problem requiring immediate attention. Third-party risk management (TPRM) solutions could provide earlier warnings about partner risk through audits and other tools.

Finally, software and application development teams should monitor CISA KEV additions to gain awareness of common software weaknesses that threat actors routinely target.

Take control of your vulnerability risk today — book a personalized demo to see how CISA KEV impacts your organization.

The post CISA Known Exploited Vulnerabilities Surged 20% in 2025 appeared first on Cyble.


The Week in Vulnerabilities: The Year Ends with an Alarming New Trend

Cyble Vulnerability Intelligence researchers tracked 1,782 vulnerabilities in the last week, the third straight week that new vulnerabilities have been growing at twice their long-term rate.

Over 282 of the disclosed vulnerabilities already have a publicly available Proof-of-Concept (PoC), significantly increasing the likelihood of real-world attacks on those vulnerabilities.

A total of 207 vulnerabilities were rated as critical under the CVSS v3.1 scoring system, while 51 received a critical severity rating based on the newer CVSS v4.0 scoring system.

Here are some of the top IT and ICS vulnerabilities flagged by Cyble threat intelligence researchers in recent reports to clients.

The Week’s Top IT Vulnerabilities

CVE-2025-66516 is a maximum severity XML External Entity (XXE) injection vulnerability in Apache Tika’s core, PDF and parsers modules. Attackers could embed malicious XFA files in PDFs to trigger XXE, potentially allowing for the disclosure of sensitive files, SSRF, or DoS without authentication.

CVE-2025-15047 is a critical stack-based buffer overflow vulnerability in Tenda WH450 router firmware version V1.0.0.18. The attack can potentially be launched remotely over the network with low complexity, and a public exploit exists, increasing the risk of widespread abuse.

Among the vulnerabilities added to CISA’s Known Exploited Vulnerabilities (KEV) catalog were:

- CVE-2025-14733, an out-of-bounds write vulnerability in WatchGuard Fireware OS that could enable remote unauthenticated attackers to execute arbitrary code.

- CVE-2025-40602, a local privilege escalation vulnerability due to insufficient authorization in the Appliance Management Console (AMC) of SonicWall SMA 1000 appliances.

- CVE-2025-20393, a critical remote code execution (RCE) vulnerability in Cisco AsyncOS Software affecting Cisco Secure Email Gateway and Cisco Secure Email and Web Manager appliances. The flaw has reportedly been actively exploited since late November by a China-linked APT group, which has deployed backdoors such as AquaShell, tunneling tools, and log cleaners to achieve persistence and remote access.

- CVE-2025-14847, a high-severity MongoDB vulnerability that’s been dubbed “MongoBleed” and reported to be under active exploitation. The Improper Handling of Length Parameter Inconsistency vulnerability could potentially allow uninitialized heap memory to be read by an unauthenticated client, potentially exposing data, credentials and session tokens.
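The weakness class behind the last item, improper handling of a length parameter that disagrees with the data actually received, can be illustrated generically. The sketch below is not MongoDB's code or wire format. It uses a made-up framing (a 4-byte little-endian length followed by a payload) to show the check whose absence lets a parser read past the bytes the client really sent.

```python
import struct


def read_length_prefixed(buffer):
    """Parse a 4-byte little-endian length followed by that many payload bytes."""
    if len(buffer) < 4:
        raise ValueError("buffer too short for length prefix")
    (declared,) = struct.unpack_from("<I", buffer, 0)
    available = len(buffer) - 4
    if declared > available:
        # Without this check, a lower-level parser would read memory it never received.
        raise ValueError(f"declared length {declared} exceeds {available} bytes received")
    return buffer[4:4 + declared]
```

In a memory-safe language the failure mode is an exception or truncated data. In C or C++, trusting the declared length is what exposes uninitialized heap memory.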

Vulnerabilities Under Discussion on the Dark Web

Cyble dark web researchers observed a number of threat actors sharing exploits and discussing weaponizing vulnerabilities on underground and cybercrime forums. Among the vulnerabilities under discussion were:

CVE-2025-56157, a critical default credentials vulnerability affecting Dify versions through 1.5.1, where PostgreSQL credentials are stored in plaintext within the docker-compose.yaml file. Attackers who access deployment files or source code repositories could extract these default credentials, potentially gaining unauthorized access to databases. Successful exploitation could enable remote code execution, privilege escalation, and complete data compromise.

CVE-2025-37164, a critical code injection vulnerability in HPE OneView. The unauthenticated remote code execution flaw affects HPE OneView versions 10.20 and prior due to improper control of code generation. The vulnerability exists in the /rest/id-pools/executeCommand REST API endpoint, which is accessible without authentication, potentially allowing remote attackers to execute arbitrary code and gain centralized control over the enterprise infrastructure.

CVE-2025-14558, a critical severity remote code execution vulnerability in FreeBSD’s rtsol(8) and rtsold(8) programs that is still awaiting NVD and CVE publication. The flaw occurs because these programs fail to validate domain search list options in IPv6 router advertisement messages, potentially allowing shell commands to be executed due to improper input validation in resolvconf(8). Attackers on the same network segment could potentially exploit this vulnerability for remote code execution; however, the attack does not cross network boundaries, as router advertisement messages are not routable.

CVE-2025-38352, a high-severity race condition vulnerability in the Linux kernel. This Time-of-Check Time-of-Use (TOCTOU) race condition in the posix-cpu-timers subsystem could allow local attackers to escalate privileges. The flaw occurs when concurrent timer deletion and task reaping operations create a race condition that fails to detect timer firing states.
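The FreeBSD rtsol(8)/rtsold(8) issue described above comes down to network-supplied domain names reaching a shell context without validation. As a generic illustration of the missing step, and not FreeBSD's actual fix, the sketch below accepts a search-list entry only if every label consists of letters, digits, hyphens, or underscores. The function name and the exact allowlist are assumptions.

```python
import re

# One DNS label: 1-63 characters, no leading or trailing hyphen.
_LABEL = re.compile(r"[A-Za-z0-9_](?:[A-Za-z0-9_-]{0,61}[A-Za-z0-9_])?")


def is_safe_search_domain(name):
    """True if `name` looks like a plain domain name with no shell metacharacters."""
    if name.endswith("."):
        name = name[:-1]  # tolerate a single trailing root dot
    if not name or len(name) > 253:
        return False
    return all(_LABEL.fullmatch(label) for label in name.split("."))
```

`fullmatch` is used instead of `^...$` anchors because `$` in Python also matches just before a trailing newline, which is exactly the kind of character an allowlist for shell-bound input needs to reject.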

ICS Vulnerabilities

Cyble threat researchers also flagged two industrial control system (ICS) vulnerabilities as meriting high-priority attention by security teams. They include:

CVE-2025-30023, a critical Deserialization of Untrusted Data vulnerability in Axis Communications Camera Station Pro, Camera Station, and Device Manager. Successful exploitation could allow an attacker to execute arbitrary code, conduct a man-in-the-middle-style attack, or bypass authentication.

Schneider Electric EcoStruxure Foxboro DCS Advisor is affected by CVE-2025-59287, a Deserialization of Untrusted Data vulnerability in Microsoft Windows Server Update Services (WSUS). Successful exploitation could allow for remote code execution, potentially resulting in unauthorized parties acquiring system-level privileges.

Conclusion

The persistently high number of new vulnerabilities observed in recent weeks is a worrisome new trend as we head into 2026. More than ever, security teams must respond with rapid, well-targeted actions to patch the most critical vulnerabilities and successfully defend IT and critical infrastructure. A risk-based vulnerability management program should be at the heart of those defensive efforts.

Other cybersecurity best practices that can help guard against a wide range of threats include segmentation of critical assets; removing or protecting web-facing assets; Zero-Trust access principles; ransomware-resistant backups; hardened endpoints, infrastructure, and configurations; network, endpoint, and cloud monitoring; and well-rehearsed incident response plans.

Cyble’s comprehensive attack surface management solutions can help by scanning network and cloud assets for exposures and prioritizing fixes, in addition to monitoring for leaked credentials and other early warning signs of major cyberattacks.

The post The Week in Vulnerabilities: The Year Ends with an Alarming New Trend appeared first on Cyble.

Cyble – Read More

The Week in Vulnerabilities: The Year Ends with an Alarming New Trend

Cyble Vulnerability Intelligence researchers tracked 1,782 vulnerabilities in the last week, the third straight week that new vulnerabilities have been growing at twice their long-term rate.

Over 282 of the disclosed vulnerabilities already have a publicly available Proof-of-Concept (PoC), significantly increasing the likelihood of real-world attacks on those vulnerabilities.

A total of 207 vulnerabilities were rated as critical under the CVSS v3.1 scoring system, while 51 received a critical severity rating based on the newer CVSS v4.0 scoring system.
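For readers tallying their own findings, the "critical" label corresponds to the qualitative severity scale published with CVSS, where a base score of 9.0 to 10.0 is rated Critical. The sketch below is a minimal illustration of that mapping; the function names are hypothetical and not part of any Cyble tooling.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS base score (0.0-10.0) to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score <= 3.9:
        return "Low"
    if score <= 6.9:
        return "Medium"
    if score <= 8.9:
        return "High"
    return "Critical"


def count_critical(scores: list[float]) -> int:
    """Count how many scores in a batch fall in the Critical band."""
    return sum(1 for s in scores if cvss_severity(s) == "Critical")
```

A vulnerability can land in different bands under v3.1 and v4.0 because the two versions compute the base score differently, which is one reason the two critical counts above differ.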

Here are some of the top IT and ICS vulnerabilities flagged by Cyble threat intelligence researchers in recent reports to clients.

The Week’s Top IT Vulnerabilities

CVE-2025-66516 is a maximum severity XML External Entity (XXE) injection vulnerability in Apache Tika’s core, PDF and parsers modules. Attackers could embed malicious XFA files in PDFs to trigger XXE, potentially allowing for the disclosure of sensitive files, SSRF, or DoS without authentication.

CVE-2025-15047 is a critical stack-based buffer overflow vulnerability in Tenda WH450 router firmware version V1.0.0.18. Attackers could potentially exploit it remotely over the network with low complexity, and a public exploit exists, increasing the risk of widespread abuse.

Among the vulnerabilities added to CISA’s Known Exploited Vulnerabilities (KEV) catalog were:

- CVE-2025-14733, an out-of-bounds write vulnerability in WatchGuard Fireware OS that could enable remote unauthenticated attackers to execute arbitrary code.

- CVE-2025-40602, a local privilege escalation vulnerability due to insufficient authorization in the Appliance Management Console (AMC) of SonicWall SMA 1000 appliances.

- CVE-2025-20393, a critical remote code execution (RCE) vulnerability in Cisco AsyncOS Software affecting Cisco Secure Email Gateway and Cisco Secure Email and Web Manager appliances. The flaw has reportedly been actively exploited since late November by a China-linked APT group, which has deployed backdoors such as AquaShell, tunneling tools, and log cleaners to achieve persistence and remote access.

- CVE-2025-14847, a high-severity MongoDB vulnerability that’s been dubbed “MongoBleed” and reported to be under active exploitation. The Improper Handling of Length Parameter Inconsistency vulnerability could potentially allow uninitialized heap memory to be read by an unauthenticated client, potentially exposing data, credentials and session tokens.

Vulnerabilities Under Discussion on the Dark Web

Cyble dark web researchers observed a number of threat actors sharing exploits and discussing weaponizing vulnerabilities on underground and cybercrime forums. Among the vulnerabilities under discussion were:

CVE-2025-56157, a critical default credentials vulnerability affecting Dify versions through 1.5.1, where PostgreSQL credentials are stored in plaintext within the docker-compose.yaml file. Attackers who access deployment files or source code repositories could extract these default credentials, potentially gaining unauthorized access to databases. Successful exploitation could enable remote code execution, privilege escalation, and complete data compromise.

CVE-2025-37164, a critical code injection vulnerability in HPE OneView. The unauthenticated remote code execution flaw affects HPE OneView versions 10.20 and prior due to improper control of code generation. The vulnerability exists in the /rest/id-pools/executeCommand REST API endpoint, which is accessible without authentication, potentially allowing remote attackers to execute arbitrary code and gain centralized control over the enterprise infrastructure.

CVE-2025-14558, a critical severity remote code execution vulnerability in FreeBSD’s rtsol(8) and rtsold(8) programs that is still awaiting NVD and CVE publication. The flaw occurs because these programs fail to validate domain search list options in IPv6 router advertisement messages, potentially allowing shell commands to be executed due to improper input validation in resolvconf(8). Attackers on the same network segment could potentially exploit this vulnerability for remote code execution; however, the attack does not cross network boundaries, as router advertisement messages are not routable.

CVE-2025-38352, a high-severity race condition vulnerability in the Linux kernel. This Time-of-Check Time-of-Use (TOCTOU) race condition in the posix-cpu-timers subsystem could allow local attackers to escalate privileges. The flaw occurs when concurrent timer deletion and task reaping operations create a race condition that fails to detect timer firing states.
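The CVE-2025-14558 entry above describes a general class of flaw: a name received from the network reaches a shell without validation. As a rough illustration of the defensive pattern (this is not the FreeBSD fix, and the function names are hypothetical), a program can accept only hostname-style domain names before passing a search list to anything that might interpret shell metacharacters:

```python
import re

# One DNS label: letters, digits, and hyphens, 1-63 characters,
# with no leading or trailing hyphen.
_LABEL = re.compile(r"(?!-)[A-Za-z0-9-]{1,63}(?<!-)")


def is_safe_search_domain(name: str) -> bool:
    """Return True only if `name` looks like a plain hostname-style domain."""
    if name.endswith("."):
        name = name[:-1]  # tolerate one trailing root dot
    if not name or len(name) > 253:
        return False
    # fullmatch rejects anything outside the allow-list, including
    # spaces, quotes, backticks, "$", ";" and embedded newlines.
    return all(_LABEL.fullmatch(label) for label in name.split("."))


def filter_search_list(domains: list[str]) -> list[str]:
    """Keep only the entries that pass the allow-list check."""
    return [d for d in domains if is_safe_search_domain(d)]
```

An allow-list is used here rather than a deny-list of known-dangerous characters, since it fails closed when an unexpected character appears.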

ICS Vulnerabilities

Cyble threat researchers also flagged two industrial control system (ICS) vulnerabilities as meriting high-priority attention by security teams. They include:

CVE-2025-30023, a critical Deserialization of Untrusted Data vulnerability in Axis Communications Camera Station Pro, Camera Station, and Device Manager. Successful exploitation could allow an attacker to execute arbitrary code, conduct a man-in-the-middle-style attack, or bypass authentication.

Schneider Electric EcoStruxure Foxboro DCS Advisor is affected by CVE-2025-59287, a Deserialization of Untrusted Data vulnerability in Microsoft Windows Server Update Services (WSUS). Successful exploitation could allow for remote code execution, potentially resulting in unauthorized parties acquiring system-level privileges.

Conclusion

The persistently high number of new vulnerabilities observed in recent weeks is a worrisome new trend as we head into 2026. More than ever, security teams must respond with rapid, well-targeted actions to patch the most critical vulnerabilities and successfully defend IT and critical infrastructure. A risk-based vulnerability management program should be at the heart of those defensive efforts.

Other cybersecurity best practices that can help guard against a wide range of threats include segmentation of critical assets; removing or protecting web-facing assets; Zero-Trust access principles; ransomware-resistant backups; hardened endpoints, infrastructure, and configurations; network, endpoint, and cloud monitoring; and well-rehearsed incident response plans.

Cyble’s comprehensive attack surface management solutions can help by scanning network and cloud assets for exposures and prioritizing fixes, in addition to monitoring for leaked credentials and other early warning signs of major cyberattacks.

The post The Week in Vulnerabilities: The Year Ends with an Alarming New Trend appeared first on Cyble.

Cyble – Read More

Integrating a Malware Sandbox into SOAR Workflows: Steps, Benefits, and Impact

SOAR helps teams move faster, but speed isn’t the real problem.

The real issue is figuring out what an alert actually means. A sandbox solves that by safely running the file or link and showing what it really does. With clear evidence in hand, playbooks make better decisions, triage moves quicker, and fewer incidents turn into long investigations.

Let’s walk through how teams use a sandbox inside SOAR, and what that means for faster decisions and lower risk.

Why a Sandbox Changes SOAR Outcomes

SOAR platforms are excellent at moving work forward. They trigger playbooks, route incidents, and enforce consistent response steps. What they don’t do well on their own is confirm what’s actually happening.

That gap matters. When alerts arrive with limited context, automation can only go so far. Teams hesitate, escalations increase, and playbooks stall while someone manually checks files, links, or indicators across multiple tools.

A sandbox changes this dynamic by adding behavior-based proof directly into the workflow. Instead of relying on assumptions or partial signals, SOAR receives concrete answers: what executed, what connected out, what dropped, and how risky it really is.

With that clarity:

- Triage decisions happen faster

- Playbooks trigger with more confidence

- Fewer cases get escalated “just in case”

In practice, SOAR stops being a traffic controller and starts acting like a decision engine, one that’s backed by real evidence, not guesses.

What a Sandbox Does Inside SOAR Workflows

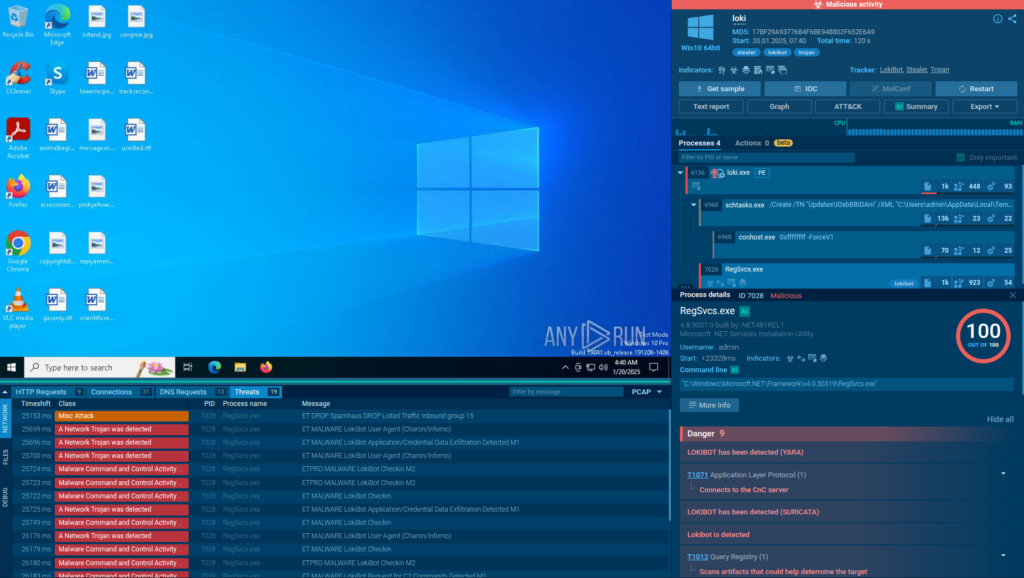

When integrated into SOAR, ANY.RUN’s sandbox covers a few critical steps that static tools alone can’t reliably handle:

- Validates alerts with real behavior: Suspicious files and links are executed in a safe environment to confirm whether they’re actually malicious. This replaces guesswork with evidence early in the workflow.

- Uncovers multi-stage and evasive attacks: Many threats reveal their intent only after redirects, downloads, or user interaction. A sandbox follows the full execution chain so SOAR can act on what truly happens, not what appears safe at first glance.

- Returns decision-ready context to playbooks: SOAR receives clear verdicts, risk scores, and indicators tied to observed behavior, giving playbooks the confidence to move forward without manual checks.

- Reduces unnecessary escalations: With reliable evidence available upfront, fewer cases are passed up the chain “just in case,” keeping response focused and queues under control.

- Enables safer automation: Once behavior is confirmed, SOAR can trigger containment, enrichment, and documentation steps with much lower risk of false positives.

Together, these capabilities allow SOAR workflows to run with more confidence and consistency, even during alert spikes, and without increasing operational overhead.

Where Sandbox-Driven SOAR Fits in Real Security Stacks

In enterprise environments, SOAR operates across SIEM, endpoint, and threat intelligence platforms. A sandbox fits into this layer as the system that validates behavior and feeds trusted context back into automation.

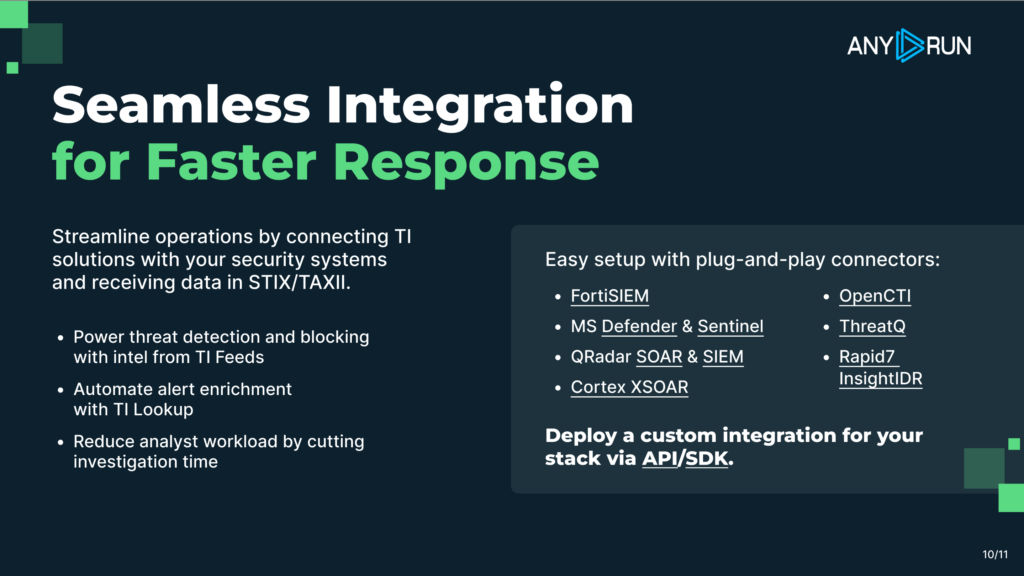

That’s why Interactive Sandbox integrations and connectors are designed to work directly inside widely used SOAR and security platforms, including FortiSOAR, Cortex XSOAR, Splunk SOAR, Microsoft Sentinel, IBM QRadar SOAR, and Google SecOps.

Within these environments, sandbox execution is triggered automatically from incidents or alerts. Files, URLs, and artifacts are analyzed in a safe environment, and the results (verdicts, risk scores, indicators, and behavioral context) are returned directly into the SOAR case.

This means teams don’t have to switch tools to understand what’s happening. Automation continues with confidence, response actions are triggered earlier, and threat intelligence is enriched as part of the same workflow.

Sandbox-driven SOAR is embedded into the platforms large organizations rely on today, making it easier to scale response without adding operational complexity.
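To make the workflow above concrete, here is a minimal sketch of a playbook step that branches on a sandbox result. The `SandboxResult` shape, the verdict strings, and the threshold of 80 are all assumptions made for illustration; they do not reflect the actual response schema of ANY.RUN or of any SOAR connector.

```python
from dataclasses import dataclass, field


@dataclass
class SandboxResult:
    """Hypothetical shape of an analysis result attached to a SOAR case."""
    verdict: str                      # "malicious", "suspicious", or "clean"
    risk_score: int                   # 0-100, higher is riskier
    indicators: list[str] = field(default_factory=list)


def next_action(result: SandboxResult, contain_threshold: int = 80) -> str:
    """Pick the playbook branch from observed behavior."""
    if result.verdict == "malicious" and result.risk_score >= contain_threshold:
        return "contain"              # e.g. isolate the host, block indicators
    if result.verdict in ("malicious", "suspicious"):
        return "escalate"             # analyst review, with evidence attached
    return "close"                    # clean: close the case and document why
```

In a real deployment the threshold and branch names would come from the team's own policy; the point of the sketch is only that the decision depends on the returned verdict and score, not on a manual check.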

From Faster Triage to Lower Risk: The Business Impact of Sandbox-Driven SOAR

When ANY.RUN’s sandbox is embedded into SOAR workflows, the impact goes beyond faster investigations. It changes how incidents are prioritized, handled, and closed with measurable effects at both the SOC and business level.

- Real-time threat visibility: Observe full attack chains as they unfold, with 90% of malicious activity exposed within the first 60 seconds, significantly accelerating mean time to detect (MTTD).

- Higher detection rates for evasive threats: Sandbox execution uncovers low-detection attacks, including multi-stage malware and interaction-dependent phishing, resulting in up to 58% more threats identified and fewer missed incidents.

- Lower MTTR across common incidents: With behavior confirmation available early, response steps trigger sooner and manual verification is removed from first-line playbooks, consistently shortening response cycles.

- Operational efficiency at scale: Automated sandbox execution reduces manual analysis time, cutting Tier 1 workload by up to 20% and allowing less experienced team members to handle more complex cases with confidence.

- Stronger performance during alert spikes: Evidence-driven automation keeps workflows stable during phishing waves or malware campaigns, helping teams avoid backlog growth and burnout.

- Clear business-level impact: Faster containment reduces the risk of lateral movement, data loss, and downtime, while automation lowers the cost per incident by minimizing repeated manual effort.

Turning Sandbox-Driven SOAR into a Scalable Security Strategy

SOAR works best when automation is backed by proof. By adding a sandbox into the workflow, teams replace uncertainty with clear behavior, shorten response cycles, and keep decisions consistent even under pressure.

With ready-made integrations across common SOAR and security platforms, sandbox-driven workflows fit naturally into existing stacks. The result is faster response, lower operational load, and reduced business risk, without expanding teams or tools.

See how sandbox-driven SOAR fits into your environment. Explore ANY.RUN’s Enterprise integrations and unified security workflows.

About ANY.RUN

ANY.RUN helps security teams make faster, clearer decisions when it matters most. The platform is trusted by over 500,000 security professionals and 15,000+ organizations across industries where response speed and accuracy are critical.

ANY.RUN’s Interactive Sandbox allows teams to safely execute suspicious files and links, observe real behavior in real time, and confirm threats before they escalate. Combined with Threat Intelligence Lookup and Threat Intelligence Feeds, it adds the context needed to prioritize alerts, reduce uncertainty, and stop advanced attacks earlier in the response cycle.

Start a 2-week ANY.RUN trial →

SOAR moves tickets fast but can’t tell you what’s really happening. A malware sandbox gives you the proof: what ran, what connected out, what files dropped. Your playbooks turn into decision engines instead of waiting on manual checks.

Connectors trigger the malware sandbox on alerts. You send files or URLs, and results come back fast: verdicts, risk scores, IOCs, TTPs. Playbooks use that to triage, contain, or close without humans jumping in.

Multi-stage phishing and evasive malware. A malware sandbox follows redirects and downloads to show the full chain, which static scans miss.

Yes. Tier 1 gets clear evidence upfront. They close 70% more cases without passing them up. No more “just in case” handoffs.

For ANY.RUN’s Interactive Sandbox, 90% of malicious behavior shows up in 60 seconds. Your playbooks act right away.

FortiSOAR, Cortex XSOAR, Splunk SOAR, Microsoft Sentinel, IBM QRadar SOAR, Google SecOps, and more.

Grab a 2-week trial. Pick your connector. Test it on real alerts. See the difference yourself.

The post Integrating a Malware Sandbox into SOAR Workflows: Steps, Benefits, and Impact appeared first on ANY.RUN’s Cybersecurity Blog.

ANY.RUN’s Cybersecurity Blog – Read More

5 Ways MSSPs Can Win Clients in 2026

By 2026, MSSPs will compete less on tooling and more on clarity, speed, and foresight. Security buyers want proof that their provider understands what threats matter now, how fast they can respond, and how security decisions reduce business risk.

At the center of this challenge sits threat intelligence. Not as a research output, but as an operational input shaping every security decision.

What Clients Will Demand This Year: The Five Deciding Factors

Winning deals in 2026 means excelling where others fall short. Prospects grill providers on these five areas before signing:

- Relentless Threat Blocking: They want evidence you’re stopping attacks they can’t see coming.

- Clean, Actionable Alerts: Too many false positives waste time and erode confidence.

- Blazing-Fast Incident Response: Slow containment turns small incidents into headline breaches.

- Forward-Leaning Threat Hunting: Reactive-only services feel outdated in a zero-trust world.

- Undeniable ROI Visibility: Executives need clear proof of risks blocked and value delivered.

Master these with actionable threat intelligence, and you’ll close more deals while your competitors struggle.

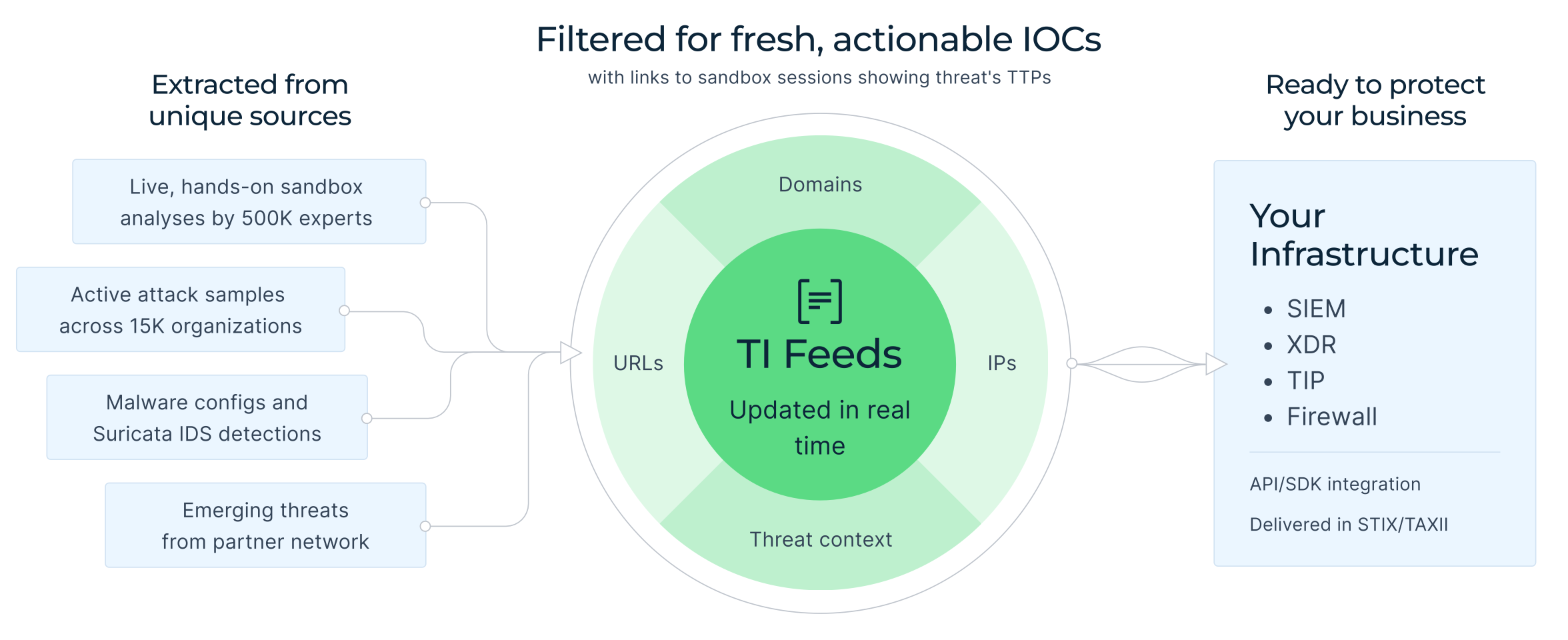

ANY.RUN’s Threat Intelligence Feeds contain real-time streams of malicious IPs, domains, and URLs pulled from millions of sandbox submissions, updated frequently to keep you ahead. TI Feeds are not background data. They are the operational core of detection, response, hunting, reporting, and proactive defense.

Here are the five ways to stand out by employing real-time trustworthy threat data.

1. Early awareness defines credibility

Clients rarely see the attacks you stop. They only notice the ones you miss. One successful intrusion can erase years of good service and trigger immediate vendor reassessment.

The difficulty lies in the speed of attacker adaptation. Malware changes quickly, infrastructure rotates, and indicators expire fast. Detection logic based on static or delayed intelligence struggles to keep pace.

What mature threat intelligence enables:

ANY.RUN’s TI Feeds deliver continuously updated indicators along with sandbox analyses documenting attacker behavior extracted from live malware execution. For MSSPs, this means:

- Detection rules that evolve as threats evolve;

- Faster identification of emerging malware families;

- Visibility into infrastructure used in active attacks;

- Reduced reliance on outdated or recycled indicators.

Instead of reacting to incidents reported elsewhere, your MSSP detects threats while competitors are still catching up. For clients, that difference is felt as safety, not statistics.

2. Response matters more than perfection

No MSSP can prevent every incident. What matters is how quickly and confidently you respond. Long investigations, unclear answers, and delayed containment create anxiety at the executive level.

Most delays come from context gaps. Analysts must validate indicators, understand attackers’ intent, and assess scope before acting. That takes time when intelligence is fragmented.

TI Feeds give response teams immediate access to:

- Fresh, validated indicators of compromise: malicious IPs, domains, and URLs continuously updated from real malware analysis by over 15K SOC teams;

- Contextual metadata including links to sandbox analysis sessions that provide additional insight into threat behavior;

- Intelligence that supports instant containment decisions;

- Formats compatible with SIEMs and security platforms (STIX, MISP) for seamless integration into existing workflows;

- API-enabled delivery so intelligence can feed automated detection and monitoring systems in real time.

This allows MSSPs to move from alert to action in minutes, not hours. Over time, clients associate your service with decisiveness and control. That perception is critical for renewal conversations.
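As an illustration of the STIX delivery mentioned in the list above, the sketch below pulls IP, domain, and URL values out of a STIX 2.1 bundle using only the standard library. It assumes indicators carry simple single-comparison patterns such as `[ipv4-addr:value = '198.51.100.7']`; the article does not show the feed's actual contents, so treat the function name and that assumption as hypothetical.

```python
import json
import re

# Matches simple single-comparison STIX patterns, for example
# [ipv4-addr:value = '198.51.100.7'] or [domain-name:value = 'bad.example'].
_SIMPLE_PATTERN = re.compile(
    r"\[\s*(ipv4-addr|domain-name|url):value\s*=\s*'([^']+)'\s*\]"
)


def extract_indicators(bundle_json: str) -> dict[str, set[str]]:
    """Group indicator values from a STIX bundle by observable type."""
    found: dict[str, set[str]] = {
        "ipv4-addr": set(),
        "domain-name": set(),
        "url": set(),
    }
    bundle = json.loads(bundle_json)
    for obj in bundle.get("objects", []):
        if obj.get("type") != "indicator":
            continue
        if obj.get("pattern_type", "stix") != "stix":
            continue
        match = _SIMPLE_PATTERN.fullmatch(obj.get("pattern", "").strip())
        if match:  # compound patterns (AND/OR) are skipped in this sketch
            found[match.group(1)].add(match.group(2))
    return found
```

The resulting sets can be loaded into a blocklist or a SIEM lookup table. A production integration would more likely use a dedicated STIX library and handle compound patterns.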

3. Proactive security becomes expected

By 2026, clients will not ask whether you offer threat hunting. They will assume you do. The real question will be whether your hunting produces meaningful results or just internal noise.

Without fresh intelligence, hunting teams often chase weak signals or outdated hypotheses. That leads to low impact and poor client communication.

With TI Feeds, MSSPs can anchor threat hunting in reality:

- Hunt for indicators tied to active attacker campaigns;

- Correlate client telemetry with known malicious behavior;

- Continuously refine hypotheses using new feed data;

- Demonstrate findings backed by observable attacker activity.

This makes threat hunting repeatable, scalable, and easier to justify commercially.
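The correlation step in the list above can be sketched as a simple lookup of client telemetry against feed indicators. The event fields (`host`, `query`) and the function name are hypothetical; real DNS or proxy logs will have their own schema.

```python
from collections import defaultdict


def correlate(events: list[dict], bad_domains: set[str]) -> dict[str, list[str]]:
    """Return {domain: [hosts that queried it]} for domains present in the feed."""
    hits: dict[str, list[str]] = defaultdict(list)
    # Normalize case and trailing dots so "Bad.Example." matches "bad.example".
    normalized = {d.lower().rstrip(".") for d in bad_domains}
    for event in events:
        domain = event["query"].lower().rstrip(".")
        if domain in normalized and event["host"] not in hits[domain]:
            hits[domain].append(event["host"])
    return dict(hits)
```

Each hit gives a hunter a concrete starting point: a client host, a domain seen in analyzed malware, and a reason to look closer.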

4. Reporting shapes executive perception

Executives don’t want dashboards full of alerts. They want confidence that risk is being reduced and managed. Poor reporting creates the impression that security work is abstract or disconnected from business reality.

In many cases, churn begins not after incidents, but after months of unclear reporting.

TI Feeds allow MSSPs to report on outcomes, not effort:

- Indicators with associated threat context help explain why a detected indicator matters;

- Detection timestamps and threat labels reveal whether an indicator is recent and connected to active campaigns;

- Consistent delivery of validated, actionable IOCs allows reports to reflect real threat activity, not noise.

Reports become stories of protection delivered, not lists of events processed. This is where MSSPs justify their value in language decision-makers understand.

5. Generic defense won’t hold clients

Clients increasingly expect their security posture to evolve alongside attacker behavior. Generic controls applied uniformly across clients feel outdated and inattentive. The challenge is keeping defenses current without overwhelming analysts.

By aligning TI Feeds with each client’s risk profile, MSSPs can:

- Base decisions on high-confidence threat data, minimizing distraction from low-value or false signals;

- Use enriched contextual data to explain how specific IOCs are connected to observed or emerging threats;

- Update detection logic as campaigns evolve.

Security stops being reactive and starts feeling anticipatory. Clients feel seen, protected, and prioritized. That emotional factor matters more than most technical metrics.

Final Thought: What Clients Are Willing to Pay For

MSSPs don’t lose clients because attackers exist. They lose clients because they fail to show awareness, speed, and progress.

In 2026, Threat Intelligence Feeds are the foundation of competitive MSSP services. They power better detection, faster response, meaningful hunting, credible reporting, and proactive protection. The key metrics demonstrate this clearly:

- Security teams report up to a 21‑minute reduction in MTTR per case, with automation-ready feeds accelerating triage and containment actions.

- Up to 58% more threats identified after integrating TI Feeds into detection rules and playbooks.

- Streamlined intelligence lets Tier 1 teams resolve more incidents independently, reducing escalations to senior analysts by 30%.

The MSSPs that win will be those who turn intelligence into visible outcomes their clients can trust month after month.

About ANY.RUN

Trusted by over 500,000 cybersecurity professionals and 15,000+ organizations in finance, healthcare, manufacturing, and other critical industries, ANY.RUN helps security teams investigate threats faster and with greater accuracy.

Our Interactive Sandbox accelerates incident response by allowing you to analyze suspicious files in real time, watch behavior as it unfolds, and make confident, well-informed decisions.

Our Threat Intelligence Lookup and Threat Intelligence Feeds strengthen detection by providing the context your team needs to anticipate and stop today’s most advanced attacks.

Start a 2-week ANY.RUN trial →

FAQ: Threat Intelligence Feeds for MSSPs

Because attackers move faster than manual analysis can handle. TI Feeds provide a continuous stream of fresh indicators and attacker behavior that allow MSSPs to detect, respond, and adapt in near real time—something clients will increasingly expect as standard service.

Unlike static or delayed sources, TI Feeds are updated continuously and reflect indicators observed in real malware executions. This helps MSSPs detect emerging threats earlier and avoid relying on outdated indicators.

Yes. By supplying validated IOCs and associated TTPs upfront, TI Feeds remove much of the uncertainty during investigations. Response teams spend less time validating alerts and more time containing threats.

TI Feeds give hunters access to indicators and techniques tied to active campaigns, making hunting more focused and defensible. This allows MSSPs to offer threat hunting as a repeatable, intelligence-driven service rather than ad hoc investigation.

They do. TI Feeds enable MSSPs to report on real threats detected, blocked campaigns, and changes in risk exposure. This turns reports into proof of value, which is critical for client retention and contract renewals.

Absolutely. By tracking evolving attacker behavior and infrastructure, TI Feeds help MSSPs keep detection rules updated and defenses aligned with current threats—before attacks reach client environments.

TI Feeds automate intelligence delivery. Instead of analysts manually researching each alert, intelligence is continuously ingested into security tools, allowing MSSPs to protect more clients without proportional growth in headcount.

The post 5 Ways MSSPs Can Win Clients in 2026 appeared first on ANY.RUN’s Cybersecurity Blog.

ANY.RUN’s Cybersecurity Blog – Read More

Release Notes: AI Sigma Rules, Live Threat Landscape & 1,700+ New Detections

ANY.RUN is wrapping up 2025 with updates that take pressure off your SOC and help your team work faster. You can now get AI‑generated Sigma rules, track threats by industry and region, and detect new campaigns with better speed and accuracy.

Let’s see what these improvements bring to your security stack.

Product Updates

Industry & Geo Threat Landscape in TI Lookup

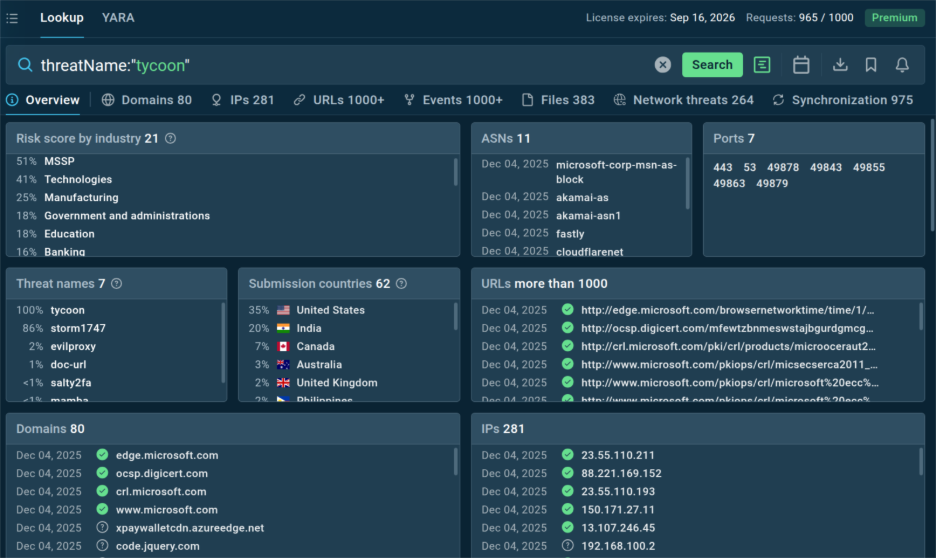

TI Lookup now gives every indicator extra context showing which industries and countries are linked to a threat and where similar activity is trending. It’s an easy way to see whether a threat actually affects your business or if it’s just background noise.

Built on live data from more than 15,000 organizations, this update helps your team tighten detection focus and reduce blind spots:

- See what matters first: Identify threats targeting your market or region so you can prioritize high‑risk activity.

- Triage faster: Skip irrelevant alerts and go straight to the ones that match your exposure.

- Work with better insight: Use targeted intelligence to guide hunts, automate enrichment, and improve MTTD.

With TI Lookup, you spot threats earlier, respond faster, and keep your attention where it counts.

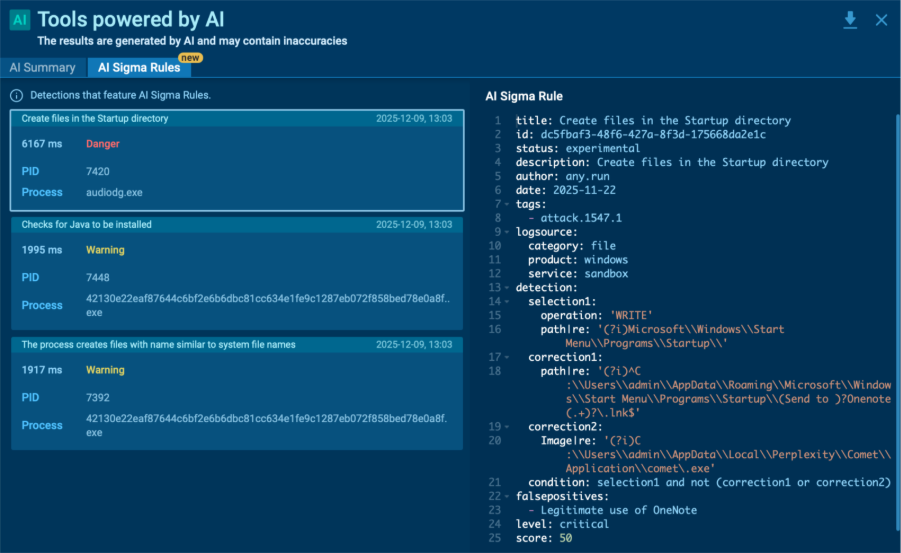

AI Sigma Rules in ANY.RUN Sandbox

The new AI Sigma Rules feature in the Interactive Sandbox turns your confirmed detections into ready‑to‑use Sigma rules automatically. Instead of spending hours writing them by hand, you can now take the rule straight from the sandbox and add it to your SIEM or SOAR in seconds.

The rules are created from the same processes, files, and network events you see in the sandbox, so they stay closely tied to real attacker behavior. That means better accuracy and quicker response without extra effort.

Here’s what you gain:

- Less manual work: Every confirmed threat instantly becomes a reusable detection rule.

- Better coverage: Each investigation now improves how your SOC spots similar attacks later.

- Faster action: Analysts spend less time writing rules and more time acting on real signals.
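For readers unfamiliar with the format, the sketch below shows the general shape of a Sigma rule built from observed process events. It is a hand-written illustration, not ANY.RUN's generator or its output: the function name and input fields are hypothetical. Sigma rules are normally written as YAML; the dictionary here mirrors that structure and can be serialized with any YAML or JSON library.

```python
import json


def sigma_from_process_events(title: str, events: list[dict]) -> dict:
    """Build a Sigma-style process_creation rule from observed process events."""
    # Keep only the executable name so the rule is not tied to one install path.
    images = sorted({"\\" + e["image"].rsplit("\\", 1)[-1] for e in events})
    fragments = sorted({e["cmdline_fragment"] for e in events})
    return {
        "title": title,
        "status": "experimental",
        "logsource": {"category": "process_creation", "product": "windows"},
        "detection": {
            # Fields inside one selection are ANDed; list values are ORed.
            "selection": {
                "Image|endswith": images,
                "CommandLine|contains": fragments,
            },
            "condition": "selection",
        },
        "level": "high",
    }


def to_json(rule: dict) -> str:
    """Serialize the rule for review or hand-off to a converter."""
    return json.dumps(rule, indent=2)
```

A rule like this still needs analyst review before deployment, since a selection that is too broad will generate false positives in a SIEM.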

Threat Coverage Updates

In December, our detection team rolled out another wave of coverage improvements with:

- 86 new behavior signatures

- 13 new YARA rules

- 1,686 new Suricata rules

These updates enhance phishing detection, expand coverage of stealers, loaders, and RATs, and clean up false positives across multi‑stage attacks.

New Behavior Signatures

Fresh signatures add visibility into persistence, lateral movement, and abuse of system tools seen across mixed environments.

Highlighted families include:

These detections help analysts catch miner and loader activity earlier and recognize evasion tricks like rundll32 abuse or PowerShell obfuscation.

New YARA Rules

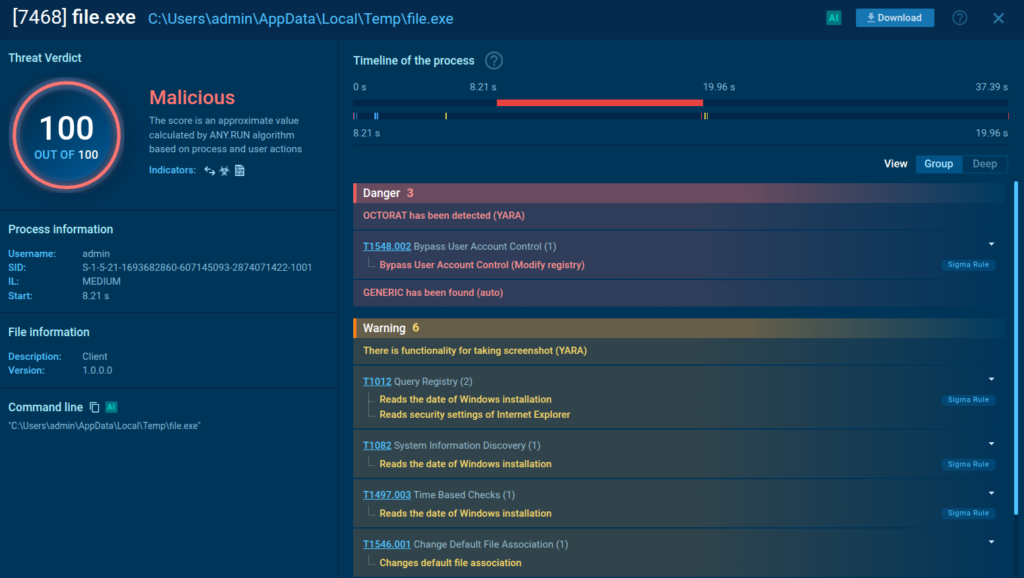

We added 13 YARA rules to improve detection across new malware strains and living‑off‑the‑land tools.

Highlighted families are STEAL1, SANTASTEALER, UNIXSTEALER, OCTORAT, DonutLoader.

These cover credential theft, modular loaders, and dual‑use administrative tools to ensure better coverage for both Windows and Linux‑based systems.

New Suricata Rules

We’ve added 1,686 Suricata rules targeting phishing, botnet activity, and evasive network behaviors often missed by standard IDS.

Together, these bring better coverage of C2 traffic, phishing domains, and low‑signal campaign infrastructure.

Businesses that are constantly being bombarded by hundreds of hacker attacks daily can upgrade their proactive defense with ANY.RUN’s Threat Intelligence Feeds.

Powered by sandbox analyses of the latest malware & phishing samples across 15K SOCs, they deliver fresh, real-time malicious network IOCs to numerous companies around the globe. Enriched with detailed sandbox reports, TI Feeds not only help you catch emerging threats early but also provide your analysts with actionable intelligence for fast remediation, boosting your detection rate and driving down the MTTR.

Threat Intelligence Reports

In December we published new TI Reports summarizing late‑year activity:

- End of Year Phishing (Available as part of the TI Lookup Premium subscription)

Each brief distills TTPs, campaigns, and IOCs from live submissions to help SOC teams anticipate what’s next.

About ANY.RUN

ANY.RUN powers SOCs at more than 15,000 organizations, giving them faster visibility into live threats through interactive sandboxing and cloud‑based intelligence.

Our Interactive Sandbox lets you analyze Windows, Linux, and Android samples in real time, watch the execution flow second‑by‑second, and pull IOCs instantly, no installs, no waiting. Combined with Threat Intelligence Lookup and Threat Intelligence Feeds, you get a single workflow built to speed up investigation, cut MTTD and MTTR, and keep your SOC focused on the right threats.

Start 2026 with faster detection, better threat intel, and less noise.

Request a trial of ANY.RUN’s products for your SOC.

The post Release Notes: AI Sigma Rules, Live Threat Landscape & 1,700+ New Detections appeared first on ANY.RUN’s Cybersecurity Blog.

ANY.RUN’s Cybersecurity Blog – Read More