Google Cloud Platform Data Destruction via Cloud Build

Background & Public Research

Google Cloud Platform (GCP) Cloud Build is a Continuous Integration/Continuous Deployment (CI/CD) service offered by Google that is utilized to automate the building, testing and deployment of applications. Orca Security published an article describing certain aspects of the threat surface posed by this service, including a supply chain attack vector they have termed “Bad.Build”. One specific issue they identified, that Cloud Build pipelines with the default Service Account (SA) could be utilized to discover all other permission assignments in a GCP project, was resolved by Google after Orca reported it. The general threat vector of utilizing Cloud Build pipelines to perform malicious actions, however, is still present. A threat actor with just the ability to submit and run a Cloud Build job (in other words, one who has the cloudbuild.builds.create permission) can execute any gcloud command line interface (CLI) command that the Cloud Build SA has the permissions to perform. A threat actor could perform these techniques either by obtaining credentials for a GCP user or SA (MITRE ATT&CK T1078.004) or by pushing or merging code into a repository with a Cloud Build pipeline configured (MITRE ATT&CK T1195.002). Orca Security focused on utilizing this threat vector to perform a supply chain attack by adding malicious code to a victim application in GCP Artifact Registry. Talos did not extensively examine this technique since Orca’s research was quite comprehensive, but confirmed it is still possible.

Original Research

Cisco Talos research detailed below focused on malicious actions enabled by the storage.* permission family, while the original Orca research detailed a supply chain attack scenario enabled by the artifactregistry.* permissions. Beyond the risk posed by the default permissions, any additional permissions assigned to the Cloud Build SA could potentially be leveraged by a threat actor with access to this execution vector, which is an area for potential future research. While Orca mentioned that a Cloud Build job could be triggered by a merge event in a code repository, in their article they utilized the gcloud Command Line Interface (CLI) tool to trigger the malicious build jobs they performed. Talos meanwhile utilized commits to a GitHub repository configured to trigger a Cloud Build job, since this is a form of Initial Access vector that would allow a threat actor to target GCP accounts without access to an identity principal for the GCP account.

Unlike the Orca Security findings, Talos does not assess that the attack path illustrated in the following research represents a vulnerability or badly architected service. There are legitimate business use cases for every capability and default permission that we utilized for malicious intent, and Google has provided robust security recommendations and defaults. This research should instead be taken as an instructive illustration of the risks posed by these capabilities for cloud administrators, who may be able to limit some of the features that were misused if they are not needed in a particular account. It can also be a guide for security analysts and investigators, who can monitor the Operations Log events identified, threat hunt for their misuse, or identify them in a post-incident response workflow.

Defensive Recommendations Summary

Talos recommends creating an anomaly model-style threat detection for the default Cloud Build SA performing actions that are not standard for it to execute in a specific environment. As always, properly applying the principle of least privilege by assigning Cloud Build a lower privileged Service Account with just the permissions needed in a particular environment will also reduce the threat surface identified here. Finally, review the configuration applied to any repositories that can trigger Cloud Build or other CI/CD service jobs, require manual approval for builds triggered by Pull Requests (PRs) and avoid allowing anyone to directly commit code to GitHub repositories without a PR. More details on all three of these topics are described below.

Lab Environment Setup

Talos has an existing Google Cloud Platform lab environment utilized for offensive security research. Within that environment, a Cloud Storage bucket was created and the Cloud Build Application Programming Interface (API) was enabled. Additionally, a target GitHub repository was created; for research purposes, this repository was set to private, but an actual adversary would likely take advantage of a public repository in most cases. The Secret Manager API is also needed for Cloud Build to integrate with GitHub, so that was also enabled. These actions can be performed with the gcloud and GitHub gh CLI tools using the following commands:

gcloud storage buckets create BUCKET_NAME --location=LOCATION --project=PROJECT_ID

gcloud services enable cloudbuild.googleapis.com --project=PROJECT_ID

gcloud services enable secretmanager.googleapis.com --project=PROJECT_ID

gh repo create REPO_NAME --private --description "Your repository description"

Next, to simulate a real storage bucket populated with data, a small shell script was executed that created 100 text files containing random data and transferred them to the new Cloud Storage bucket.

#!/bin/bash

# Set your bucket name here
BUCKET_NAME="data-destruction-research"

# Create a directory for the files
mkdir -p random_data_files

# Generate 100 files, each containing 10 MB of random data
for i in {1..100}
do
  FILE_NAME="random_data_files/file_$i.txt"
  # Use /dev/urandom to generate random data and `head` to limit it to 10 MB
  head -c $((10*1024*1024)) </dev/urandom > "$FILE_NAME"
  echo "Generated $FILE_NAME"
done

# Upload the files to the GCP bucket
for FILE in random_data_files/*
do
  gsutil cp "$FILE" "gs://$BUCKET_NAME/"
  echo "Uploaded $FILE to gs://$BUCKET_NAME/"
done

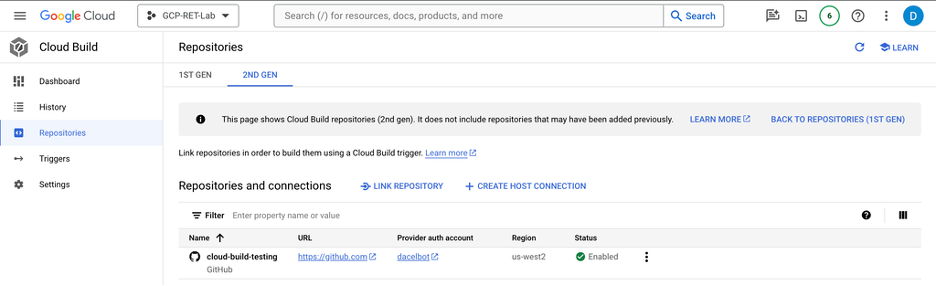

Then the GitHub repository and Cloud Build were integrated by creating a connection between the two resources, which can be done using the following command, followed by authenticating and granting access on the GitHub.com side.

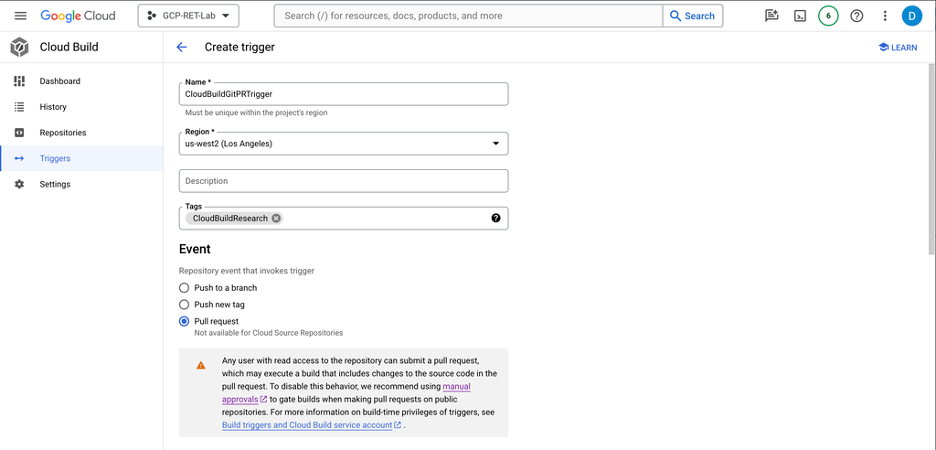

gcloud builds connections create github CONNECTION_NAME --region=REGION

Finally, a Cloud Build “trigger” was configured to start a build when code is pushed to the main branch, a pull request (PR) is created, or code is committed to an existing pull request in the victim GitHub repository. This can be done using the following gcloud command:

# Use --tag-pattern=TAG_PATTERN instead of --branch-pattern to trigger on tags
gcloud builds triggers create github \
  --name=TRIGGER_NAME \
  --repository=projects/PROJECT_ID/locations/us-west1/connections/data-destruction/repositories/REPO_NAME \
  --branch-pattern=BRANCH_PATTERN \
  --build-config=BUILD_CONFIG_FILE \
  --region=us-west1

Defensive Notes

Google’s documentation recommends requiring manual approval to trigger a build when the creation of a PR or a commit to a PR is used as a trigger condition, since any user who can read a repo can submit a PR. This is excellent advice that should be followed whenever possible, and reviewers should be made aware of the threat surface posed by Cloud Build. For the purpose of illustrating the potential threat vector here, this advice was not heeded and manual approval was not set up in the Talos lab environment. Builds can also be triggered when a PR is merged into the main branch, which is another reason, besides protecting the integrity of the repository, that an approving PR review should be required before PRs are merged.

There may be real-world scenarios where a valid business case exists to allow automatic build events when a PR is created, which is why a proper defense-in-depth strategy should include monitoring the events performed by any Service Accounts assigned to Cloud Build. Google also offers the ability to require that a comment containing the string “/gcbrun” be made on a PR before the Cloud Build run is triggered. This requirement can be configured so that the comment must come from a user with the GitHub repository owner or collaborator role, or so that it can come from any contributor. This is another strong security feature that should be configured with the owner or collaborator option selected if possible. If performing a penetration test or red teaming engagement and attempting to target GCP via a GitHub PR, it may be worth commenting that string on your malicious PR in case the Cloud Build trigger is configured to allow any contributor this privilege.
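The trigger-side controls described above can be sketched with the same gcloud command used in the lab setup. This is a minimal sketch rather than the configuration Talos used: the upper-case values are placeholders, SA_EMAIL names a hypothetical dedicated low-privilege service account, the `^main$` pattern is an assumed target branch, and the `run` helper is an illustrative wrapper that only prints the command unless DRY_RUN=0 is set. COMMENTS_ENABLED is, as far as we understand the gcloud reference, the option that requires a repository owner or collaborator to comment before a PR build runs.

```shell
#!/bin/sh
# Sketch only: every upper-case value is a placeholder, and SA_EMAIL names a
# hypothetical dedicated, low-privilege service account.
PROJECT_ID="PROJECT_ID"
REGION="us-west1"
CONNECTION_NAME="CONNECTION_NAME"
REPO_NAME="REPO_NAME"
SA_EMAIL="SA_NAME@${PROJECT_ID}.iam.gserviceaccount.com"

# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Pull request trigger that needs both a comment from an owner or
# collaborator and a manual approval, and runs as the dedicated account.
run gcloud builds triggers create github \
  --name="TRIGGER_NAME" \
  --repository="projects/${PROJECT_ID}/locations/${REGION}/connections/${CONNECTION_NAME}/repositories/${REPO_NAME}" \
  --pull-request-pattern='^main$' \
  --comment-control=COMMENTS_ENABLED \
  --require-approval \
  --build-config="cloudbuild.yaml" \
  --service-account="projects/${PROJECT_ID}/serviceAccounts/${SA_EMAIL}" \
  --region="${REGION}"
```

Builds that run as a user-specified service account typically also need an explicit logging option in the build configuration, so check Google's documentation for the current requirement before relying on this form.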

Research & Recommendations

Data Destruction (MITRE ATT&CK T1485)

Talos has previously covered data destruction for impact within GCP Cloud Storage during the course of a Purple Teaming engagement focused on GCP. This research expands upon that work by utilizing the GitHub-to-Cloud Build execution path. The first specific behavior performed, deleting a Cloud Storage bucket, can be performed using the following gcloud command:

gcloud storage rm --recursive gs://BUCKET_NAME

To perform this via a Cloud Build pipeline, Orca’s simple Proof of Concept (PoC) example Cloud Build configuration file, itself in turn based on the example in Google’s documentation, was modified slightly as follows:

steps:
- name: 'gcr.io/cloud-builders/gcloud'
  args: ['storage', 'rm', '--recursive', 'gs://BUCKET_NAME']

This YAML file was then committed to a GitHub branch and a PR was created for it, which can be done utilizing the following commands:

git clone <repo URL>

cd <repo name>

git checkout -b <branch name>

cp ../totally-not-data-destruction.yaml cloudbuild.yaml

git add cloudbuild.yaml

git commit -m "Not going to do anything bad at all"

git push --set-upstream origin <branch name>

gh pr create

In the Orca Security research, a Google Cloud Storage (GCS) bucket for the Cloud Build runtime logs is required. Since threat actors typically wish to avoid leaving forensic artifacts behind, they may choose to specify a GCS bucket in a different GCP account under their control. This provides a detection opportunity by looking for the utilization of an external GCS bucket in a Cloud Build event, assuming all the storage buckets in the account are known. However, when running a Cloud Build job via a GitHub or other repository trigger, specifying a GCS bucket for storing logs is not required.

GCP offers the ability to configure “Soft Delete”, a feature that enables the restoration of accidentally or maliciously deleted GCS buckets and objects for a configurable time period. This is a strong security feature and should be enabled whenever possible. However, as is noted in Google’s official documentation, when a bucket is deleted, its name becomes available for use again, and if that name is claimed during the creation of a new bucket, it will no longer be possible to restore the deleted GCS bucket. To truly destroy data in a GCS bucket, an adversary therefore just needs to immediately create a new bucket with the same name after deleting the previous one.
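For defenders who want to confirm that Soft Delete is actually configured on a bucket, the following is a minimal sketch using the gcloud CLI. BUCKET_NAME is a placeholder, the seven-day RETENTION value is an assumption to be replaced with whatever local policy requires, and the `run` helper is an illustrative wrapper that only prints each command unless DRY_RUN=0 is set.

```shell
#!/bin/sh
BUCKET_NAME="BUCKET_NAME"   # placeholder
RETENTION="7d"              # assumed retention window; choose per local policy

# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Show the bucket's current soft delete policy.
run gcloud storage buckets describe "gs://${BUCKET_NAME}" \
  --format="default(soft_delete_policy)"

# Set (or extend) the soft delete retention duration.
run gcloud storage buckets update "gs://${BUCKET_NAME}" \
  --soft-delete-duration="${RETENTION}"
```

As the paragraph above notes, this setting alone does not protect against an adversary who re-creates the bucket name, so it is best paired with monitoring.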

The Cloud Build configuration file can be updated to accomplish this as follows:

steps:
- name: 'gcr.io/cloud-builders/gcloud'
  args: ['storage', 'rm', '--recursive', 'gs://BUCKET_NAME']
- name: 'gcr.io/cloud-builders/gcloud'
  args: ['storage', 'buckets', 'create', 'gs://BUCKET_NAME', '--location=BUCKET_LOCATION']

Defensive Notes

Log Events

All of the events discussed above are logged by Google Operations Logs. The following Operations Logs events were identified during the research:

- google.devtools.cloudbuild.v1.CloudBuild.RunBuildTrigger

  - This event logs the creation of a new build via a connection trigger, such as the GitHub Pull Request trigger method discussed above. This will be very useful for a Digital Forensics & Incident Response (DFIR) investigator as part of an investigation, but is unlikely to be a good basis for a threat detection.

- google.devtools.cloudbuild.v1.CloudBuild.CreateBuild

  - This event logs the manual creation of a new build, and indicates what identity principal triggered the event. If the build was manually triggered and has a GCS bucket specified as a destination for build logs, that bucket’s name will be specified in the field protoPayload.request.build.logsBucket (for example, protoPayload.request.build.logsBucket="gs://gcb_testing"). If this field is present, a threat detection or threat hunting query for unknown buckets outside known infrastructure may be of use. Additionally, as with most cloud audit log events, significant quantities of failed CreateBuild events followed by a successful event may be indicative of an adversary attempting to discover new capabilities or escalate privileges. Otherwise, since this is a perfectly legitimate event, like RunBuildTrigger it will primarily be of use for DFIR investigations rather than threat detections.

- storage.buckets.delete

  - An event with this methodName value logs the deletion of a GCS bucket. It is automatically of interest during the course of a DFIR investigation that involves data destruction, and may be worth threat hunting for if the value of protoPayload.authenticationInfo.principalEmail is the default Cloud Build Service Account. This is not automatically worthy of a threat detection, as it can be legitimate for Cloud Build to use a GCS bucket to store temporary data and delete it after the build is complete, but it is likely a good candidate for an anomaly model detection.

- storage.buckets.create

  - While relatively uninteresting in isolation, if this event shortly follows a storage.buckets.delete event for the same bucket name, it may be indicative of an attempt to bypass the protections offered by Soft Delete, as described above. This may be automatically detection-worthy, and would definitely be a useful threat hunting or DFIR investigation query.
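The hunting ideas in the list above can be sketched in shell. This is an illustrative sketch, not a production detection. It assumes the legacy default Cloud Build service account, whose address has the form PROJECT_NUMBER@cloudbuild.gserviceaccount.com, and it assumes the audit log entries have already been exported and reduced to three whitespace-separated columns (epoch seconds, method name, bucket name) sorted by time. The function names and the 300-second window are hypothetical choices made for the example.

```shell
#!/bin/sh
# Build a Cloud Logging filter for bucket deletions performed by the
# (assumed) legacy default Cloud Build service account.
cloud_build_delete_filter() {
  project_number="$1"
  printf 'protoPayload.methodName="storage.buckets.delete" AND protoPayload.authenticationInfo.principalEmail="%s@cloudbuild.gserviceaccount.com"\n' "$project_number"
}

# Example hunting query (not executed here):
#   gcloud logging read "$(cloud_build_delete_filter PROJECT_NUMBER)" \
#     --project=PROJECT_ID --freshness=7d --format=json

# Read lines of "EPOCH_SECONDS METHOD_NAME BUCKET" on stdin, sorted by time,
# and print every bucket that was re-created within WINDOW seconds of being
# deleted (default: 300 seconds).
find_recreated_buckets() {
  window="${1:-300}"
  awk -v window="$window" '
    $2 == "storage.buckets.delete" { deleted[$3] = $1; next }
    $2 == "storage.buckets.create" && ($3 in deleted) &&
      ($1 - deleted[$3] <= window) { print $3 }
  '
}
```

A usage example would be `find_recreated_buckets 300 < events.txt`, where events.txt is a hypothetical file in the three-column form described above. Converting raw audit log JSON into that form is left to whatever log tooling is already in place.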

Data Encrypted for Impact (MITRE ATT&CK T1486)

Cloud object storage is not inherently immune to ransomware, but despite concerns about the potential for “ransomcloud” attacks and other similar threat vectors, it is actually quite difficult to irreversibly encrypt objects in a cloud storage bucket. It is much more likely that a data-focused cloud impact attack will involve exfiltrating the objects and deleting them before offering them back in exchange for a ransom, rather than encrypting them. In Google Cloud Storage, all objects are encrypted by default using a Google-managed encryption key, but GCS also supports three other methods of encrypting objects. These methods are server-side encryption using the Cloud Key Management Service (CKMS) to manage the keys, also known as customer-managed encryption; server-side encryption using a customer-provided key that is not stored in the cloud account at all; and client-side encryption of the objects. With customer-managed encryption, if an adversary implements this approach, the legitimate owner of the objects will have access to the CKMS and be able to decrypt them. With either a customer-provided encryption key or client-side encryption, an adversary may be able to overwrite the original versions of the objects, though if the bucket has Object Versioning enabled, an administrator can simply revert to a previous version of the object to retrieve it.

If an adversary is able to identify a bucket that does not have Object Versioning configured, they may be able to utilize the Cloud Build attack vector described previously to encrypt existing objects with a customer provided key that they control. This is possible using the following gcloud CLI command:

gcloud storage cp SOURCE_DATA gs://BUCKET_NAME/OBJECT_NAME --encryption-key=YOUR_ENCRYPTION_KEY

This was performed by updating the previously described cloudbuild.yaml file with entries for 10 objects in the bucket, then triggering another build. In an actual attack, enumeration of the stored objects followed by encryption of all of them would be required.
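For readers reproducing this in a lab, the YOUR_ENCRYPTION_KEY placeholder for a customer-provided key is a base64-encoded AES-256 key, meaning 32 random bytes. The following minimal sketch shows one way such a value could be generated; the function name generate_csek is hypothetical, and this is not necessarily how the key was produced in the Talos lab.

```shell
#!/bin/sh
# Generate 32 random bytes (an AES-256 key) and base64-encode them.
generate_csek() {
  head -c 32 /dev/urandom | base64 | tr -d '\n'
}

CSEK="$(generate_csek)"
# Report only the encoded length, never the key itself.
echo "Generated a key of ${#CSEK} base64 characters"
```

Whoever holds this value is the only party able to read objects written with it, which is what makes the technique viable for an adversary when Object Versioning is not enabled.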

Defensive Notes

The creation of the new encrypted file was logged with an event of type storage.objects.create, but unfortunately there was no indication in the event’s body that a customer-provided encryption key was utilized for encryption. Therefore, there was nothing especially anomalous about the event for a detection or investigator to look for. However, this whole attack vector can again be obviated by enabling Object Versioning and Soft Delete, so doing so is highly recommended.
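Checking and enabling Object Versioning on a bucket can be sketched as follows. BUCKET_NAME is a placeholder, and the `run` helper is an illustrative wrapper that only prints each command unless DRY_RUN=0 is set.

```shell
#!/bin/sh
BUCKET_NAME="BUCKET_NAME"   # placeholder

# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Show whether Object Versioning is currently enabled on the bucket.
run gcloud storage buckets describe "gs://${BUCKET_NAME}" \
  --format="default(versioning_enabled)"

# Enable Object Versioning so that overwritten objects remain recoverable.
run gcloud storage buckets update "gs://${BUCKET_NAME}" --versioning
```

Versioning retains earlier generations of overwritten objects, so storage costs will grow unless lifecycle rules are also considered.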
