How scammers have mastered AI: deepfakes, fake websites, and phishing emails | Kaspersky official blog

While AI presents endless new opportunities, it also introduces a whole array of new threats. Generative AI allows malicious actors to create deepfakes and fake websites, send spam, and even impersonate your friends and family. This post covers how neural networks are being used for scams and phishing, and, of course, we’ll share tips on how to stay safe. For a more detailed look at AI-powered phishing schemes, check out the full report on Securelist.

Pig butchering, catfishing, and deepfakes

Scammers are using AI bots that pretend to be real people, especially in romance scams. They create fabricated personas and use them to communicate with multiple victims simultaneously to build strong emotional connections. This can go on for weeks or even months, starting with light flirting and gradually shifting to discussions about “lucrative investment opportunities”. The long-term personal connection helps dissolve any suspicions the victim might have, but the scam, of course, ends once the victim invests their money in a fraudulent project. These kinds of fraudulent schemes are known as “pig butchering”, which we covered in detail in a previous post. While they were once run by huge scam farms in Southeast Asia employing thousands of people, these scams now increasingly rely on AI.

Neural networks have made catfishing, where scammers create a fake identity or impersonate a real person, much easier. Modern generative neural networks can imitate a person’s appearance, voice, or writing style accurately enough to be convincing. All a scammer needs to do is gather publicly available information about a person and feed that data to the AI. Almost anything can be useful: photos, videos, public posts and comments, information about relatives, hobbies, age, and so on.

So, if a family member or friend messages you from a new account and, say, asks you to lend them money, there’s a good chance it isn’t really them. In a situation like that, the best thing to do is reach out to the real person through a different channel (for example, by calling them) and ask them directly if everything’s okay. Asking a few personal questions that a scammer wouldn’t be able to answer from what’s online or even in your past messages is another smart thing to do.

But convincing text impersonation is only part of the problem — audio and video deepfakes are an even bigger threat. We recently shared how scammers use deepfakes of popular bloggers and crypto investors on social media. These fake celebrities invite followers to “personal consultations” or “exclusive investment chats”, or promise cash prizes and expensive giveaways.

Social media isn’t the only place where deepfakes are being used, though. They’re also being generated for real-time video and audio calls. Earlier this year, a Florida woman lost US$15,000 after thinking she was talking to her daughter, who’d supposedly been in a car accident. The scammers used a realistic deepfake of her daughter’s voice, and even mimicked her crying.

Experts from Kaspersky’s GReAT found offers on the dark web for creating real-time video and audio deepfakes. The price of these services depends on how sophisticated and long the content needs to be, starting at just US$30 for voice deepfakes and US$50 for videos. Just a couple of years ago, these services cost a lot more (up to US$20,000 per minute), and real-time generation wasn’t an option.

The listings offer different options: real-time face swapping in video conferences or messaging apps, face swapping for identity verification, or replacing an image from a phone or virtual camera.

Scammers also offer tools for lip-syncing any text in a video, even in foreign languages, as well as voice-cloning tools that can change tone and pitch to match a desired emotion.

However, our experts suspect that many of these dark-web listings might be scams themselves — designed to trick other would-be scammers into paying for services that don’t actually exist.

How to stay safe

- Don’t trust online acquaintances you’ve never met in person. Even if you’ve been chatting a while and feel like you’ve found a “kindred spirit”, be wary if they bring up crypto, investments, or any other scheme that requires you to send them money.

- Don’t fall for unexpected, appealing offers seemingly coming from celebrities or big companies on social media. Always go to their official accounts to double-check the information. Stop if, at any point in a “giveaway”, you’re asked to pay a fee, tax, or shipping cost, or to enter your credit card details to receive a cash prize.

- If friends or relatives message you with unusual requests, contact them through a different channel, such as a phone call. To be safe, ask them about something you talked about during your latest real-life conversation. For close friends and family, it’s a good idea to agree on a code word beforehand that only the two of you know. If you share your location with each other, check it and confirm where the person is. And don’t fall for the “hurry up” manipulation: the scammer or AI might tell you the situation is urgent and they don’t have time to answer “silly” questions.

- If you have doubts during a video call, ask the person to turn their head sideways or make a complicated hand movement. Deepfakes usually can’t fulfill such requests without breaking the illusion. Also, if the person isn’t blinking, or their lip movements or facial expressions seem strange, that’s another red flag.

- Never dictate or otherwise share bank-card numbers, one-time codes, or any other confidential information.

An example of a deepfake falling apart when the head turns.

Automated calls

Automated calls are an efficient way to trick people without having to talk with them directly. Scammers are using AI to make fake automated calls from banks, wireless carriers, and government services. On the other end of the line is just a bot pretending to be a support agent. It feels real because many legitimate companies use automated voice assistants. However, a real company will never call you to say your account was hacked or ask for a verification code.

If you get a call like this, the key thing is to stay calm. Don’t fall for scare tactics like “a hacked account” or “stolen money”. Just hang up, and call the genuine company using the official number on its website. Keep in mind that modern scams can involve multiple people who pass you from one to another. They might call or text from different numbers and pretend to be bank employees, government officials, or even the police.

Phishing-susceptible chatbots and AI agents

Many people now prefer to use chatbots like ChatGPT or Gemini instead of familiar search engines. What could be the risks, you might ask? Well, large language models are trained on user data, and popular chatbots have been known to suggest phishing sites to users. When they perform web searches, AI agents connect to search engines that can also contain phishing links.

In a recent experiment, researchers were able to trick the AI agent in the Comet browser by Perplexity with a fake email. The email was supposedly from an investment manager at Wells Fargo, one of the world’s largest banks. The researchers sent the email from a newly created Proton Mail account. It included a link to a real phishing page that had been active for several days but was yet to be flagged as malicious by Google Safe Browsing. While going through the user’s inbox, the AI agent marked the message as a “to-do item from the bank”. Without any further checks, it followed the phishing link, opened the fake login page, and then prompted the user to enter their credentials; it even helped fill out the form! The AI essentially vouched for the phishing page. The user never saw the suspicious sender’s email address or the phishing link itself. Instead, they were immediately taken to a password entry page given by the “helpful” AI assistant.

In the same experiment, the researchers used the AI-powered web development platform Lovable to create a fake website that mimicked a Walmart store. They then visited the site in Comet, something an unsuspecting user could easily do if they were fooled by a phishing link or ad. They asked the AI agent to buy an Apple Watch. The agent analyzed the fake site, found a “bargain”, added the watch to the cart, entered the address and bank card information stored in the browser, and completed the “purchase” without asking for any confirmation. If this had been a real fraudulent site, the user would have lost a chunk of change and handed their banking details to the scammers on a silver platter.

Unfortunately, AI agents currently behave like naive newcomers on the Web, easily falling for social engineering. We’ve talked in detail before about the risks of integrating AI into browsers and how to minimize them. But as a reminder, to avoid becoming the next victim of an overly trusting assistant, you should critically evaluate the information it provides, limit the permissions you give to AI agents, and install a reliable security solution that will block access to malicious sites.
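
To make "limit the permissions you give to AI agents" a bit more concrete, here is a minimal Python sketch of a permission gate that sits between an agent and the actions it wants to take. It is purely illustrative: `AgentAction`, `review_action`, the allowlist, and the action names are all hypothetical, and it does not describe how Comet or any real AI browser works.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit

# Hypothetical policy: hosts the user has explicitly approved for the agent.
ALLOWED_HOSTS = {"www.walmart.com", "www.wellsfargo.com"}

# Hypothetical action names that should never run without the user's say-so.
SENSITIVE_ACTIONS = {"enter_credentials", "submit_payment"}


@dataclass(frozen=True)
class AgentAction:
    kind: str  # e.g. "open_page", "enter_credentials", "submit_payment"
    url: str   # the page the agent wants to act on


def review_action(action: AgentAction, user_confirmed: bool = False) -> str:
    """Return "allow", "ask_user", or "block" for a proposed agent action."""
    host = urlsplit(action.url).hostname or ""

    # Anything outside the allowlist is refused, however convincing the page looks.
    if host not in ALLOWED_HOSTS:
        return "block"

    # Even on an approved site, payments and logins need explicit confirmation.
    if action.kind in SENSITIVE_ACTIONS and not user_confirmed:
        return "ask_user"

    return "allow"
```

With a gate like this, the fake-store purchase described above would stop at the first check, because the lookalike host is not on the allowlist. A purchase on an approved site would still pause and wait for the user to confirm.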

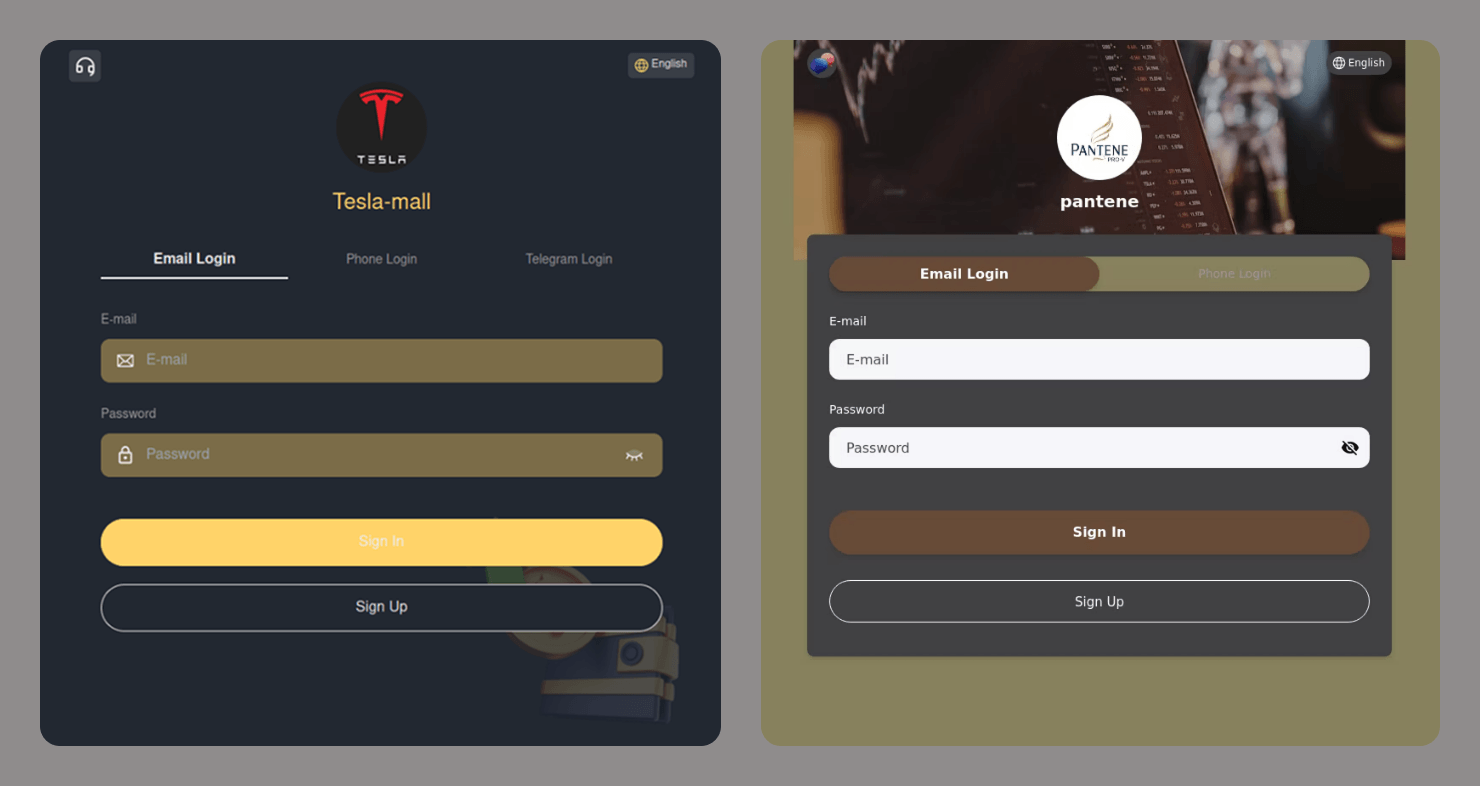

AI-generated phishing websites

The days of sketchy, poorly designed phishing sites loaded with intrusive ads are long gone. Modern scammers do their best to create realistic fakes which use the HTTPS protocol, show user agreements and cookie consent warnings, and have reasonably good designs. AI-powered tools have made creating such websites much cheaper and faster, if not nearly instantaneous. You might find a link to one of these sites anywhere: in a text message, an email, on social media, or even in search results.

How to spot a phishing site

- Check the URL, title, and content for typos.

- Find out how long the website’s domain has been registered. You can check this with a WHOIS lookup service. A domain registered only days or weeks ago is a warning sign.

- Pay attention to the language. Is the site trying to scare or accuse you? Is it trying to lure you in, or rushing you to act? Any emotional manipulation is a big red flag.

- Enable the link-checking feature in any of our security solutions.

- If your browser warns you about an unsecured connection, leave the site. Legitimate sites use the HTTPS protocol, but HTTPS alone doesn’t prove a site is trustworthy, since modern phishing sites use it too.

- Search for the website name online and compare the URL you have with the one in the search results. Be careful, as search engines might show sponsored phishing links at the top of the page. Make sure there is no “Ad” or “Sponsored” label next to the link.
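
Some of the checks above can be partly automated. The following Python sketch flags a URL that isn't HTTPS, whose domain closely resembles a trusted one, or whose domain was registered very recently. Everything specific in it is an assumption made for illustration: the `TRUSTED_DOMAINS` list, the 0.85 similarity threshold, the 30-day age cutoff, and the naive way of extracting the domain. The registration date has to come from a separate WHOIS lookup, which the sketch does not perform.

```python
from __future__ import annotations

from datetime import date
from difflib import SequenceMatcher
from urllib.parse import urlsplit

# Assumed values, chosen only for this example.
TRUSTED_DOMAINS = {"walmart.com", "wellsfargo.com"}
LOOKALIKE_RATIO = 0.85      # similarity above which a domain counts as a lookalike
MIN_DOMAIN_AGE_DAYS = 30    # younger domains are treated as suspicious


def registered_domain(host: str) -> str:
    """Naive: keep the last two labels. Real code should use the Public Suffix List."""
    return ".".join(host.lower().rstrip(".").split(".")[-2:])


def phishing_warnings(url: str, registered_on: date | None = None,
                      today: date | None = None) -> list[str]:
    """Return a list of human-readable red flags for the given URL."""
    warnings = []
    parts = urlsplit(url)
    domain = registered_domain(parts.hostname or "")

    # An unencrypted connection is a red flag (HTTPS alone proves nothing, though).
    if parts.scheme != "https":
        warnings.append("connection is not HTTPS")

    # A near-miss of a trusted domain suggests a typo-based fake.
    if domain not in TRUSTED_DOMAINS:
        for trusted in sorted(TRUSTED_DOMAINS):
            if SequenceMatcher(None, domain, trusted).ratio() >= LOOKALIKE_RATIO:
                warnings.append(f"'{domain}' closely resembles '{trusted}'")

    # Domain age check, using a registration date obtained elsewhere (e.g. WHOIS).
    if registered_on is not None:
        age_days = ((today or date.today()) - registered_on).days
        if age_days < MIN_DOMAIN_AGE_DAYS:
            warnings.append(f"domain registered only {age_days} days ago")

    return warnings
```

For example, `phishing_warnings("http://wa1mart.com/deals", registered_on=date(2025, 1, 1), today=date(2025, 1, 10))` raises all three flags, while `phishing_warnings("https://www.walmart.com/")` returns an empty list. An empty list only means these particular heuristics found nothing, so it is no substitute for the manual checks or a security solution.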
